Transferring data to Windows Azure SQL Database from PostgreSQL

Good day!

In this article, I will show how easy it is to migrate (transfer data) from PostgreSQL to the Windows Azure SQL Database.

Basic steps:

To create a new database, go to the Windows Azure Control Panel ( https://manage.windowsazure.com/ ). Even if you do not have a subscription, you can use the trial period - $ 200 for one month, this is more than enough for various experiments.

Click the + New button in the bottom left and select Data Services> SQL Database> Quick Create . Fill in the required fields - database name, data center region and login / password to access the database:

')

After a moment, a message should appear that the database creation was successful:

By default, a database with a maximum size of 1 GB is created - after the upper limit is reached, the database switches to read-only mode. However, if necessary, the size can be increased up to 150GB.

Now you need to install the ODBC driver for postgresql. Download the latest version from the official site (download the 32-bit version, there are fewer problems with it). Attention! It is necessary to install the driver on the computer on which data will be copied later, and not on the database server.

Installation is simple and does not cause any problems - Next-Next-Next-Finish.

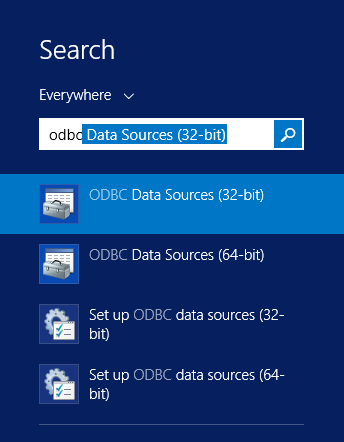

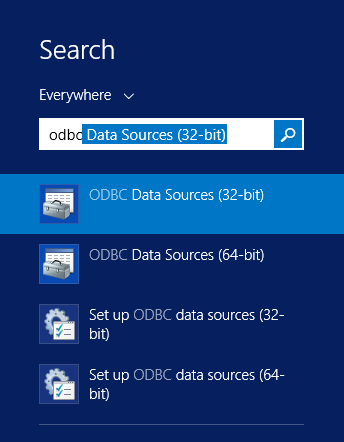

After that, you need to add ODBC Source for both PostgreSQL and SQL Database - just enter odbc on the start screen and select from the list ODBC Data Sources (32-bit)

In the window that appears, click Add ... , select PostgreSQL Unicode from the list and click Finish

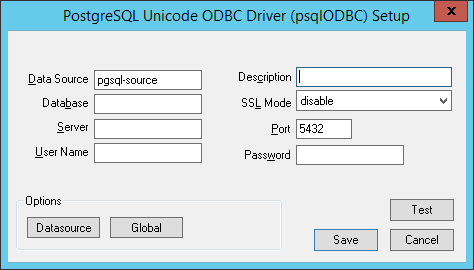

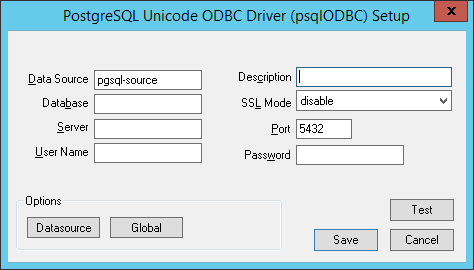

After that enter all necessary data:

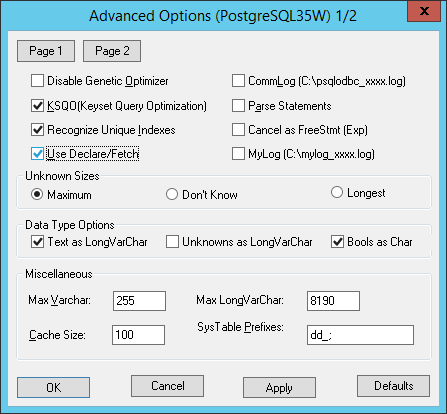

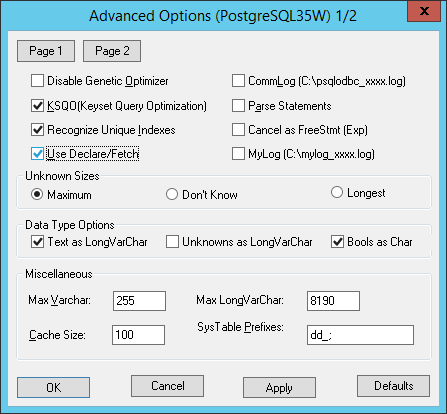

Now one of the moments for the sake of which this article was written - on this window, click the Datasource button and be sure to tick Use Userere / Fetch .

Without this, everything will work as long as there is little data, but it will crash with an Out of memory error on large volumes - so, I had this error on a table with approximately 60 million records.

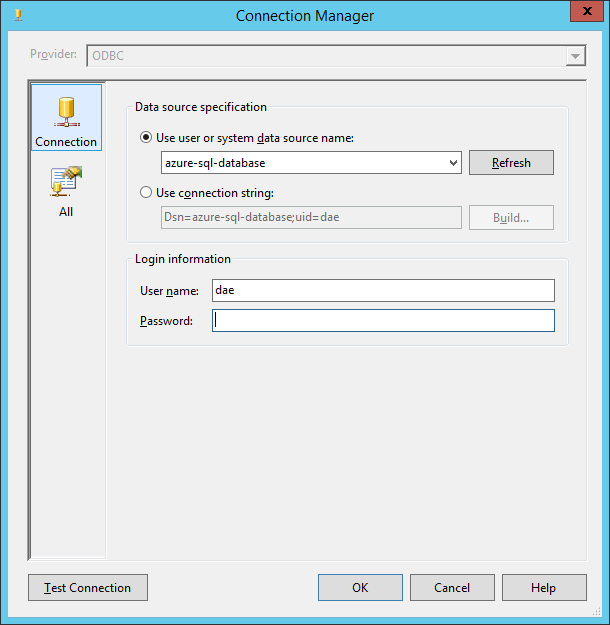

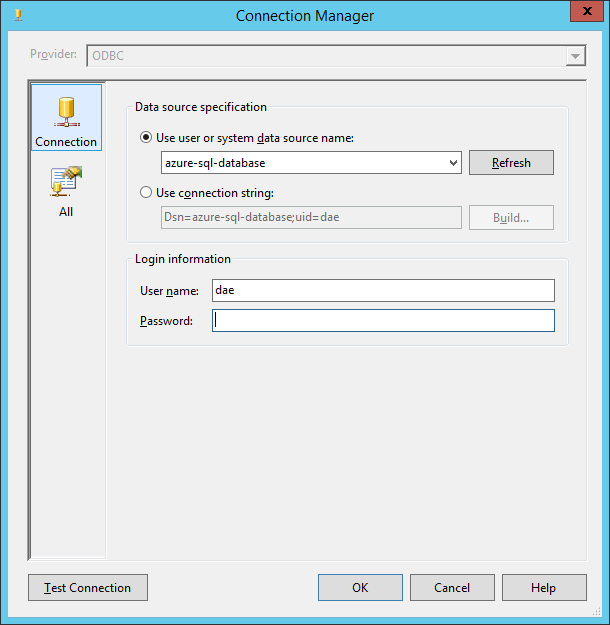

Now also create a Data Source for the end server in the cloud - select SQL Server Native Client in the list only, and you can see the connection data in the server control panel by clicking "View SQL Database connection strings for ADO .Net, ODBC, PHP, and JDBC " . And, as this window reminds us, we need to remember to allow connections to the database from this IP address - by default, the connection is possible only from IP addresses of Windows Azure services (and even if this option is enabled in the server settings)

The setup process is also not complicated, and after that you will have something like this:

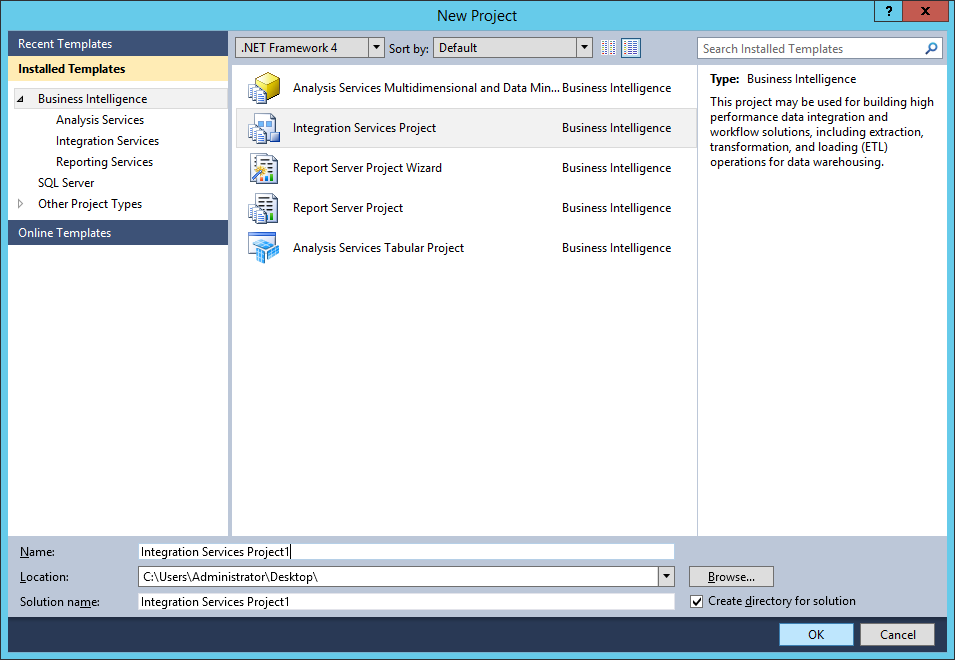

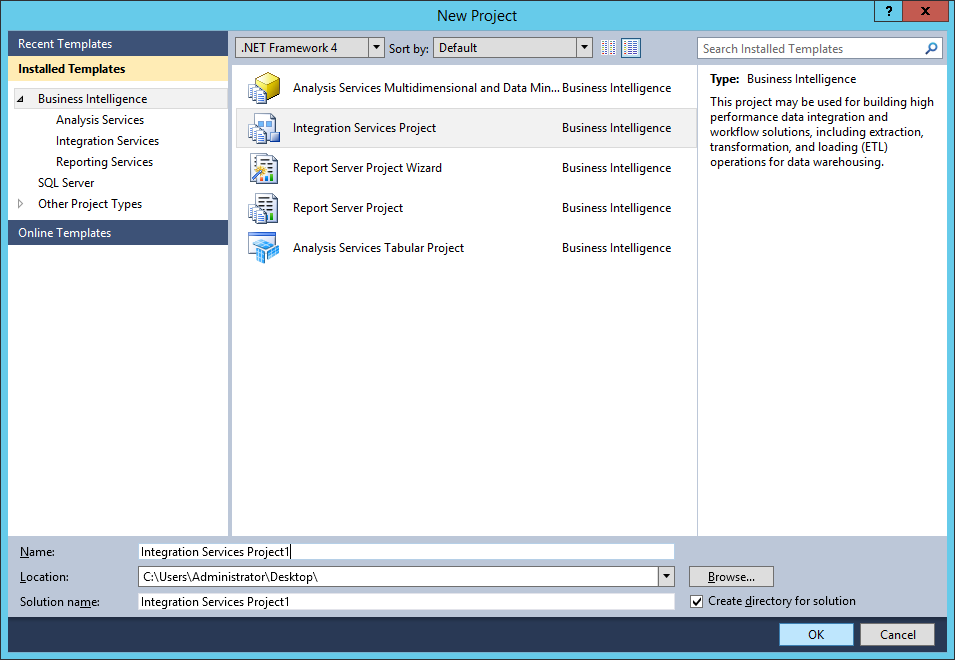

If the migration occurred from MySQL, then everything would be simpler - there is a wonderful tool SQL Server Migration Assistant (SSMA) , which supports, among other things, MySQL, and allows you to migrate very easily and quickly. But there is no such thing for PostgreSQL, so you will have to use the SQL Server Data Tool (which, by the way, is also not difficult). Start the SQL Server Data Tool, click New Project and create a project based on the Integration Service Project template.

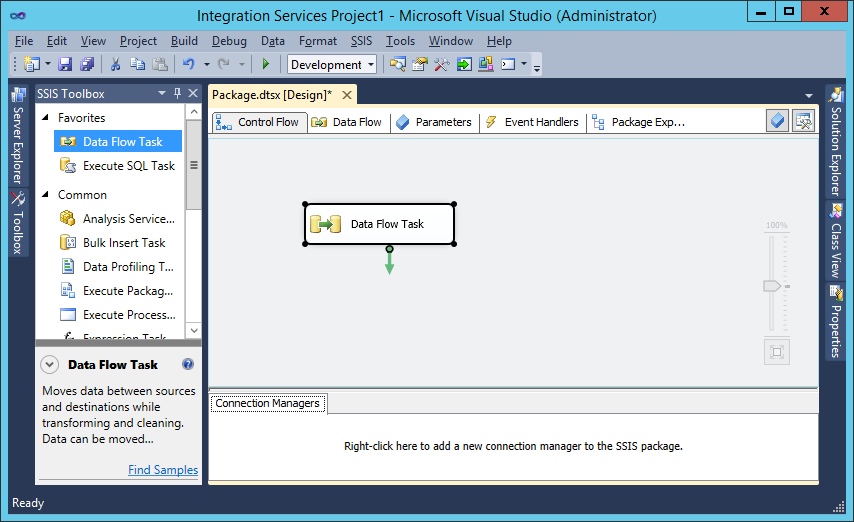

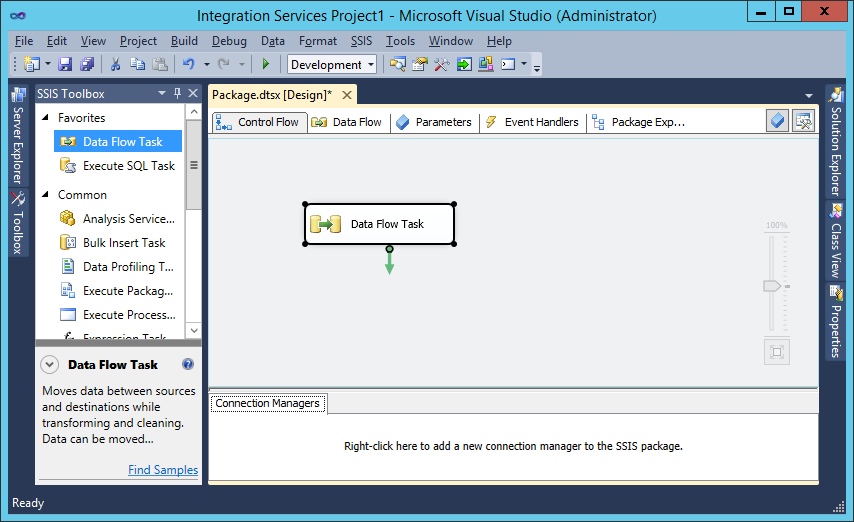

Drag Data Flow Task from Toolbox, double click on it - you will go to the Data Flow tab.

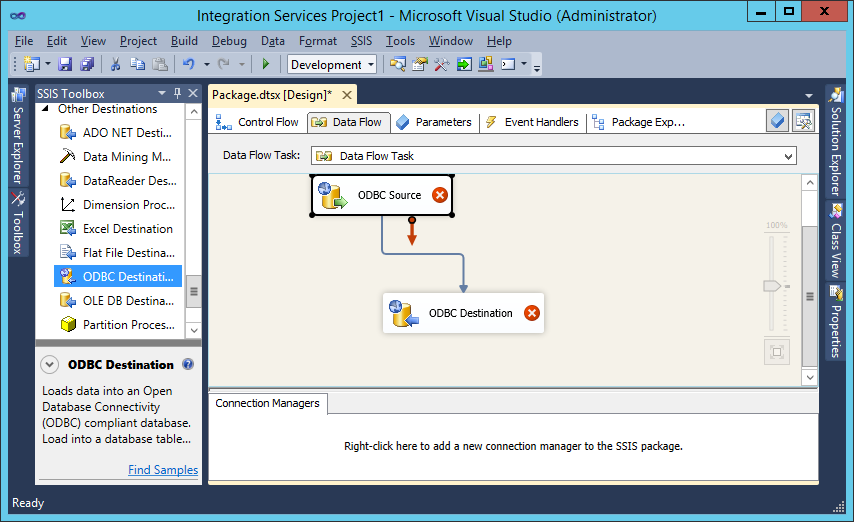

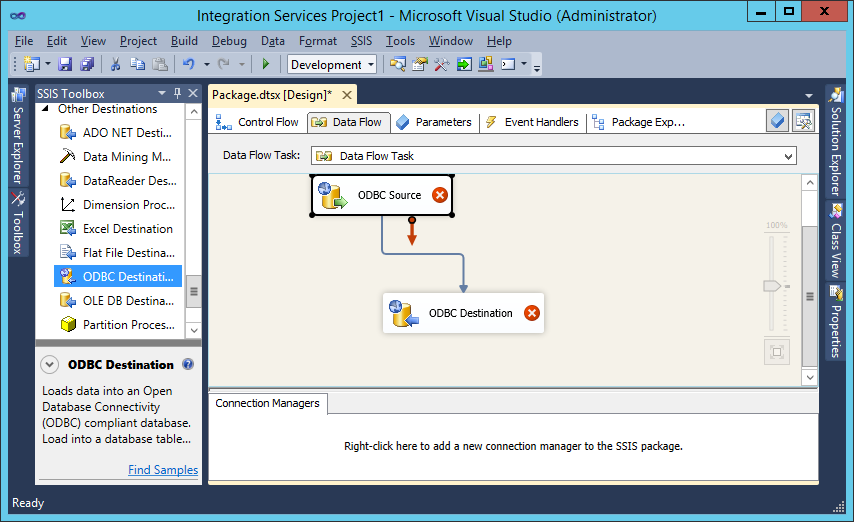

Drag and drop ODBC Data Source and ODBC Destination onto the workspace and connect them with the blue arrow coming from ODBC Source:

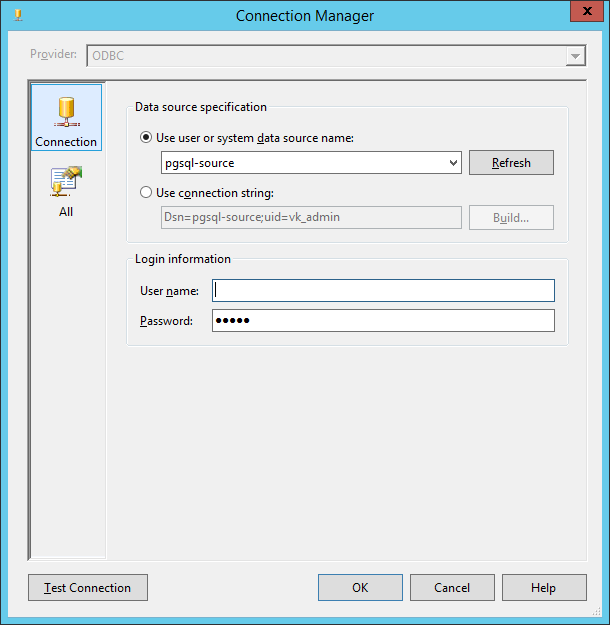

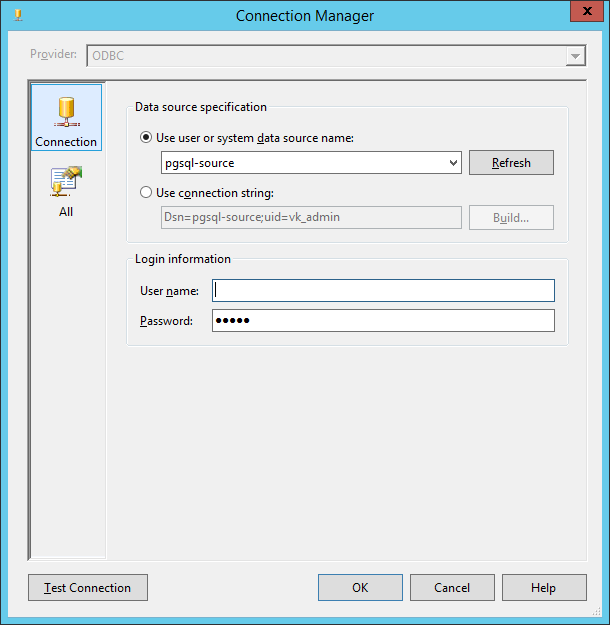

Double-click on ODBC Source, click New in the appeared window, then New again and select our data source:

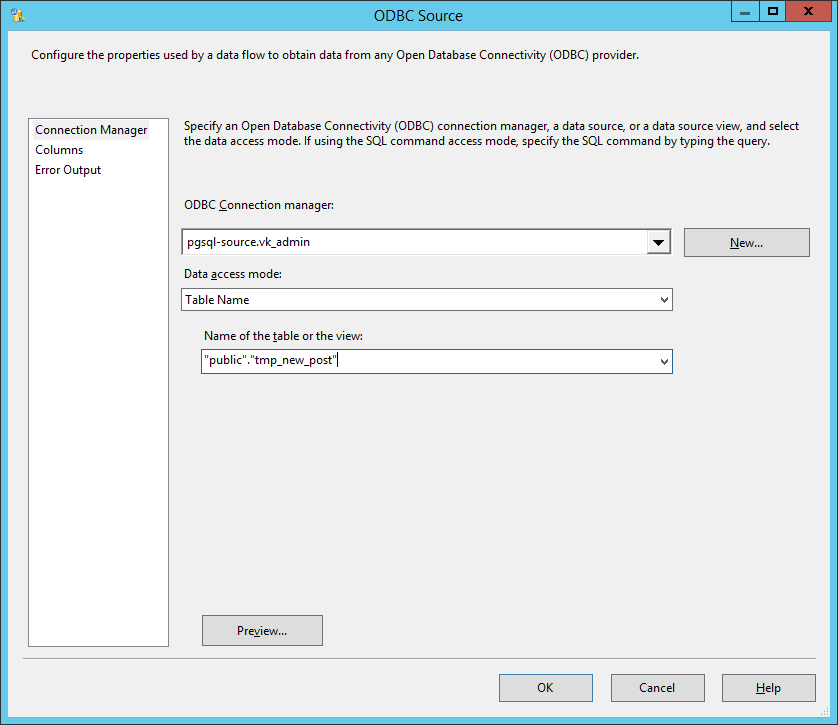

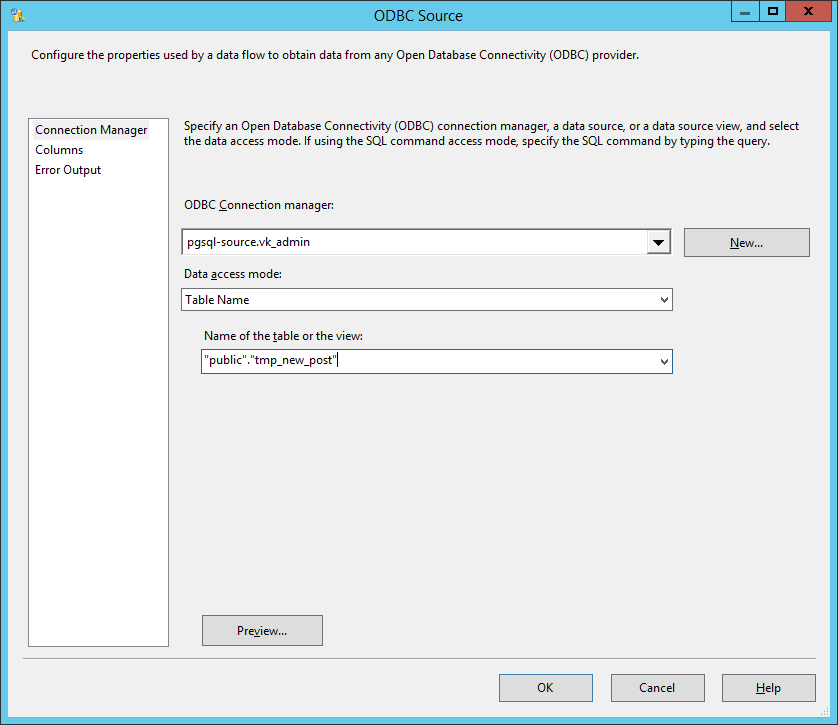

Double-click Ok to close these windows and select the table from which data will be imported:

Now you need to configure the data receiver in the same way - ODBC Destination

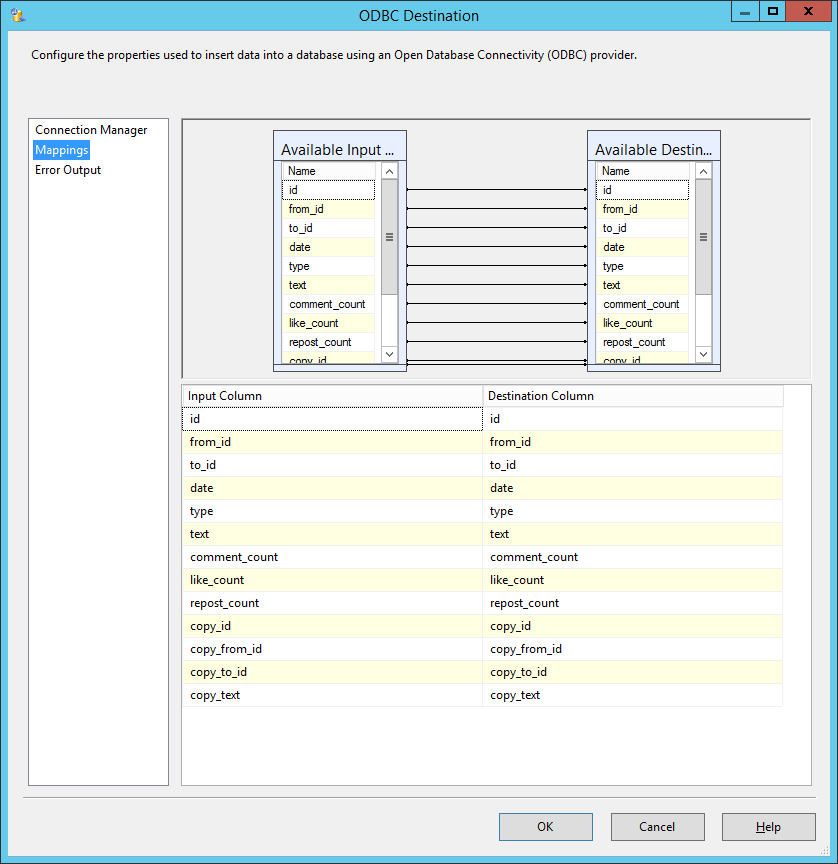

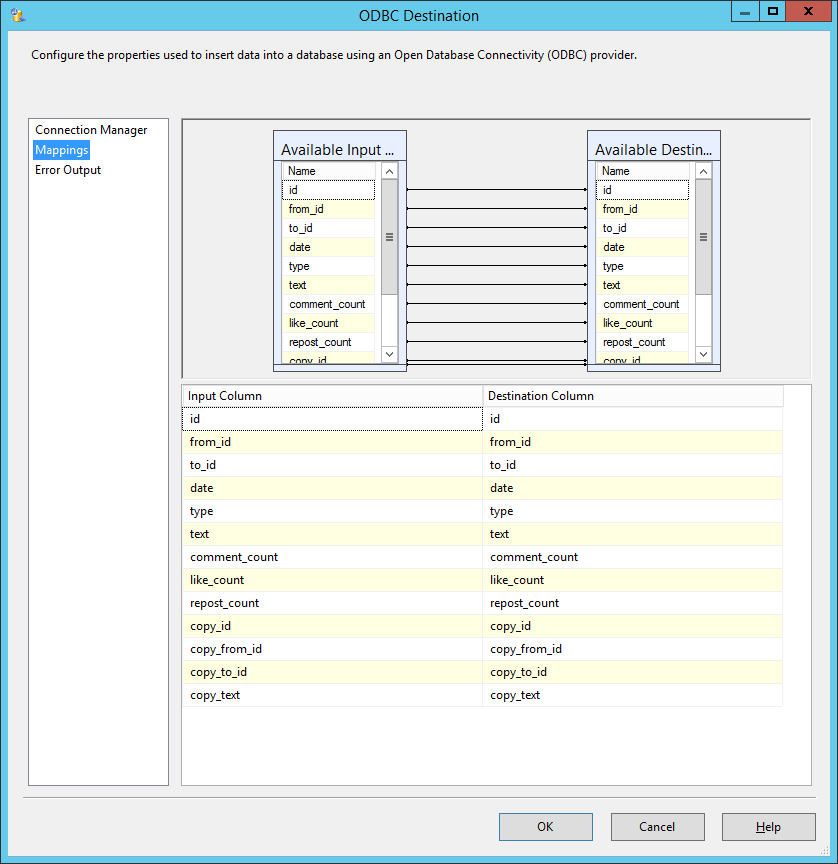

You can also customize the matching columns, if necessary:

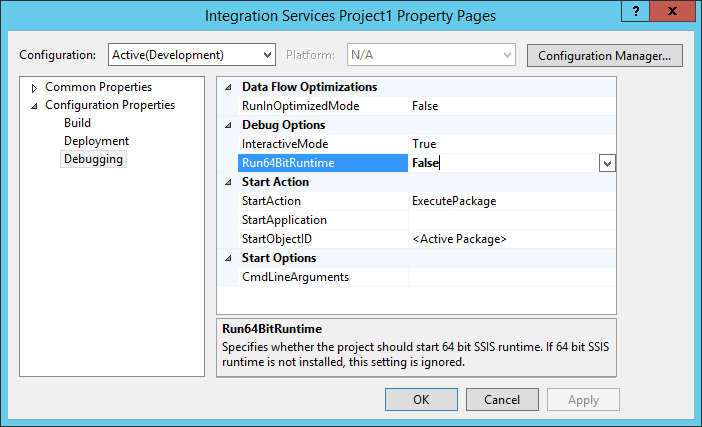

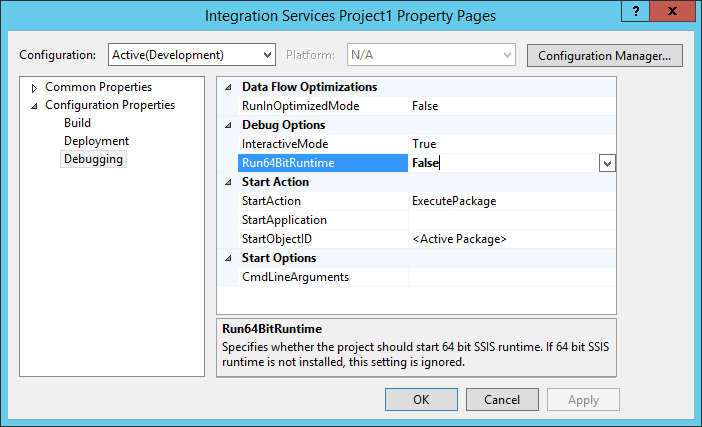

Now one more important point - you should switch the launch of the project to 32-bit mode. To do this, right-click on the project in Solution Explorer, select Properties , and in the Configuration Properties -> Debugging section, set Run64BitRuntime to False .

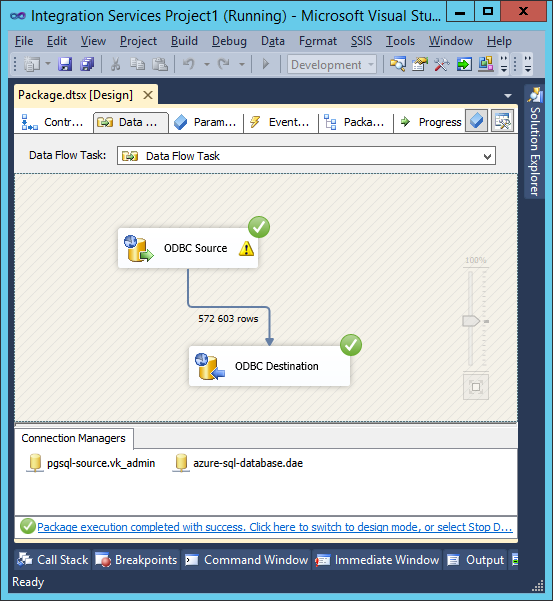

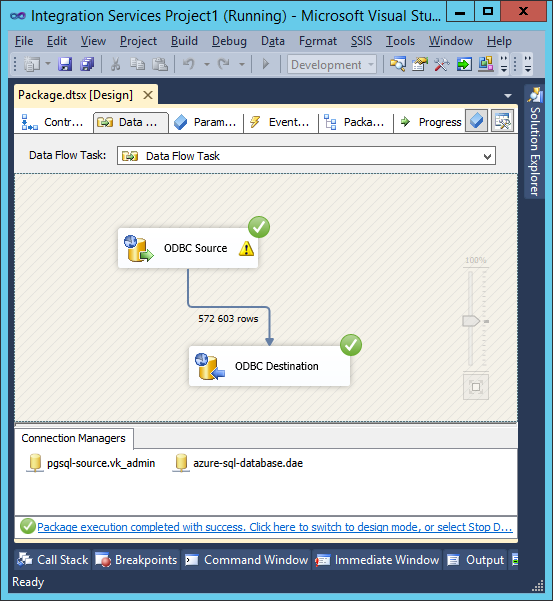

Now everything is ready for data transfer! Press F5 or the “Start Debugging” button on the toolbar. Indicators appear in the work area indicating that data is being transferred:

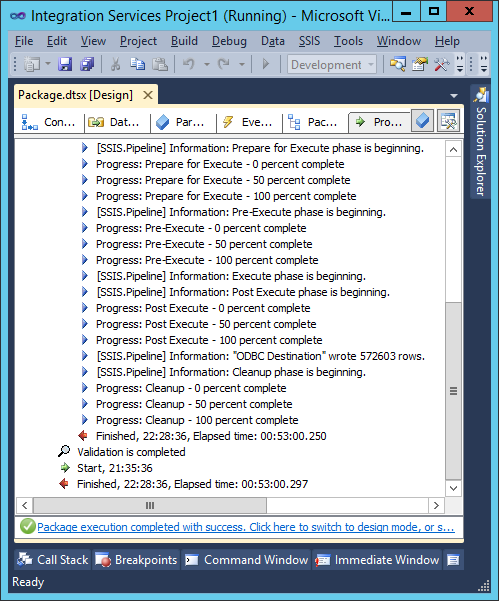

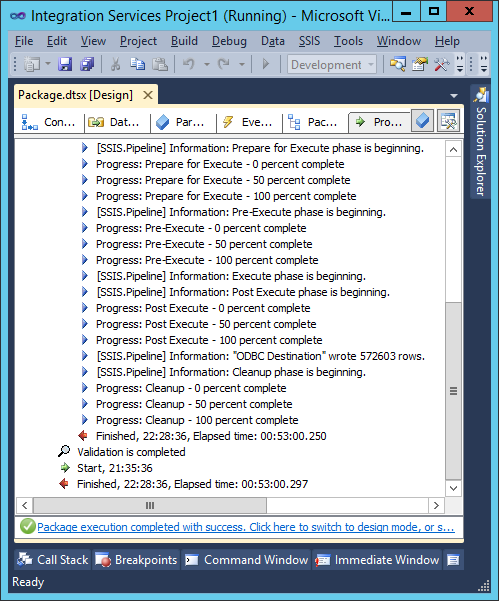

And in the Progress panel you can see a text report:

Making sure that everything goes according to plan, you can go to drink coffee / tea - this is quite a long process (in my opinion, SSMA worked faster). After the process is completed, the source and receiver of the data will be green ticks:

And in the Progress window - a text report:

As you can see, 570K lines were copied in 50 minutes. You can connect to the cloud database and make sure that the data is really there:

This is how you can easily and quickly transfer a large amount of data from PostgreSQL to the Windows Azure SQL Database.

In this article, I will show how easy it is to migrate (transfer data) from PostgreSQL to the Windows Azure SQL Database.

Basic steps:

- Creating a new database in the Windows Azure Control Panel

- Installing the ODBC driver for PostgreSQL

- Creating a project in SQL Server Data Tool

- Data import

Creating a new database in the Windows Azure Control Panel

To create a new database, go to the Windows Azure Control Panel ( https://manage.windowsazure.com/ ). Even if you do not have a subscription, you can use the trial period - $ 200 for one month, this is more than enough for various experiments.

Click the + New button in the bottom left and select Data Services> SQL Database> Quick Create . Fill in the required fields - database name, data center region and login / password to access the database:

')

After a moment, a message should appear that the database creation was successful:

By default, a database with a maximum size of 1 GB is created - after the upper limit is reached, the database switches to read-only mode. However, if necessary, the size can be increased up to 150GB.

Installing the ODBC driver for PostgreSQL

Now you need to install the ODBC driver for postgresql. Download the latest version from the official site (download the 32-bit version, there are fewer problems with it). Attention! It is necessary to install the driver on the computer on which data will be copied later, and not on the database server.

Installation is simple and does not cause any problems - Next-Next-Next-Finish.

After that, you need to add ODBC Source for both PostgreSQL and SQL Database - just enter odbc on the start screen and select from the list ODBC Data Sources (32-bit)

In the window that appears, click Add ... , select PostgreSQL Unicode from the list and click Finish

After that enter all necessary data:

Now one of the moments for the sake of which this article was written - on this window, click the Datasource button and be sure to tick Use Userere / Fetch .

Without this, everything will work as long as there is little data, but it will crash with an Out of memory error on large volumes - so, I had this error on a table with approximately 60 million records.

Now also create a Data Source for the end server in the cloud - select SQL Server Native Client in the list only, and you can see the connection data in the server control panel by clicking "View SQL Database connection strings for ADO .Net, ODBC, PHP, and JDBC " . And, as this window reminds us, we need to remember to allow connections to the database from this IP address - by default, the connection is possible only from IP addresses of Windows Azure services (and even if this option is enabled in the server settings)

The setup process is also not complicated, and after that you will have something like this:

Creating a project in SQL Server Data Tool

If the migration occurred from MySQL, then everything would be simpler - there is a wonderful tool SQL Server Migration Assistant (SSMA) , which supports, among other things, MySQL, and allows you to migrate very easily and quickly. But there is no such thing for PostgreSQL, so you will have to use the SQL Server Data Tool (which, by the way, is also not difficult). Start the SQL Server Data Tool, click New Project and create a project based on the Integration Service Project template.

Drag Data Flow Task from Toolbox, double click on it - you will go to the Data Flow tab.

Drag and drop ODBC Data Source and ODBC Destination onto the workspace and connect them with the blue arrow coming from ODBC Source:

Double-click on ODBC Source, click New in the appeared window, then New again and select our data source:

Double-click Ok to close these windows and select the table from which data will be imported:

Now you need to configure the data receiver in the same way - ODBC Destination

You can also customize the matching columns, if necessary:

Now one more important point - you should switch the launch of the project to 32-bit mode. To do this, right-click on the project in Solution Explorer, select Properties , and in the Configuration Properties -> Debugging section, set Run64BitRuntime to False .

Data import

Now everything is ready for data transfer! Press F5 or the “Start Debugging” button on the toolbar. Indicators appear in the work area indicating that data is being transferred:

And in the Progress panel you can see a text report:

Making sure that everything goes according to plan, you can go to drink coffee / tea - this is quite a long process (in my opinion, SSMA worked faster). After the process is completed, the source and receiver of the data will be green ticks:

And in the Progress window - a text report:

As you can see, 570K lines were copied in 50 minutes. You can connect to the cloud database and make sure that the data is really there:

This is how you can easily and quickly transfer a large amount of data from PostgreSQL to the Windows Azure SQL Database.

Links

Source: https://habr.com/ru/post/212249/

All Articles