Artificial Intelligence for Programmers

How is it that artificial intelligence is developing successfully, yet there is still no “correct” definition of it? Why did the hopes placed on neurocomputers not come true, and what are the three main tasks facing the creator of an artificial intelligence system?

You will find the answers to these and other questions in the article below, based on a talk by Konstantin Anisimovich, director of the technology development department at ABBYY and one of the country's leading experts in artificial intelligence.

He personally took part in creating the document recognition technologies used in ABBYY FineReader and ABBYY FormReader. Konstantin spoke about the history and fundamentals of AI development at one of the master classes for students at Technopark Mail.Ru. The material of that master class became the basis for a series of articles.

The series consists of three posts:

• Artificial intelligence for programmers

• Application of knowledge: state space search algorithms

• Knowledge acquisition: knowledge engineering and machine learning

The ups and downs of approaches in AI

In the 1950s, two approaches to creating artificial intelligence emerged: symbolic computation and connectionism. Symbolic computation is based on modeling human thinking, while connectionism is based on modeling the structure of the brain.


The first achievements in symbolic computation were the Lisp language, created in the 1950s, and J. A. Robinson's work on logical inference. In connectionism, the first milestone was the perceptron, a self-learning linear classifier that models the work of a neuron. Later notable achievements came mainly within the symbolic paradigm: in particular, the work of Seymour Papert and Robert Anton Winson on the psychology of perception and, of course, Marvin Minsky's frames.
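To make "self-learning linear classifier" concrete, here is a minimal sketch of the classic perceptron learning rule: a formal neuron computes a weighted sum and applies a threshold, and its weights are nudged toward every misclassified example. The training data (logical AND) and the names `predict` and `train_perceptron` are assumptions for illustration, not something from the original talk.

```python
from typing import List, Sequence, Tuple

def predict(weights: Sequence[float], bias: float, x: Sequence[float]) -> int:
    # A formal neuron: weighted sum of the inputs followed by a hard threshold.
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation > 0 else 0

def train_perceptron(samples: List[Tuple[List[float], int]],
                     epochs: int = 20, lr: float = 1.0) -> Tuple[List[float], float]:
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        errors = 0
        for x, target in samples:
            error = target - predict(weights, bias, x)  # -1, 0 or +1
            if error:
                errors += 1
                # Perceptron rule: shift the decision boundary toward the misclassified point.
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        if errors == 0:  # every sample classified correctly: stop early
            break
    return weights, bias

# Hypothetical training set: logical AND.
AND_DATA = [([0.0, 0.0], 0), ([0.0, 1.0], 0), ([1.0, 0.0], 0), ([1.0, 1.0], 1)]
w, b = train_perceptron(AND_DATA)
print([predict(w, b, x) for x, _ in AND_DATA])  # [0, 0, 0, 1]
```

Because AND is linearly separable, the perceptron convergence theorem guarantees the rule stops making mistakes after finitely many updates. A single perceptron cannot learn XOR, which is not linearly separable; this limitation is analyzed in Minsky and Papert's Perceptrons, listed in the bibliography.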

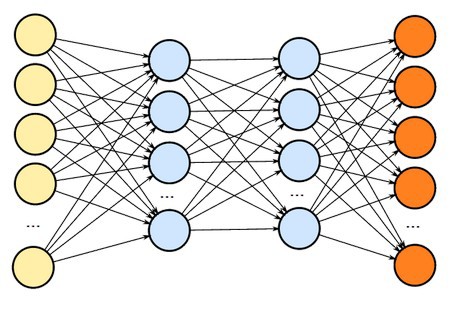

In the 1970s, the first applied systems using elements of artificial intelligence appeared: expert systems. Then came a renaissance of connectionism with the advent of multilayer neural networks and the backpropagation algorithm for training them. In the 1980s, enthusiasm for neural networks was nearly universal. Proponents of this approach promised to build neurocomputers that would work almost like the human brain.
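Backpropagation computes the gradient of the error with respect to every weight by applying the chain rule layer by layer, from the output back toward the input. Below is a minimal sketch for a hypothetical network with one hidden layer of sigmoid units, a single sigmoid output and a squared-error loss; the sizes, the weight values and the names `TinyNet`, `sgd_step` and `numeric_grad_w1` are assumptions for illustration. A finite-difference check is included to confirm the analytic gradients.

```python
import math
from typing import List, Tuple

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

class TinyNet:
    """Hypothetical network: inputs -> one hidden layer of sigmoid units -> one sigmoid output."""

    def __init__(self, w1: List[List[float]], b1: List[float], w2: List[float], b2: float):
        self.w1, self.b1, self.w2, self.b2 = w1, b1, w2, b2

    def forward(self, x: List[float]) -> Tuple[List[float], float]:
        hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
                  for row, b in zip(self.w1, self.b1)]
        out = sigmoid(sum(w * h for w, h in zip(self.w2, hidden)) + self.b2)
        return hidden, out

    def loss(self, x: List[float], target: float) -> float:
        # Squared error for a single training example.
        _, out = self.forward(x)
        return 0.5 * (out - target) ** 2

    def backprop(self, x: List[float], target: float):
        hidden, out = self.forward(x)
        # Output error signal: dLoss/dout times the sigmoid derivative out * (1 - out).
        delta_out = (out - target) * out * (1.0 - out)
        grad_w2 = [delta_out * h for h in hidden]
        grad_b2 = delta_out
        # Chain rule: send the error back through each hidden-to-output weight.
        delta_hidden = [delta_out * w * h * (1.0 - h) for w, h in zip(self.w2, hidden)]
        grad_w1 = [[d * xi for xi in x] for d in delta_hidden]
        grad_b1 = list(delta_hidden)
        return grad_w1, grad_b1, grad_w2, grad_b2

def sgd_step(net: TinyNet, x: List[float], target: float, lr: float = 0.1) -> None:
    # One step of gradient descent using the backpropagated gradients.
    g_w1, g_b1, g_w2, g_b2 = net.backprop(x, target)
    net.w1 = [[w - lr * g for w, g in zip(row, g_row)] for row, g_row in zip(net.w1, g_w1)]
    net.b1 = [b - lr * g for b, g in zip(net.b1, g_b1)]
    net.w2 = [w - lr * g for w, g in zip(net.w2, g_w2)]
    net.b2 -= lr * g_b2

def numeric_grad_w1(net: TinyNet, x: List[float], target: float,
                    j: int, i: int, eps: float = 1e-6) -> float:
    # Central finite difference, used only to check the analytic gradient.
    original = net.w1[j][i]
    net.w1[j][i] = original + eps
    plus = net.loss(x, target)
    net.w1[j][i] = original - eps
    minus = net.loss(x, target)
    net.w1[j][i] = original
    return (plus - minus) / (2 * eps)

net = TinyNet(w1=[[0.5, -0.4], [0.3, 0.8]], b1=[0.1, -0.2], w2=[0.7, -0.6], b2=0.05)
x, t = [1.0, 0.5], 1.0
g_w1, _, _, _ = net.backprop(x, t)
print(g_w1[1][0], numeric_grad_w1(net, x, t, 1, 0))  # analytic vs numerical: should agree closely
```

Training a real multilayer network is just `sgd_step` repeated over many examples; the hidden layer is what lets such a network separate classes that a single perceptron cannot.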

Little came of it, however, because real neurons are much more complex than the formal neurons on which multilayer neural networks are based. The human brain also contains far more neurons than a neural network could practically afford. What multilayer neural networks turned out to be good for, above all, was solving classification problems.

The next popular paradigm in artificial intelligence was machine learning. It began to flourish in the late 1980s and remains popular to this day. Its development received a significant boost from the Internet and the large amount of diverse, easily accessible data that can be used to train algorithms.

The main tasks in the design of artificial intelligence

If you analyze what the tasks related to artificial intelligence have in common, it is easy to see that none of them has a well-known, clearly defined solution procedure. This is precisely what distinguishes AI tasks from those of compiler theory or computational mathematics. Intelligent systems look for suboptimal solutions: you can neither prove nor guarantee that the solution found by an artificial intelligence is strictly optimal. However, in most practical problems suboptimal solutions are good enough. Moreover, remember that people almost never solve problems optimally either.
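As an illustration of "suboptimal but good enough", here is a sketch comparing a greedy nearest-neighbor heuristic with exhaustive search on a tiny traveling-salesman instance. The city coordinates and function names are hypothetical. The heuristic runs in polynomial time but carries no optimality guarantee, while exhaustive search is exact but examines (n-1)! tours, which quickly becomes infeasible.

```python
import itertools
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]

def tour_length(points: Sequence[Point], order: Sequence[int]) -> float:
    # Closed tour: the last city connects back to the first.
    n = len(order)
    return sum(math.dist(points[order[k]], points[order[(k + 1) % n]]) for k in range(n))

def nearest_neighbor_tour(points: Sequence[Point]) -> List[int]:
    # Greedy heuristic: always move to the closest unvisited city. O(n^2), no guarantee.
    order = [0]
    unvisited = set(range(1, len(points)))
    while unvisited:
        last = points[order[-1]]
        nxt = min(unvisited, key=lambda i: math.dist(last, points[i]))
        order.append(nxt)
        unvisited.remove(nxt)
    return order

def optimal_tour(points: Sequence[Point]) -> List[int]:
    # Exhaustive search over all tours starting at city 0: exact, but (n-1)! candidates.
    best = min(itertools.permutations(range(1, len(points))),
               key=lambda p: tour_length(points, (0,) + p))
    return [0, *best]

cities = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (2.0, 1.0), (0.0, 1.0), (0.5, 3.0)]
greedy = tour_length(cities, nearest_neighbor_tour(cities))
best = tour_length(cities, optimal_tour(cities))
print(f"greedy={greedy:.3f} optimal={best:.3f}")
```

On some inputs the two tours coincide and on others the greedy tour is longer; the point is that the heuristic alone cannot tell you which case you are in.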

A very important question arises: how can AI solve a problem for which there is no solution algorithm? The idea is to do it the same way a person does: by putting forward and testing plausible hypotheses. Naturally, putting forward and testing hypotheses requires knowledge.
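The "put forward and test hypotheses" idea can be sketched as a generate-and-test loop: knowledge supplies candidate hypotheses and ranks them by plausibility, and a test accepts or rejects each one in turn. In this minimal sketch, which is an assumption for illustration, a recognizer has read a word as `c?t` (one unreadable character), the knowledge is a tiny hypothetical lexicon with word frequencies, and plausibility is simply frequency.

```python
from typing import Callable, Iterable, Optional, TypeVar

T = TypeVar("T")

def generate_and_test(hypotheses: Iterable[T],
                      plausibility: Callable[[T], float],
                      test: Callable[[T], bool]) -> Optional[T]:
    # Try the most plausible hypotheses first; accept the first one that passes the test.
    for hypothesis in sorted(hypotheses, key=plausibility, reverse=True):
        if test(hypothesis):
            return hypothesis
    return None

# Hypothetical domain knowledge: a tiny lexicon with word frequencies.
LEXICON = {"cat": 120, "cut": 80, "cot": 15, "car": 200, "cap": 40}

def matches(pattern: str) -> Callable[[str], bool]:
    # '?' marks a character the recognizer could not read.
    def test(word: str) -> bool:
        return len(word) == len(pattern) and all(p in ("?", w) for p, w in zip(pattern, word))
    return test

best = generate_and_test(LEXICON, LEXICON.get, matches("c?t"))
print(best)  # cat
```

"car" is the most plausible hypothesis but fails the test, so the next one, "cat", is accepted. Better knowledge (context, language properties) would sharpen both the ranking and the test.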

Knowledge is a description of the subject area in which the intelligent system operates. For a system that recognizes the characters of a natural language, knowledge includes descriptions of the structure of characters, the structure of text, and certain properties of the language. A client credit-rating system must have knowledge of client types and of how a client's profile relates to the risk of insolvency. There are two types of knowledge: knowledge about the subject area and knowledge about finding solutions (meta-knowledge).

The main tasks in designing an intelligent system therefore come down to choosing how to represent knowledge, how to acquire it, and how to apply it.

Knowledge representation

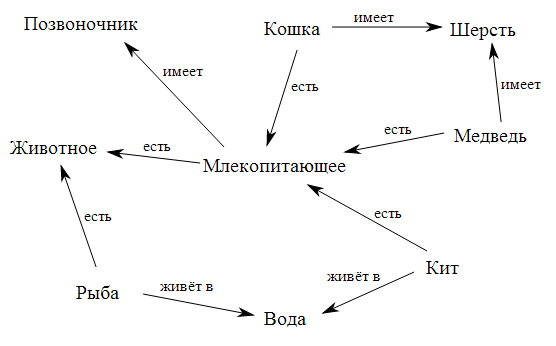

There are two basic ways to represent knowledge: declarative and procedural. Declarative knowledge can be represented in structured or unstructured form. Structured representations are variants of the frame approach, including semantic networks and formal grammars, which can also be considered variations of frames. In these formalisms, knowledge is represented as a set of objects and the relations between them.
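Here is a minimal sketch of knowledge stored as "a set of objects and the relations between them", in the form of a semantic network where each fact is a (subject, relation, object) triple. The document-domain facts and the relation names `is_a`, `has_part` and `contains` are hypothetical examples.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

Triple = Tuple[str, str, str]

class SemanticNetwork:
    """Objects are nodes; relations are labeled edges (subject, relation, object)."""

    def __init__(self, triples: List[Triple]):
        self.edges: Dict[Tuple[str, str], Set[str]] = defaultdict(set)
        for subj, rel, obj in triples:
            self.edges[(subj, rel)].add(obj)

    def related(self, subj: str, rel: str) -> Set[str]:
        # Objects directly linked to `subj` by relation `rel`.
        return set(self.edges.get((subj, rel), set()))

    def is_a(self, subj: str, category: str) -> bool:
        # Follow "is_a" links transitively (depth-first), guarding against cycles.
        stack, seen = [subj], set()
        while stack:
            node = stack.pop()
            if node == category:
                return True
            if node not in seen:
                seen.add(node)
                stack.extend(self.edges.get((node, "is_a"), ()))
        return False

net = SemanticNetwork([
    ("invoice", "is_a", "business_document"),
    ("business_document", "is_a", "document"),
    ("document", "has_part", "header"),
    ("invoice", "has_part", "total_amount"),
    ("header", "contains", "date"),
])
print(net.is_a("invoice", "document"), net.related("invoice", "has_part"))  # True {'total_amount'}
```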

Unstructured representations are usually used in areas related to solving classification problems. They typically take the form of weight vectors, probabilities, and the like.
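By contrast, in an unstructured representation the knowledge is just numbers. In this sketch, a hypothetical character classifier stores one weight vector and bias per class; the three binary features and all weight values are invented for illustration (in a real system they would be learned). Nothing in the vectors reads as objects or relations; the knowledge shows up only through the scores it produces.

```python
from typing import Dict, Sequence

# Hypothetical "learned" knowledge: one weight vector and bias per character class.
# Assumed feature order: [has_closed_loop, has_vertical_stroke, has_horizontal_bar]
WEIGHTS: Dict[str, Sequence[float]] = {
    "O": [2.0, -1.0, -1.0],
    "I": [-1.5, 2.0, -0.5],
    "T": [-1.0, 1.0, 2.0],
}
BIASES: Dict[str, float] = {"O": 0.0, "I": 0.0, "T": -0.5}

def classify(features: Sequence[float]) -> str:
    # Linear score per class; the class with the highest score wins.
    def score(label: str) -> float:
        return sum(w * f for w, f in zip(WEIGHTS[label], features)) + BIASES[label]
    return max(WEIGHTS, key=score)

print(classify([1.0, 0.0, 0.0]))  # O
print(classify([0.0, 1.0, 1.0]))  # T
```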

Virtually all methods of structured knowledge representation are based on the formalism of frames, which Marvin Minsky of MIT introduced in the 1970s to describe the structure of knowledge for the perception of spatial scenes. As it turned out, this approach is suitable for almost any task.

A frame consists of a name and individual units called slots. The value of a slot can in turn be a link to another frame... A frame can be a descendant of another frame and inherit its slot values. The child can override the values of the ancestor's slots and add new ones. Inheritance makes the description more compact and avoids duplication.
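The frame mechanics just described (named slots, slot values that point to other frames, inheritance with overriding) fit in a few lines. This is a minimal sketch; the `Frame` class and the client/student frames are hypothetical, loosely echoing the credit-rating example earlier.

```python
from typing import Any, Dict, Optional

class Frame:
    """A named frame with slots; slots missing locally are inherited from the parent frame."""

    def __init__(self, frame_name: str, parent: Optional["Frame"] = None, **slots: Any):
        self.frame_name = frame_name
        self.parent = parent
        self.slots: Dict[str, Any] = dict(slots)

    def get(self, slot: str) -> Any:
        # Look the slot up locally first, then walk up the inheritance chain.
        frame: Optional[Frame] = self
        while frame is not None:
            if slot in frame.slots:
                return frame.slots[slot]
            frame = frame.parent
        raise KeyError(f"{self.frame_name} has no slot {slot!r}")

address = Frame("moscow_address", city="Moscow")
client = Frame("client", risk="medium", has_account=True)
student = Frame("student", parent=client, risk="high")  # overrides an inherited slot
alice = Frame("alice", parent=student, address=address)  # slot value is another frame

print(alice.get("risk"), alice.get("has_account"), alice.get("address").get("city"))
# high True Moscow
```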

It is easy to see the similarity between frames and object-oriented programming, where a frame corresponds to an object and a slot to a field. The similarity is not accidental: frames were one of the sources of OOP. In particular, Smalltalk, one of the first object-oriented languages, implemented the frame representation of objects and classes almost exactly.
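The correspondence with OOP becomes visible if a small frame hierarchy is restated as Python classes: class attributes stand in for slots, and attribute lookup walks up the inheritance chain much as slot lookup does in frames. A minimal sketch; the class names and attributes are hypothetical.

```python
class Client:
    # Class attributes play the role of slot values shared by every client.
    risk = "medium"
    has_account = True

class Student(Client):
    # Like a child frame: overrides an inherited slot and adds a new one.
    risk = "high"
    income = "low"

alice = Student()
print(alice.risk, alice.has_account, alice.income)  # high True low
```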

Procedural knowledge is represented with productions, or production rules. A production model is a rule-based model in which knowledge is expressed as “condition-action” statements. This approach used to be popular in diagnostic systems: it is natural to describe symptoms, problems, or malfunctions as the condition, and the possible fault that causes these symptoms as the action.
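A minimal sketch of a production system in the diagnostic style just described: each rule pairs a set of observed facts (the condition) with a conclusion about the fault (the action, here simply "add a new fact"), and a forward-chaining loop fires rules until nothing new can be derived. The car-diagnostics rules and fact names are hypothetical, and real production systems add conflict-resolution strategies that this loop omits.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Set

@dataclass(frozen=True)
class Rule:
    name: str
    conditions: FrozenSet[str]  # facts that must all be known ("condition")
    conclusion: str             # fact added when the rule fires ("action")

def forward_chain(facts: Set[str], rules: List[Rule]) -> Set[str]:
    # Keep firing rules whose conditions hold until no rule adds a new fact.
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for rule in rules:
            if rule.conditions <= known and rule.conclusion not in known:
                known.add(rule.conclusion)
                changed = True
    return known

# Hypothetical car-diagnostics knowledge base.
RULES = [
    Rule("r1", frozenset({"engine_does_not_crank", "lights_dim"}), "battery_weak"),
    Rule("r2", frozenset({"battery_weak", "battery_old"}), "replace_battery"),
    Rule("r3", frozenset({"engine_cranks", "no_fuel_smell"}), "fuel_supply_fault"),
]

observed = {"engine_does_not_crank", "lights_dim", "battery_old"}
derived = forward_chain(observed, RULES)
print(sorted(derived - observed))  # ['battery_weak', 'replace_battery']
```

Note that r2 fires only because r1 first derived `battery_weak`: chaining rules is what lets a small rule base reach conclusions that no single rule states.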

In the next article we will talk about how to apply knowledge.

Bibliography

- John Alan Robinson. A Machine-Oriented Logic Based on the Resolution Principle. Journal of the ACM, 12(1): 23-41, 1965.

- Marvin Minsky, Seymour Papert. Perceptrons. MIT Press, 1969.

- Marvin Minsky. Symbolic vs. Connectionist. 1990.

- Marvin Minsky. A Framework for Representing Knowledge. MIT AI Laboratory Memo 306, June 1974.

- Stuart Russell, Peter Norvig. Artificial Intelligence: A Modern Approach.

- Simon Haykin. Neural Networks: A Comprehensive Foundation.

- Nils J. Nilsson. Artificial Intelligence: A New Synthesis.

Source: https://habr.com/ru/post/211707/
