Intel Quark. Better late than never

The release of the new Intel Quark SoC and the first systems based on it made my heart ache with memories. Every systems engineer has a favorite project, even a brainchild, that was conceived but, for various reasons, was never destined to see the light of day.

I want to tell a little about a similar project of mine and speculate about what would have happened if technologies like today's Quark had been available to me back then. I would also like to ask the Habr community: what could you build, choosing from today's systems? The comments on this post are also the most suitable place for an ARM vs. x86 holy war, since Intel is entering dangerous territory where controllers with RISC cores from ARM and Atmel have long ruled. But in that case, please compare not just hardware (megahertz, kilobytes and milliwatts), but consider it together with the software ecosystem and in the context of specific controller applications.

So, ten years ago, I worked in a small


I worked there for a little more than three years, both as a system developer and as an application programmer, bringing experience from my “hardware” past and learning a lot from my programmer colleagues. The company's R&D consisted of a system developers department (6-7 people doing electronics design and low-level programming, mainly of controllers and signal processors), an application programmers department (10-12 people writing GUIs for Windows workstations, implementing data processing algorithms and building the user-side infrastructure), and the brain of the company, the algorithms department (3-4 people working with scientists and clinicians and writing algorithms in mathematical simulation packages; they were not programmers at all).

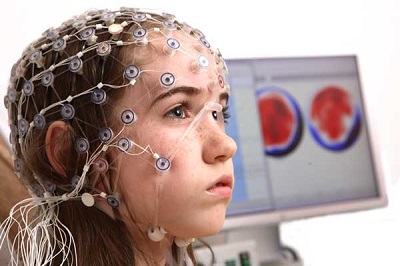

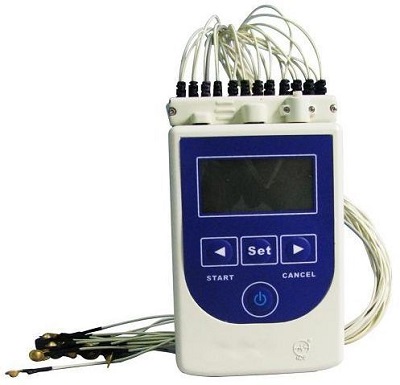

Without going into detail about the company's whole wide range of products in development and production (devices for encephalography, polysomnography, myography, cardiography and much more), I will describe the project I worked on and a next-generation prototype that I was allowed to work out on paper: my own “swan song” at the company. The project was a system for electroencephalographic studies whose distinctive feature was an autonomous, compact data acquisition unit; today it would be called mobile. At the time this was a truly revolutionary solution, and not even every medical equipment manufacturer in the world had one in its lineup. The main advantage was that the patient whose EEG is being recorded is not tethered to a workstation by a cable bundle and can move or sleep freely. This is especially valuable for young patients: with certain conditions, wires trailing from the head provoked constant tantrums, which greatly complicated the examination.

Thus, the requirements for the primary data collection unit were rather stringent and contradictory:

- The minimum possible weight and size of the dust- and waterproof case (without cable and sensors): ideally 200-250 grams and the size of a mobile phone (yes, phones in 2004 were bigger than they are now).

- Battery life of several hours, ideally 12 hours.

- A continuous wireless link to the diagnostician's workstation, with a data channel capacity of 0.5 Mbit/s, ideally 2-3 Mbit/s, and stable communication at distances up to 10 m.

- The ability to group devices into a peer-to-peer network, and to manage groups of devices registered on the workstation's local network securely and with high noise immunity.

- Primary real-time digital signal processing of 19 or more channels on a DSP: filtering, noise reduction, segmentation and packing of data for transmission.

- The ability to store daily monitoring data on removable media, and to download that data to the workstation without disassembling the device to remove the media.

- A control system for communication, configuration, data transmission and storage, charge control and alarms, also in real time. This is a job for a controller or a general-purpose processor.

- And then there is the small matter of certification by Rosstandart and approval from the Ministry of Health for use as medical equipment.
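To see why 0.5 Mbit/s was a comfortable floor, here is a rough bandwidth budget together with a toy version of the DSP's primary processing chain (filter, segment, pack). This is a minimal Python sketch under stated assumptions: only the 19 channels and the link figure come from the requirements; the 500 Hz sample rate, 16-bit samples, the crude moving-average filter and the frame header layout are hypothetical, not the device's actual parameters or format.

```python
import struct
from typing import List, Sequence

# Assumed acquisition parameters: only the channel count and the 0.5 Mbit/s
# link floor come from the requirements; rate and sample width are guesses.
N_CHANNELS = 19
SAMPLE_RATE_HZ = 500
BITS_PER_SAMPLE = 16
LINK_FLOOR_BPS = 500_000

# Hypothetical frame header: sequence number (u32), channel (u8), sample count (u16).
FRAME_HEADER = struct.Struct("<IBH")


def raw_bitrate(channels: int, rate_hz: int, bits: int) -> int:
    """Payload bit rate before framing or compression."""
    return channels * rate_hz * bits


def framed_bitrate(channels: int, rate_hz: int, block: int) -> float:
    """Bit rate including one header per block of 16-bit samples per channel."""
    frame_bytes = FRAME_HEADER.size + 2 * block
    frames_per_s = channels * rate_hz / block
    return frames_per_s * frame_bytes * 8


def moving_average(samples: Sequence[int], width: int) -> List[int]:
    """Crude causal low-pass filter: integer (floor) mean of the last `width` samples."""
    out: List[int] = []
    acc = 0
    for i, s in enumerate(samples):
        acc += s
        if i >= width:
            acc -= samples[i - width]  # drop the sample that left the window
        out.append(acc // min(i + 1, width))
    return out


def segment(samples: Sequence[int], block: int) -> List[List[int]]:
    """Split one channel's stream into fixed-size blocks (the last may be short)."""
    return [list(samples[i:i + block]) for i in range(0, len(samples), block)]


def pack_frame(seq: int, channel: int, block: Sequence[int]) -> bytes:
    """Serialize one block as header + little-endian int16 samples."""
    header = FRAME_HEADER.pack(seq, channel, len(block))
    return header + struct.pack(f"<{len(block)}h", *block)


if __name__ == "__main__":
    print(raw_bitrate(N_CHANNELS, SAMPLE_RATE_HZ, BITS_PER_SAMPLE))  # 152000
    print(framed_bitrate(N_CHANNELS, SAMPLE_RATE_HZ, 50))            # 162640.0
```

Under these assumptions the raw stream is about 152 kbit/s and framing adds roughly 7%, so 0.5 Mbit/s leaves headroom for retransmissions, while the "ideal" 2-3 Mbit/s would cover higher sample rates or more channels. A real DSP would use properly designed FIR/IIR filters rather than a moving average.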

As usual, the first prototype was built from existing solutions: the company's proven DSP-based circuit from Analog Devices (the most labor-intensive part of the device) with DRAM, EEPROM and a clock generator, previously used in stationary devices, and all the remaining peripherals were hung on it:

- CF Memory Card Controller

- USB controller

- Sound input module

- Acceleration sensors

- Light / sound indication system

- Charge controller

- Bluetooth interface with its own controller (the only wireless solution at the time that met the requirements)

It was a monster in every sense. The company threw its best people at accelerated development (in order to conquer the market), and it still took almost a year to get the product to the first working samples. At the same time, this first product “made a small revolution” in the industry, and it promised an even bigger one if we could design a fundamentally new system that would not require such incredible effort to connect all these peripherals to the DSP and to orchestrate this whole light-and-sound show from a program written in a low-level language. Yes, everything that conventional systems handle with drivers is, in embedded systems, controlled from the main program loop. Can you imagine a Bluetooth protocol stack, file system support, or an algorithm that distributes writes across flash memory addresses, written from scratch in assembler or embedded C? No? Welcome to the world of embedded devices!

(The photo deliberately shows a different encephalograph, but in general it reflects the industry's demand.)
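For readers who have never worked without an OS, the “main program loop” style looks roughly like the following polling superloop. This is a minimal Python simulation, not real firmware: the peripheral names and handlers are hypothetical, real code would be C reading hardware status registers in an endless loop, and the bounded iteration count exists only so the sketch terminates.

```python
from collections import deque
from typing import Callable, Deque, Dict, Iterable, List


class PolledPeripheral:
    """Hypothetical peripheral with a 'data ready' flag and a read register."""

    def __init__(self, name: str, events: Iterable[str]):
        self.name = name
        self._pending: Deque[str] = deque(events)

    def ready(self) -> bool:
        return bool(self._pending)

    def read(self) -> str:
        return self._pending.popleft()


def superloop(peripherals: List[PolledPeripheral],
              handlers: Dict[str, Callable[[str], str]],
              max_iterations: int) -> List[str]:
    """Round-robin polling: every pass checks each peripheral once.

    Real firmware loops forever; max_iterations only lets the sketch stop.
    A slow handler delays every other peripheral, which is why timing in
    such systems has to be budgeted by hand.
    """
    log: List[str] = []
    for _ in range(max_iterations):
        for p in peripherals:
            if p.ready():
                log.append(handlers[p.name](p.read()))
    return log


adc = PolledPeripheral("adc", ["block0", "block1"])
bt = PolledPeripheral("bluetooth", ["connect"])
handlers = {"adc": lambda e: f"store {e}", "bluetooth": lambda e: f"handle {e}"}
print(superloop([adc, bt], handlers, max_iterations=3))
# ['store block0', 'handle connect', 'store block1']
```

Every protocol stack and file system has to be written so that each of its steps fits into one such handler call without starving the others, which is exactly what made the approach so labor-intensive.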

It became clear that to go further, the device needed a System-on-Chip at its heart that would handle all the tasks described with its own CPU and interfaces, without extremely time-consuming programming. At that point I was pulled off the almost-finished first prototype and set free to explore: analyze the market, compare solutions ranging from microcontrollers to ready-made mini-computers and, most importantly, figure out how to reuse existing software infrastructure, open source or proprietary. As it turned out (surprise!), assembling the device in hardware is a matter of a month, while programming it and bringing it to life takes far more. And getting these revived devices onto a network takes a miracle. Meanwhile, the rest of the system developers department pursued a different, more conservative direction that did not depart much from the “DSP with control functions” concept.

It is hard to fit into one article a review of the options we considered (I think we considered all of them) and of what we weighed when choosing, because besides the main device we needed to build a system platform for other products that would be migrated to it over time. Some of the planned devices required a display, which changed the approach dramatically and turned the search from controllers toward SoCs or even mini-computers, for which there is traditionally a fairly wide choice of operating systems, software stacks and drivers. Other devices were simple enough for microcontrollers, but needed powerful peripherals, because they also had to interact with workstations and diagnostic equipment. One example is the patient response stimulation system: a set of light and sound stimulators which, when activated according to a given algorithm, provoke characteristic waves in the brain of patients with certain conditions (epilepsy, for one), and the encephalograph records these before an attack sets in. Previously, such stimulators were driven by an additional PC, and the main station interacted with it (preparation, synchronization). Now imagine how much work the communication stack alone would take if you built such a device on a simple microcontroller.

(This is what a rack-mounted photic and sound stimulator, rigidly tied to a workstation, looks like.)
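The timing side of that synchronization can be sketched as follows: the stimulator fires trains of flashes at several frequencies, and every flash is translated into an EEG sample index so the workstation can align stimuli with the recording. This is a minimal Python sketch; the frequencies, train and rest durations, the sample rate and the assumption that both clocks are already synchronized are illustrative, not a clinical protocol.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class Marker:
    sample_index: int  # where the flash falls in the EEG recording
    freq_hz: float     # stimulation frequency of the train it belongs to


def photic_schedule(freqs_hz: Sequence[float], train_s: float, rest_s: float,
                    eeg_rate_hz: int, start_sample: int = 0) -> List[Marker]:
    """Flash trains at each frequency, separated by rest periods.

    Each flash is converted to an EEG sample index so the workstation can
    align stimuli with the recording (assumes the stimulator's and the EEG
    unit's clocks have already been synchronized).
    """
    markers: List[Marker] = []
    train_start = 0.0
    for f in freqs_hz:
        n_flashes = round(train_s * f)
        for k in range(n_flashes):
            t = train_start + k / f  # seconds from the start of the session
            markers.append(Marker(start_sample + round(t * eeg_rate_hz), f))
        train_start += train_s + rest_s
    return markers


sched = photic_schedule([1, 2], train_s=2, rest_s=1, eeg_rate_hz=100)
print([m.sample_index for m in sched])  # [0, 100, 300, 350, 400, 450]
```

On a bare microcontroller, both the clock synchronization this sketch assumes and the transport for these markers would have to be implemented by hand, which is the workload the paragraph above refers to.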

Naturally, my search kept crossing paths with x86-based hardware, as the most promising option in terms of the estimated complexity of software development (forgive me the jargon). As for ARM, or rather its software ecosystem, we had barely heard of it yet. However, the industrial boards available with a passively cooled i486 (passively cooled Pentiums did not exist) were still so large and power-hungry (up to 12-15 W) that using them was out of the question.

And then the famous XScale PXA255 appeared on the market: an Intel SoC with a 400 MHz ARMv5TE core (a successor to StrongARM) that had everything we needed in hardware, and more. Boards for it were supplied by various manufacturers along with a basic set of startup software: a minimal Linux with BusyBox, drivers, an IP stack and a Bluetooth protocol stack. All we had to do was connect our main data source, the DSP module, to it over the bus and “just” write a driver for it. Did we have experience writing drivers for ARM? Of course not. There was also one more trifle: dealing with a new feature of Linux kernel 2.6, kernel preemption, to maintain “soft real time”. But that would be the second stage, since the large amount of on-board memory and the processor's capabilities made it possible to pack data on the fly, so the hard real-time requirements were relaxed. We also looked at possible “ready-made solutions” from various embedded OS vendors, from MontaVista Linux to QNX Neutrino.
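Since the DSP module was meant to stream data to the XScale board over the bus, the user-space side of that “just write a driver” task would at minimum have to reassemble frames from reads of arbitrary size. Below is a minimal Python sketch under assumed conditions: the sync word 0xA55A, the header layout and the device node mentioned afterwards are hypothetical, not the format the company actually used.

```python
import struct
from typing import List, Tuple

SYNC = 0xA55A                    # hypothetical frame marker
HEADER = struct.Struct("<HIH")   # sync (u16), sequence number (u32), sample count (u16)


def make_frame(seq: int, samples: List[int]) -> bytes:
    """Build a frame the way the (hypothetical) DSP firmware would emit it."""
    return HEADER.pack(SYNC, seq, len(samples)) + struct.pack(f"<{len(samples)}h", *samples)


class FrameParser:
    """Reassembles frames from reads of arbitrary size, e.g. from a character device."""

    def __init__(self) -> None:
        self._buf = bytearray()

    def feed(self, chunk: bytes) -> List[Tuple[int, List[int]]]:
        self._buf += chunk
        frames: List[Tuple[int, List[int]]] = []
        while len(self._buf) >= HEADER.size:
            sync, seq, count = HEADER.unpack_from(self._buf)
            if sync != SYNC:
                del self._buf[0]          # lost sync: slide forward one byte
                continue
            total = HEADER.size + 2 * count
            if len(self._buf) < total:
                break                     # header seen, payload not complete yet
            samples = list(struct.unpack_from(f"<{count}h", self._buf, HEADER.size))
            frames.append((seq, samples))
            del self._buf[:total]
        return frames


stream = b"\x00\x01" + make_frame(1, [10, -20]) + make_frame(2, [3])
parser = FrameParser()
frames = []
for i in range(0, len(stream), 3):        # deliver the bytes in awkward 3-byte reads
    frames.extend(parser.feed(stream[i:i + 3]))
print(frames)  # [(1, [10, -20]), (2, [3])]
```

A real reader would call `parser.feed(dev.read(4096))` in a loop on the driver's device node (say, a hypothetical `/dev/dsp_eeg`). Byte-wise resynchronization is the simplest recovery strategy; a production format would also carry a CRC, so that a sync word occurring inside sample data cannot be mistaken for a header.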

To get to know the XScale software ecosystem better, I rushed off to the IDF, which was then held in Moscow, and began pestering Intel engineers about every possible detail of software support from the Intel Software Services Group. Imagine my disappointment when, apart from a few utilities for developing custom applications (under Windows CE!), Intel offered nothing. Well, almost nothing. Later, deep in the depths of Intel's website of the time, which was a dump of precious articles and materials, I fished out a Linux-based software kit that was even more limited than what the module makers offered for money: only BusyBox and a cross compiler. Apparently, the module makers had used this very kit as their base.

I thus learned that Intel cared only about ODMs, in particular Dell, Acer and Compaq, who were finishing their PDA race under Windows CE, and that small mobile equipment manufacturers did not interest it at all. Of course, I made a desperate attempt to cobble together a makeshift prototype and show at least some results, but the pricing of the StarterKit developer boards (aimed at the same Microsoft, Dell and others) simply killed the whole idea.

Meanwhile, the system developers department received the first OMAP boards from Texas Instruments. I don't remember exactly which series it was, 5912 I think, but it had a C55xx-series DSP, one of the best fixed-point signal processors on the market, controlled by a 204 MHz ARM926EJ-S core. The board and StarterKit were not cheap either, but do you know what melted the heart of our manager, who was also chairman of the scientific council? He readily allocated several thousand dollars because Code Composer Studio for OMAP came with the board: a complete software suite for emulation and for developing real-time applications for the DSP and ARM processors in C/C++, with lots of libraries, drivers and

The platform was perfect. In hardware terms, everything you need: an excellent DSP, an economical general-purpose CPU and plenty of peripherals. And a system programmer's dream: a complete set of tools for emulation, development and debugging. Did it work as the datasheets said? No! The hardware mostly worked, but not always predictably. The software (CCS) had been outsourced to a Chinese contractor; the studio crashed constantly, and the drivers did not work as expected and had to be rewritten. Did the work as a whole take about as much time as it would have with XScale? I think so. But the initial start was much faster and more successful with TI's solution.

However, as far as we know, despite TI's much longer-term support policy for its hardware and the continued development of the OMAP platform, the company abandoned its plans to play a key role in the mobile market, losing out to cheaper and less technologically advanced platforms. Intel did not last long there either, selling its XScale business to Marvell. What should small companies developing compact, wearable, portable and other mobile devices do? I think that if the form factor (that is, size and weight) allows, you should build the most versatile solutions possible using standard development tools, including open ones (and perhaps even preferably open ones).

And I allowed myself to dream about what the system would look like if we had today's hardware at our disposal, while keeping in mind the financial and staffing constraints of a small company. For the DSP, I would certainly take a TI processor, for example one from the C6204 series. We do not need a chip with a PCI bus, since it is not compatible with PCI Express anyway, but the Expansion Bus can probably be interfaced (although this will take extra effort). Yes, TI has KeyStone processors with an ARM core, but we have already been through this: with system developers and Windows programmers for the workstation on staff, hiring even more people, or forcing the system engineers to write drivers and a control system for ARM, would be an unaffordable luxury. Although, as far as I know, my colleagues went precisely along this path, and it was solely because they already

Next, in my opinion, the system should be managed by a general-purpose module with rich peripherals, for which there is a huge choice of open source applications and development tools, so that programmers do not need to learn yet another microarchitecture and assembly language (after all, they were not born knowing DSP assembly; they came from the good old x86 world). And if the processor vendor adds its own tool stack, so much the better. The system should work out of the box, and the communication software developers should be able to write their layers without waiting for the hardware to appear. Developing wireless components should not even be an issue. An SoC with an x86-compatible CPU would be ideal for this, if only x86 chips were controllers, and they never were. And here comes Intel Quark. Yes, it is still far from an ideal controller. Yes, built on a 32 nm process, it still consumes about 2 W at peak performance. But look at it from the other side (per the specifications):

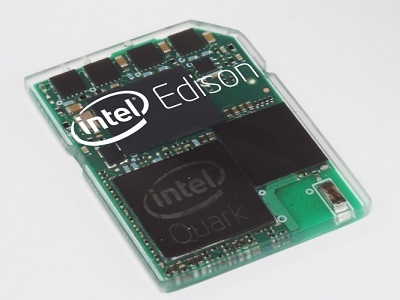

- There is already Edison, a Quark built on 22 nm technology. And this is not New Year's nonsense from tipsy marketers, as some commenters joked. Intel does not joke about announcements like this, especially when its CEO makes them at the Consumer Electronics Show 2014.

- The chip supports the C0-C2 CPU power states and the S0, S3 and S4 system power states. So it will not draw 2 W all the time, but mainly while packing and transmitting data.

- Intel has a roadmap for the Quark family of controllers (not yet published, but, as they say, stay tuned).

- The Quark core is scalable, meaning it does not need to be redesigned when moved to a newer, more power-efficient process technology.

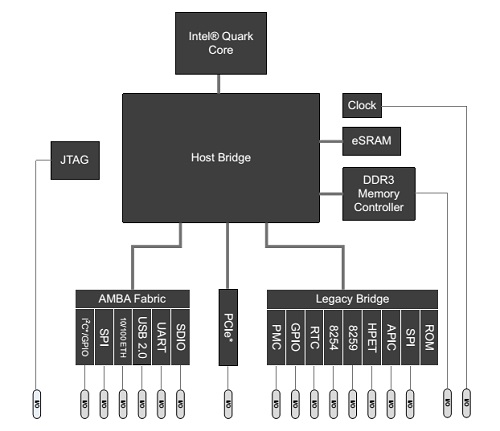

- A 32-bit 400 MHz core with the Pentium ISA, a DDR3 memory controller at 800 MT/s, fast on-chip eSRAM, and PCI Express at 2.5 GT/s. If needed, and if the power budget allows, such a core could even process the recorded signals itself, for example for logging or for signaling critical states in a mobile cardiograph.

- Peripherals: 10/100 Ethernet, USB 2.0, Wi-Fi, an SD card controller and, most interesting of all, an I2C controller and 16 GPIO ports, 6 of which keep working even while the system sleeps in the S3 state. That opens up great possibilities for connecting the many kinds of sensors that special-purpose devices usually have in abundance.
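The point about power states can be made concrete with a duty-cycle estimate: average power is P_avg = d·P_active + (1 - d)·P_sleep, where d is the fraction of time spent active, and runtime is battery energy divided by P_avg. A minimal Python sketch: the 2 W active figure comes from the text, while the 0.2 W sleep figure and the 7.4 Wh battery are placeholders, and the estimate covers the control module only (the DSP and the analog front end would add to it).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PowerProfile:
    active_w: float  # drawn while packing/transmitting (article: ~2 W peak)
    sleep_w: float   # drawn in a low-power state between bursts (assumed)


def average_power(p: PowerProfile, duty: float) -> float:
    """Time-weighted mean power for an active duty cycle between 0 and 1."""
    if not 0.0 <= duty <= 1.0:
        raise ValueError("duty must be between 0 and 1")
    return duty * p.active_w + (1.0 - duty) * p.sleep_w


def runtime_hours(battery_wh: float, p: PowerProfile, duty: float) -> float:
    """Battery energy divided by mean power."""
    return battery_wh / average_power(p, duty)


def max_duty_for(target_h: float, battery_wh: float, p: PowerProfile) -> float:
    """Largest duty cycle that still meets the runtime target (negative if impossible)."""
    return (battery_wh / target_h - p.sleep_w) / (p.active_w - p.sleep_w)


quark = PowerProfile(active_w=2.0, sleep_w=0.2)  # sleep figure is a placeholder
battery_wh = 7.4                                 # hypothetical 3.7 V, 2000 mAh cell
print(round(runtime_hours(battery_wh, quark, 0.25), 1))  # 11.4
print(round(max_duty_for(12, battery_wh, quark), 3))     # 0.231
```

With these placeholder numbers, hitting the 12-hour target means keeping the module active less than about a quarter of the time, which is why the sleep states matter more than the peak figure.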

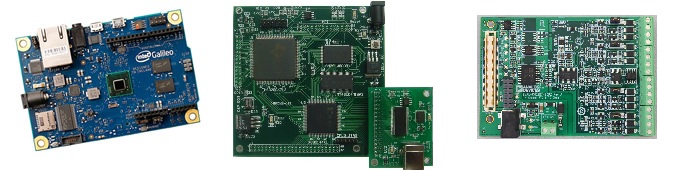

Of course, building the device from modules introduces some functional redundancy: you need duplicate DRAM, EEPROM and voltage regulators. Still, it is worth noting that the lion's share of space in the device is taken not by the system module but by the battery and the analog module with transformers, multichannel operational amplifiers and ADCs. In return, we get practically independent parts of the project that can be moved from one device to another without significant rework and developed by different teams almost asynchronously. Had a ready-made control module been available to us back then, we could have released the product not in a year, but in three to four months. That is a promising release cycle for updated modular devices.

(The three modules from which a prototype of a mobile electroencephalograph could be built.)
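The independence of modules, and the idea that communication software can be written before the hardware exists, comes down to programming against an interface. A minimal Python sketch, assuming a hypothetical `SampleSource` boundary between the acquisition module and the control module, with a simulated sine source standing in for the real DSP board:

```python
import math
from abc import ABC, abstractmethod
from typing import Dict, List


class SampleSource(ABC):
    """Hypothetical boundary between the acquisition (DSP) module and the control module."""

    @abstractmethod
    def read_block(self, n: int) -> List[int]:
        """Return the next n samples of one channel."""


class SimulatedSource(SampleSource):
    """Stand-in used before the hardware exists: a synthetic sine (10 Hz by default)."""

    def __init__(self, rate_hz: int, freq_hz: float = 10.0, amplitude: int = 100):
        self.rate_hz = rate_hz
        self.freq_hz = freq_hz
        self.amplitude = amplitude
        self._t = 0  # running sample counter, so consecutive blocks are continuous

    def read_block(self, n: int) -> List[int]:
        w = 2 * math.pi * self.freq_hz / self.rate_hz
        block = [round(self.amplitude * math.sin(w * (self._t + i))) for i in range(n)]
        self._t += n
        return block


class Uplink:
    """Communication layer written only against SampleSource, never against hardware."""

    def __init__(self, source: SampleSource, block: int):
        self.source = source
        self.block = block
        self.seq = 0

    def next_packet(self) -> Dict[str, object]:
        packet = {"seq": self.seq, "samples": self.source.read_block(self.block)}
        self.seq += 1
        return packet


uplink = Uplink(SimulatedSource(rate_hz=250), block=25)
print(uplink.next_packet()["seq"], uplink.next_packet()["seq"])  # 0 1
```

When the real module arrives, only a new `SampleSource` subclass has to be written; `Uplink` and everything built on it stay unchanged.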

And most importantly, Intel is finally accompanying its new platforms with a set of tools for development, debugging and optimization. I will set aside the module variant with an extended temperature range and the Wind River Linux and VxWorks bundled with it. Those kits are intended for the industrial sector, for devices that demand high reliability, and will most likely cost a lot.

The Board Support Package for the Intel Quark SoC X1000 reference board comes with a patched Quark Linux (Yocto) and a kernel debugging kit based on OpenOCD with gdb or Eclipse, supporting a couple of JTAG hardware probes. According to insider information, a wider set of system software will be presented at Mobile World Congress '14 in Barcelona, which should make it even easier to get started with the module. For application developers, Intel offers toolkits for both conventional Intel Core processors and SoCs such as the Atom-based ones. The new Intel System Studio 2014 (ISS) is already available in beta: in addition to the BSP and a large number of open source tools, it provides a C/C++ cross-compiler, a JTAG debugger, performance libraries, and tools for remote profiling and validation of user applications.

It is still too early to say anything definite, but I really hope that this time Intel has not underestimated the importance of system software and application tools for its controller, and that ISS will fully support Quark processors, as it does for all the other families from Intel Atom to Xeon Phi. I would like to wish the community of developers and enthusiasts not to write off Intel's first attempt to enter the world of “big” controllers (in the world of microcontrollers, Intel has already made a serious contribution) and to successfully bring their engineering fantasies to life: today there are far more possibilities.

Source: https://habr.com/ru/post/209940/
