Creating reliable iSCSI storage on Linux, part 1

Part two

Today I will tell you how I created a low-cost, fault-tolerant iSCSI storage from two Linux-based servers to serve the needs of a VMWare vSphere cluster. There were similar articles ( for example ), but my approach is somewhat different, and the solutions (the same heartbeat and iscsitarget) used there are already outdated.

The article is intended for sufficiently experienced administrators who are not afraid of the phrase “patch and compile the kernel”, although some parts could be simplified and dispensed with no compilation at all, but I will write as I did myself. Some simple things I will skip so as not to inflate the material. The purpose of this article is rather to show general principles, and not to put everything in steps.

')

My requirements were simple: create a cluster for running virtual machines that does not have a single point of failure. And as a bonus, the repository had to be able to encrypt the data so that the enemies, having dragged the server away, would not get to them.

VSphere was chosen as the hypervisor as the most well-established and complete product, and iSCSI as the protocol, as not requiring additional financial investments in the form of FC or FCoE switches. With open source SAS targets, it’s pretty tight, if not worse, so this option was also rejected.

Left storage. Different branded solutions from leading vendors were discarded due to the high cost of both their own and the licenses for synchronous replication. So we will do it ourselves, at the same time and learn.

As the software was selected:

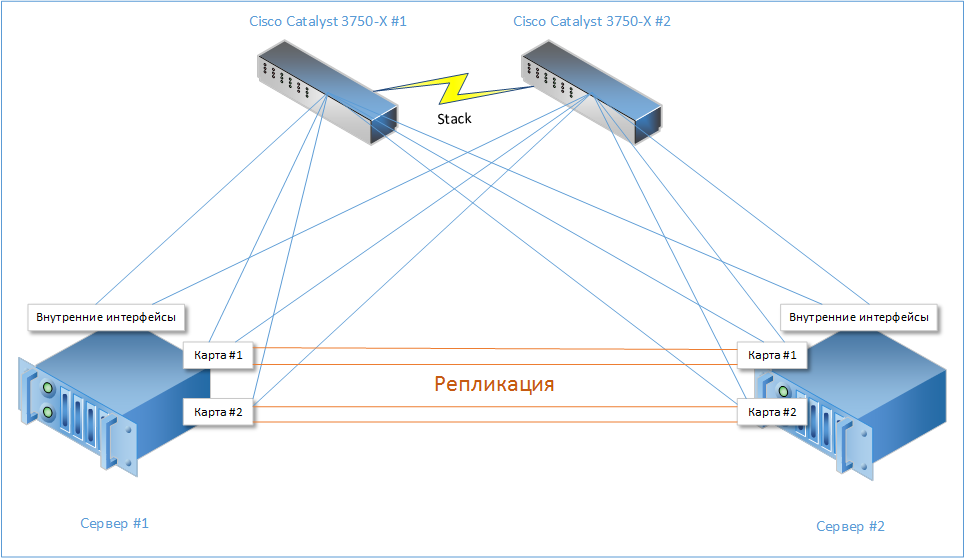

As a result, such a simple scheme was born in a brief torment:

It shows that each of the servers has 10 gigabit interfaces (2 built-in and 4 on additional network cards). 6 of them are connected to the stack of switches (3 to each), and the remaining 4 - to the server-neighbor.

Then replication through DRBD will go with them. Replication cards can be replaced with 10-Gbps if you wish, but I had these on hand, so "I blinded him from what was."

Thus, the sudden death of any of the cards will not lead to complete inoperability of any of the subsystems.

Since the main task of these storages is reliable storage of big data (file server, mail archives, etc.), servers with 3.5 "disks were selected:

I created two RAID10 arrays of 8 disks on each of the servers.

I decided to refuse RAID6 because there was plenty of space, and the performance of RAID10 on random access tasks is higher. Plus, the rebuild time is lower and the load goes only on one disk, and not on the whole array at once.

In general, here everyone decides for himself.

With the iSCSI protocol, it’s pointless to use Bonding / Etherchannel to speed up its work.

The reason is simple - it uses hash functions to distribute packets across channels, so it is very difficult to find such IP / MAC addresses so that a packet from IP1 to IP2 goes on one channel, and from IP1 to IP3 on another.

On cisco, there is even a command that allows you to see which of the Etherchannel interfaces the packet will fly off:

Therefore, for our purposes, it is much better to use several paths to the LUN, which we will configure.

On the switch, I created 6 VLANs (one for each external interface of the server):

Interfaces were made trunk for universality and something else will be seen later:

The MTU on the switch should be set to maximum in order to reduce the server load (larger packet -> fewer packets per second -> fewer interrupts are generated). In my case, this is 9198:

ESXi does not support MTU greater than 9000, so there is still some margin.

Each VLAN-u was chosen address space, for simplicity, which looks like this: 10.1. VLAN_ID .0 / 24 (for example - 10.1.24.0/24). With a shortage of addresses, you can keep within smaller subnets, but it is more convenient.

Each LUN will be represented by a separate iSCSI target, so each target has been selected “common” cluster addresses, which will be raised on the node serving this target at the moment: 10.1. VLAN_ID .10 and 10.1. VLAN_ID .20

The servers will also have permanent addresses for management, in my case it is 10.1.0.100/24 and .200 (in a separate VLAN)

So, here we install on both Debian servers in the minimum form, I will not dwell on this in detail.

I spent the assembly on a separate virtual machine so as not to litter the server with compilers and sources.

To build a kernel for Debian, it’s enough to install a build-essential meta-package, and perhaps something else trivially, I don’t remember exactly.

Download the latest kernel 3.10 with kernel.org : and unpack:

Next, download the latest revision of the stable SCST branch via SVN, generate a patch for our kernel version and apply it:

Now let's build the iscsi-scstd daemon:

The resulting iscsi-scstd will need to be put on the server, for example in / opt / scst

Next, configure the kernel for your server.

Enable encryption (if needed).

Do not forget to enable these options for SCST and DRBD:

Putting it in the form of a .deb package (for this you need to install the fakeroot, kernel-package and at the same time debhelper packages):

At the output we get the package kernel-scst-image-3.10.27_1_amd64.deb

Next, build the package for DRBD:

We change the debian / rules file to the following state (there is a standard file, but it does not collect kernel modules):

In the Makefile.in file, we will correct the SUBDIRS variable, remove documentation from it, otherwise the package will not pack up with the abuse on the documentation.

We collect:

Get the drbd_8.4.4_amd64.deb package

Everything, it is necessary to collect more like nothing, copy both packages to the servers and install:

My interfaces were renamed to /etc/udev/rules.d/70-persistent-net.rules as follows:

int1-6 go to the switch, and drbd1-4 go to the neighboring server.

/ etc / network / interfaces has an extremely frightening look, which even in a nightmare will not dream:

Since we want to have fault tolerance and server management, we use the military trick: in active-backup bonding, we collect not the interfaces themselves, but VLAN-subinterfaces. Thus, the server will be available as long as at least one interface is running. This is redundant, but the puruet would not be pas. And at the same time, the same interfaces can be freely used for iSCSI traffic.

For replication, the bond_drbd interface was created in balance-rr mode, in which packets are sent stupidly sequentially across all interfaces. He was assigned an address from the gray network / 24, but one could get by and / 30 or / 31 because there will be only two hosts.

Since this sometimes leads to the arrival of packets out of turn, we increase the buffer of extraordinary packets in /etc/sysctl.conf . Below I will give the whole file, which options I will not explain, for a very long time. You can independently read if desired.

According to the test results, the replication interface produces somewhere 3.7 Gbit / s , which is quite acceptable.

Since our server is multi-core, and network cards and a RAID controller are able to separate interrupt handling across several queues, a script was written that binds the interrupts to the cores:

Before exporting the disks, we encrypt them and save the master keys for every fireman:

The password must be written on the inside of the skull and never forget, and hide the key backups to far.

It should be borne in mind that after changing the password on the sections, the backup of the master key can be decrypted with the old password.

Next, a script was written to simplify decryption:

The script works with the UUIDs of the disks in order to always uniquely identify the disk in the system without reference to / dev / sd * .

The encryption speed depends on the processor frequency and the number of cores, and the recording is parallelized better than the reading. You can check the speed with which the server encrypts using the following simple method:

As you can see, the speeds are not so hot, but they will rarely be achieved in practice, because the random nature of access usually prevails.

For comparison, the results of the same test on the new Xeon E3-1270 v3 on the Haswell core:

Here, here it is much more fun. Frequency is the decisive factor, apparently.

And if you deactivate AES-NI, it will be several times slower.

Configuring replication, configs from both ends should be 100% identical.

/etc/drbd.d/global_common.conf

Here the most interesting parameter is the protocol, let's compare them.

Recording is considered successful if the block was recorded ...

The slowest (read - high latency) and, at the same time, reliable is C , and I chose a middle ground.

Next comes the definition of the resources with which the DRBD and the nodes involved in their replication operate.

/etc/drbd.d/VM_STORAGE_1.res

/etc/drbd.d/VM_STORAGE_2.res

Each resource has its own port.

Now we initialize the metadata of the DRBD resources and activate them; this needs to be done on each server:

Next, you need to select a single server (you can have your own for each resource) and determine that it is the main one and the primary synchronization will go to another one:

Everything went, went, synchronization began.

Depending on the size of the arrays and the speed of the network, it will take a long or very long time.

You can watch the progress with the watch command -n0.1 cat / proc / drbd , it pacifies and tunes philosophically.

In principle, the devices can already be used in the synchronization process, but I advise you to relax :)

For one part, I think that's enough. And so much information to absorb.

In the second part I will talk about setting up the cluster manager and ESXi hosts to work with this product.

Prelude

Today I will describe how I built a low-cost, fault-tolerant iSCSI storage system from two Linux servers to serve a VMware vSphere cluster. Similar articles have been published before, but my approach is somewhat different, and the tools used there (heartbeat and iscsitarget) are by now outdated.

This article is aimed at reasonably experienced administrators who are not scared by the phrase “patch and compile the kernel”. Some parts could be simplified to avoid compilation altogether, but I will describe things the way I actually did them. I will skip some simple steps so as not to bloat the text. The goal is to show the general principles rather than to give a step-by-step recipe.

Introduction

My requirements were simple: build a cluster for running virtual machines that has no single point of failure. As a bonus, the storage had to be able to encrypt the data, so that an adversary who carried a server away would not get at it.

vSphere was chosen as the hypervisor because it is the most mature and complete product, and iSCSI as the protocol because it does not require additional spending on FC or FCoE switches. Open source SAS targets are scarce at best, so that option was rejected as well.

That left the storage itself. Branded solutions from the leading vendors were ruled out because of the high cost of both the hardware and the licenses for synchronous replication. So we will build it ourselves and learn something along the way.

The software stack I chose:

- Debian Wheezy + LTS kernel 3.10

- iSCSI target SCST

- DRBD for replication

- Pacemaker for cluster resource management and monitoring

- DM-Crypt kernel subsystem for encryption (AES-NI instructions in the processor will help us a lot)

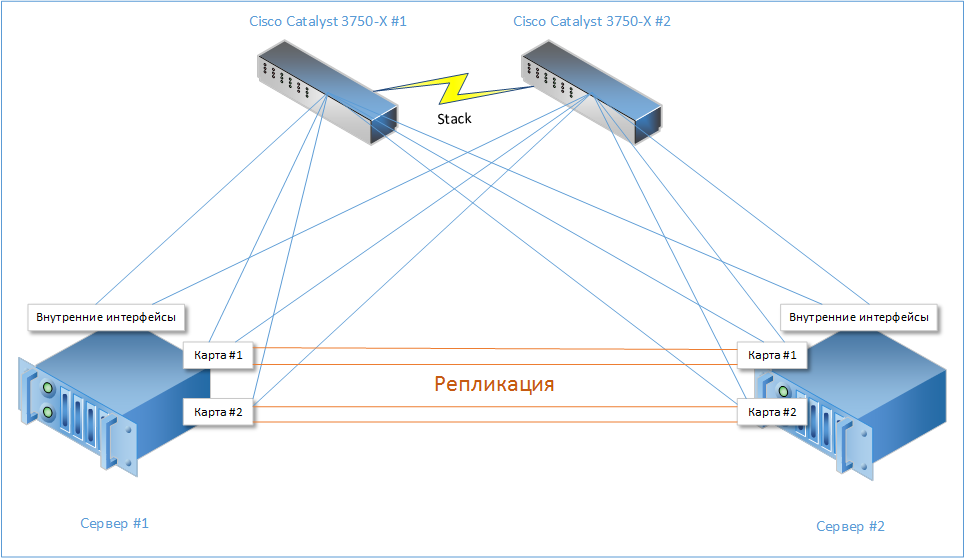

After some brief agonizing, this simple design was born.

The diagram shows that each server has ten Gigabit Ethernet interfaces (2 on-board and 8 on add-on network cards). Six of them are connected to the switch stack (3 to each switch), and the remaining four go to the neighboring server.

DRBD replication runs over those four links. The replication cards can be replaced with 10 Gbit/s ones if you wish, but I had these on hand, so I made do with what I had.

Thus, the sudden death of any one card will not take any of the subsystems down completely.

Since the main task of this storage is reliable storage of large volumes of data (file server, mail archives, etc.), servers with 3.5" disks were selected:

- Supermicro SC836E26-R1200 chassis for 16 3.5" disks

- Motherboard Supermicro X9SRi-F

- Intel E5-2620 processor

- 4 x 8GB DDR3 ECC memory

- LSI 9271-8i RAID controller with supercapacitor for emergency cache flush to flash module

- 16 x Seagate Constellation ES.3 3 TB SAS disks

- 2 x 4-port Intel Ethernet I350-T4 network cards

Down to business

Disks

I created two RAID10 arrays of 8 disks on each of the servers.

I decided against RAID6 because there was plenty of space and RAID10 performs better on random-access workloads. In addition, the rebuild time is shorter and the rebuild load falls on a single disk rather than on the whole array.

In the end, this is a choice everyone makes for themselves.

Network part

With iSCSI there is little point in using bonding / EtherChannel to speed things up.

The reason is simple: bonding distributes packets across links with a hash function, so it is very hard to pick IP / MAC addresses such that traffic from IP1 to IP2 takes one link and traffic from IP1 to IP3 takes another.

Cisco switches even have a command that shows which EtherChannel member interface a given packet will leave through:

    # test etherchannel load-balance interface port-channel 1 ip 10.1.1.1 10.1.1.2
    Would select Gi2/1/4 of Po1

So for our purposes it is much better to use several paths to the LUN, which is what we will configure.
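To see why a hash pins every address pair to one link, here is a toy model. It assumes the link is chosen by XOR-ing the last octets of the two IP addresses and taking the result modulo the number of links; that is a simplification for illustration, not the switch's actual algorithm, and `pick_link` is a made-up name.

```shell
#!/bin/sh
# Toy model (an assumption, not the real switch hash):
# link index = (last octet of src XOR last octet of dst) mod number of links.
pick_link() {
    src_octet=${1##*.}   # last octet of the source IP
    dst_octet=${2##*.}   # last octet of the destination IP
    links=$3             # number of links in the channel
    echo $(( ($src_octet ^ $dst_octet) % $links ))
}

pick_link 10.1.1.1 10.1.1.2 4   # a given pair always maps to the same link
pick_link 10.1.1.1 10.1.1.6 4   # a different peer can land on that same link
pick_link 10.1.1.1 10.1.1.3 4   # or, by luck, on another one
```

In this model a single initiator/target pair never uses more than one link, and two different pairs can collide, which is exactly the problem multipathing avoids.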

On the switch I created 6 VLANs (one for each external interface of the server):

    stack-3750x# sh vlan | i iSCSI
    24 iSCSI_VLAN_1 active
    25 iSCSI_VLAN_2 active
    26 iSCSI_VLAN_3 active
    27 iSCSI_VLAN_4 active
    28 iSCSI_VLAN_5 active
    29 iSCSI_VLAN_6 active

The interfaces were configured as trunks, both for flexibility and for another reason that will become clear later:

    interface GigabitEthernet1/0/11
     description VMSTOR1-1
     switchport trunk encapsulation dot1q
     switchport mode trunk
     switchport nonegotiate
     flowcontrol receive desired
     spanning-tree portfast trunk
    end

The MTU on the switch should be set to the maximum to reduce server load (larger packets -> fewer packets per second -> fewer interrupts). In my case this is 9198:

    (config)# system mtu jumbo 9198

ESXi does not support an MTU greater than 9000, so there is still some headroom.
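The effect of the MTU on packet rate is easy to estimate. The sketch below divides the link speed by the packet size; it ignores Ethernet framing and protocol headers, so the numbers are rough upper bounds rather than measurements, and `pps` is just an illustrative helper.

```shell
#!/bin/sh
# Rough packets-per-second estimate at full line rate.
# $1: link speed in bit/s, $2: packet size in bytes (framing overhead ignored).
pps() {
    echo $(( $1 / 8 / $2 ))
}

pps 1000000000 1500   # standard MTU on a 1 Gbit/s link
pps 1000000000 9000   # jumbo frames on the same link
```

With these assumptions a saturated gigabit link carries about 83 thousand packets per second at MTU 1500 and about 14 thousand at MTU 9000, a sixfold reduction in packets the server has to handle.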

Each VLAN got its own address space, which for simplicity has the form 10.1.VLAN_ID.0/24 (for example, 10.1.24.0/24). If addresses are scarce you can fit into smaller subnets, but this way is more convenient.

Each LUN will be presented as a separate iSCSI target, so each target was given “shared” cluster addresses that are brought up on whichever node is serving that target at the moment: 10.1.VLAN_ID.10 and 10.1.VLAN_ID.20.

The servers also have permanent management addresses, in my case 10.1.0.100/24 and 10.1.0.200/24 (in a separate VLAN).
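The addressing scheme above can be written out mechanically. The helper below (the name `vlan_plan` is invented for this illustration) prints the subnet and the two floating target addresses for each of the six iSCSI VLANs created on the switch.

```shell
#!/bin/sh
# Print the subnet and the two shared target addresses for one VLAN ID,
# following the 10.1.VLAN_ID.0/24 convention described in the text.
vlan_plan() {
    vlan=$1
    echo "VLAN $vlan: subnet 10.1.$vlan.0/24, targets 10.1.$vlan.10 and 10.1.$vlan.20"
}

for vlan in 24 25 26 27 28 29; do
    vlan_plan "$vlan"
done
```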

Software

Install a minimal Debian on both servers; I will not go into detail here.

Build Packages

I did the build on a separate virtual machine so as not to clutter the servers with compilers and sources.

To build a kernel on Debian it is enough to install the build-essential meta-package and perhaps a few other small things; I do not remember exactly.

Download the latest 3.10 kernel from kernel.org and unpack it:

    # cd /usr/src/
    # wget https://www.kernel.org/pub/linux/kernel/v3.x/linux-3.10.27.tar.xz
    # tar xJf linux-3.10.27.tar.xz

Next, check out the latest revision of the stable SCST branch via SVN, generate a patch for our kernel version and apply it:

    # svn checkout svn://svn.code.sf.net/p/scst/svn/branches/2.2.x scst-svn
    # cd scst-svn
    # scripts/generate-kernel-patch 3.10.27 > ../scst.patch
    # cd /usr/src/linux-3.10.27
    # patch -Np1 -i ../scst.patch

Now build the iscsi-scstd daemon:

    # cd /usr/src/scst-svn/iscsi-scst/usr
    # make

The resulting iscsi-scstd binary will need to be copied to the servers, for example into /opt/scst.

Next, configure the kernel for your server.

Enable encryption (if needed).

Do not forget to enable these options for SCST and DRBD:

    CONFIG_CONNECTOR=y
    CONFIG_SCST=y
    CONFIG_SCST_DISK=y
    CONFIG_SCST_VDISK=y
    CONFIG_SCST_ISCSI=y
    CONFIG_SCST_LOCAL=y

Build the kernel as a .deb package (this requires the fakeroot, kernel-package and debhelper packages):

    # fakeroot make-kpkg clean prepare
    # fakeroot make-kpkg --us --uc --stem=kernel-scst --revision=1 kernel_image

The result is the package kernel-scst-image-3.10.27_1_amd64.deb.
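A forgotten option only shows up after a long build, so a quick check of .config beforehand can save time. The sketch below is not part of the original procedure: the function name `check_kernel_config` is hypothetical, and the option list is simply the one given above.

```shell
#!/bin/sh
# Report which of the required options are not set to "y" in a kernel config.
# $1: path to the .config file. Returns non-zero if anything is missing.
check_kernel_config() {
    config=$1
    missing=0
    for opt in CONFIG_CONNECTOR CONFIG_SCST CONFIG_SCST_DISK \
               CONFIG_SCST_VDISK CONFIG_SCST_ISCSI CONFIG_SCST_LOCAL; do
        if ! grep -q "^$opt=y\$" "$config"; then
            echo "missing: $opt"
            missing=1
        fi
    done
    return $missing
}

# Example call (path taken from the build steps in this article):
#   check_kernel_config /usr/src/linux-3.10.27/.config && echo "all options enabled"
```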

Next, build the package for DRBD:

    # wget http://oss.linbit.com/drbd/8.4/drbd-8.4.4.tar.gz
    # tar xzf drbd-8.4.4.tar.gz
    # cd drbd-8.4.4
    # dh_make --native --single

Press Enter when dh_make asks for confirmation. Then change the debian/rules file to the following (the stock file works, but it does not build the kernel modules). Recipe lines must be indented with a tab character:

    #!/usr/bin/make -f

    export KDIR="/usr/src/linux-3.10.27"

    override_dh_installdocs:
    < , >

    override_dh_installchangelogs:
    < >

    override_dh_auto_configure:
    	./configure \
    	--prefix=/usr \
    	--localstatedir=/var \
    	--sysconfdir=/etc \
    	--with-pacemaker \
    	--with-utils \
    	--with-km \
    	--with-udev \
    	--with-distro=debian \
    	--without-xen \
    	--without-heartbeat \
    	--without-legacy_utils \
    	--without-rgmanager \
    	--without-bashcompletion

    %:
    	dh $@

In Makefile.in, edit the SUBDIRS variable and remove the documentation directory from it; otherwise packaging fails with errors about the documentation.

Build it:

    # dpkg-buildpackage -us -uc -b

This produces the drbd_8.4.4_amd64.deb package.

That is all; nothing else needs to be built. Copy both packages to the servers and install them:

    # dpkg -i kernel-scst-image-3.10.27_1_amd64.deb
    # dpkg -i drbd_8.4.4_amd64.deb

Server setup

Network

I renamed the interfaces in /etc/udev/rules.d/70-persistent-net.rules as follows: int1-6 go to the switch, and drbd1-4 go to the neighboring server.

/etc/network/interfaces looks so frightening that you would not dream it up even in a nightmare:

    auto lo
    iface lo inet loopback

    # Interfaces
    auto int1
    iface int1 inet manual
        up ip link set int1 mtu 9000 up
        down ip link set int1 down

    auto int2
    iface int2 inet manual
        up ip link set int2 mtu 9000 up
        down ip link set int2 down

    auto int3
    iface int3 inet manual
        up ip link set int3 mtu 9000 up
        down ip link set int3 down

    auto int4
    iface int4 inet manual
        up ip link set int4 mtu 9000 up
        down ip link set int4 down

    auto int5
    iface int5 inet manual
        up ip link set int5 mtu 9000 up
        down ip link set int5 down

    auto int6
    iface int6 inet manual
        up ip link set int6 mtu 9000 up
        down ip link set int6 down

    # Management interface
    auto int1.2
    iface int1.2 inet manual
        up ip link set int1.2 mtu 1500 up
        down ip link set int1.2 down
        vlan_raw_device int1

    auto int2.2
    iface int2.2 inet manual
        up ip link set int2.2 mtu 1500 up
        down ip link set int2.2 down
        vlan_raw_device int2

    auto int3.2
    iface int3.2 inet manual
        up ip link set int3.2 mtu 1500 up
        down ip link set int3.2 down
        vlan_raw_device int3

    auto int4.2
    iface int4.2 inet manual
        up ip link set int4.2 mtu 1500 up
        down ip link set int4.2 down
        vlan_raw_device int4

    auto int5.2
    iface int5.2 inet manual
        up ip link set int5.2 mtu 1500 up
        down ip link set int5.2 down
        vlan_raw_device int5

    auto int6.2
    iface int6.2 inet manual
        up ip link set int6.2 mtu 1500 up
        down ip link set int6.2 down
        vlan_raw_device int6

    auto bond_vlan2
    iface bond_vlan2 inet static
        address 10.1.0.100
        netmask 255.255.255.0
        gateway 10.1.0.1
        slaves int1.2 int2.2 int3.2 int4.2 int5.2 int6.2
        bond-mode active-backup
        bond-primary int1.2
        bond-miimon 100
        bond-downdelay 200
        bond-updelay 200
        mtu 1500

    # iSCSI
    auto int1.24
    iface int1.24 inet manual
        up ip link set int1.24 mtu 9000 up
        down ip link set int1.24 down
        vlan_raw_device int1

    auto int2.25
    iface int2.25 inet manual
        up ip link set int2.25 mtu 9000 up
        down ip link set int2.25 down
        vlan_raw_device int2

    auto int3.26
    iface int3.26 inet manual
        up ip link set int3.26 mtu 9000 up
        down ip link set int3.26 down
        vlan_raw_device int3

    auto int4.27
    iface int4.27 inet manual
        up ip link set int4.27 mtu 9000 up
        down ip link set int4.27 down
        vlan_raw_device int4

    auto int5.28
    iface int5.28 inet manual
        up ip link set int5.28 mtu 9000 up
        down ip link set int5.28 down
        vlan_raw_device int5

    auto int6.29
    iface int6.29 inet manual
        up ip link set int6.29 mtu 9000 up
        down ip link set int6.29 down
        vlan_raw_device int6

    # DRBD bonding
    auto bond_drbd
    iface bond_drbd inet static
        address 192.168.123.100
        netmask 255.255.255.0
        slaves drbd1 drbd2 drbd3 drbd4
        bond-mode balance-rr
        mtu 9216

Since we want management access to the servers to be fault-tolerant as well, we use a little trick: the active-backup bond is assembled not from the physical interfaces but from VLAN subinterfaces. The server therefore stays reachable as long as at least one interface is up. This is overkill, but why not? At the same time, the same physical interfaces remain free to carry iSCSI traffic.
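To check which subinterface currently carries the management traffic, you can read the bonding status file that the kernel exposes under /proc/net/bonding. The helper below is a small sketch; the name `active_slave` is invented here, and it relies only on the "Currently Active Slave:" line of that file.

```shell
#!/bin/sh
# Print the currently active slave of a bond.
# $1: path to the bonding status file, e.g. /proc/net/bonding/bond_vlan2
active_slave() {
    sed -n 's/^Currently Active Slave: //p' "$1"
}

# Example call on a server configured as above:
#   active_slave /proc/net/bonding/bond_vlan2
```

Pulling the cable of the reported interface and calling the function again is a simple way to confirm that failover to another subinterface works.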

For replication, the bond_drbd interface was created in balance-rr mode, in which packets are simply sent round-robin across all interfaces. It was given an address from a private /24 network, but a /30 or /31 would do just as well, since there are only two hosts.

Since round-robin sometimes causes packets to arrive out of order, we raise the tolerance for out-of-order packets in /etc/sysctl.conf. The whole file is given below; I will not explain each option, as that would take too long. You can read up on them yourself if you wish.

    net.ipv4.tcp_reordering = 127
    net.core.rmem_max = 33554432
    net.core.wmem_max = 33554432
    net.core.rmem_default = 16777216
    net.core.wmem_default = 16777216
    net.ipv4.tcp_rmem = 131072 524288 33554432
    net.ipv4.tcp_wmem = 131072 524288 33554432
    net.ipv4.tcp_no_metrics_save = 1
    net.ipv4.tcp_window_scaling = 1
    net.ipv4.tcp_timestamps = 0
    net.ipv4.tcp_sack = 0
    net.ipv4.tcp_dsack = 0
    net.ipv4.tcp_fin_timeout = 15
    net.core.netdev_max_backlog = 300000
    vm.min_free_kbytes = 720896

In my tests the replication interface delivers about 3.7 Gbit/s, which is quite acceptable.

Since the server is multi-core, and both the network cards and the RAID controller can spread interrupt handling across several queues, I wrote a script that binds the interrupts to specific cores:

    #!/usr/bin/perl -w
    use strict;
    use warnings;

    my $irq = 77;     # first IRQ number to bind (specific to this server)
    my $ifs = 11;     # number of devices
    my $queues = 6;   # queues per device, one per core
    my $skip = 1;     # IRQs to skip after each device

    my @tmpl = ("0", "0", "0", "0", "0", "0");

    print "Applying IRQ affinity...\n";

    for(my $if = 0; $if < $ifs; $if++) {
        for(my $q = 0; $q < $queues; $q++, $irq++) {
            # Build a bitmask with only bit $q set, i.e. queue $q goes to core $q
            my @tmp = @tmpl;
            $tmp[$q] = 1;
            my $mask = join("", @tmp);
            my $hexmask = bin2hex($mask);
            #print $irq . " -> " . $hexmask . "\n";
            open(OUT, ">/proc/irq/".$irq."/smp_affinity");
            print OUT $hexmask."\n";
            close(OUT);
        }
        $irq += $skip;
    }

    # Convert a binary string (least significant bit first) to hex
    sub bin2hex {
        my ($bin) = @_;
        return sprintf('%x', oct("0b".scalar(reverse($bin))));
    }

Disks

Before exporting the disks, we encrypt them and back up the LUKS headers with the master keys, just in case:

    # cryptsetup luksFormat --cipher aes-cbc-essiv:sha256 --hash sha256 /dev/sdb
    # cryptsetup luksFormat --cipher aes-cbc-essiv:sha256 --hash sha256 /dev/sdc
    # cryptsetup luksHeaderBackup /dev/sdb --header-backup-file /root/header_sdb.bin
    # cryptsetup luksHeaderBackup /dev/sdc --header-backup-file /root/header_sdc.bin

Memorize the passphrase and never forget it, and hide the header backups somewhere far away.

Keep in mind that after you change the passphrase on the volumes, a header backup made earlier can still be unlocked with the old passphrase.

Next, I wrote a script to simplify unlocking:

    #!/usr/bin/perl -w
    use strict;
    use warnings;
    use IO::Prompt;

    # LUKS UUID => device-mapper name
    my %crypto_map = (
        '1bd1f798-d105-4150-841b-f2751f419efc' => 'VM_STORAGE_1',
        'd7fcdb2b-88fd-4d19-89f3-5bdf3ddcc456' => 'VM_STORAGE_2'
    );

    my $i = 0;
    my $passwd = prompt('Password: ', '-echo' => '*');

    foreach my $dev (sort(keys(%crypto_map))) {
        $i++;
        if(-e '/dev/mapper/'.$crypto_map{$dev}) {
            print "Mapping '".$crypto_map{$dev}."' already exists, skipping\n";
            next;
        }
        # Note: the passphrase is interpolated into a shell command, so it
        # must not contain shell metacharacters such as ", $ or backticks.
        my $ret = system('echo "'.$passwd.'" | /usr/sbin/cryptsetup luksOpen /dev/disk/by-uuid/'.$dev.' '.$crypto_map{$dev});
        if($ret == 0) {
            print $i . ' Crypto mapping '.$dev.' => '.$crypto_map{$dev}.' added successfully' . "\n";
        } else {
            print $i . ' Failed to add mapping '.$dev.' => '.$crypto_map{$dev} . "\n";
            exit(1);
        }
    }

The script works with disk UUIDs so that each disk is always identified unambiguously, without depending on /dev/sd* names.

Encryption speed depends on the processor frequency and the number of cores, and writes parallelize better than reads. You can check how fast the server encrypts with the following simple method:

    # echo "0 268435456 zero" | dmsetup create zerodisk
    # cryptsetup --cipher aes-cbc-essiv:sha256 --hash sha256 create zerocrypt /dev/mapper/zerodisk
    Enter passphrase:

    # dd if=/dev/zero of=/dev/mapper/zerocrypt bs=1M count=16384
    16384+0 records in
    16384+0 records out
    17179869184 bytes (17 GB) copied, 38.3414 s, 448 MB/s
    # dd of=/dev/null if=/dev/mapper/zerocrypt bs=1M count=16384
    16384+0 records in
    16384+0 records out
    17179869184 bytes (17 GB) copied, 74.5436 s, 230 MB/s

As you can see, the speeds are not great, but they will rarely be reached in practice anyway, because random access usually dominates.

For comparison, here are the results of the same test on a newer Haswell-based Xeon E3-1270 v3:

    # dd if=/dev/zero of=/dev/mapper/zerocrypt bs=1M count=16384
    16384+0 records in
    16384+0 records out
    17179869184 bytes (17 GB) copied, 11.183 s, 1.5 GB/s
    # dd of=/dev/null if=/dev/mapper/zerocrypt bs=1M count=16384
    16384+0 records in
    16384+0 records out
    17179869184 bytes (17 GB) copied, 19.4902 s, 881 MB/s

Now that is much more fun. Clock frequency appears to be the decisive factor.

With AES-NI disabled it would be several times slower.
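The MB/s figures that dd prints are simply bytes divided by seconds, in decimal megabytes. The sketch below recomputes them from the numbers in the outputs above; `throughput_mbs` is an illustrative helper name, and awk is used only because plain shell arithmetic cannot handle fractional seconds.

```shell
#!/bin/sh
# Throughput in decimal MB/s, rounded to the nearest integer.
# $1: bytes copied, $2: elapsed time in seconds.
throughput_mbs() {
    awk -v bytes="$1" -v secs="$2" 'BEGIN { printf "%.0f\n", bytes / secs / 1000000 }'
}

throughput_mbs 17179869184 38.3414   # E5-2620, write
throughput_mbs 17179869184 74.5436   # E5-2620, read
throughput_mbs 17179869184 19.4902   # E3-1270 v3, read
```

The same helper is handy for comparing your own dd runs when the block count or device differs from the ones used here.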

DRBD

Now we configure replication. The configs on both nodes must be 100% identical.

/etc/drbd.d/global_common.conf

    global {
        usage-count no;
    }
    common {
        protocol B;
        handlers {
        }
        startup {
            wfc-timeout 10;
        }
        disk {
            c-plan-ahead 0;
            al-extents 6433;
            resync-rate 400M;
            disk-barrier no;
            disk-flushes no;
            disk-drain yes;
        }
        net {
            sndbuf-size 1024k;
            rcvbuf-size 1024k;
            max-buffers 8192; # x PAGE_SIZE
            max-epoch-size 8192; # x PAGE_SIZE
            unplug-watermark 8192;
            timeout 100;
            ping-int 15;
            ping-timeout 60; # x 0.1sec
            connect-int 15;
            timeout 50; # x 0.1sec
            verify-alg sha1;
            csums-alg sha1;
            data-integrity-alg crc32c;
            cram-hmac-alg sha1;
            shared-secret "ultrasuperdupermegatopsecretpassword";
            use-rle;
        }
    }

The most interesting parameter here is the protocol, so let us compare the options.

A write is considered complete once the block has been written ...

- A - to the local disk and has reached the local send buffer

- B - to the local disk and has reached the remote receive buffer

- C - to both the local and the remote disk

The slowest (that is, highest-latency) and at the same time the most reliable is C; I chose the middle ground, B.

Next come the definitions of the resources DRBD works with and of the nodes that take part in their replication.

/etc/drbd.d/VM_STORAGE_1.res

    resource VM_STORAGE_1 {
        device /dev/drbd0;
        disk /dev/mapper/VM_STORAGE_1;
        meta-disk internal;
        on vmstor1 {
            address 192.168.123.100:7801;
        }
        on vmstor2 {
            address 192.168.123.200:7801;
        }
    }

/etc/drbd.d/VM_STORAGE_2.res

    resource VM_STORAGE_2 {
        device /dev/drbd1;
        disk /dev/mapper/VM_STORAGE_2;
        meta-disk internal;
        on vmstor1 {
            address 192.168.123.100:7802;
        }
        on vmstor2 {
            address 192.168.123.200:7802;
        }
    }

Each resource has its own port.

Now initialize the metadata of the DRBD resources and bring them up; this must be done on each server:

    # drbdadm create-md VM_STORAGE_1
    # drbdadm create-md VM_STORAGE_2
    # drbdadm up VM_STORAGE_1
    # drbdadm up VM_STORAGE_2

Next, pick one server (it can be a different one for each resource) and declare it primary; the initial synchronization will run from it to the other node:

    # drbdadm primary --force VM_STORAGE_1
    # drbdadm primary --force VM_STORAGE_2

And off we go: synchronization has started.

Depending on the size of the arrays and the speed of the network, it will take a long or very long time.
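For a rough idea of what "long" means, divide the array size by the resync rate. The sketch below assumes a 12 TB array (8 x 3 TB disks in RAID10) and treats the configured resync-rate of 400M as 400 MiB/s; it ignores metadata overhead and any disk or network bottleneck, so take the result as a lower bound. The name `sync_time` is made up for this example.

```shell
#!/bin/sh
# Estimate initial sync time.
# $1: device size in bytes, $2: sustained resync rate in MiB/s.
sync_time() {
    size_bytes=$1
    rate_mib=$2
    secs=$(( $size_bytes / ($rate_mib * 1048576) ))
    echo "$(( $secs / 3600 ))h $(( $secs % 3600 / 60 ))m"
}

sync_time 12000000000000 400   # one 12 TB array at 400 MiB/s
```

Under these assumptions each array needs close to eight hours, which is a good reason to start the synchronization and go do something else.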

You can watch the progress with watch -n0.1 cat /proc/drbd; it is very soothing and puts you in a philosophical mood.

In principle the devices can already be used while synchronization is running, but I advise you to take a break instead :)

The end of the first part

I think that is enough for one part; there is already a lot of information to absorb.

In the second part I will cover setting up the cluster manager and configuring the ESXi hosts to work with this storage.

Source: https://habr.com/ru/post/209460/
