What do NASA, Oculus Rift and Kinect 2 have in common?

NASA's Jet Propulsion Laboratory (JPL) has long been looking for a simpler, more natural way to control robots in space. In earlier experiments it settled on the Leap Motion controller for remotely driving a rover, and on the Oculus Rift paired with the Virtuix Omni for a virtual tour of the Red Planet.

So it made sense for JPL to sign up for the Kinect for Windows developer program and get its hands on the newer, more capable Kinect 2 (which, oddly enough, is not available as a stand-alone device, separate from the Xbox One) to see what Microsoft's hardware could offer robotics.

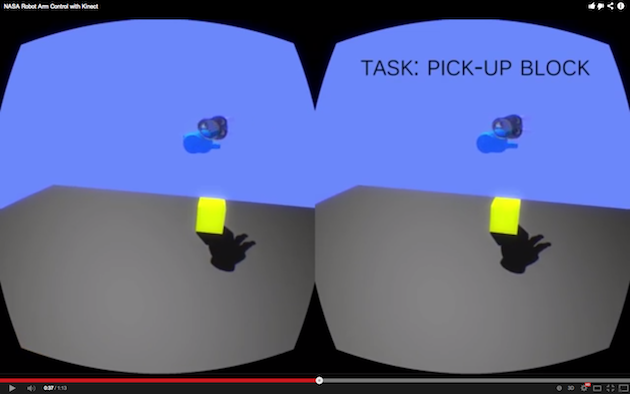

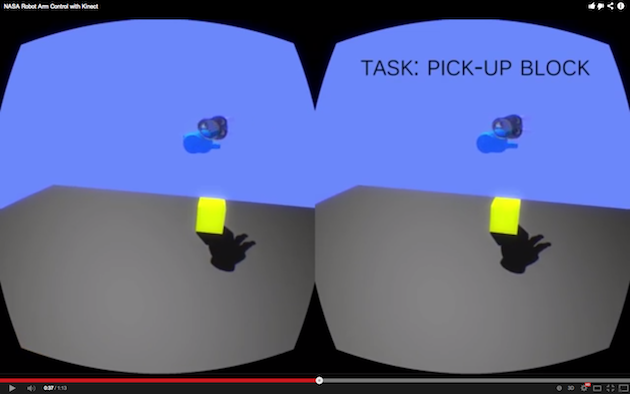

The lab received its dev kit at the end of November, and after several days of work it had connected the Oculus Rift to the Kinect 2 so that it could manipulate an off-the-shelf robotic arm. According to our interview with a group of JPL engineers, the combination of the Oculus head-mounted display and the Kinect motion sensor has produced the “most exciting interface” JPL has built to date.

JPL took part in the first Kinect developer program, so it was already familiar with how Kinect works. It built a number of applications and worked with Microsoft to release a game in which the player is tasked with landing Curiosity safely on Mars. The second Kinect, however, is considerably more precise and accurate than the first. “It allowed us to track the open and closed states of the hand and the rotation of the wrist,” says engineer Victor Luo. “With all these new tracking points and rotational degrees of freedom, we were able to better manipulate the arm.”

Alex Menzies, also a human-machine interaction engineer, describes the combination of a head-mounted display with the Kinect motion sensor as a revolution. “For the first time, we can control the orientation and rotation of a robotic limb with a consumer-grade sensor. Plus, we can really immerse someone in the environment so that the robot feels like an extension of their own body: you look at the scene from a human-like perspective with full stereo vision. All the visual input is correctly mapped to where your limbs are in the real world...” This, he says, is very different from simply watching yourself on a screen, where it is hard to match your own movements to what you see. “I felt I had a much better understanding of where the objects were in general.”

As you might imagine, signal delay is a pressing problem, since most of the robots are in space. Jeff Norris, who leads mission operations innovation at JPL, says that setups like this are used mainly to indicate goals that the robots then pursue.

Luo and Menzies point out, however, that the interface draws a ghosted hand showing where your own hand is and a solid-colored one showing where the robot currently is, so the delay is visible on screen.

“It feels quite natural, because the ghosted hand moves immediately and you see the robot catching up to that position,” says Menzies. “You are commanding a little ahead, but you do not feel the lag.”
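The ghost-versus-solid display described above can be illustrated with a toy simulation. This is only a sketch under stated assumptions, not JPL's implementation: the names `DelayedArm`, `delay_steps`, `max_step` and `simulate` are hypothetical, the arm is reduced to a single 1-D position, and the signal delay is modelled as a fixed number of ticks.

```python
from collections import deque


class DelayedArm:
    """Toy 1-D model (hypothetical): commands reach the robot after a fixed delay."""

    def __init__(self, delay_steps, max_step):
        # Commands still "in flight" to the robot; pre-filled with the rest position.
        self.in_flight = deque([0.0] * delay_steps)
        self.max_step = max_step  # how far the robot can move per tick
        self.ghost = 0.0          # operator's hand, drawn immediately (ghosted)
        self.robot = 0.0          # robot's current position (solid color)

    def tick(self, hand_position):
        # The ghost follows the operator's hand with no delay.
        self.ghost = hand_position
        # The command is queued; the robot only sees the oldest queued command.
        self.in_flight.append(hand_position)
        target = self.in_flight.popleft()
        # The robot moves toward the delayed target at a limited speed.
        error = target - self.robot
        self.robot += max(-self.max_step, min(self.max_step, error))
        return self.ghost, self.robot


def simulate(hand_positions, delay_steps, max_step):
    """Return a list of (ghost, robot) pairs, one per tick."""
    arm = DelayedArm(delay_steps, max_step)
    return [arm.tick(p) for p in hand_positions]


if __name__ == "__main__":
    for ghost, robot in simulate([2.0] * 5, delay_steps=3, max_step=1.0):
        print(f"ghost={ghost:.1f}  robot={robot:.1f}")
```

In this sketch the ghost jumps to the commanded position on the first tick, while the solid robot position starts moving only once the delayed command arrives and then catches up, which is the effect Menzies describes.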

“We are building partnerships with commercial companies that make devices which may not have been built primarily for space exploration,” says Luo. “That lets us get a lot more done for space exploration than if we started from scratch. It also means we can build systems that are accessible to the general public. Imagine how inspiring it would be for a seven-year-old to control a space robot with a controller they already know!”

Of course, the ultimate goal is to control not just a robotic arm but space robots as a whole. As the demo shows, JPL hopes to bring the same technology to machines such as Robonaut 2, which is currently deployed aboard the ISS. “We want to integrate this work and eventually extend it to controlling robots like Robonaut 2,” says Luo. “There is work that is too boring, dirty or even dangerous for an astronaut to do, but fundamentally we still want to be in control of the robot... If we can make the robot more efficient for us, we can do more in less time.”

Original article: www.engadget.com/2013/12/23/nasa-jpl-control-robotic-arm-kinect-2

P.S. One thing to add: these are almost the same “remotes” that Lem described in one of his books. He saw far ahead.

Source: https://habr.com/ru/post/209220/
