Using XenServer and other free and open-source software in manual testing

I want to describe how XenServer took root in our testing department, and say a little about the other free and open-source tools we use (DRBL + Clonezilla, Tape Redirector, MHVTL). We chose these products for purely practical rather than ideological reasons: they are convenient and scalable. They also come with a number of problems, which I will cover in this article too.

Under the cut a lot of text and images.

')

I work in the Acronis testing team in the user support department, reproducing user problems. Starting with imitation of disk environment and hardware, and ending with software and its specific settings. The task was to ensure the operation of the test bench with the help of two workstations. ESXi, Hyper-V, Proxmox, and others were tried, but still stopped at using XenServer. And that's what came out of it.

Initially, there was one disk on which XenServer 4.2 was installed. At first, the speed suited. A little later, the server was already used by two people, a heavy environment appeared (for example, Exchange 2003 with a 300GB database, which constantly worked under load) and it immediately became clear that the current performance was not enough for comfortable work. Virtual machines were loaded for a long time (and at the same time it runs an average of 20 pieces on one server), IO Wait often reached several seconds. We had to do something.

The first thing that came to mind was RAID, but this was not implemented quickly, and a solution was needed yesterday. Just then, ZFS was added to the Debian repository and it was decided to try it.

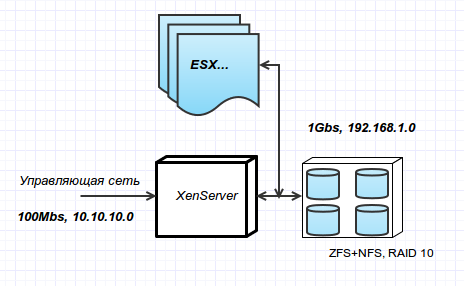

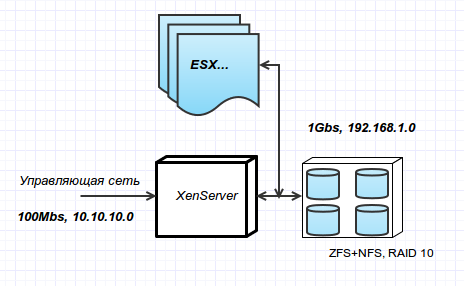

Each of the two workstations has a motherboard with 4 SATA2 ports, 16GB of RAM and gigabit network cards.

The first machine was allocated to the hypervisor and is loaded from a USB flash drive.

On the second machine was installed server Ubuntu 11.04, all the necessary packages for ZFS. Installed 4 disk 1 TB each. RAID 10 was chosen. Since there are only 4 SATA ports on the motherboard, the OS is also installed on a USB flash drive.

The hypervisor itself is connected to ZFS via a gigabit switch.

Experiments were carried out, articles were read, as a result, support for GZIP compression was enabled on ZFS, check-out checking was turned off, and deduplication was also refused. This turned out to be the most optimal solution, all 8 cores are very rarely loaded at 100%, and there is enough memory. Unfortunately, the cache on the SSD could not be tested :)

The file system itself is exported out through NFS, natively supported by ZFS itself. And XenServer, and ESX work with NFS storages. This made it possible to use the same storage at the same time for 2 XenServers and 3 ESXi. Hyper-V, which with an enviable persistence in Server 2012 refuses to work with NFS, is knocked out of this series, but this is on MS’s conscience.

A configured ZFS looks like this:

The speed of writing to a disc roughly corresponds to twice the speed of an individual disc.

Currently, up to 40 virtual machines run simultaneously without any performance problems, whereas previously 20 machines led to IOW sags for up to 5 seconds. Now, after a year and a half from the launch of ZFS, the solution can be considered reliable, fast and extremely budget. Raised NFS and CIFS servers on storage allow it to be used for other purposes as well:

XenServer allows you to create ISO repositories on CIFS network drives. Products before release testing are assembled into an ISO image (installers for Windows and Linux of different localizations), which, in turn, are uploaded to a network drive in the internal gigabit network. As a result, with one mouse movement (subjectively, XenCenter is much more convenient and faster in such work than vSphere) or a script, we insert this ISO into a virtual CDROM (dozens of machines are possible at the same time) and set the product directly from disk, which saves time on copy large (2GB +) files. Of course, such a linear reading sags the network, especially if the installation goes straight from 5+ machines, but it is still very convenient.

The gigabit network through which the storage is connected is also available for virtual machines. Thus, you can use CIFS for any other tests. For convenience, a ZFS server was also raised on the ZFS machine.

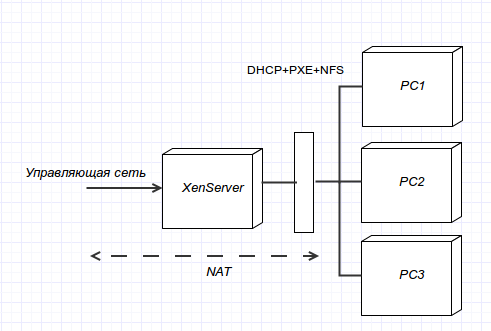

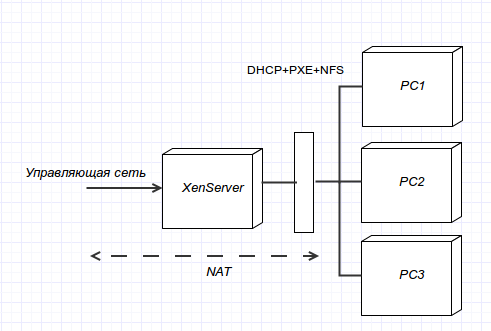

In addition to virtual machines, it is necessary to test on ordinary workstations. This testing and with tape drives and all sorts of REV, RDX discs, etc. You need to constantly and quickly deploy different environments on the machines. Whether it’s an ESX hypervisor or Windows 2008R2 with Hyper-V or SLES with a raised iSCSI multipath. DRBL is used for this purpose .

DRBL in combination with Clonezilla allows you to quickly deploy images of PXE with flexible scripts, and also serves as a NAT server for already deployed machines.

Machines on the internal network have access via NAT to the external network, and they themselves access via RDP via iptables.

A set of different tape drives is connected to one machine that uses the free Tape Redirector , so any virtual machine can use them via iSCSI. There is also a separate virtual machine with a raised MHVTL, but hardware drives are also needed - not all problems appear on the VTL.

Deployment / cloning is done in two clicks using a utility written in Perl + GTK. It works quite simply - a team of blocks is built and executed via SSH. Who cares, the repository here. The code is raw, but it works github.com/Pugnator/GTKdrbl

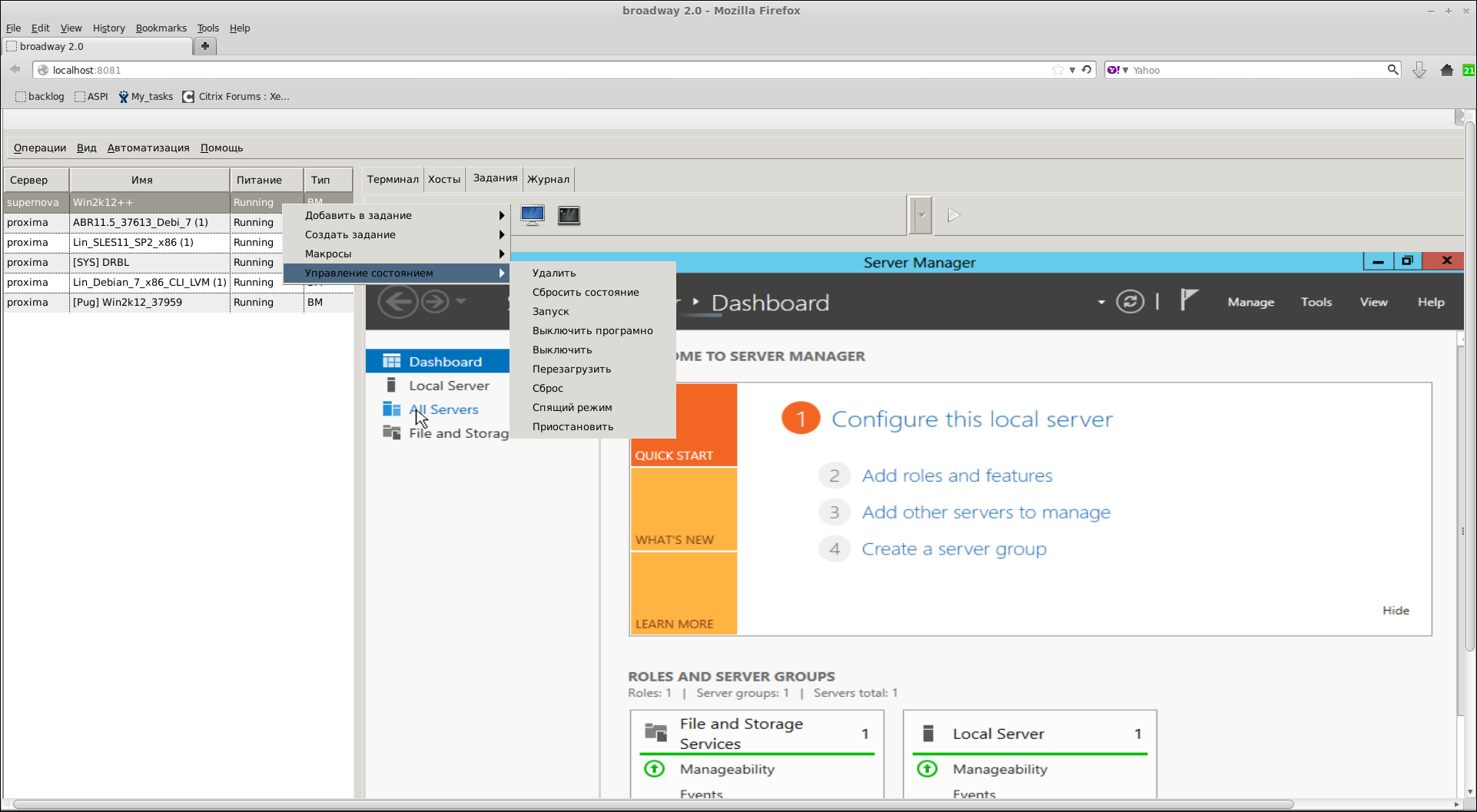

Subjectively, the user-friendly interface is represented only by Citrix - XenCenter, but, unfortunately, it is only for Windows. In addition, for some reason, important and useful features were not brought to the interface, for example, the ability to pause a virtual machine or, unfortunately, often the necessary XAPI reboot option, when a virtual machine hangs tightly

There are other options, such as sourceforge.net/projects/openxenmanager , but they were not stable enough.

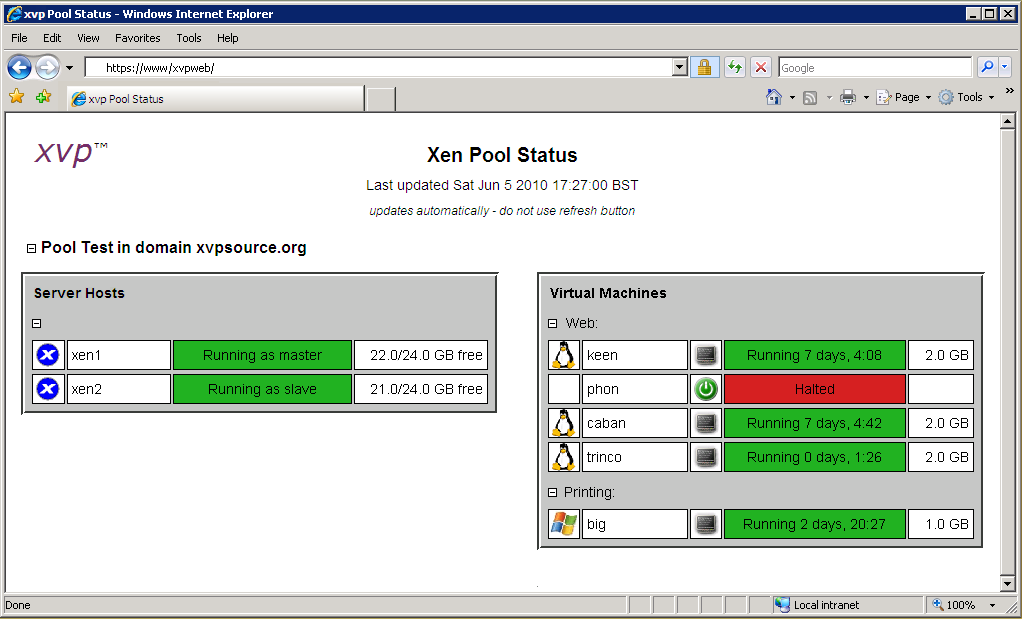

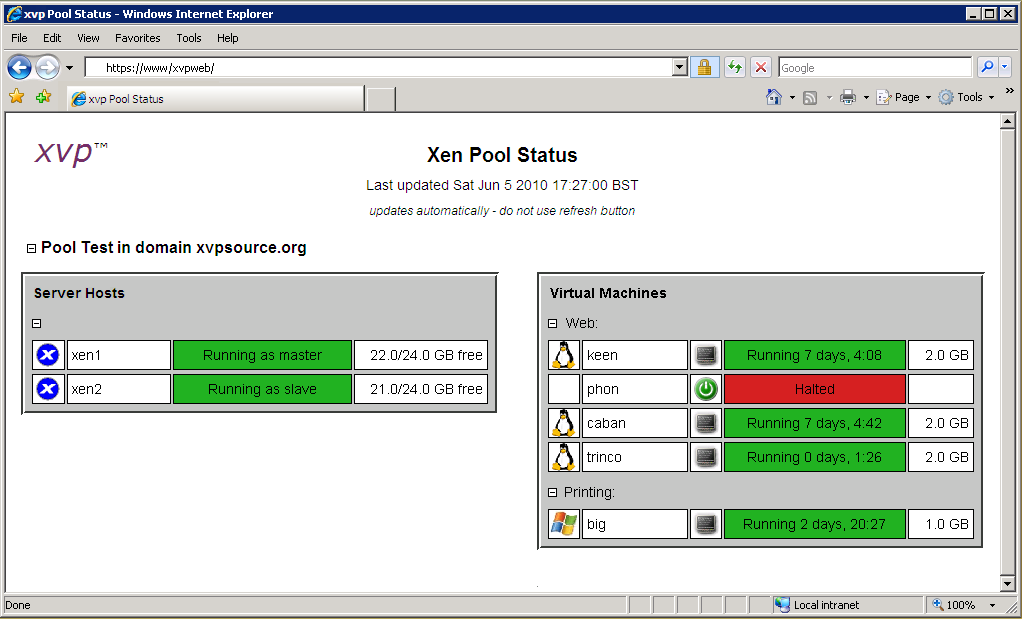

There is a VNC web proxy www.xvpsource.org

Convenient, but requires Java, included in the browser (there are problems), well, first of all it is VNC, you can not work with a full interface.

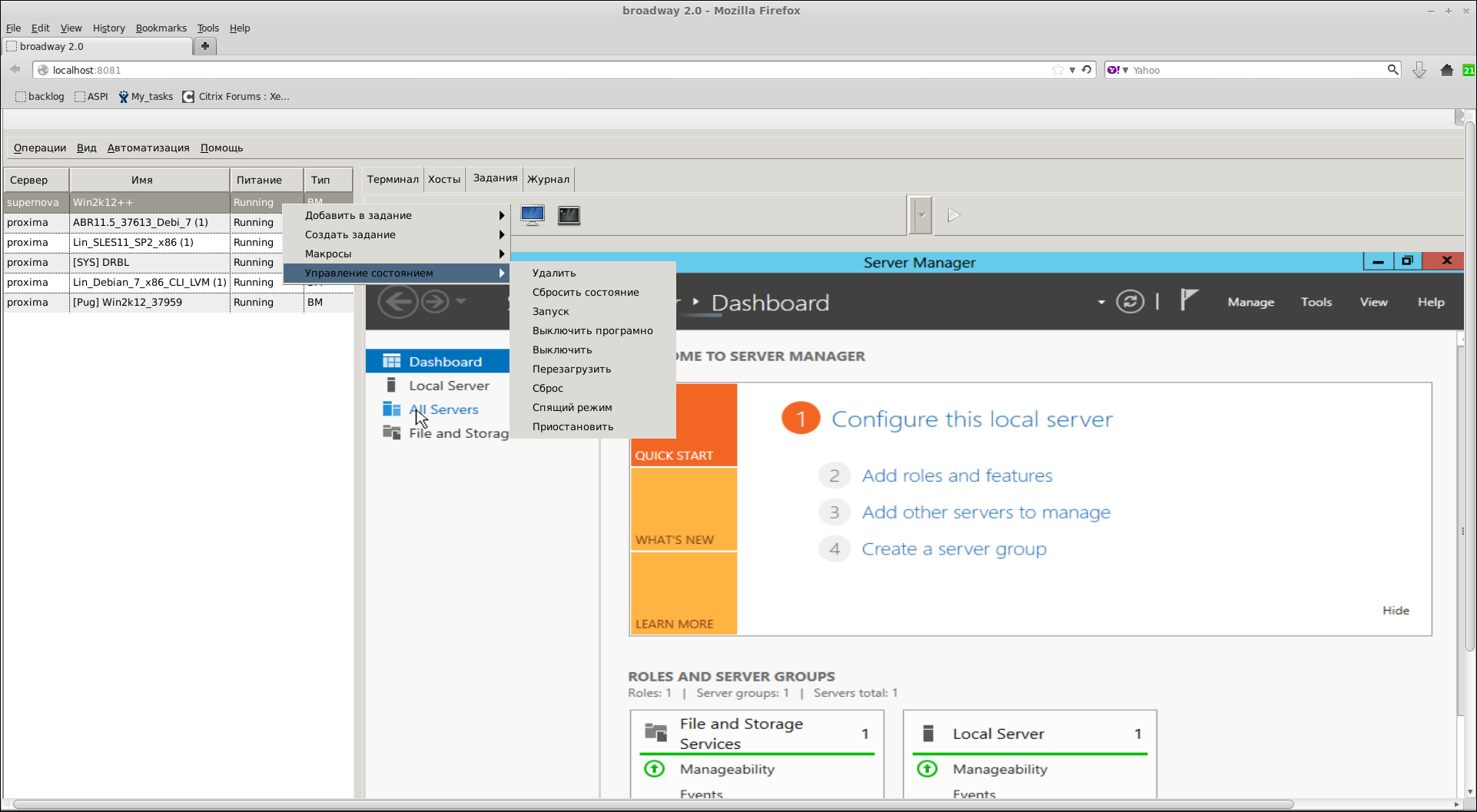

As a result, a client for Linux was written to GTK3, which can also be used on the web. GTK Broadway allows you to get such a tool via HTML5 + WebSockets in your browser

This technology does not allow using it for multiple users at the same time, but with the help of it you can fully work both on Linux and via the web, only by changing the launch parameters (for example, bring in two icons).

When using HTML5 frontend, many restrictions are imposed on the application itself, and these restrictions are for some reason undocumented. An example of a limitation is the inability to use icons in the tray, relative positioning of the window, and so on. The consequences are from unstable work to falls.

The documentation is all bad, at the moment the study of broadway is the reading of the GTK3 source codes, since there is nothing but a description page and news from 3 years ago on the broadway.

The API exists for C, C #, Java, Python, and Powershell. For C, the build is only for Linux, but the method of minor refinement of the source code (at that time there was no implementation of some function in mingw) everything was successfully assembled under Windows (MinGW). The API works via HTTP (S). The API also provides fairly low-level access to virtual machines.

I wanted a lot, from quickly viewing the MBR by clicking the mouse, before retrieving a file from a virtual machine or downloading it back while the virtual machine is turned off.

This may be necessary, for example, in the case of BSOD - extract the registry (and / or edit it and upload it back, for example, turning off any driver), or edit the bootloader options (enable debug through the serial port) and much more. For such purposes, you have to resort to using a boot disk.

But it is possible and otherwise, it is possible to export a virtual machine via HTTP GET in the XVA format, which is a TAR archive, inside which there are disk blocks.

If you read this archive on the fly, you can easily get the required MBR, and having learned the offsets and types of partitions, read the files. But at the moment only MBR extraction is implemented. Starting with version XenServer 6.2, it is possible to export a RAW disk. In future versions, XenServer promises to introduce the ability to export only disk delta from an arbitrary offset, which opens up new possibilities.

You can control the work with the virtual machine network in different ways. This is usually wireshark / tcpdump installed in the virtual machine. The necessary dump is collected and transferred to another place for study. But there is a better way - each running virtual machine has its own dom-id, in accordance with it there is also a VIF device of the vifDOMID.0 type, accessible from the hypervisor. Having connected via SSH to the hypervisor, you can easily get a dump for any arbitrary enabled virtual machine (of course, having added network cards), which makes testing cleaner and more convenient (no need to install PCAP drivers). Further, according to the advice of Q & A, the program makes a pipe and starts Wireshark. And in real time we receive / filter traffic.

The API does not provide any means similar to guest operation in vix vmWare, for example, copying files .

And if with the installation of the main software, the problem is solved with the use of ISO on the gigabit network, then with the transfer of commands / logging, viewing the data is not so smooth. It is necessary to use intermediate network drives, and this is not always possible (test conditions, isolated network). In any case, it is time consuming and inconvenient.

The very first idea that came to mind is to use a virtual serial port. You can activate the virtual com-port, which means XenServer broadcast over TCP. Now, if the connection is open at the corresponding address, we can send / receive messages at the speed of 115200. On the virtual machine, the background program “serial port-CLI proxy” is running, which performs the translation of commands from the serial port and returns the results.

It was not without pitfalls:

1) Transmission is terminated when 65535 bytes are reached, if the client (virtual machine) has not transmitted at least one byte during this time.

2) Turning on the port only works after restart. That is, it is necessary to turn on either on the virtual machine turned off, or restart it.

3) If, for any reason, the connection fails, it is not possible to restore it before rebooting.

4) Well, the worst - if the TCP server does not respond - the virtual machine will freeze at the start.

For these reasons, other methods were searched in parallel, for example, through the xenstore . This is a repository available for different domains. Including virtual machines. There is a buffer of about two megabytes, and quite fast recording. But reading is slower than 115200, xen tools are required (which is not always possible) and the code needs to be thoroughly tested. For example, if you write more than XENSTORE_PAYLOAD_MAX , judging by the comments in the source code for the drivers, this will have fatal consequences.

At the moment, I am thinking about using the paravirtual serial port of the virtual machine through the ssh tunnel on the hypervisor, by analogy with the method mentioned above about network cards. Apparently, this is the safest and fastest method. At the moment, only verified that this is feasible.

In exactly the same way, you can debug the Windows / Linux kernel by sending a serial port over TCP / SSH, and WinDbg, for example, is already connected locally via the pipe. For such purposes, a separate virtual machine was created with a set of characters for all available versions of Windows .

Working with the XenServer API and studying it, I experienced many pros and cons of the opensource. Opportunities run into weak documentation. If the VIX is described in great detail, then with the same xenserver api - 3 examples, 4 test files and comments in the source headers. The code is understandable, but how to connect individual functions is understandable either to developers or to those who deeply know the Xen architecture. For example, such a task as finding out the size of a disk is not described anywhere. And without knowing the architecture - not too easy to guess. Of course, over time, penetrating and delving into the structure of Xen, much has become clearer. But I didn’t get an answer to many questions, and no one answers in the IRC chat rooms on weekends - single visitors write that “today is the resurrection” :).

But there is progress, wiki, articles, examples with the demonstration of new features have been added over the year. I really hope that in the future XenServer will be able to become a strong player in the market with a good set of third party software

Under the cut a lot of text and images.

')

I work in the Acronis testing team within the user support department, where we reproduce users' problems, from imitating the disk environment and hardware to the software and its specific settings. The task was to run a test bench on just two workstations. We tried ESXi, Hyper-V, Proxmox and others, but settled on XenServer. Here is what came of it.

Hardware

Initially there was a single disk with XenServer 4.2 installed on it. At first the speed was adequate. A little later two people were using the server and heavy environments appeared (for example, Exchange 2003 with a 300 GB database constantly under load), and it immediately became clear that performance was no longer enough for comfortable work. Virtual machines took a long time to boot (and a server runs about 20 of them at a time on average), and I/O wait often reached several seconds. Something had to be done.

The first thing that came to mind was RAID, but that could not be set up quickly, and a solution was needed yesterday. Just then ZFS was added to the Debian repository, and we decided to try it.

Each of the two workstations has a motherboard with 4 SATA2 ports, 16 GB of RAM and gigabit network cards.

The first machine was dedicated to the hypervisor and boots from a USB flash drive.

The second machine got Ubuntu Server 11.04 with all the packages needed for ZFS, and four 1 TB disks in a RAID 10 layout. Since the motherboard has only 4 SATA ports, the OS is installed on a USB flash drive here as well.

The hypervisor is connected to the ZFS machine through a gigabit switch.

After some experiments and a lot of reading, we enabled GZIP compression on ZFS, turned off checksum verification, and decided against deduplication. This turned out to be the best configuration: all 8 cores are very rarely loaded to 100%, and there is enough memory. Unfortunately, we could not test an SSD cache :)

The file system is exported over NFS, which ZFS supports natively. Both XenServer and ESX work with NFS storage, which made it possible to use the same storage for 2 XenServer and 3 ESXi hosts at once. Hyper-V stands apart: in Server 2012 it stubbornly refuses to work with NFS, but that is on MS's conscience.

A configured ZFS looks like this:

    root@xenzfs:~# zpool status
      pool: xen
     state: ONLINE
    config:

            NAME        STATE     READ WRITE CKSUM
            xen         ONLINE       0     0     0
              mirror-0  ONLINE       0     0     0
                sda     ONLINE       0     0     0
                sdb     ONLINE       0     0     0
              mirror-1  ONLINE       0     0     0
                sdc     ONLINE       0     0     0
                sdd     ONLINE       0     0     0

    errors: No known data errors
    root@xenzfs:~# zpool list xen
    NAME   SIZE  ALLOC   FREE    CAP  DEDUP  HEALTH  ALTROOT
    xen   1.81T  1.57T   245G    86%  1.00x  ONLINE  -

Write speed is roughly twice that of a single disk.
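The SIZE column in the `zpool list` output can be sanity-checked with a little arithmetic. This is a minimal sketch, assuming each "1 TB" disk holds exactly 10^12 bytes (real disks differ slightly) and that `zpool list` reports binary units; the function name is made up for illustration.

```python
DISK_BYTES = 10**12   # assumption: one "1 TB" disk in decimal bytes
DISKS = 4
MIRROR_WIDTH = 2      # two-way mirrors, as in the zpool status output


def usable_tib(disks=DISKS, disk_bytes=DISK_BYTES, width=MIRROR_WIDTH):
    """Usable capacity of striped mirrors, in TiB (2**40 bytes)."""
    vdevs = disks // width            # each mirror stores one disk's worth of data
    return vdevs * disk_bytes / 2**40


print(f"{usable_tib():.3f} TiB")
```

The result is about 1.82 TiB, which is in line with the 1.81T that `zpool list` reports for the pool.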

Currently up to 40 virtual machines run simultaneously without any performance problems, whereas previously 20 machines caused I/O wait stalls of up to 5 seconds. Now, a year and a half after ZFS went live, the solution can be considered reliable, fast and extremely cheap. The NFS and CIFS servers running on the storage machine also make it useful for other purposes.

XenServer lets you create ISO repositories on CIFS network shares. Before release testing, products are assembled into an ISO image (Windows and Linux installers in different localizations), which is then uploaded to a network share on the internal gigabit network. As a result, with one mouse movement (subjectively, XenCenter is much more convenient and faster than vSphere for this kind of work) or a script, we insert this ISO into the virtual CD-ROM (dozens of machines at once if needed) and install the product straight from the disc, which saves the time otherwise spent copying large (2 GB+) files. Of course, such sequential reading bogs down the network, especially when 5 or more machines install at once, but it is still very convenient.

The gigabit network that connects the storage is also available to the virtual machines, so CIFS can be used for any other tests. For convenience, a ZFS server was also raised on the ZFS machine.

Besides virtual machines, we also need to test on ordinary workstations: this covers tape drives, removable media such as REV and RDX, and so on. Different environments have to be deployed on these machines constantly and quickly, whether it is an ESX hypervisor, Windows 2008 R2 with Hyper-V, or SLES with iSCSI multipath configured. DRBL is used for this purpose.

DRBL combined with Clonezilla lets you quickly deploy images over PXE with flexible scripting, and it also serves as a NAT server for the machines already deployed.

Machines on the internal network reach the external network through NAT, and they are themselves reachable over RDP through iptables port forwarding:

    iptables -t nat -A PREROUTING -i eth0 -p tcp --dport 3390 -j DNAT --to 192.168.1.50:3389
    iptables -t nat -A PREROUTING -i eth0 -p tcp --dport 3391 -j DNAT --to 192.168.1.51:3389
    iptables -t nat -A PREROUTING -i eth0 -p tcp --dport 3392 -j DNAT --to 192.168.1.52:3389

A set of different tape drives is attached to one machine running the free Tape Redirector, so any virtual machine can use them over iSCSI. There is also a separate virtual machine running MHVTL, but hardware drives are still needed, since not every problem shows up on a VTL.
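The RDP forwarding rules above follow a regular pattern: consecutive external ports map to port 3389 on consecutive internal hosts. That makes them easy to generate when machines are added. This is a minimal sketch; the function name and its defaults are illustrative and not part of DRBL or of the utility described in this article.

```python
def rdp_forward_rules(hosts, first_port=3390, iface="eth0"):
    """Return one DNAT rule per internal host, using consecutive external ports."""
    rules = []
    for offset, host in enumerate(hosts):
        rules.append(
            f"iptables -t nat -A PREROUTING -i {iface} -p tcp "
            f"--dport {first_port + offset} -j DNAT --to {host}:3389"
        )
    return rules


# Print the rules for the three machines used in the example above.
for rule in rdp_forward_rules(["192.168.1.50", "192.168.1.51", "192.168.1.52"]):
    print(rule)
```

The output is only text; applying the rules still means running them as root on the NAT machine.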

Deployment and cloning take two clicks in a utility written in Perl + GTK. It works quite simply: a command is assembled from blocks and executed over SSH. For anyone interested, the repository is here; the code is raw, but it works: github.com/Pugnator/GTKdrbl

Interface

Subjectively, the only user-friendly interface is Citrix's own XenCenter, but unfortunately it is Windows-only. In addition, for some reason important and useful features never made it into the interface, for example the ability to pause a virtual machine, or the XAPI restart option that is, sadly, often needed when a virtual machine hangs hard.

There are alternatives, such as sourceforge.net/projects/openxenmanager, but they were not stable enough.

There is also a VNC web proxy: www.xvpsource.org

It is convenient, but it requires Java enabled in the browser (which causes problems), and above all it is just VNC: it does not give you a full management interface.

As a result, a Linux client was written in GTK3 that can also be used on the web: GTK Broadway makes such a tool available in the browser over HTML5 + WebSockets.

This technology does not support several users at once, but with it the same application works fully both on Linux and over the web just by changing the launch parameters (for example, set up as two launcher icons).

The HTML5 frontend imposes many restrictions on the application itself, and for some reason these restrictions are undocumented. Examples are the inability to use tray icons, relative window positioning, and so on. The consequences range from unstable behavior to crashes.

The documentation is poor across the board: at the moment, studying Broadway means reading the GTK3 source code, because there is nothing about Broadway apart from a description page and news from 3 years ago.

API

The API has bindings for C, C#, Java, Python and PowerShell. The C library builds only on Linux out of the box, but after minor changes to the source (at the time MinGW lacked an implementation of some function) everything built successfully on Windows with MinGW. The API works over HTTP(S) and also provides fairly low-level access to virtual machines.
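Because the API is plain XML-RPC over HTTP(S), it can be called with nothing but the Python standard library. The sketch below walks from a VM to its virtual block devices (VBD), then to the underlying disk images (VDI), and reads each disk's size. It is not the code of the client described in this article: the helper names `unwrap`, `disk_sizes` and `main` are made up for illustration, and the URL, credentials and VM name passed to `main` are placeholders.

```python
import xmlrpc.client


def unwrap(response):
    """XenAPI XML-RPC replies carry a Status plus either Value or ErrorDescription."""
    if response["Status"] != "Success":
        raise RuntimeError(response.get("ErrorDescription"))
    return response["Value"]


def disk_sizes(api, session, vm_ref):
    """Map each disk's userdevice number to its virtual size in bytes."""
    sizes = {}
    for vbd in unwrap(api.VM.get_VBDs(session, vm_ref)):
        if unwrap(api.VBD.get_type(session, vbd)) != "Disk":
            continue  # skip CD drives
        vdi = unwrap(api.VBD.get_VDI(session, vbd))
        device = unwrap(api.VBD.get_userdevice(session, vbd))
        # 64-bit integers travel as strings over XML-RPC
        sizes[device] = int(unwrap(api.VDI.get_virtual_size(session, vdi)))
    return sizes


def main(url, user, password, vm_name):
    """Log in, print disk sizes for every VM with the given name, log out."""
    api = xmlrpc.client.ServerProxy(url)
    session = unwrap(api.session.login_with_password(user, password))
    try:
        for vm_ref in unwrap(api.VM.get_by_name_label(session, vm_name)):
            print(vm_name, disk_sizes(api, session, vm_ref))
    finally:
        api.session.logout(session)
```

`main` is not called here because it needs a reachable host; `disk_sizes` accepts any object that exposes the same call structure, so it can be exercised against a stub.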

Disks

I wanted a lot, from viewing the MBR with a single mouse click to pulling a file out of a virtual machine, or uploading it back, while the virtual machine is powered off.

This can be necessary, for example, after a BSOD: to extract the registry (and/or edit it and upload it back, for instance to disable a driver), to edit the bootloader options (enable debugging over the serial port), and much more. Usually you have to resort to a boot disk for such purposes.

But there is another way: a virtual machine can be exported over HTTP GET in the XVA format, which is a TAR archive containing the disk blocks.

    $ tar -tvf test.xva
    ---------- 0/0     17391 1970-01-01 01:00 ova.xml
    ---------- 0/0   1048576 1970-01-01 01:00 Ref:946/00000000
    ---------- 0/0        40 1970-01-01 01:00 Ref:946/00000000.checksum
    ---------- 0/0   1048576 1970-01-01 01:00 Ref:946/00000007
    ---------- 0/0        40 1970-01-01 01:00 Ref:946/00000007.checksum
    ---------- 0/0   1048576 1970-01-01 01:00 Ref:949/00000000
    ---------- 0/0        40 1970-01-01 01:00 Ref:949/00000000.checksum
    ---------- 0/0   1048576 1970-01-01 01:00 Ref:949/00000003
    ---------- 0/0        40 1970-01-01 01:00 Ref:949/00000003.checksum

If you read this archive on the fly, you can easily get the MBR you need and, once you know the partition offsets and types, read the files. At the moment, though, only MBR extraction is implemented. Starting with XenServer 6.2 it is possible to export a RAW disk. XenServer promises that future versions will be able to export only a disk delta from an arbitrary offset, which opens up new possibilities.
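Reading the MBR on the fly can be sketched as follows. This is not the author's implementation, only an illustration under some assumptions: the stream is an uncompressed XVA like the one listed above, the first member whose name ends in `/00000000` is block 0 of the disk of interest (with several disks, `ova.xml` would have to be consulted), and the disk uses a classic MBR rather than GPT. The function names are made up.

```python
import struct
import tarfile


def read_mbr(stream):
    """Return the first 512 bytes of the first disk block 0 found in an XVA stream."""
    # Mode "r|" reads the archive sequentially, so it also works on a network stream.
    with tarfile.open(fileobj=stream, mode="r|") as tar:
        for member in tar:
            if member.name.startswith("Ref:") and member.name.endswith("/00000000"):
                return tar.extractfile(member).read(512)
    raise ValueError("no disk block 0 in archive")


def parse_partitions(mbr):
    """Decode the non-empty primary partition entries of a classic MBR."""
    if mbr[510:512] != b"\x55\xaa":
        raise ValueError("missing 0x55AA boot signature")
    parts = []
    for i in range(4):
        entry = mbr[446 + 16 * i: 446 + 16 * (i + 1)]
        # status, CHS start, type, CHS end, LBA start, sector count (little endian)
        status, _, ptype, _, lba_start, sectors = struct.unpack("<B3sB3sII", entry)
        if ptype != 0:
            parts.append({"bootable": status == 0x80, "type": ptype,
                          "lba_start": lba_start, "sectors": sectors})
    return parts
```

Since only the first 512 bytes of one member are needed, the export can be aborted as soon as `read_mbr` returns.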

Network

There are different ways to monitor a virtual machine's network activity. The usual one is Wireshark / tcpdump installed inside the virtual machine: the required dump is collected and moved elsewhere for study. But there is a better way. Every running virtual machine has its own dom-id, and a matching VIF device named vifDOMID.0 is accessible from the hypervisor. After connecting to the hypervisor over SSH, you can easily capture a dump for any running virtual machine (provided it has network cards, of course), which makes testing cleaner and more convenient, since no PCAP drivers need to be installed in the guest. Then, following advice from a Q&A site, the program creates a pipe and starts Wireshark, and we receive and filter the traffic in real time.
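The capture pipeline can be sketched roughly like this. It assumes that tcpdump is available on the hypervisor, that key-based SSH login as root works, and that Wireshark is installed locally; the host name and the helper functions are placeholders, not the actual code of the program mentioned above.

```python
import subprocess


def vif_name(dom_id, device=0):
    """Name of the backend interface for a guest NIC, e.g. vif12.0."""
    return f"vif{dom_id}.{device}"


def capture_commands(host, dom_id, device=0, user="root"):
    """Build the remote tcpdump command and the local Wireshark command."""
    # -U flushes each packet immediately, -s 0 keeps full packets, -w - writes pcap to stdout
    remote = ["ssh", f"{user}@{host}",
              "tcpdump", "-i", vif_name(dom_id, device), "-U", "-s", "0", "-w", "-"]
    # -k starts capturing at once, -i - reads the pcap stream from stdin
    local = ["wireshark", "-k", "-i", "-"]
    return remote, local


def live_capture(host, dom_id, device=0):
    """Pipe packets from the hypervisor straight into a local Wireshark."""
    remote, local = capture_commands(host, dom_id, device)
    dump = subprocess.Popen(remote, stdout=subprocess.PIPE)
    try:
        subprocess.run(local, stdin=dump.stdout, check=False)
    finally:
        dump.terminate()
```

The dom-id of a running machine can be looked up on the hypervisor, for example with `xe vm-list params=dom-id`.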

Guest tools and serial port

The API offers nothing comparable to the guest operations in VMware's VIX, such as copying files.

While installing the main software is solved by using ISOs over the gigabit network, passing commands, logging and viewing data do not go as smoothly. You have to use intermediate network shares, and that is not always possible (test conditions, isolated network). In any case it is time-consuming and inconvenient.

The very first idea that came to mind was to use a virtual serial port. You can enable a virtual COM port, which XenServer exposes over TCP. Then, if a connection is open at the corresponding address, we can send and receive messages at 115200 baud. A background "serial port to CLI proxy" program runs in the virtual machine; it executes the commands arriving over the serial port and returns the results.

It was not without pitfalls:

1) Transmission stops once 65535 bytes have been sent if the client (the virtual machine) has not transmitted at least one byte in that time.

2) Enabling the port takes effect only after a restart: it has to be enabled while the virtual machine is powered off, or the machine has to be restarted.

3) If the connection breaks for any reason, it cannot be restored without a reboot.

4) And the worst: if the TCP server does not respond, the virtual machine freezes at startup.
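One way to live with the first pitfall is to make the guest answer regularly. The sketch below sends data in chunks well under 65535 bytes and waits for a one-byte acknowledgement after each chunk. The protocol (chunk size, ack byte) is a hypothetical illustration, not what the author's proxy actually does, and `guest_stub` only stands in for the in-guest program so the idea can be tried on a local socket pair.

```python
import socket
import threading

CHUNK = 32 * 1024  # assumption: stay well below the 65535-byte stall described above


def send_with_acks(conn, payload, chunk=CHUNK):
    """Send payload in chunks, waiting for a one-byte ack from the guest after each."""
    sent = 0
    for start in range(0, len(payload), chunk):
        part = payload[start:start + chunk]
        conn.sendall(part)
        if not conn.recv(1):
            raise ConnectionError("guest closed the serial link")
        sent += len(part)
    return sent


def guest_stub(conn, total, chunk=CHUNK):
    """Stand-in for the in-guest proxy: read each chunk, answer with one byte."""
    received = bytearray()
    while len(received) < total:
        want = min(chunk, total - len(received))
        buf = bytearray()
        while len(buf) < want:
            data = conn.recv(want - len(buf))
            if not data:
                return bytes(received)
            buf += data
        received += buf
        conn.sendall(b"\x06")  # hypothetical ack byte (ASCII ACK)
    return bytes(received)
```

On the real setup `conn` would be the TCP connection accepted from XenServer. Because of pitfall 4, the listener has to be up before the virtual machine starts.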

For these reasons I looked at other methods in parallel, for example xenstore. This is a store shared between domains, including virtual machines. It offers a buffer of about two megabytes and fairly fast writes. But reading is slower than 115200, the Xen tools are required (which is not always possible), and the code needs thorough testing. For example, judging by the comments in the driver source code, writing more than XENSTORE_PAYLOAD_MAX will have fatal consequences.
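A defensive way to respect that limit is to split data before it ever reaches xenstore. The sketch below only does the splitting and does not talk to xenstore at all. The value 4096 is what Xen's public `xs_wire.h` header defines for XENSTORE_PAYLOAD_MAX; the headroom for the key path is my own assumption, and the function name is made up.

```python
XENSTORE_PAYLOAD_MAX = 4096  # as defined in Xen's public io/xs_wire.h
HEADROOM = 512               # assumption: space left for the key path and framing


def split_for_xenstore(data, limit=XENSTORE_PAYLOAD_MAX - HEADROOM):
    """Split data into pieces that each stay safely below the payload limit."""
    if limit <= 0:
        raise ValueError("limit must be positive")
    return [data[i:i + limit] for i in range(0, len(data), limit)]


chunks = split_for_xenstore(b"a" * 10000)
print(len(chunks), [len(c) for c in chunks])
```

Each piece would then be written under its own key, with the reader joining the pieces back together in order.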

At the moment I am considering using the virtual machine's paravirtual serial port through an SSH tunnel on the hypervisor, by analogy with the network card method described above. This looks like the safest and fastest method. So far I have only verified that it is feasible.

In exactly the same way you can debug the Windows / Linux kernel by forwarding the serial port over TCP / SSH, with WinDbg, for example, connecting locally through a pipe. For this purpose a separate virtual machine was created with a set of debug symbols for all available versions of Windows.

Conclusion

Working with the XenServer API and studying it, I experienced many of the pros and cons of open source. The possibilities run up against weak documentation. While VIX is described in great detail, the XenServer API comes with 3 examples, 4 test files and comments in the source headers. The code itself is understandable, but how to combine individual functions is clear only to the developers or to those who know the Xen architecture deeply. For example, a task as simple as finding out the size of a disk is not described anywhere, and without knowing the architecture it is not easy to guess. Of course, over time, as I dug deeper into the structure of Xen, much became clearer. But many of my questions went unanswered, and nobody replies in the IRC channels on weekends: the occasional visitor just writes that "it's Sunday today" :).

Still, there is progress: over the past year a wiki, articles and examples demonstrating new features have appeared. I really hope that in the future XenServer can become a strong player in the market with a good set of third-party software.

Source: https://habr.com/ru/post/209118/
