How good hardware was made excellent: an overview of EMC VNX storage systems

EMC storage systems are like a good German car. You know that you overpay a little for the brand, but data safety and responsive handling come with it. So does the service: a premium warranty includes, for example, delivery of spare parts within 4 hours, with an engineer sent on site if necessary.

A new line of hardware was released relatively recently; its flagship takes up to 1500 drives, 6 PB in total. Below is an overview of it, with a short primer on storage systems in general and the story of how a really good product was made even better.


The price, up front

I know you will ask about the price right away. It is high: you need to pay at least thirty thousand dollars. For this money you get a fully fault-tolerant configuration with powerful functionality, three years of support and the updated software, which is discussed below. In other words, the system is far from a home device and is not even meant for small business.

The most important thing you get for this money: the frightening abbreviations DU/DL (data unavailable / data loss) will no longer appear in reports. That is the worst combination for service engineers and customers alike. Reducing the risk of downtime or data loss is something a CIO is prepared to fight for by any means.

Why are hybrid storage systems needed?

First, a little history. Tiered storage combines fast disks with cheap but capacious ones in a single pool. Such a combination was possible even when we could only dream about flash drives because of their astronomical price. There is a myth that this feature was invented to improve performance. Not so! Initially, such storage was used to save money: data that is rarely used is put on slow, cheap disks. This means you need fewer fast disks, only enough for the “hot” data.

Then SSDs firmly entered our lives. It was logical to add a pinch of SSD seasoning to our “soup” of fast and slow disks. The fastest, “hottest” data, only 5-10 percent of the total, now landed on them. The updated VNX storage systems are already the third generation of hybrid arrays, and in my opinion they turned out splendidly.

So, on the one hand, there are solutions that consist entirely of compute and flash modules. They serve hot data at speeds closer to DRAM than to a classic HDD, but such systems are very expensive in terms of cost per gigabyte stored. As a rule, they are used where 10-20 terabytes have to be "churned" constantly at high speed, for example in high-load DBMSs and VDI. On the other hand, systems built on classic mechanical disks cannot work with data as quickly, but they can store a lot of it (the cost per gigabyte stored can be much lower).

In most cases, however, you need both a large amount of storage and fast access to the “hot” data. Typical examples are storage systems that hold a DBMS alongside virtualization volumes, mail, archives, and so on. In these cases you use either all-flash storage plus classic storage, or mixed (hybrid) storage systems. Today we are talking about the latter: systems that support both flash drives and classic drives in one box.

The previous generation was already very good. It could work with flash modules and did everything needed for heavily loaded applications. It might seem that making it better would be quite difficult, and that further performance gains were possible only through hardware. But no: by making major changes to the software part of the platform, the developers achieved a huge performance gain.

New generation

Here is what changed.

First, the new EMC line is designed for large volumes. The flagship takes up to 1500 disks. For one of our clients, by the way, those 6 PB may come in handy, since they have quite a lot of data, but in practice there have been no deployments larger than 2 PB yet.

Everything works faster. There is more cache, and the X-Blades (file servers) are better optimized. And yes, do not forget that access to this storage system can be either block (FC, iSCSI, FCoE) or file (CIFS, NFS).

New block deduplication has been added. More on it and other software details below.

The hardware has been updated. Block controllers can now work with an all-SSD configuration and support up to 32 cores.

Block Controller Architecture

The main differences from the previous line (more below)

- Multi-core MCx architecture (R5.33): 4 times the performance of the previous generation, multi-core Cache and FAST Cache management, multi-threaded RAID management

- FAST VP update

- Symmetric Active/Active LUN mode

- Virtual RecoverPoint appliance (vRPA)

- Support for Hyper-V ODX and SMB 3.0

Hardware

A new controller hardware design has appeared: every model except the flagship uses the new DPE platform. One of the major changes is the backup power module. On all models except the flagship, the backup batteries are now built into the controller. The flagship still has separate batteries, but there are now four of them instead of two, and they are much easier to replace.

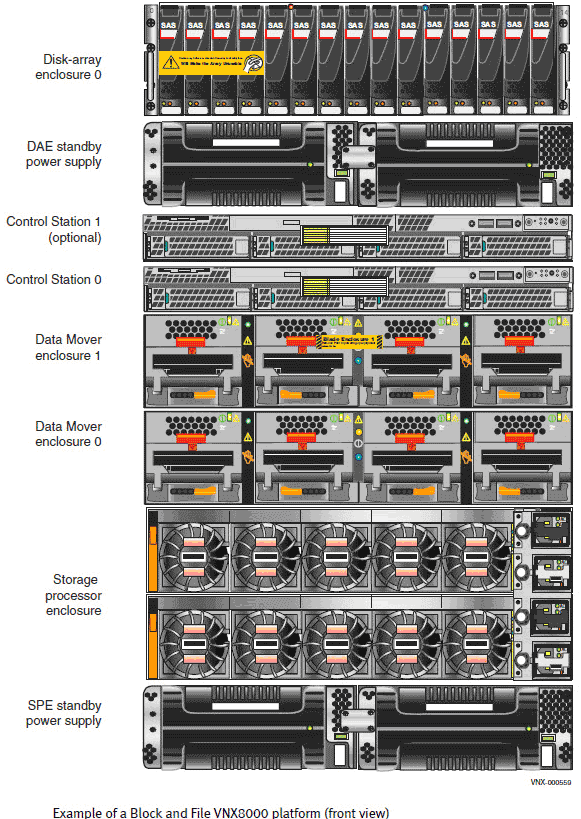

The layout of the controllers and shelves is the same as before (VNX8000 is an exception):

Take a look at the disks:

Flash drives are now divided into two categories: ultrafast (eMLC) drives are used for both cache and tiered storage, while regular (plain MLC) drives are used only for tiered storage.

Software

The basic software functionality available "out of the box" has also grown, but that was generally expected.

More interesting are the new software features. For example, I was very pleased with the new approach to allocating processor resources. Previously, a process was given a fixed number of cores and ran only on them. So when the load differed between processes (quite common in real systems), the cores were loaded unevenly: one core could sit idle while another ran at close to 100%. The new architecture takes a step towards greater virtualization, with dynamic resource sharing and multi-threaded processing.
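The difference between fixed and shared cores can be illustrated with a toy model. This is only a sketch under simplifying assumptions (work split into equal unit tasks, no scheduling overhead); the function names and the numbers are hypothetical and say nothing about how MCx is actually implemented.

```python
import math

def static_makespan(work_units, cores_per_process):
    """Time to finish when each process may use only its own fixed cores."""
    return max(math.ceil(w / c) for w, c in zip(work_units, cores_per_process))

def dynamic_makespan(work_units, total_cores):
    """Time to finish when any free core may take the next unit of work."""
    return math.ceil(sum(work_units) / total_cores)

# Hypothetical load: one busy process and three nearly idle ones, 8 cores total.
work = [90, 10, 10, 10]
cores = [2, 2, 2, 2]

print(static_makespan(work, cores))        # 45: the busy process is stuck on 2 cores
print(dynamic_makespan(work, sum(cores)))  # 15: all 8 cores share the load
```

With an uneven load the fixed split is limited by the busiest process, while the shared pool finishes in the time dictated by the total amount of work.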

The cache has been completely redesigned. Previously, the memory was divided into two areas: a write cache and a read cache. These two buffers did not overlap. One optimized writes by flushing data to disk in optimal blocks, and the other predicted which blocks would be read next. On the new arrays the cache has been completely reorganized: they now use a dynamic cache that handles both tasks at once, without splitting the memory into separate areas. This approach not only uses memory more efficiently but also dramatically increases performance. According to the manufacturer, on typical loads this increases cache speed up to 5 times.
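The idea of one memory area serving both reads and writes can be sketched as a single LRU list in which every page carries a "dirty" flag. This is an illustrative toy, not EMC's algorithm: the class name `UnifiedCache`, the LRU policy and the page-level granularity are all assumptions made for the example.

```python
from collections import OrderedDict

class UnifiedCache:
    """One LRU pool for both read (clean) and write (dirty) pages."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.pages = OrderedDict()  # page id -> dirty flag, oldest first
        self.flushed = []           # dirty pages written to disk on eviction

    def _touch(self, page, dirty):
        was_dirty = self.pages.pop(page, False)
        self.pages[page] = dirty or was_dirty  # re-insert as most recently used
        while len(self.pages) > self.capacity:
            old, old_dirty = self.pages.popitem(last=False)
            if old_dirty:
                self.flushed.append(old)       # only dirty pages need a flush

    def read(self, page):
        hit = page in self.pages
        self._touch(page, dirty=False)
        return hit

    def write(self, page):
        self._touch(page, dirty=True)

cache = UnifiedCache(capacity=4)
for p in (1, 2, 3):
    cache.write(p)       # a write burst may take as much of the pool as it needs
print(cache.read(4))     # False: first access is a miss, page 4 is now cached
print(cache.read(1))     # True: a recently written page is served from cache
cache.write(5)           # pool is full, the least recently used page is evicted
print(cache.flushed)     # [2]
```

Because there is no fixed boundary, a write-heavy or read-heavy period can use the whole pool instead of leaving half of the memory idle.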

FAST Cache, the flash drives used as an additional cache, has been reworked. It now gets more processor resources thanks to dynamic resource allocation. In addition, faster eMLC drives are now supplied for FAST Cache. As a result, the developers were able to use more aggressive algorithms, so the cache warms up much faster. At the same time, the engineers, together with the developers of the classic cache algorithms, went through the drivers and rewrote them on a modular principle, which also greatly improved performance and lowered the response time of individual requests.

Fully Automated Storage Tiering for Virtual Pools (FAST VP). This is the very mechanism that combines different disks into a single "pool". It has also been significantly reworked and more closely integrated with FAST Cache. By the way, the granularity of FAST VP has been reduced by a factor of 4, and data is now moved in chunks of 256 MB.
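A rough sketch of what tiering by "temperature" means: count accesses per 256 MB slice and put the hottest slices on the fastest tier until it is full. The ranking rule, the tier names and the capacities below are assumptions for illustration; the real FAST VP relocation policy is more elaborate.

```python
SLICE_MB = 256  # slice size stated in the article (4 x 256 MB = 1 GB before)

def place_slices(access_counts, tiers):
    """Assign the hottest slices to the fastest tier first.

    access_counts: dict of slice id -> number of I/Os in the last period
    tiers: list of (tier name, capacity in slices), fastest tier first
    """
    if sum(cap for _, cap in tiers) < len(access_counts):
        raise ValueError("pool is too small for all slices")
    # Hottest first; ties are broken by slice id to keep the result stable.
    ranked = sorted(access_counts, key=lambda s: (-access_counts[s], s))
    placement, start = {}, 0
    for name, cap in tiers:
        for slice_id in ranked[start:start + cap]:
            placement[slice_id] = name
        start += cap
    return placement

# Hypothetical pool: 1 flash slice, 2 SAS slices, 2 NL-SAS slices.
counts = {0: 500, 1: 3, 2: 120, 3: 0, 4: 45}
tiers = [("flash", 1), ("sas", 2), ("nl-sas", 2)]
print(place_slices(counts, tiers))
# {0: 'flash', 2: 'sas', 4: 'sas', 1: 'nl-sas', 3: 'nl-sas'}
```

Smaller slices matter here because a single hot region no longer drags a whole gigabyte of mostly cold data onto expensive flash.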

Here it is probably appropriate to return to the beginning, where I said that mixing disks was not originally about performance. Now that SSDs can be used both for storage and as a cache, we can talk about both speed and the efficiency of storing and processing data.

New block deduplication. The principle is as old as the hills: data is split into blocks, and identical blocks are stored only once. Duplicate blocks are replaced with references to the stored copy, as in archives. All of this is now heavily tuned for virtual environments.
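The principle fits in a few lines. The sketch below is a generic illustration of fixed-block deduplication, not the array's implementation: the 8 KB block size and the use of SHA-256 as the block fingerprint are assumptions chosen for the example.

```python
import hashlib

def deduplicate(data: bytes, block_size: int = 8192):
    """Split data into fixed-size blocks and store each unique block once."""
    store = {}  # fingerprint -> block content (kept once)
    refs = []   # one fingerprint per logical block, in order
    for offset in range(0, len(data), block_size):
        block = data[offset:offset + block_size]
        digest = hashlib.sha256(block).hexdigest()
        store.setdefault(digest, block)
        refs.append(digest)
    return store, refs

def restore(store, refs):
    """Rebuild the original data by following the references."""
    return b"".join(store[d] for d in refs)

# Four logical blocks, three of them identical (think cloned VDI desktops).
data = b"A" * 8192 * 3 + b"B" * 8192
store, refs = deduplicate(data)
print(len(refs), len(store))  # 4 2
assert restore(store, refs) == data
```

The more similar the data (many virtual machines cloned from one image, for instance), the fewer unique blocks end up in the store.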

On the new storage systems, deduplication runs on a schedule. For example, applications write data to the pool without deduplication during working hours. Then, when the load drops, the storage engine analyzes the written data and compacts it by removing duplicates. All this fits very well with FAST Cache: the load on deduplicated blocks grows while their number naturally shrinks, so they move into FAST Cache. In practice, this means that effectively more data fits into FAST Cache, which greatly speeds up the array. Two typical kinds of load benefit most from the new mechanism:

- Homogeneous virtual environments, such as VDI.

- Multiple applications working on one data set.

The estimated savings for such loads will be:

A new way of working with disks. Previously, a disk's address was rigidly tied to its physical location in the shelf. In the new generation the system remembers disks by their unique identifiers, so it no longer depends on where the disks sit in the system. It seems an insignificant change, but consider how easy it now is to move an array to another site: you no longer have to agonize over putting every disk back in exactly the same place. The problem of unbalanced back-end load is also solved far more easily and quickly: rearrange the disks one by one and you are done, without even stopping the database. And if necessary, part of the data can now be physically pulled out of the array and taken to another site for a while, a kind of hot backup; I think you can figure out why you might need it.
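In data-structure terms, the change is that group membership is keyed by the disk's identifier rather than by its slot. The sketch below illustrates this; the class `DiskInventory`, the serial numbers and the slot labels are hypothetical and do not reflect the array's internal format.

```python
class DiskInventory:
    """Tracks where each disk currently sits, keyed by its unique serial."""

    def __init__(self):
        self._slot_by_serial = {}

    def rescan(self, slots):
        """slots: dict of slot label -> serial, as seen on the back end now."""
        self._slot_by_serial = {serial: slot for slot, serial in slots.items()}

    def group_slots(self, members):
        """Resolve a group defined by serials to the current slot labels."""
        return [self._slot_by_serial[serial] for serial in members]

# The RAID group is defined by serial numbers, not by positions.
raid_group = ["SN-A", "SN-B", "SN-C"]

inv = DiskInventory()
inv.rescan({"0_0_4": "SN-A", "0_0_5": "SN-B", "0_0_6": "SN-C"})
print(inv.group_slots(raid_group))  # ['0_0_4', '0_0_5', '0_0_6']

# The disks are moved to another shelf in a different order.
inv.rescan({"1_2_0": "SN-C", "1_2_1": "SN-A", "1_2_2": "SN-B"})
print(inv.group_slots(raid_group))  # ['1_2_1', '1_2_2', '1_2_0']
```

The group definition never changes; only the lookup from identifier to current location is refreshed after the move.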

Permanent Sparing, a technology that has proven itself on high-end systems, has been added. In addition, there is no longer any need to set aside dedicated hot-spare disks: by default, the array uses the most suitable unused disk.

There are important changes in how the controllers access disks. Originally, in mid-range systems, each volume was served by one controller, and the paths through the other controller were used only on failure. On failure, the volume moved to the other controller. This meant that even if a volume needed the full resources of the system, it could theoretically get no more than 50% of them, those of a single controller. The problem was partially solved in the two previous lines, when the ALUA mechanism appeared. Its essence: host requests that arrive at the other controller are forwarded to the controller that owns the volume through the cache synchronization interface. Unfortunately, the bandwidth of this interface is far from unlimited, and requests sent directly to the owning controller are still processed faster. Paths came to be classified as optimal and non-optimal instead of active and passive. Roughly speaking, in the ideal case this gave a volume up to 75% of the array's performance. In the new generation, the developers went further. Without changing the hardware design (!), they changed the logic of the array to Active-Active. Now both controllers write at the same time to different areas of a volume, simply locking their own area for the duration of the write.
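The "lock your own area while writing" idea can be sketched as a table of locked address ranges shared by both controllers. This is a simplified single-threaded illustration: the class `LunLockTable`, the controller names and the block ranges are assumptions, and a real array would queue a conflicting write rather than simply refuse it.

```python
class LunLockTable:
    """Address-range locks on one LUN, shared by both controllers."""

    def __init__(self):
        self._locked = []  # list of (start, end, owner), end is exclusive

    def try_lock(self, owner, start, length):
        """Lock [start, start + length) unless it overlaps a locked range."""
        end = start + length
        for s, e, _ in self._locked:
            if start < e and s < end:  # the two ranges overlap
                return False
        self._locked.append((start, end, owner))
        return True

    def unlock(self, owner, start, length):
        self._locked.remove((start, start + length, owner))

lun = LunLockTable()
print(lun.try_lock("SPA", 0, 100))    # True: SPA writes blocks 0..99
print(lun.try_lock("SPB", 100, 100))  # True: SPB writes blocks 100..199 in parallel
print(lun.try_lock("SPB", 50, 10))    # False: this range is busy on SPA
lun.unlock("SPA", 0, 100)
print(lun.try_lock("SPB", 50, 10))    # True: the area is free again
```

As long as the two controllers touch different areas, neither has to wait for the other, which is why the volume is no longer limited to the resources of a single controller.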

In practice, if the array is used for a single task, a cheaper, lower-end model may suffice. Load balancing across paths also becomes much easier. In addition, when a LUN used to move under the control of the other controller, there were serious delays in processing external I/O requests; now there are none.

The array management software has been updated. Externally, the interface has not changed much. In my subjective opinion, it was and (fortunately) remains the most convenient interface among storage systems: 99% of tasks are done in a couple of clicks right in the web interface. Exotic tasks, of course, require the console.

The basic package now includes a very useful new long-term monitoring tool. You can view reports on both the file and block parts, with a whole range of metrics, which makes it easy to find bottlenecks. Another tool based on these metrics helps plan infrastructure expansion, so that the administrator does not have to calculate by hand what will be needed next year and how much.

Another very important thing for virtual environments: the arrays still support tight integration with virtualization systems. In case you did not know, VMware is a subsidiary of EMC (fairly independent, but a subsidiary nonetheless). Hence the joint work of VMware programmers and storage developers. The result is convenient, seamless and extremely deep integration: virtual machines are visible in the storage interface (monitoring of machine resources at the storage level), typical copy and clone workloads can be offloaded to the array itself, and typical storage management tasks can be done from the VMware infrastructure interface.

Strange as it may seem, there are similar advantages for Hyper-V (EMC has been working closely with Microsoft for a very long time, so the developers have historically cooperated). The depth of integration is almost the same: unified management and offloading of infrastructure work. There are also features specific to Microsoft environments: for example, BranchCache relieves network infrastructure with weak communication links. And yes, EMC VNX is the first storage system with SMB 3.0 support.

That is all for now. You can ask questions in the comments or by e-mail at VBolotnov@croc.ru. As for the cost for specific tasks, I can give concrete estimates fairly quickly: just ask, it is not a problem.

One more thing. We have already had our hands on our VNX5400 demo system and lent it for testing to one of our customers who plans to upgrade their storage this year. It will be back soon, so if anyone wants to get a hands-on feel for a real system, you can come to our office and see it in the EMC Solution Center. Write to us and we will agree on a date.

UPD: In case it is relevant, we have a program for replacing tape and disk libraries with new EMC systems.

Source: https://habr.com/ru/post/208784/
