Containers are the future of clouds.

Hello, Habr community!

This report was originally prepared for presentation at the conference of the company FastVPS ROCK IT 2013 , held on August 24-25, 2013 in the City of Tallinn, Estonia.

Probably, someone heard it personally (thanks!), But I still recommend reading it, since this publication is more detailed and considers much more details omitted in the report (smile)

')

The publication aims to provide a brief overview of the open source tools on the market for deploying several virtual environments based on a physical server with Linux on board, as well as the benefits of using containers to create clouds :)

Meet the heroes of today's story!

By name:

Table: Comparison of Available Technologies for Implementing Virtual Environments in Linux

I would like to note that Oracle VirtualBox was not consciously included in the table, since it is not completely open, and in the article we consider only open technologies.

This table makes it easy to conclude that there are only three technologies ready for industrial use today: KVM, Xen and OpenVZ. Also, the LXC technology is developing at a very fast pace, and for this reason we simply have to consider it. All four technologies, in turn, implement two approaches to isolation - full virtualization and containerization, we'll talk about them.

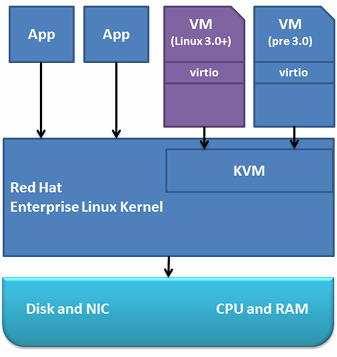

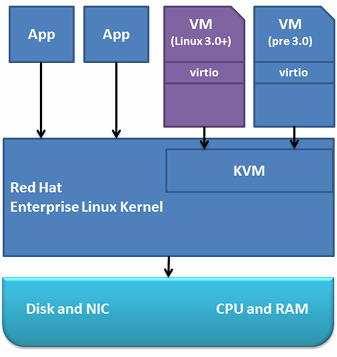

Let's move away from purely theoretical descriptions and look at a visual scheme using the example of KVM.

As you can see, we have three levels:

All the interaction here is quite transparent - the base Linux kernel (HWN) interacts with hardware (processor, memory, I / O), and in turn provides the ability for processes to work in which full Linux kernels, FreeBSD or even Windows It is worth noting that these are not quite normal processes, the work of virtual machines is provided through the kernel module (KVM) that translates system calls from the client operating system to the HWN. This job description cannot be considered completely correct, but it fully conveys the meaning of this approach. The most important thing to note is that the translation from client OS calls to HWN calls is performed using a special processor technology (AMD-V, Intel VT).

The disadvantages of this type of isolation are reduced to additional delays in the operation of the disk subsystem, network subsystem, memory, processor due to the use of an additional hardware-software abstraction layer between the real hardware and the virtual environment. With the help of various technologies and approaches (for example, software virtio, hardware VT-d) these delays are minimized, but they can never be completely eliminated (unless virtualization is embedded in iron) due to the fact that this is an additional layer of abstraction for which to pay.

According to a recent study by IBM overhead (this is the difference between the performance of an application inside a virtual environment and the same application running without using virtualization at all), the KVM technology for I / O is about 15% when tested on SUSE Linux Enterprise Server 11 Service Pack 3.

There is also a very interesting test , presented in the Journal of Physics: Conference 219 (2010), in which the numbers are voiced: 3-4% overhead for the processor, and 20-30% for disk I / O.

At the same time, in the presentation of RedHat for 2013, a figure of 12% is voiced (that is, 88% of the performance of a configuration that works without virtualization).

Of course, these tests were carried out in different software configurations, for different load patterns, for different equipment, and I even admit that some of them were conducted incorrectly, but their essence boils down to one thing - overhead (overhead) when using both KVM and Xen reach 5-15% depending on the type used by the system and configuration. Agree a lot?

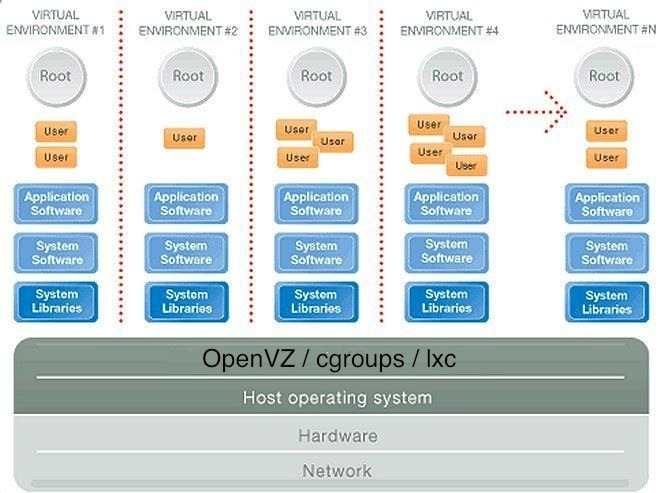

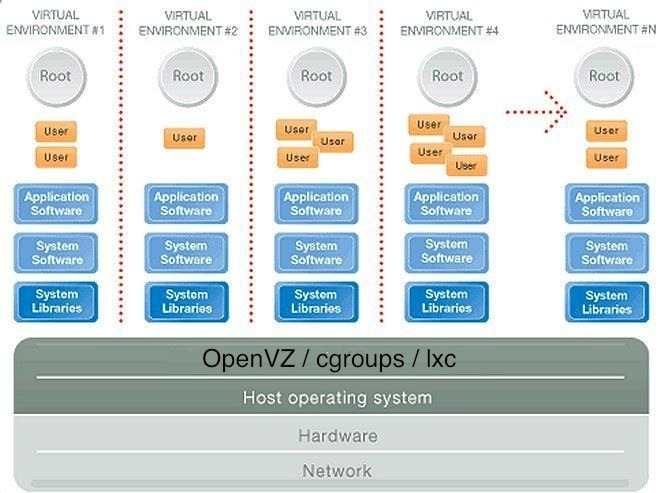

We will do the same, use the illustration.

Just want to say that everything below is true for both OpenVZ and LXC. These technologies are very, very similar and are extremely close relatives (I will try to reveal this topic in my next publication).

Although the image is drawn in a slightly different style, the key difference immediately catches the eye - the kernel uses only one and there are no virtual machines (VM)! But how does it work? At a low level, everything happens in a similar way - the Linux HWN core interacts with the hardware and executes all requests related to accessing the hardware from virtual environments. But how are virtual environments separated from each other? They are separated with built-in Linux kernel mechanisms!

First of all, this is a modified analogue of chroot (modifications primarily concern protection, so that it is not possible to break out of the chroot environment into the root file system), which is well known to all of us, it allows you to create isolated hierarchies within the same file system. But chroot does not allow, for example, to run its own init process (pid 1, since the init process already has a PID data on the HWN), and this is a mandatory requirement if we want to have a completely isolated client OS inside the container. To do this, use the PID namespaces mechanism, which inside each chroot environment creates a completely independent HWN system of process identifiers, where we can have our own process with PID 1 even if the init process is already running on the server itself. In general, we can create hundreds and even thousands of individual containers that are not related to each other.

So how is the memory, processor and hard disk load being limited? They are also limited by the cgroups mechanism, which, by the way, is also used for exactly the same goals in KVM technology.

As we discussed earlier, any isolation technology has an overhead projector, whether we want it or not. But in the case of containerization, this overhead is negligible (0.1-1%) due to the fact that very simple transformations are used, which can often be explained literally on the fingers. For example, isolating the PIDs of processes and socket spaces is accomplished by adding an additional 4-byte identifier to indicate which container the process belongs to. With memory, everything is a bit more complicated and there is a certain loss of memory (due to the peculiarities of memory allocation), but this has little effect on the speed of allocation and work with memory. With a disk system and input-output subsystem, the situation is similar. In the figures, the estimates for the OpenVZ overhead projector are given in the papers by HP engineers and researchers from the University of Brazil for HPC, and they are in the region of 0.1–1% depending on the type of load and testing methodology.

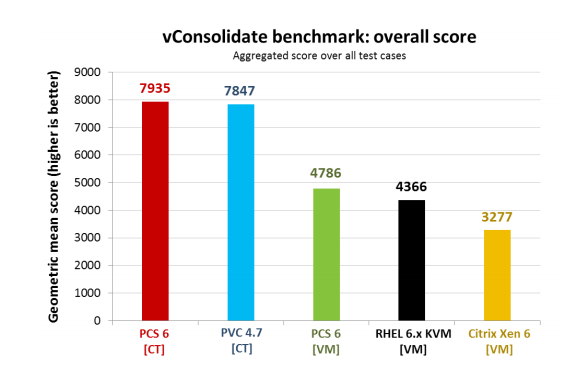

Here we come to the topic stated at the very beginning! We discussed the overhead technology of full virtualization and containerization technologies, now is the time to compare them directly with each other. I note that in some tests, instead of OpenVZ, its commercial version of PCS is used, this has minimal effect on tests, since the kernel used in both open and commercial OS is completely identical.

There are quite a few qualitative tests conducted on the network with the participation of all three players, and almost all of them are conducted by Parallels, which in turn is the developer of OpenVZ. If you bring such tests without the possibility of their testing and repetition - there may be questions about bias.

I’ve been thinking about how to achieve this for quite a while and I came across a wonderful document in which KVM, XEN and OpenVZ / PCS are tested on the basis of Intel’s test methodology vConsolidate, as well as a standardized LAMP test, which allows you to check all the results at home and make sure they are true.

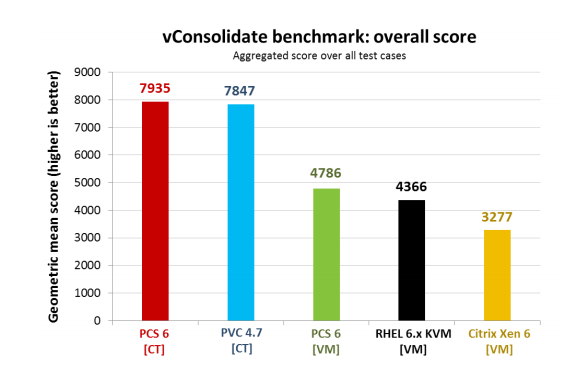

Since this test is strictly in parrots, I did not give its intermediate graphs and brought the final results. As you can see - the advantage of OpenVZ on the face. Of course, Intel has taken many things into account in its test, but it is still very difficult to interpret these figures.

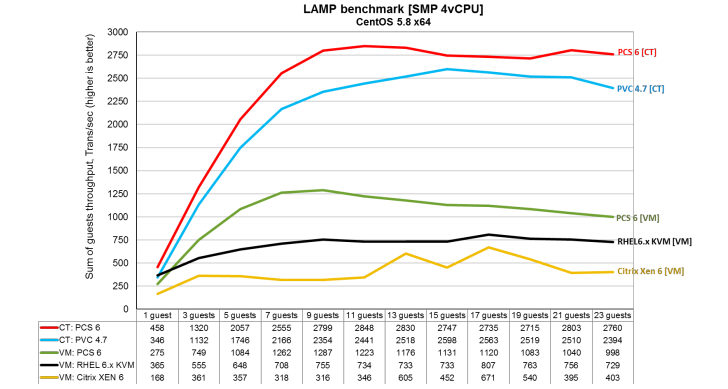

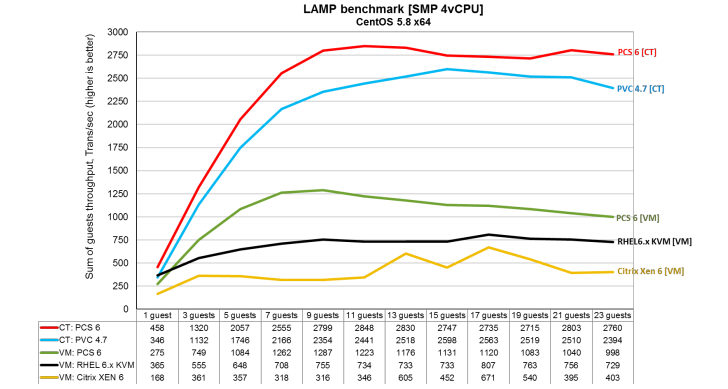

Therefore, I propose a more comprehensible LAMP test, from the same document from Parallels, it tests the performance of the LAMP application depending on the number of running virtual environments:

It's all much clearer and clearly visible almost 2-fold increase in the total number of transactions performed by the LAMP application! What more could you wish for? :)

Of course, it was possible to cover even more tests, but, unfortunately, we are so much beyond the standard Habra article, so you are welcome here .

Hopefully, I was able to convince you that containerization-based solutions make it possible to squeeze almost all of its power out of iron.

In this article we tried to prove that the efficiency of using hardware resources when using containerization instead of full virtualization saves up to 10% (we take the average estimate) of all computing resources of the server. It is rather difficult to judge in percents, it is much easier to imagine that you had 100 servers and they solved a certain task using full virtualization, then having introduced containerization you can free up almost 10 full-fledged servers that consume a lot of electricity, take up space (which is very expensive in case of modern data centers), cost a lot of money in themselves, and also require staff to service them.

How to get away from abstract "expensive" to real numbers? The most significant savings gives us savings on electricity. The cost of electricity, according to Intel, account for almost 10% of all operating costs for operating Data Centers. Which, in turn, according to the US EPA, consume a total of almost 1.5% of all electricity generated in the United States. Thus, we not only save companies' funds, but also make a significant contribution to the preservation of the environment through less electricity consumption and more efficient use of it!

It is also worth noting that the largest computing systems in the world - Google's own clouds, Google , Yandex , Heroku, and many, many others are built using LXC technology, which once again proves the advantage of containerization in cloud computing and shows with the example of real companies.

In addition, both sounded containerization technologies - OpenVZ and LXC have free licenses and are very actively developing and LXC (albeit partially) is on almost any Linux server installed in the last year and a half. Open licenses and an active developer community are key to the success of the technology in the near future.

In the next publications we will focus on the OpenVZ and LXC technologies, and also we will definitely conduct their comparative analysis :)

Update, continuation of a series of publications:

Separately, I would like to thank Vasily Averin (Parallels) for explaining the particularly difficult aspects of the LXC technology.

Regards, Pavel Odintsov

CTO FastVPS LLC

Introduction

This report was originally prepared for FastVPS's ROCK IT 2013 conference, held on August 24-25, 2013 in Tallinn, Estonia.

Some of you may have heard it in person (thanks!), but I still recommend reading on: this publication is more detailed and covers a lot that was left out of the talk.


This publication gives a brief overview of the open source tools available for running several virtual environments on a single physical Linux server, and of the benefits of using containers to build clouds :)

Open-source Linux-based solutions for creating virtual environments

Meet the heroes of today's story!

By name:

- KVM

- Xen

- Linux VServer

- OpenVZ

- LXC (Linux Containers)

Table: Comparison of Available Technologies for Implementing Virtual Environments in Linux

Note that Oracle VirtualBox was deliberately left out of the table: it is not fully open, and this article considers only open technologies.

From this table it is easy to conclude that only three technologies are ready for production use today: KVM, Xen and OpenVZ. LXC is also developing very quickly, so we simply have to consider it as well. Between them, these four technologies implement two approaches to isolation, full virtualization and containerization, and we will discuss each in turn.

Full virtualization

Let's step away from purely theoretical descriptions and look at a diagram, using KVM as the example.

As you can see, we have three levels:

- Hardware (Disk, NIC, CPU and Memory in the image: the storage system, network devices, processor and memory)

- Linux kernel (any kernel from a reasonably modern distribution will do, but 2.663 or higher is recommended; for definiteness, let's call it the HWN, HardWare Node)

- Virtual machines (client OSes) that run as ordinary processes on the Linux system, alongside regular Linux daemons

All the interaction here is fairly transparent: the base Linux kernel (HWN) talks to the hardware (processor, memory, I/O) and in turn hosts processes inside which complete Linux kernels, FreeBSD or even Windows run. Note that these are not quite ordinary processes: the virtual machines are served by a kernel module (KVM) that translates system calls from the client operating system into calls to the HWN. This description is not strictly accurate, but it fully conveys the idea of the approach. The most important point is that the translation of client OS calls into HWN calls relies on special processor technology (AMD-V, Intel VT).
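As a rough illustration of the processor requirement, here is a minimal Python sketch that checks whether a CPU advertises these extensions. It assumes an x86 Linux host, where `/proc/cpuinfo` lists the `vmx` flag for Intel VT-x and `svm` for AMD-V; the function name `hardware_virt_support` is made up for this example.

```python
def hardware_virt_support(cpuinfo_text):
    """Return the hardware virtualization extensions found in /proc/cpuinfo text."""
    # Flag names as they appear on x86 Linux: vmx = Intel VT-x, svm = AMD-V.
    known_flags = {"vmx": "Intel VT-x", "svm": "AMD-V"}
    found = set()
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            for flag in value.split():
                if flag in known_flags:
                    found.add(known_flags[flag])
    return found


# Example with a shortened, made-up cpuinfo fragment.
sample = "processor\t: 0\nflags\t\t: fpu vme vmx sse2\n"
print(hardware_virt_support(sample))
```

On a real host you would pass in the contents of `/proc/cpuinfo`; an empty result usually means the extensions are missing or disabled in the firmware.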

The drawbacks of this type of isolation come down to additional latency in the disk subsystem, the network subsystem, memory and the processor, caused by the extra hardware and software abstraction layer between the real hardware and the virtual environment. Various technologies and approaches (for example, virtio in software and VT-d in hardware) minimize these delays, but they can never be eliminated completely (unless virtualization is built into the hardware itself), because this is an extra layer of abstraction that has to be paid for.

Costs of implementing full virtualization

According to a recent IBM study, the overhead of KVM (that is, the difference between an application's performance inside a virtual environment and the same application's performance without any virtualization) is about 15% for I/O when tested on SUSE Linux Enterprise Server 11 Service Pack 3.

There is also a very interesting test published in Journal of Physics: Conference Series 219 (2010), which reports 3-4% overhead for the processor and 20-30% for disk I/O.

At the same time, a 2013 Red Hat presentation quotes a figure of 12% (that is, 88% of the performance of a configuration running without virtualization).

Of course, these tests were run on different software configurations, load patterns and hardware, and I even accept that some of them may have been conducted incorrectly. Still, they all boil down to one thing: the overhead of both KVM and Xen reaches 5-15%, depending on the system and configuration used. That's a lot, don't you agree?
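To make the overhead definition used in this section concrete, here is a small Python sketch. The function name and the sample throughput values are illustrative only; the 88% figure is the one quoted from the Red Hat presentation.

```python
def overhead(native, virtualized):
    """Fraction of native performance lost when running inside a virtual environment."""
    if native <= 0:
        raise ValueError("native performance must be positive")
    return (native - virtualized) / native


# 88% of native performance corresponds to 12% overhead.
print(f"{overhead(100.0, 88.0):.0%}")
```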

Containerization

We'll do the same here and start with an illustration.

I want to say right away that everything below is true for both OpenVZ and LXC. The two technologies are very similar and are extremely close relatives (I will try to cover this topic in my next publication).

Although the image is drawn in a slightly different style, the key difference is immediately obvious: there is only one kernel and there are no virtual machines (VMs)! But how does it work? At a low level everything happens in a similar way: the Linux kernel of the HWN interacts with the hardware and handles every hardware access request coming from the virtual environments. But how are the virtual environments separated from each other? They are separated by mechanisms built into the Linux kernel!

First of all, there is a modified analogue of the familiar chroot (the modifications mostly concern security, so that it is impossible to break out of the chroot environment into the root file system), which lets you create isolated hierarchies within a single file system. But chroot alone does not let you, for example, run your own init process (PID 1, because that PID is already taken by the init process of the HWN), and this is mandatory if we want a fully isolated client OS inside the container. This is solved by the PID namespaces mechanism, which gives each chroot environment a system of process identifiers completely independent of the HWN, so we can have our own process with PID 1 even though an init process is already running on the server itself. In this way we can create hundreds or even thousands of separate containers that are unrelated to each other.
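The idea of independent PID numbering can be pictured with a toy Python model. This is only an illustration of the concept, not how the kernel implements PID namespaces: the `PidNamespace` class and the container names are invented for the example, and it ignores the fact that in real Linux a process inside a container also has a separate PID visible from the parent namespace.

```python
import itertools


class PidNamespace:
    """Toy model: every namespace numbers its own processes starting from 1."""

    def __init__(self, name):
        self.name = name
        self._next_pid = itertools.count(1)
        self.processes = {}  # local PID -> command name

    def spawn(self, command):
        pid = next(self._next_pid)
        self.processes[pid] = command
        return pid


host = PidNamespace("HWN")
ct_a = PidNamespace("container-a")
ct_b = PidNamespace("container-b")

# Each environment gets its own init with PID 1, without any conflict.
host_init = host.spawn("init")
a_init = ct_a.spawn("init")
b_init = ct_b.spawn("init")
a_web = ct_a.spawn("httpd")

print(host_init, a_init, b_init, a_web)
```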

So how are memory, processor and disk usage limited? That is done by the cgroups mechanism, which, by the way, is used for exactly the same purpose in KVM.
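As a hedged sketch of what such limits look like in practice, the Python snippet below builds the values one would write into cgroup control files. It assumes the cgroup v2 interface (the `memory.max` and `cpu.max` files), which is newer than the tools described in this article; the function name is hypothetical, and the sketch covers only memory and CPU, not disk I/O. It only computes the values and does not touch the file system.

```python
def cgroup_v2_limits(memory_bytes, cpu_fraction, period_us=100_000):
    """Map cgroup v2 control file names to values expressing the given limits.

    cpu_fraction is the share of one CPU the group may use (0.5 = half a core).
    """
    if memory_bytes <= 0 or cpu_fraction <= 0:
        raise ValueError("limits must be positive")
    quota_us = round(cpu_fraction * period_us)
    return {
        "memory.max": str(memory_bytes),          # hard memory limit in bytes
        "cpu.max": f"{quota_us} {period_us}",     # "<quota> <period>" in microseconds
    }


# 512 MiB of memory and half of one CPU core.
for name, value in cgroup_v2_limits(512 * 1024 * 1024, 0.5).items():
    print(name, "=", value)
```

Applying the limits would mean writing each value into the matching file inside the group's directory under the cgroup mount point, which requires root privileges.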

Costs of implementing containerization

As we discussed earlier, every isolation technology has an overhead, whether we like it or not. But in the case of containerization this overhead is negligible (0.1-1%), because only very simple transformations are involved, which can often be explained in plain terms. For example, isolating process PIDs and socket spaces is done by adding an extra 4-byte identifier that indicates which container a process belongs to. Memory is a bit more complicated and some memory is lost (due to the way memory is allocated), but this has little effect on the speed of allocating and using memory. The situation with the disk and I/O subsystems is similar. As for numbers, estimates of the OpenVZ overhead are given in papers by HP engineers and by researchers from the University of Brazil (for HPC), and they are in the range of 0.1-1%, depending on the type of load and the testing methodology.

Containerization vs. Virtualization

Here we come to the topic stated at the very beginning! We have discussed the overhead of full virtualization and of containerization separately; now it is time to compare them directly. Note that some tests use PCS, the commercial version of OpenVZ, instead of OpenVZ itself. This has minimal effect on the results, since the kernel used in the open and the commercial products is completely identical.

There are rather few good-quality tests online that involve all three players, and almost all of them were conducted by Parallels, which is the developer of OpenVZ. Citing such tests without any way to verify and repeat them could raise questions about bias.

I thought for quite a while about how to get around this, and came across an excellent document in which KVM, Xen and OpenVZ/PCS are tested using Intel's vConsolidate methodology as well as a standardized LAMP test, so you can reproduce all the results yourself and confirm that they hold.

Since this test reports its results strictly in arbitrary units ("parrots"), I have left out the intermediate graphs and show only the final results. As you can see, OpenVZ's advantage is obvious. Intel has of course accounted for many factors in its test, but the figures are still very hard to interpret.

So I suggest a more understandable LAMP test from the same Parallels document, which measures the performance of a LAMP application as a function of the number of running virtual environments:

Here everything is much clearer: the total number of transactions performed by the LAMP application is almost twice as high! What more could you wish for? :)

Of course, more tests could be covered, but unfortunately we are already well past the length of a standard Habr article, so you are welcome to continue here .

Hopefully I have convinced you that containerization-based solutions make it possible to squeeze almost all the available power out of the hardware.

Containerization is the future of the clouds!

In this article we have tried to show that using containerization instead of full virtualization saves up to 10% (taking the average estimate) of a server's total computing resources. Percentages are hard to picture, so imagine instead that you had 100 servers handling some task under full virtualization: by switching to containerization you could free up almost 10 full-fledged servers, which consume a lot of electricity, take up space (very expensive in modern data centers), cost a lot of money in themselves and need staff to maintain them.
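The arithmetic behind this estimate can be sketched in a few lines of Python. The function name is made up, and the model is deliberately simplistic: it assumes the workload scales linearly across servers and that overhead is the only difference between the two setups.

```python
import math


def servers_needed(current_servers, vm_overhead, container_overhead=0.0):
    """Servers required under containerization to deliver the same useful work."""
    # Useful work currently delivered, measured in "overhead-free servers".
    useful = current_servers * (1 - vm_overhead)
    # Round before ceil() so floating-point noise cannot add a phantom server.
    return math.ceil(round(useful / (1 - container_overhead), 9))


# 100 servers at 10% virtualization overhead, containers at 0% and at 1%.
print(100 - servers_needed(100, 0.10))        # servers freed, ideal case
print(100 - servers_needed(100, 0.10, 0.01))  # servers freed with 1% container overhead
```

With a container overhead of 1% rather than zero, slightly fewer servers are freed, which matches the "almost 10" wording above.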

How do we get from an abstract "expensive" to real numbers? The biggest saving is on electricity. According to Intel, electricity accounts for almost 10% of all data center operating costs. Data centers, in turn, according to the US EPA, consume almost 1.5% of all electricity generated in the United States. So we not only save companies money, but also make a significant contribution to protecting the environment by consuming less electricity and using it more efficiently!

It is also worth noting that the largest computing systems in the world, the in-house clouds of Google, Yandex, Heroku and many, many others, are built using LXC technology, which once again demonstrates the advantage of containerization in cloud computing through the example of real companies.

In addition, both containerization technologies discussed here, OpenVZ and LXC, have free licenses and are being very actively developed, and LXC is present (albeit partially) on almost any Linux server installed in the last year and a half. Open licenses and an active developer community are the key to the technology's success in the near future.

In the next publications we will look at OpenVZ and LXC in detail, and we will definitely compare the two :)

Update: the series of publications continues here:

- Containerization on Linux in detail - LXC and OpenVZ. Part 1

Separately, I would like to thank Vasily Averin (Parallels) for explaining the particularly difficult aspects of the LXC technology.

Regards, Pavel Odintsov

CTO FastVPS LLC

Source: https://habr.com/ru/post/208650/
