Criticism of Bob Martin’s book Principles, Patterns, and Techniques for Agile Development in C #

If you ask ten developers about the best (or worthy) book on design, then at least 6 of them call Bob Martin's book Principles, Patterns and Agile Development Techniques . If, after this, to show them some interesting moments of Bob’s “uncle” scribbling, then most of them will clear their forehead with perplexity and change their opinion somewhat.

When reading this note, it is worthwhile to include common sense and not consider it as an attack on the holy. After all, it is quite possible that you read it several years ago, when there were fewer scars on the hands of littered projects, and with the word “patterns” the knees trembled a little. So maybe you should look at the "classics" from the height of the new experience?

From the first pages of the book, Martins are very weakly thrown onto the fan, starting the book as follows:

')

“My experience is that .NET programmers are often weaker than those written in Java or C ++. It is clear that there are exceptions. However, over and over again, watching my listeners, I was forced to conclude that .NET programmers usually have less knowledge of software development methods, patterns and design principles, etc. It often happened that the .NET programmers present were not at all aware of these fundamental concepts. This situation must be changed .

While working on this book, I often doubted whether to put my name on the cover of a book devoted to .NET. I asked myself if I wanted to be associated with .NET and with all the negative perceptions associated with this platform. But what's the point of denying? I'm a .NET programmer. Not! I am a flexible .NET programmer. And proud of it. "

Well, now let's see what we, illiterate .NET chicks, can learn from the comrades of the Martin.

In general, the description of all the principles of SOLID seemed to me very ambiguous. On the one hand, many of them (such as DIP and OCP) are formulated very rigidly: DIP prohibits the use of a variable of a particular type, and OCP implies an extension without recompilation. On the other hand, sometimes there are pragmatic notes that you need to use these principles wisely.

Here is an interesting point:

“Flexible teams apply these principles (meaning SOLID) only to eliminate odor; when nothing bad smells, then the principles do not apply. It would be a mistake to unconditionally adhere to a certain principle simply because it is a principle. Principles exist to help eliminate bad odors. These are not perfumes that need to be plentifully watered the entire system. Excessive adherence to principles leads to vice of unnecessary complexity. ”

On the one hand, this is a very pragmatic point of view, but on the other hand, why should we make such rigid principles, in order to introduce additional rules about when to follow them and when not?

In addition, it seems to me that the causes and effects are confused: the principles are needed primarily to determine the “nice” in design and they do not help in their elimination.

Below are descriptions of the principles, which cannot be broken.

“If during the creation of a derived class we are forced to make a change to the base class, then the design most likely has a flaw.”

Or there is another option: we live in the real world, in which evolutionary design is the key development approach.

“Does the LSP principle find application in real programs? Consider an example taken from a project I worked on several years ago . ... In the early 1990s ... "

The original book was released in the middle of 2006 (already after the release of C # 2.0 and generalizations), and here a few years ago it was the beginning of the 1990s.

"The Liskov substitution principle is one of the main tools for implementing the OCP principle."

I would say that one of the options for achieving OCP is polymorphism, and LSP says that polymorphism works the way we expect it to. There are other options for achieving OCP, for example, based on callback functions.

If we are already talking about the formalization of the LSP principle, then we need to talk about contracts, about their role for the correct implementation of inheritance. Contracts in the book are mentioned, but literally in passing.

The criticism of this principle is described in more detail in the article “A Critical Look at the Principle of Inversion of Dependencies” .

But here is one very emotional conclusion, which is very pleased:

“In fact, such dependency inversion is a great sign of object-oriented design. It doesn't matter what language the program is written in. If the dependencies are inverted, then we have an object-oriented design. Otherwise, the design is procedural. ”

Very controversial statement, agree!

Not many people know that the author of the principle Open-Close is not Bob Martin, but Bertrand Meyer in his book Object-Oriented Design of Software Systems . At the same time, even fewer people noticed that Bob Martin interprets this principle in his own way.

Definition from Bob Martin : program entities (classes, modules, functions, etc.) should be open for expansion, but closed for modification.

Thus, the modules have two main characteristics:

Definition by Bertrand Meier : modules must be able to be both open and closed.

In this case, the concepts of openness and closeness are defined as follows:

In other words, Meyer speaks about the openness / closeness of the module interface, I Martin - about the openness / closeness of the module as a whole, including its interface and implementation.

The possibility of extending the behavior of a module without recompilation should be described in the requirements for the module, and not be based on principles.

At the same time, it is amusing that as an example of the Open-Close principle, the Shape class is still given with the Draw method, which corresponds to the open-closed principle compared to the solution from structural design. But at the same time, he is silent about how open this solution will be for expansion and closed for modification if we want to add a new type of shape or a new operation to the base class Shape .

While reading the book, I repeatedly found myself thinking that the book was not written from scratch, but was exactly the second edition, the examples of which were translated into C #. Moreover, the book has been reworked "in the forehead," and not processed using the idioms of the .NET platform.

One of the best manifestations of this property are the examples given in the description of the Interface Segregation Principle principle.

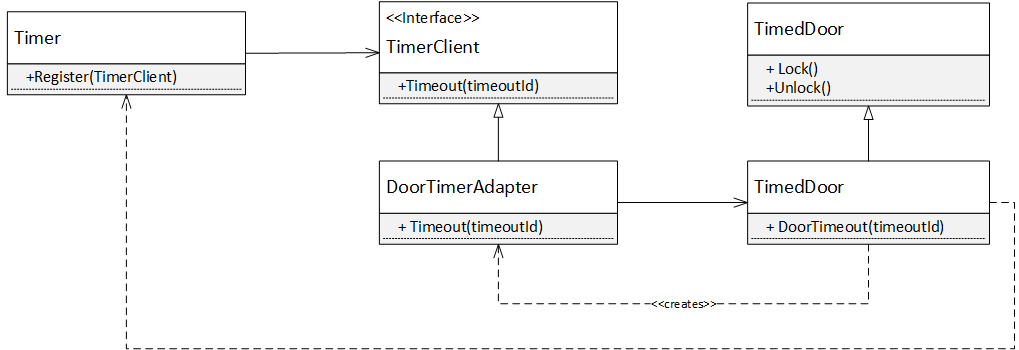

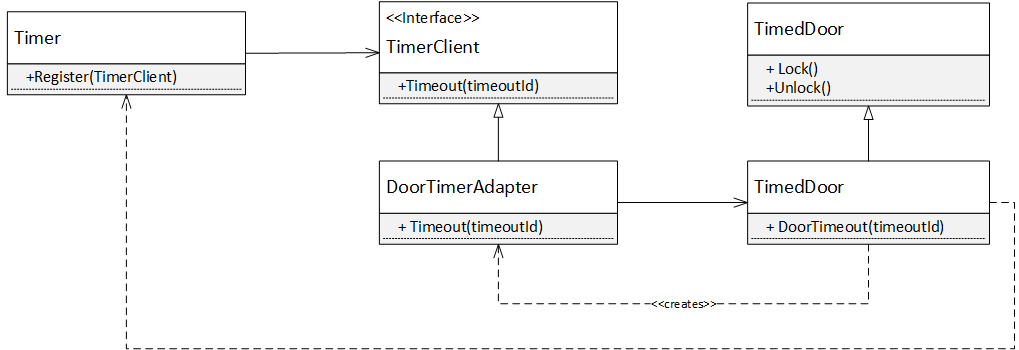

The principle of separation of interfaces is considered in two examples, one of which is a security system with the class Door (Door) and a subclass of TimedDoor , which should give out a sound signal if the door is not closed for some time. Here is one of the solutions to this task, given in the section “Separation through delegation”, which, according to the author, will satisfy the ISP:

Here is what the authors write about this: “This solution is consistent with the principle of the ISP and does not create connections between the Door customers and the Timer class. ... However, it is not too elegant. Every time we want to register a timeout, we have to create a new object. ”

After reading this section, I imagined what the younger Martin (who is the .NET th son) had to say after reading it:

“Pa, listen, I understand that this design option is not the last, and we are not writing a book from scratch, but still ...

After all, there are two extra classes, and for the DoorTimeout method in the TimedDoor class, which takes timeoutId, we’ll tear off personal belongings! After all, the timer in .NET is from the very beginning and the “observer” in them is built on the basis of events, and not on the basis of interfaces. So it turns out that we can throw out the TimerClient and DoorTimerAdapter , and make the DoorTimeout method closed (and also throw out this timeoutId).

So in terms of design, the code will be cleaner, and in terms of implementation, too. So, this example should be thrown out, and the chapter should be completely reworked under the idioms of the C # language, and the community may not appreciate such creativity! ”

However, such a conversation, apparently, was not! Therefore, there are some very unfortunate examples in this chapter that cannot be found in a normal C # application.

“Static diagrams describe the invariable logical structure of a program, namely, the elements — classes, objects , data structures — and the relationships between them.”

Objects are not part of the "immutable logical structure of the program."

"An association is a simple relation, in that one object stores a reference to another and calls methods of another object."

Association does not imply the preservation of links. If class A uses Singleton directly, then there is no link, but there is an association.

“Composition is a special case of aggregation. Again, I note that the implementation is indistinguishable from the general association. This time, the reason is that this relationship finds few uses in C # programs . ”

It must be said here that in controlled languages of pure composition, when the whole strictly controls the lifetime of the component, it is impossible to achieve. But if we speak from a logical point of view, the composition in the C # language is used quite often, if the developer does not abuse the principle of dependency inversion.

It is very interesting that the book describes principles and patterns, while the description of some very rarely relies on the description of others. So, many of the problems described in the DIP pattern are successfully solved by the observer, the strategy and the mediator, but when describing this principle, the patterns are hardly mentioned. Or, when describing Singleton's problems, it would be necessary to say that all its users will violate DIP and get problems with testability, but, again, these parallels are not made.

Now a little specifics.

“Both patterns, considered in this chapter, pursue one goal: to impose some sort of policy on a group of objects. The façade imposes a policy on top and a mediator on the bottom. The facade is visible and imposes restrictions. The Mediator is not visible and does not restrict anything. ”

Troll 80 Level Detected! In this way, we can find a common ground between any two concepts, and even if they are not there, we can combine them in one place due to their differences!

These patterns really have nothing in common, belong to different categories (facade - structural, mediator - behavioral) and are designed to solve different tasks at different levels of abstraction. The chapter of the book should be as solid (cohesive) as the classes and modules, and attempts to describe heterogeneous concepts in one place seem dubious.

“There are two ways to use a decorator to work with databases. You can decorate a business object by replenishing it with methods of reading and writing, or you can decorate a data object that already knows how to read and write itself, providing it with business rules. The latter approach is often used when working with object-oriented databases. "

WTF ?! The main essence of the decorator is that it allows you to change the behavior without changing the interface of the object to be decorated. If we “replenish it with reading and writing methods” and do not override any virtual methods, then the resulting class will not be an adequate decorator!

Yes, formally, the decorator allows you to add operations, but they still have to expand the functionality of an existing class, rather than adding fundamentally new operations. Nevertheless, the decorator is used “for adding clients to objects dynamically, transparent for clients”.

“This dependency cycle links all visited subclasses — all types of modems — together, making it difficult to incrementally compile visitors or add new subclasses to the hierarchy that is being visited.”

Obviously, the selection was simply not updated after the transition from C ++ to C #.

“Worse, the casting speed may depend on the width and depth of the hierarchy, and therefore it is difficult to predict.”

It is possible that for environments with multiple inheritance of implementations, large hierarchies may affect the performance of type conversions, but I could not find evidence of these words for .NET.

Chapter 36 discusses a transition table based on a simple vector (fields of type IList ), which gives the following advice:

“The disadvantage of this solution is primarily low productivity. The search in the jump table takes time. If the state machine is very large, then the search time may be noticeable. ”

I have always thought that the State pattern based on the transition table is one of the most successful solutions in terms of development cost / efficiency. But, of course, a typical solution involves the use of a dictionary that will provide O (1) the difficulty of finding a transition.

“The Pattern Method pattern is easy to use in practice, but it is not flexible enough. The Strategy pattern has the necessary flexibility, but you have to introduce an additional class, create an additional object and incorporate it into the system. Therefore, the choice between these patterns depends on whether you need the flexibility of the Strategy or you are ready to be satisfied with the simplicity of the Template method. ”

Insufficient flexibility of the pattern The templating pattern is not its flaw, but its particularity. Since developing classes for convenient extension by successors is not an easy task, the base class can provide a framework in which the heir will have to squeeze.

Bob Martin is a well-known supporter of TDD, but I prefer to think about design in terms of contracts. As a result, there are several bugs in the code of the book, since the implementation was "driven" by tests, and not by preconditions and postconditions. In more detail about it it is possible to read in article: "Contracts, a state and unit tests" .

With unit tests for Martin there are a few problems. First, a huge number of tests go to the database, which makes them essentially integration tests. I do not know whether in 2006 there was a clear separation between these two concepts, but now the difference between them is more or less obvious.

Fallen unit test means bugs in the code or at least the mismatch of the actual behavior of the intended. A fallen integration test doesn’t mean anything like that, since this may be a consequence of environmental problems. That is why unit tests are cutting builds and run on each build, and integration tests are in a separate build and are not run so often.

Secondly, Martin leads too many tests written on the principle: they isolated the interfaces of all the classes, they wrote the tests, HAPPINESS. This approach worries me that after working for a couple of months we will get a code with good coverage, but with a bad design.

You can, of course, say: “well, this is still the second edition of the book, and the original of the first edition was released as early as 2002, then everything was different!”. But the second edition came out in 2006, and the authors had time to update the material of the book based on the experience gained. But that's not the point. At the time of publication of the first edition (more precisely in a year), Evans published Domain-Driven Design , which shows more pragmatic views on design, on tests, on struggle with complexity, and on many other issues. Even then, in Evans, one can see thoughts about the benefits of immutability, about transferring logic to simple objects (called Value Objects), that one can almost always turn the design around so that complex composite objects become such high-level mediators.

As a result, already at the beginning of the null it was known that instead of “we select interfaces and potest”, there is another approach: select behavior into classes that do not depend on anyone (neither on other classes, nor on the external environment); then create higher-level classes that are built on the basis of proven lower-level classes. As a result, we get a hierarchical system built on a solid foundation with the interfaces selected only when the application needs the ability to substitute behavior during execution.

NOTE

I wrote more about this in the article “Testing design vs. Good design .

Interestingly, on the same topic, contracts vs. unit tests, there is an interesting InfoQ video featuring Martin and Coplien: Coplien and Martin Debate TDD, CDD and Professionalism

Well, the book provides examples of tests for which you need to select a keyboard. Here is one of them:

This test does not check anything: if it passes, it means that the code of the test and the class is the same, but it does not say that the operation is implemented correctly. Nevertheless, the test should be more abstract in comparison with the code under test, otherwise there will be no sense from them.

I already mentioned that when reading a book, it is felt that this is the second edition of the book, which was slightly adapted to C #, and not fully reworked using the idioms of a new programming language. In many places, Java idioms are used (method names with a small letter, CONSTANT_WRITTED_WOT_TAK), the Main method is added to the domain object, occasionally the concept of “package” is used instead of assemblies. The examples lack the standard .NET idioms: for observers, interfaces are used and no events are used; readonly modifier is not used, classes with resources do not implement IDisposable etc; generalizations are not used, although they appeared a year before the book appeared.

One of the most epic blunders is given in the next box, “Where did I go?”.

“Generally speaking, it is not necessary to include in the name some kind of orthogonal concept, especially if this concept can change. For example, what if we want to make ICommand not an interface, but an abstract class? Will we look for all references to ICommand and replace them with Command ? Are we going to recompile and deploy all the assemblies affected by this change? "

IMHO, it's easier to come to someone else's monastery with its own charter, than to come to another language with your idioms . If I program in Java, then I use local idioms for naming regardless of my own preferences, this is RIGHT! But most of all in this box I like the argument.

Junior Martin apparently did not tell the elder that the transition from the interface to the abstract class is a potential breaking change, regardless of whether we change the name of the entity or not: in any case, we will need to “re-compile and deploy all the assemblies affected by this change”! Attempting to replace the assembly on the fly with a similar change will lead to the collapse of the application!

Martins are very fond of the condition, therefore, when refactoring a generator of primes (p. 80), static fields were highlighted:

“Allocating three functions made me convert some local variables to static class fields. As a result, it became much clearer which variables are actually local, and which ones have a wider scope. ”

I do not understand the love of preserving intermediate results in the fields. If you want to separate local variables from non-local variables, then the method arguments are suitable for this, it is not necessary to use a shared state for this.

The shared state complicates the code (after all, now the order of calls is important), and also makes it impossible to get primes in parallel from different threads, which violates the generally accepted rule of the “Framework Design Guidelines”, which states that static class members must be thread-safe.

I'm not saying for the fact that the code does not use blocks of iterators that came out a year before the release of this book.

This code is discussed on page 251 and is not in the examples that come with the book. I put it here .

There are two types of problems in the code: firstly, there are trivialities, such as the lack of readonly, the absence of the IDisposable interface, the use of interfaces instead of events to implement the observer, the manual manipulation of threads when there are asynchronous operations from the first version of the .NET Framework and the absence of exception handling.

But most importantly, this code is an example of poor design. The responsibilities of the classes SocketServer , ServiceRunner and the heirs of SocketService are blurred and not clear.

For example, we have a class that implements the SocketService interface. What can be done in the Serve (Socket s ) method? Somehow he says that this method is called in another thread? Not. How can he know that the parent socket is closed and he also needs to interrupt the execution of his operations? And how to understand, is it possible to call blocking methods of type Receive in this method, or do you need to twist your own infinite loop?

It can be seen that if it is impossible to write tests to the code, then the resulting design of the Martins will be terrible. This code does not effectively use the built-in capabilities of the .NET Framework, but the funny thing is that it just does not work! Look at the Close method, running there is set to false , and then thread .Interrupt () is called, which will not lead to anything, since the current server thread now hangs waiting for a new connection in the serverSocket .AcceptSocket () method. A similar problem will occur with all client sockets if they call s .Receive () in the Server method: this whole city cannot be closed.

The full code can be found here .

Everything is not so fatal here, but the use of java-style constants, the lack of the readonly keyword for key and nodes fields, and the unsuccessful names of constants: LESS and GREATER , which in fact determine the subtree index, are striking.

Someone asked me why I rated this book on goodreads.com at 2 points. Well, in that case I have another question: is it really worth putting more?

I am very frightened that this book is almost a classic, although it contains a set of questionable principles, with questionable rules about when to follow them and when not. In the book, a vague description of patterns that are not tied to the principles described earlier. In the book, two buckets of slapstick that tests are driven by the example of fragile tests and code with a bunch of errors that would not exist if the design of the class had been thought at least a little in advance. The book is aimed at C # programmers, but even by the standards of 2006, the code in it is no good.

I am afraid that this book can lead to serious manifestations of the cult of cargo , if it is read by an inexperienced developer, and she really does not give anything to the experienced. So it turns out that the main advantage of this book is that it allows you to adequately participate in trolling about SOLID and other principles, but no more.

When reading this note, it is worthwhile to include common sense and not consider it as an attack on the holy. After all, it is quite possible that you read it several years ago, when there were fewer scars on the hands of littered projects, and with the word “patterns” the knees trembled a little. So maybe you should look at the "classics" from the height of the new experience?

From the first pages of the book, Martins are very weakly thrown onto the fan, starting the book as follows:

')

“My experience is that .NET programmers are often weaker than those written in Java or C ++. It is clear that there are exceptions. However, over and over again, watching my listeners, I was forced to conclude that .NET programmers usually have less knowledge of software development methods, patterns and design principles, etc. It often happened that the .NET programmers present were not at all aware of these fundamental concepts. This situation must be changed .

While working on this book, I often doubted whether to put my name on the cover of a book devoted to .NET. I asked myself if I wanted to be associated with .NET and with all the negative perceptions associated with this platform. But what's the point of denying? I'm a .NET programmer. Not! I am a flexible .NET programmer. And proud of it. "

Well, now let's see what we, illiterate .NET chicks, can learn from the comrades of the Martin.

Principles

In general, the description of all the principles of SOLID seemed to me very ambiguous. On the one hand, many of them (such as DIP and OCP) are formulated very rigidly: DIP prohibits the use of a variable of a particular type, and OCP implies an extension without recompilation. On the other hand, sometimes there are pragmatic notes that you need to use these principles wisely.

Here is an interesting point:

“Flexible teams apply these principles (meaning SOLID) only to eliminate odor; when nothing bad smells, then the principles do not apply. It would be a mistake to unconditionally adhere to a certain principle simply because it is a principle. Principles exist to help eliminate bad odors. These are not perfumes that need to be plentifully watered the entire system. Excessive adherence to principles leads to vice of unnecessary complexity. ”

On the one hand, this is a very pragmatic point of view, but on the other hand, why should we make such rigid principles, in order to introduce additional rules about when to follow them and when not?

In addition, it seems to me that the causes and effects are confused: the principles are needed primarily to determine the “nice” in design and they do not help in their elimination.

Below are descriptions of the principles, which cannot be broken.

Lsp

“If during the creation of a derived class we are forced to make a change to the base class, then the design most likely has a flaw.”

Or there is another option: we live in the real world, in which evolutionary design is the key development approach.

“Does the LSP principle find application in real programs? Consider an example taken from a project I worked on several years ago . ... In the early 1990s ... "

The original book was released in the middle of 2006 (already after the release of C # 2.0 and generalizations), and here a few years ago it was the beginning of the 1990s.

"The Liskov substitution principle is one of the main tools for implementing the OCP principle."

I would say that one of the options for achieving OCP is polymorphism, and LSP says that polymorphism works the way we expect it to. There are other options for achieving OCP, for example, based on callback functions.

If we are already talking about the formalization of the LSP principle, then we need to talk about contracts, about their role for the correct implementation of inheritance. Contracts in the book are mentioned, but literally in passing.

Dip

The criticism of this principle is described in more detail in the article “A Critical Look at the Principle of Inversion of Dependencies” .

But here is one very emotional conclusion, which is very pleased:

“In fact, such dependency inversion is a great sign of object-oriented design. It doesn't matter what language the program is written in. If the dependencies are inverted, then we have an object-oriented design. Otherwise, the design is procedural. ”

Very controversial statement, agree!

OCP

Not many people know that the author of the principle Open-Close is not Bob Martin, but Bertrand Meyer in his book Object-Oriented Design of Software Systems . At the same time, even fewer people noticed that Bob Martin interprets this principle in his own way.

Definition from Bob Martin : program entities (classes, modules, functions, etc.) should be open for expansion, but closed for modification.

Thus, the modules have two main characteristics:

- They are open for expansion. This means that the behavior of the module can be expanded. When the requirements for an application change, we add a new behavior to the module that meets the changed requirements. In other words, we can change the composition of the module's functions.

- They are closed for modification. The extension of module behavior is not associated with changes in the source or binary code of a module. The binary executable representation of the module, be it a composable library, a DLL, or an .exe file, remains unchanged.

Definition by Bertrand Meier : modules must be able to be both open and closed.

In this case, the concepts of openness and closeness are defined as follows:

- A module is called open if it is still available for expansion. For example, it is possible to expand the set of operations in it or add fields to its data structures.

- A module is called closed if it is available for use by other modules. This means that the module (its interface - in terms of hiding information) already has a well-defined final description. At the implementation level, the closed state of the module means that the module can be compiled, stored in the library and made available for use by other modules (its clients).

In other words, Meyer speaks about the openness / closeness of the module interface, I Martin - about the openness / closeness of the module as a whole, including its interface and implementation.

The possibility of extending the behavior of a module without recompilation should be described in the requirements for the module, and not be based on principles.

At the same time, it is amusing that as an example of the Open-Close principle, the Shape class is still given with the Draw method, which corresponds to the open-closed principle compared to the solution from structural design. But at the same time, he is silent about how open this solution will be for expansion and closed for modification if we want to add a new type of shape or a new operation to the base class Shape .

ISP

While reading the book, I repeatedly found myself thinking that the book was not written from scratch, but was exactly the second edition, the examples of which were translated into C #. Moreover, the book has been reworked "in the forehead," and not processed using the idioms of the .NET platform.

One of the best manifestations of this property are the examples given in the description of the Interface Segregation Principle principle.

The principle of separation of interfaces is considered in two examples, one of which is a security system with the class Door (Door) and a subclass of TimedDoor , which should give out a sound signal if the door is not closed for some time. Here is one of the solutions to this task, given in the section “Separation through delegation”, which, according to the author, will satisfy the ISP:

Here is what the authors write about this: “This solution is consistent with the principle of the ISP and does not create connections between the Door customers and the Timer class. ... However, it is not too elegant. Every time we want to register a timeout, we have to create a new object. ”

After reading this section, I imagined what the younger Martin (who is the .NET th son) had to say after reading it:

“Pa, listen, I understand that this design option is not the last, and we are not writing a book from scratch, but still ...

After all, there are two extra classes, and for the DoorTimeout method in the TimedDoor class, which takes timeoutId, we’ll tear off personal belongings! After all, the timer in .NET is from the very beginning and the “observer” in them is built on the basis of events, and not on the basis of interfaces. So it turns out that we can throw out the TimerClient and DoorTimerAdapter , and make the DoorTimeout method closed (and also throw out this timeoutId).

So in terms of design, the code will be cleaner, and in terms of implementation, too. So, this example should be thrown out, and the chapter should be completely reworked under the idioms of the C # language, and the community may not appreciate such creativity! ”

However, such a conversation, apparently, was not! Therefore, there are some very unfortunate examples in this chapter that cannot be found in a normal C # application.

UML

“Static diagrams describe the invariable logical structure of a program, namely, the elements — classes, objects , data structures — and the relationships between them.”

Objects are not part of the "immutable logical structure of the program."

"An association is a simple relation, in that one object stores a reference to another and calls methods of another object."

Association does not imply the preservation of links. If class A uses Singleton directly, then there is no link, but there is an association.

“Composition is a special case of aggregation. Again, I note that the implementation is indistinguishable from the general association. This time, the reason is that this relationship finds few uses in C # programs . ”

It must be said here that in controlled languages of pure composition, when the whole strictly controls the lifetime of the component, it is impossible to achieve. But if we speak from a logical point of view, the composition in the C # language is used quite often, if the developer does not abuse the principle of dependency inversion.

Patterns

It is very interesting that the book describes principles and patterns, while the description of some very rarely relies on the description of others. So, many of the problems described in the DIP pattern are successfully solved by the observer, the strategy and the mediator, but when describing this principle, the patterns are hardly mentioned. Or, when describing Singleton's problems, it would be necessary to say that all its users will violate DIP and get problems with testability, but, again, these parallels are not made.

Now a little specifics.

Facade and intermediary

“Both patterns, considered in this chapter, pursue one goal: to impose some sort of policy on a group of objects. The façade imposes a policy on top and a mediator on the bottom. The facade is visible and imposes restrictions. The Mediator is not visible and does not restrict anything. ”

Troll 80 Level Detected! In this way, we can find a common ground between any two concepts, and even if they are not there, we can combine them in one place due to their differences!

These patterns really have nothing in common, belong to different categories (facade - structural, mediator - behavioral) and are designed to solve different tasks at different levels of abstraction. The chapter of the book should be as solid (cohesive) as the classes and modules, and attempts to describe heterogeneous concepts in one place seem dubious.

Decorator

“There are two ways to use a decorator to work with databases. You can decorate a business object by replenishing it with methods of reading and writing, or you can decorate a data object that already knows how to read and write itself, providing it with business rules. The latter approach is often used when working with object-oriented databases. "

WTF ?! The main essence of the decorator is that it allows you to change the behavior without changing the interface of the object to be decorated. If we “replenish it with reading and writing methods” and do not override any virtual methods, then the resulting class will not be an adequate decorator!

Yes, formally, the decorator allows you to add operations, but they still have to expand the functionality of an existing class, rather than adding fundamentally new operations. Nevertheless, the decorator is used “for adding clients to objects dynamically, transparent for clients”.

Visitor

“This dependency cycle links all visited subclasses — all types of modems — together, making it difficult to incrementally compile visitors or add new subclasses to the hierarchy that is being visited.”

Obviously, the selection was simply not updated after the transition from C ++ to C #.

“Worse, the casting speed may depend on the width and depth of the hierarchy, and therefore it is difficult to predict.”

It is possible that for environments with multiple inheritance of implementations, large hierarchies may affect the performance of type conversions, but I could not find evidence of these words for .NET.

condition

Chapter 36 discusses a transition table based on a simple vector (fields of type IList ), which gives the following advice:

“The disadvantage of this solution is primarily low productivity. The search in the jump table takes time. If the state machine is very large, then the search time may be noticeable. ”

I have always thought that the State pattern based on the transition table is one of the most successful solutions in terms of development cost / efficiency. But, of course, a typical solution involves the use of a dictionary that will provide O (1) the difficulty of finding a transition.

Template Method and Strategy

“The Pattern Method pattern is easy to use in practice, but it is not flexible enough. The Strategy pattern has the necessary flexibility, but you have to introduce an additional class, create an additional object and incorporate it into the system. Therefore, the choice between these patterns depends on whether you need the flexibility of the Strategy or you are ready to be satisfied with the simplicity of the Template method. ”

Insufficient flexibility of the pattern The templating pattern is not its flaw, but its particularity. Since developing classes for convenient extension by successors is not an easy task, the base class can provide a framework in which the heir will have to squeeze.

Unit tests

Bob Martin is a well-known supporter of TDD, but I prefer to think about design in terms of contracts. As a result, there are several bugs in the code of the book, since the implementation was "driven" by tests, and not by preconditions and postconditions. In more detail about it it is possible to read in article: "Contracts, a state and unit tests" .

With unit tests for Martin there are a few problems. First, a huge number of tests go to the database, which makes them essentially integration tests. I do not know whether in 2006 there was a clear separation between these two concepts, but now the difference between them is more or less obvious.

Fallen unit test means bugs in the code or at least the mismatch of the actual behavior of the intended. A fallen integration test doesn’t mean anything like that, since this may be a consequence of environmental problems. That is why unit tests are cutting builds and run on each build, and integration tests are in a separate build and are not run so often.

Secondly, Martin leads too many tests written on the principle: they isolated the interfaces of all the classes, they wrote the tests, HAPPINESS. This approach worries me that after working for a couple of months we will get a code with good coverage, but with a bad design.

You can, of course, say: “well, this is still the second edition of the book, and the original of the first edition was released as early as 2002, then everything was different!”. But the second edition came out in 2006, and the authors had time to update the material of the book based on the experience gained. But that's not the point. At the time of publication of the first edition (more precisely in a year), Evans published Domain-Driven Design , which shows more pragmatic views on design, on tests, on struggle with complexity, and on many other issues. Even then, in Evans, one can see thoughts about the benefits of immutability, about transferring logic to simple objects (called Value Objects), that one can almost always turn the design around so that complex composite objects become such high-level mediators.

As a result, already at the beginning of the null it was known that instead of “we select interfaces and potest”, there is another approach: select behavior into classes that do not depend on anyone (neither on other classes, nor on the external environment); then create higher-level classes that are built on the basis of proven lower-level classes. As a result, we get a hierarchical system built on a solid foundation with the interfaces selected only when the application needs the ability to substitute behavior during execution.

NOTE

I wrote more about this in the article “Testing design vs. Good design .

Interestingly, on the same topic, contracts vs. unit tests, there is an interesting InfoQ video featuring Martin and Coplien: Coplien and Martin Debate TDD, CDD and Professionalism

A good example of terrible tests.

Well, the book provides examples of tests for which you need to select a keyboard. Here is one of them:

[Test] public void LoadingEmployeeDataCommand() { operation = new LoadEmployeeOperation(123, null); SqlCommand command = operation.LoadEmployeeCommand; Assert.AreEqual("select * from Employee " + "where EmpId=@EmpId", command.CommandText); Assert.AreEqual(123, command.Parameters["@EmpId"].Value); } This test does not check anything: if it passes, it means that the code of the test and the class is the same, but it does not say that the operation is implemented correctly. Nevertheless, the test should be more abstract in comparison with the code under test, otherwise there will be no sense from them.

.NET

I already mentioned that when reading a book, it is felt that this is the second edition of the book, which was slightly adapted to C #, and not fully reworked using the idioms of a new programming language. In many places, Java idioms are used (method names with a small letter, CONSTANT_WRITTED_WOT_TAK), the Main method is added to the domain object, occasionally the concept of “package” is used instead of assemblies. The examples lack the standard .NET idioms: for observers, interfaces are used and no events are used; readonly modifier is not used, classes with resources do not implement IDisposable etc; generalizations are not used, although they appeared a year before the book appeared.

One of the most epic blunders is given in the next box, “Where did I go?”.

“Generally speaking, it is not necessary to include in the name some kind of orthogonal concept, especially if this concept can change. For example, what if we want to make ICommand not an interface, but an abstract class? Will we look for all references to ICommand and replace them with Command ? Are we going to recompile and deploy all the assemblies affected by this change? "

IMHO, it's easier to come to someone else's monastery with its own charter, than to come to another language with your idioms . If I program in Java, then I use local idioms for naming regardless of my own preferences, this is RIGHT! But most of all in this box I like the argument.

Junior Martin apparently did not tell the elder that the transition from the interface to the abstract class is a potential breaking change, regardless of whether we change the name of the entity or not: in any case, we will need to “re-compile and deploy all the assemblies affected by this change”! Attempting to replace the assembly on the fly with a similar change will lead to the collapse of the application!

PrimeGenerator

Martins are very fond of the condition, therefore, when refactoring a generator of primes (p. 80), static fields were highlighted:

“Allocating three functions made me convert some local variables to static class fields. As a result, it became much clearer which variables are actually local, and which ones have a wider scope. ”

class PrimeGenerator { private static bool[] crossedOut; private static int[] result; public static int[] GeneratePrimeNumbers(int maxValue) { return null; } // crossedOut private static void UncrossIntegersUpTo(int maxValue) { } // result crossedOut private static void PutUncrossedIntegersIntoResult() { } } I do not understand the love of preserving intermediate results in the fields. If you want to separate local variables from non-local variables, then the method arguments are suitable for this, it is not necessary to use a shared state for this.

The shared state complicates the code (after all, now the order of calls is important), and also makes it impossible to get primes in parallel from different threads, which violates the generally accepted rule of the “Framework Design Guidelines”, which states that static class members must be thread-safe.

I'm not saying for the fact that the code does not use blocks of iterators that came out a year before the release of this book.

SocketServer

This code is discussed on page 251 and is not in the examples that come with the book. I put it here .

public interface SocketService { void Serve(Socket s); } public class SocketServer { private TcpListener serverSocket = null; private Thread serverThread = null; private bool running = false; private SocketService itsService = null; private ArrayList threads = new ArrayList(); public SocketServer(int port, SocketService service) { itsService = service; IPAddress addr = IPAddress.Parse("127.0.0.1"); serverSocket = new TcpListener(addr, port); serverThread = new Thread(new ThreadStart(Server)); serverThread.Start(); } public void Close() { running = false; serverThread.Interrupt(); serverSocket.Stop(); serverThread.Join(); WaitForServiceThreads(); } private void Server() { serverSocket.Start(); running = true; while (running) { Socket s = serverSocket.AcceptSocket(); StartServiceThread(s); } } // } There are two types of problems in the code: firstly, there are trivialities, such as the lack of readonly, the absence of the IDisposable interface, the use of interfaces instead of events to implement the observer, the manual manipulation of threads when there are asynchronous operations from the first version of the .NET Framework and the absence of exception handling.

But most importantly, this code is an example of poor design. The responsibilities of the classes SocketServer , ServiceRunner and the heirs of SocketService are blurred and not clear.

For example, we have a class that implements the SocketService interface. What can be done in the Serve (Socket s ) method? Somehow he says that this method is called in another thread? Not. How can he know that the parent socket is closed and he also needs to interrupt the execution of his operations? And how to understand, is it possible to call blocking methods of type Receive in this method, or do you need to twist your own infinite loop?

It can be seen that if it is impossible to write tests to the code, then the resulting design of the Martins will be terrible. This code does not effectively use the built-in capabilities of the .NET Framework, but the funny thing is that it just does not work! Look at the Close method, running there is set to false , and then thread .Interrupt () is called, which will not lead to anything, since the current server thread now hangs waiting for a new connection in the serverSocket .AcceptSocket () method. A similar problem will occur with all client sockets if they call s .Receive () in the Server method: this whole city cannot be closed.

Treemap

The full code can be found here .

internal class TreeMapNode { private static readonly int LESS = 0; private static readonly int GREATER = 1; private IComparable key; private object value; private TreeMapNode[] nodes = new TreeMapNode[2]; private int SelectSubNode(IComparable key) { return (key.CompareTo(this.key) < 0) ? LESS : GREATER; } } Everything is not so fatal here, but the use of java-style constants, the lack of the readonly keyword for key and nodes fields, and the unsuccessful names of constants: LESS and GREATER , which in fact determine the subtree index, are striking.

Conclusion

Someone asked me why I rated this book on goodreads.com at 2 points. Well, in that case I have another question: is it really worth putting more?

I am very frightened that this book is almost a classic, although it contains a set of questionable principles, with questionable rules about when to follow them and when not. In the book, a vague description of patterns that are not tied to the principles described earlier. In the book, two buckets of slapstick that tests are driven by the example of fragile tests and code with a bunch of errors that would not exist if the design of the class had been thought at least a little in advance. The book is aimed at C # programmers, but even by the standards of 2006, the code in it is no good.

I am afraid that this book can lead to serious manifestations of the cult of cargo , if it is read by an inexperienced developer, and she really does not give anything to the experienced. So it turns out that the main advantage of this book is that it allows you to adequately participate in trolling about SOLID and other principles, but no more.

Source: https://habr.com/ru/post/208454/

All Articles