Approaches to optimizing (web) applications

I don't know about you, but I personally love optimizing program performance. I love it when programs don't lag and sites open quickly. In this article I would like to describe some (basic) approaches to improving performance. They mostly concern web applications, but some of them apply to "regular" programs as well. I will touch on topics such as profiling, batch processing, asynchronous request processing, and so on. This article can be considered a continuation of “Strategies to optimize web applications using MySQL”.

When to optimize?

The very first question to ask yourself before you start optimizing anything is whether you are satisfied with the current performance. For example, if you are developing a game, what is the minimum FPS on “mid-range” hardware at “average” settings? If it drops below, say, 30, players will notice. Even if the average frame rate is 60 FPS, it is the minimum FPS that determines how the game feels: whether it “lags” or “runs smoothly”. If it is a website, how long does a page take to open for users? If it takes a fraction of a second, users will be more active and will enjoy working with your site.

Suppose you understand that you (or your boss, hehe) are not comfortable with the current performance of the application. What to do?

1. Measure

Any optimization should start with numbers. If you do not know how long individual parts of the application take to execute, you cannot optimize effectively. Without a complete picture of what is happening, you can spend a long time optimizing the parts that are easiest to optimize rather than the parts that are actually slow.


During development it is often convenient to use profiling to identify bottlenecks. For PHP, for example, there is a good profiler from Facebook called xhprof (it has been written about here repeatedly). If you have a large and unfamiliar project, a profiler is practically the only way to quickly find bottlenecks in the code, if there are any. However, a “regular” profiler is rarely used in day-to-day development, for several reasons at once:

- even the most "good" profilers significantly slow down the application

- for the results you need to go to a separate place or run separate viewers

- the results themselves need to be stored somewhere (the profiling data usually takes a significant amount of space).

For these (and perhaps some other) reasons, web developers often embed a so-called “debug panel” in the development version of the site instead of using a separate profiling tool (example). The panel summarizes, with the option to view details, various metrics that are collected by the code itself. Usually these are the number and execution time of SQL queries; less commonly, the number and execution time of requests to other services (for example, memcache). The total execution time, the size of the server response, and the amount of memory consumed are almost always measured as well.
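As a rough sketch of what such a panel collects, the class below counts calls and accumulates time per category, and reports total time and peak memory for the request. The class name `DebugMetrics`, its methods, and the category names are assumptions made for illustration; they do not come from any particular framework.

```php
<?php
// Hypothetical collector for a debug panel; all names are illustrative.
final class DebugMetrics
{
    /** @var array<string, array{count: int, time: float}> */
    private array $stats = [];
    private float $startedAt;

    public function __construct()
    {
        $this->startedAt = microtime(true);
    }

    // Runs $fn and attributes its wall-clock time to the given category.
    public function measure(string $category, callable $fn)
    {
        $start = microtime(true);
        try {
            return $fn();
        } finally {
            $elapsed = microtime(true) - $start;
            $this->stats[$category]['count'] = ($this->stats[$category]['count'] ?? 0) + 1;
            $this->stats[$category]['time'] = ($this->stats[$category]['time'] ?? 0.0) + $elapsed;
        }
    }

    // Data for the panel: per-category counters plus request-wide totals.
    public function summary(): array
    {
        return [
            'categories'  => $this->stats,
            'total_time'  => microtime(true) - $this->startedAt,
            'peak_memory' => memory_get_peak_usage(true),
        ];
    }
}

$metrics = new DebugMetrics();
$rows = $metrics->measure('sql', function () {
    usleep(1000); // stand-in for a real query
    return ['row'];
});
$metrics->measure('memcache', fn () => null); // stand-in for a cache call
print_r($metrics->summary());
```

In a real project the summary would be rendered at the bottom of the page in the development environment only.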

In most games you can turn on a “debug console” that, as a rule, shows the FPS, the number of objects in the scene, and so on. Until relatively recently, Minecraft displayed a chart with the time breakdown for each frame: the time spent on “physics” was drawn in one color and rendering in another.

You can measure many different things, but in web projects most of the time is usually spent in the database or on calls to other services. If you are not using a ready-made framework, or your framework does not include a debug panel, even code like this is enough to get started:

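    <?php
    // Wrapper that accumulates the total time spent in SQL queries.
    // $db is a mysqli connection (the legacy mysql_* API was removed in PHP 7).
    function sql_query(mysqli $db, $query) {
        $start = microtime(true);
        $result = mysqli_query($db, $query);
        // Initialize the counter on first use to avoid an "undefined index" notice.
        $GLOBALS['SQL_TIME'] = ($GLOBALS['SQL_TIME'] ?? 0) + (microtime(true) - $start);
        return $result;
    }

2. I said measure!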

You are doing great: everything is covered with timers and everything looks wonderful in the development environment, but things are different in production? Then you are measuring incorrectly or, more likely, not measuring everything. What about measuring performance in production? If you write in PHP, for example, there is a great tool called Pinba for measuring performance in a production environment; it sends its data over UDP. With it you can keep the debug panel for development and, in addition, get real-time statistics for your timers in the “live” environment. If you have never measured performance this way, you will most likely learn a lot of interesting things about how your site actually works.

The server returns the page in 100 ms, but you still get complaints that pages take a long time to open? Measure the size of the returned data and look at the performance counters built into the browser. Maybe your site needs a CDN for static content; maybe you just need to change your hosting provider. Until you measure where the bottleneck is, you can only guess.

3. Be lazy

You will often find code that, on every single request, initializes something big that the current page does not need. For example, some large init method that, for each web request, fetches the weather forecast from another site or runs “git pull origin master” in the project root as a means of automatic deployment. During performance analysis you will surely come across many things that can simply be thrown out of the code, or “wrapped in an if” so that the piece in question runs only when it is really required.
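One way to “wrap it in an if” without scattering conditions around the code is to register expensive initializers as closures and run them only on first use. This is a minimal sketch; the `LazyServices` class and the `weather` service are hypothetical names used only for illustration.

```php
<?php
// Hypothetical lazy registry: a service is built only when first requested.
final class LazyServices
{
    /** @var array<string, callable> */
    private array $factories = [];
    /** @var array<string, mixed> */
    private array $instances = [];

    public function register(string $name, callable $factory): void
    {
        $this->factories[$name] = $factory;
    }

    public function get(string $name)
    {
        if (!array_key_exists($name, $this->instances)) {
            if (!isset($this->factories[$name])) {
                throw new InvalidArgumentException("Unknown service: $name");
            }
            // The expensive work happens here, at most once per request.
            $this->instances[$name] = ($this->factories[$name])();
        }
        return $this->instances[$name];
    }
}

$calls = 0;
$services = new LazyServices();
$services->register('weather', function () use (&$calls) {
    $calls++;                // stand-in for a slow call to another site
    return ['temp' => 21];
});

// Pages that never ask for the forecast pay nothing for it.
// Pages that ask for it twice still trigger the factory only once.
$services->get('weather');
$services->get('weather');
```

Registering a closure is cheap, so the init method can stay in one place while the cost moves to the pages that actually use the service.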

It often turns out that large fragments of a page remain almost unchanged (for example, the page header and footer). If so, the obvious solution is either to pre-generate that content or to put it in a cache instead of rendering it anew every time.
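For fragments like these, a very small file-based cache is often enough. The sketch below is one possible shape, assuming a writable temp directory; the function name `cached_fragment`, the file naming scheme, and the TTL value are all assumptions made for this example.

```php
<?php
// Hypothetical fragment cache: keeps rendered HTML in a temp file with a TTL.
function cached_fragment(string $key, int $ttl, callable $render): string
{
    $file = sys_get_temp_dir() . '/fragment_' . md5($key) . '.html';
    if (is_file($file) && filemtime($file) + $ttl > time()) {
        $html = file_get_contents($file);
        if ($html !== false) {
            return $html; // fresh enough: skip rendering entirely
        }
    }
    $html = $render();
    // Write under a temporary name and rename, so that concurrent readers
    // never see a half-written file.
    $tmp = $file . '.' . uniqid('', true);
    file_put_contents($tmp, $html);
    rename($tmp, $file);
    return $html;
}

// Usage: the footer is rendered at most once per 10 minutes.
$renders = 0;
$key = 'footer_' . uniqid();
$render = function () use (&$renders) {
    $renders++;
    return '<footer>Example footer</footer>';
};
$first  = cached_fragment($key, 600, $render);
$second = cached_fragment($key, 600, $render);
```

This only suits content that is the same for all users and may be slightly stale.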

Be lazy in your code and in real life. Do not do the same (unnecessary) work over and over: do only what is really needed to answer the user's request, and either skip the rest or delegate it to someone else (for example, “heavy” tasks that are not very sensitive to delays can be handed to cron instead of being performed during the web request).

4. Use (asynchronous) batch processing.

In all sorts of projects you can run into the same trivial design error: processing dozens or hundreds of records one at a time instead of processing them all together in a single request. For example, if a page needs to show data for 30 products, make one query of the form "SELECT ... FROM table WHERE id IN (...)" instead of 30 queries of the form "SELECT ... FROM table WHERE id = ...". For most databases there is practically no difference in speed between one query that returns 30 rows and one (!) query that returns 1 row. As a rule, adding batch processing requires very few code changes, and in return you get a speedup of several times, sometimes hundreds of times. This applies not only to SQL queries but to any calls to external or internal services. Any network call adds significant latency to request processing, so in a web setting it is highly desirable to keep the number of such calls to a minimum.
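A minimal sketch of the single-query variant using PDO with bound parameters follows. The `products` table, its columns, and both function names are placeholders invented for this example.

```php
<?php
// Builds one "WHERE id IN (?, ?, ...)" query instead of N single-row queries.
// Table and column names are placeholders for illustration.
function build_products_query(array $ids): array
{
    // Normalize the input: integers only, no duplicates.
    $ids = array_values(array_unique(array_map('intval', $ids)));
    if ($ids === []) {
        return [null, []]; // nothing to fetch; "IN ()" is not valid SQL
    }
    $placeholders = implode(', ', array_fill(0, count($ids), '?'));
    return ["SELECT id, name, price FROM products WHERE id IN ($placeholders)", $ids];
}

// Fetches all requested products in a single round trip, keyed by id.
function fetch_products(PDO $db, array $ids): array
{
    [$sql, $params] = build_products_query($ids);
    if ($sql === null) {
        return [];
    }
    $stmt = $db->prepare($sql);
    $stmt->execute($params);
    $byId = [];
    foreach ($stmt->fetchAll(PDO::FETCH_ASSOC) as $row) {
        $byId[$row['id']] = $row;
    }
    return $byId;
}
```

Keying the result by id lets the calling code keep its per-product loop and replace each query with an array lookup, which is usually the smallest change that removes the extra round trips.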

Another way to speed up batch processing is to make it asynchronous. If your language allows it and you can group requests to different services together and execute them asynchronously, you also get a noticeable decrease in response time, and the more services there are, the greater the gain. This is poorly applicable to MySQL, but works well with, say, the slow Google Datastore API.
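In PHP, one common way to run several HTTP requests concurrently is the `curl_multi_*` family of functions from the curl extension. The sketch below assumes that extension is installed; the function name `fetch_all` and the timeout value are illustrative, and error handling is left out for brevity (per-transfer errors can be read with `curl_multi_info_read`).

```php
<?php
// Sketch: fetch several URLs concurrently with the curl_multi_* functions.
// Requires the curl extension; the timeout is an arbitrary example value.
function fetch_all(array $urls, int $timeoutSeconds = 5): array
{
    $multi = curl_multi_init();
    $handles = [];
    foreach ($urls as $key => $url) {
        $ch = curl_init($url);
        curl_setopt($ch, CURLOPT_RETURNTRANSFER, true);
        curl_setopt($ch, CURLOPT_TIMEOUT, $timeoutSeconds);
        curl_multi_add_handle($multi, $ch);
        $handles[$key] = $ch;
    }

    // Drive all transfers at once; the total time is close to that of the
    // slowest request rather than the sum of all of them.
    do {
        $status = curl_multi_exec($multi, $running);
        if ($running > 0) {
            curl_multi_select($multi, 1.0); // wait for activity instead of spinning
        }
    } while ($running > 0 && $status === CURLM_OK);

    $results = [];
    foreach ($handles as $key => $ch) {
        $results[$key] = curl_multi_getcontent($ch);
        curl_multi_remove_handle($multi, $ch);
    }
    // Handles are released when they go out of scope.
    return $results;
}
```

A call such as `fetch_all(['a' => $urlA, 'b' => $urlB])` returns the response bodies under the same keys, so three services that each answer in 100 ms cost roughly 100 ms in total instead of 300 ms.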

5. Simplify the complex, unravel the confusing

Let me give you an example: you have a hefty SQL query that does something, and (possibly) does it correctly, but sooo slowly, scanning millions of rows along the way. To optimize a complex SQL query, you first need to simplify it: throw out everything unnecessary, perhaps whole tables or subqueries. Only after you have removed everything unnecessary can you begin meaningful optimization. Otherwise you can waste a lot of time optimizing a fragment that does not affect the final result. Often, especially with MySQL, a satisfactory solution is to split the query into several simpler ones, each of which runs much faster than the original.

In systems developed by a large number of people, you often see “layers of hacks” instead of clear, well-designed code. This happens not only when programmers are underqualified, but also with ordinary iterative development if there is no constant refactoring. If you find that the problem lies in one of these places, first do a little refactoring: it helps you understand how the code works, and along the way you can immediately optimize or throw away some obviously superfluous things. It often happens that after simply putting the code in order its performance becomes satisfactory and the fragment no longer needs optimization.

6. “Expensive” features

You may well find that there are features used by 0.1% of users that nevertheless take up half of the server response time. If so, you can either rethink the functionality and offer a “cheaper” solution in its place, or make the feature optional and disabled by default. Users who need it will turn it back on.

7. Caching

If nothing else helps, or you have data that does not change over time, cache it. Why do I say the cache is worth using only when all other options are exhausted? Because as soon as you start putting mutable data into the cache, you have to keep the cache up to date, which is a very hard engineering problem.

Example: you cache individual table records by the primary key id. One record is one cache key of the form "table_<id>". Now imagine that you need to update several records matching some condition ("update table where <condition>"). How do you reset the cache in this case? One simple but very laborious solution is to first select the ids that match the condition, reset the cache entries for those ids, and then run the update. But what if another request “wedges in” between the select, the cache reset, and the update? Like this:

| Step | First request | Second parallel request |
|------|---------------|-------------------------|
| 1 | select id from table where <condition> | |
| 2 | memcache delete ids | |
| 3 | | memcache get "table_N": empty, the cache has already been reset |
| 4 | | select ... from table where id = N |
| 5 | | memcache set "table_N": the old data is written to the cache |
| 6 | update table set ... where <condition> | |
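The interleaving in the table can be replayed deterministically. In this sketch plain PHP arrays stand in for the database and for memcache, and the record id 7 and the `status` field are made up for the example; it only illustrates the order of operations, not real concurrent code.

```php
<?php
// Replays the six steps from the table with plain arrays standing in for
// the database and memcache (both are illustrative stand-ins).
$db    = [7 => ['id' => 7, 'status' => 'old']];
$cache = ['table_7' => $db[7]];

// 1. First request: select the ids that match the condition.
$ids = array_keys($db);
// 2. First request: delete those keys from the cache.
foreach ($ids as $id) {
    unset($cache["table_$id"]);
}
// 3. Second request: cache miss, because the key was just deleted.
$hit = $cache['table_7'] ?? null;
// 4. Second request: read the row from the database (still the old value).
$row = $db[7];
// 5. Second request: store that row in the cache.
$cache['table_7'] = $row;
// 6. First request: finally perform the update.
foreach ($ids as $id) {
    $db[$id]['status'] = 'new';
}

// The cache now disagrees with the database until the key expires or is reset.
echo "db: {$db[7]['status']}, cache: {$cache['table_7']['status']}\n";
// prints: db: new, cache: old
```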

Having two data sources instead of one inevitably leads to their desynchronization unless the code is written very carefully. In the case of a cache, this shows up as unpleasant artifacts on the site: inconsistent or outdated data, “broken” counters, and so on.

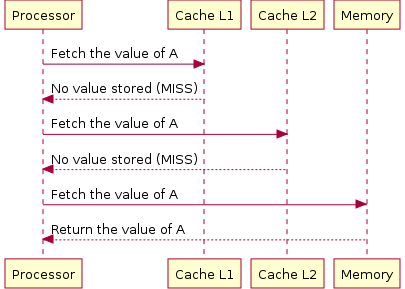

Of course, there are many examples where caching is used successfully to improve performance, but, again, only after all other possibilities have been considered. Operating systems cache operations on slow media (reads are simply cached and writes are buffered, which sometimes leads to corruption of data or of the file system structure). This contributes significantly to speed, but if you have ever compared working with an SSD and with a hard disk, you will never want to use the latter again, despite the caching :). Processors use several levels of cache because different kinds of memory differ in speed by orders of magnitude (compare an access time on the order of 1 ns for registers with 10 ms for a hard disk: a difference of 10 million times!), so a significant complication of the architecture is still justified (illustration taken from Wikipedia).

Conclusion

So, we have looked at the basic ways to improve the performance of (web) applications, mainly with the PHP + MySQL stack in mind as the most common one. I personally use the approaches above for optimization, and so far I have managed to speed up projects several times over, sometimes dozens of times, having spent just a few days on the biggest one :). Hopefully, even if this article does not teach you how to optimize, it will at least push you (and your colleagues) in the right direction, and the world will become a little better.

* The first illustration is taken from this page (according to Google)

Source: https://habr.com/ru/post/208138/
