Our experience in optimizing nginx to distribute video content

Our company serves many large Internet portals on various subjects. Projects of this kind run into difficulties as the audience grows and the load on the servers increases with it. One of our clients is actively promoting its video portal, and as a result the load began to grow rapidly. At some point two servers were no longer enough, so two more were added. Then two more, and eventually there were 12 servers. The load keeps growing, however, and horizontal scaling alone cannot be the answer. It was time to think about deeper optimization.

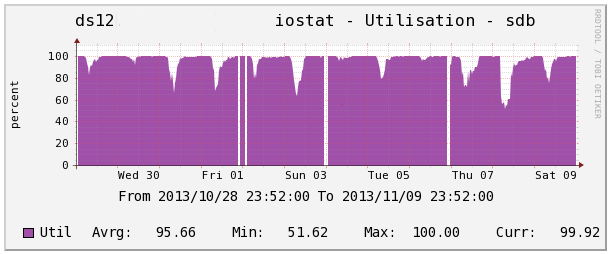

Everyone knows that nginx excels at serving static content, given a properly tuned configuration. This project is no exception: nginx serves all the static content. Mostly this is images and audio/video content, in particular video files ranging in size from 50 MB to 2 GB. Nginx does an excellent job, and our team has already gained considerable experience in fine-tuning it. Still, problems do arise, and their solutions are not always obvious. In our case, difficulties appeared at load peaks, when the portal published a fresh batch of video content. Put simply, the disks became the bottleneck. The disk subsystem could stay at 100% load for several hours in a row, as can be seen in the graph below.

As a result, content delivery was very slow and users were dissatisfied.


The part of the configuration file responsible for serving static content at the time:

    location ~* \.(jpg|jpeg|gif|png|ico|css|bmp|js|swf|flv|avi|djvu|mp3|mp4|3gp)$ {
        aio on;
        directio 512;
        output_buffers 1 512k;
        root /srv/www/htdocs;
    }

How can the situation be improved in such cases? There are many articles on Habr (and elsewhere) on this topic, and their comments contain many useful tips. Some believe that a fast SSD cache is the better solution for serving static content. Others think that a cache in VFS works well for a large number of small files, as long as it fits within the allocated partition. The SSD option is probably good, but not for everyone: sometimes it is physically impossible to add another disk, let alone a pair. Allocating part of the RAM to a cache partition is not the right solution either: the Linux kernel (we use only Linux) already does a good job of caching, and there is not always enough free RAM.

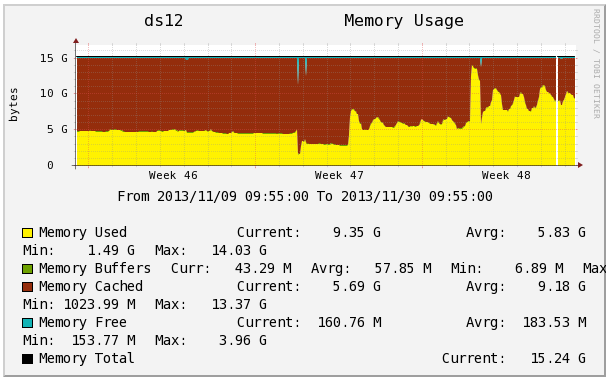

In our case, there was plenty of free RAM (up to 90% of 16 GB on each server). But how could it be put to good use? We decided to increase the output buffer from 512 KB to 8 MB:

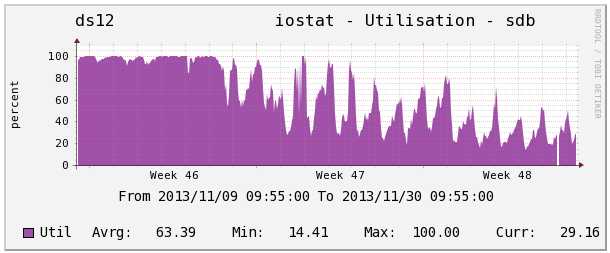

    location ~* \.(jpg|jpeg|gif|png|ico|css|bmp|js|swf|flv|avi|djvu|mp3|mp4|3gp)$ {
        aio on;
        directio 512;
        output_buffers 1 8m;
        root /srv/www/htdocs;
    }

This was enough to reduce the load on the disks by a factor of 1.5 to 2 on average. The web server's RAM consumption increased significantly at the same time (on average from 70% to 90% of the total).
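One possible variation, not taken from the original setup, is to apply the large buffer only to the big media files, so that requests for small assets do not each get an 8 MB buffer. The sketch below assumes the same document root and simply splits the original extension list into two groups; the split itself and the use of `sendfile` for the small files are assumptions made for illustration.

```nginx
# Sketch only: the grouping of extensions is an assumption, not the original config.

# Small static assets: served via sendfile, relying on the kernel page cache.
location ~* \.(jpg|jpeg|gif|png|ico|css|bmp|js|swf|djvu)$ {
    root /srv/www/htdocs;
    sendfile on;
}

# Large audio/video files: same directives as in the article's configuration.
location ~* \.(flv|avi|mp3|mp4|3gp)$ {
    root /srv/www/htdocs;
    aio on;               # asynchronous file reads
    directio 512;         # O_DIRECT for files of 512 bytes or larger
    output_buffers 1 8m;  # one 8 MB buffer per request being served
}
```

Since `output_buffers 1 8m` allows one 8 MB buffer per request being served, roughly 1,000 simultaneous downloads could take on the order of 8 GB, so the value is worth checking against the available RAM before raising it further.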

The graph below shows how the load on the disk changed after optimization:

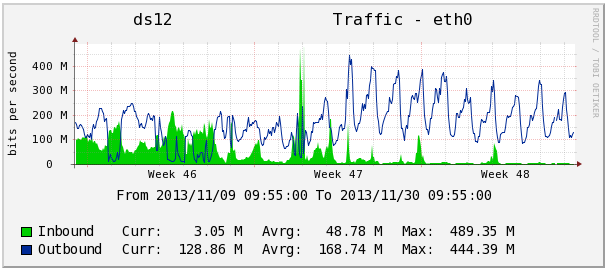

Network load graph. Outgoing traffic increased by a factor of 1.5 to 2 on average:

The graph of changes in memory consumption after optimization:

After these experiments with buffer settings, the content delivery rate at load peaks averaged 1 Mb/s per user, which is quite an acceptable result. The service is stable, and both the client and the users are satisfied.


Source: https://habr.com/ru/post/207834/
