Colossus: Google's distributed file system

Colossus (or GFS2) is Google’s proprietary distributed file system, deployed on production servers in 2009. Colossus is an evolutionary development of GFS. Like its predecessor, Colossus is optimized for working with large datasets, scales well, is highly available and fault-tolerant, and stores data reliably.

At the same time, Colossus solves some problems that GFS could not handle and eliminates some of its predecessor's bottlenecks.

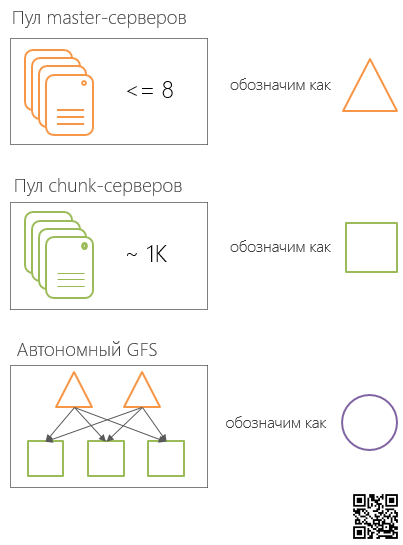

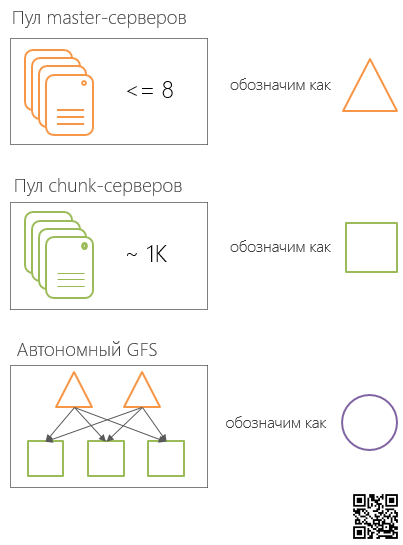

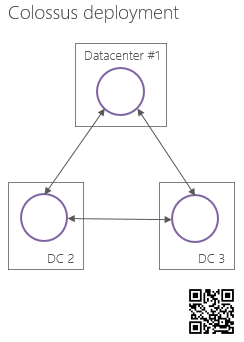

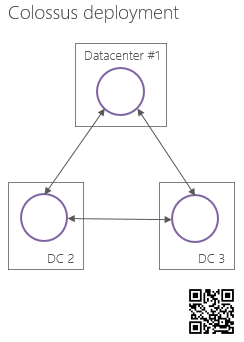

Dmitry Petukhov

MCP, PhD Student, IT Zombies,

a man with caffeine instead of red blood cells.

Why GFS2? The limitations of GFS

One fundamental limitation of the GFS + Google MapReduce combination, as of the similar HDFS + Hadoop MapReduce (Classic) combination (before YARN), was its exclusive focus on batch processing. Meanwhile, a growing number of Google services (social services, cloud storage, and map services) required much lower latencies than those typical of batch processing.

Thus, Google faced the need to support near-real-time responses for certain types of queries.

In addition, a GFS chunk is 64 MB in size (although the chunk size is configurable), which is generally a poor fit for services such as Gmail, Google Docs, and Google Cloud Storage: most of the space allocated for a chunk remains unused.

Reducing the chunk size would inevitably enlarge the metadata table that stores the file-to-chunk mapping. And since:

- accessing the metadata, keeping it up to date, and replicating it are the responsibility of the Master server;

- in GFS, as in HDFS, the metadata is loaded entirely into the Master server's RAM,

it follows that smaller chunks directly increase the amount of RAM the Master server needs.
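To put rough numbers on this trade-off, here is a back-of-the-envelope sketch. It assumes the figure reported for GFS in [1] (less than 64 bytes of master metadata per chunk), treats that per-chunk cost as independent of chunk size, assumes every chunk is full, and ignores the file namespace. These are illustrative numbers, not published Colossus figures.

```python
PB = 2 ** 50
MB = 2 ** 20
GiB = 2 ** 30
BYTES_PER_CHUNK = 64  # assumption: GFS reports "less than 64 bytes" per chunk in [1]

def master_ram_bytes(total_data: int, chunk_size: int) -> int:
    """Rough upper bound on the chunk metadata a Master keeps in RAM."""
    num_chunks = -(-total_data // chunk_size)  # ceiling division
    return num_chunks * BYTES_PER_CHUNK

for chunk_mb in (64, 1):
    ram = master_ram_bytes(PB, chunk_mb * MB)
    print(f"{chunk_mb:>2} MB chunks -> {ram / GiB:.0f} GiB of chunk metadata per PB")
# 64 MB chunks -> 1 GiB of chunk metadata per PB
#  1 MB chunks -> 64 GiB of chunk metadata per PB
```

Under these assumptions, a 64 times smaller chunk means 64 times more chunk metadata in the Master's RAM for the same volume of data.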

In addition, modern services are geographically distributed. Geo-distribution keeps a service available during force majeure events and shortens content delivery time to the user who requests it. But GFS, described in [1], has a classic “Master-Slave” architecture that does not lend itself to geographical distribution (at least not without significant cost).

Architecture

(Disclaimer: I have not found a single reliable source that fully describes the architecture of Colossus, so this description contains both gaps and assumptions.)

Colossus was designed to solve the GFS problems described above. The default chunk size was reduced to 1 MB, although it remains configurable. The Master servers' growing demand for RAM to hold the metadata table was addressed by the new “multi-cell” architecture of Colossus.
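The sources do not say how Colossus splits the metadata table across master cells. One common way to spread such a table, shown here purely as a hypothetical illustration, is to hash each file path to one of N master cells so that each cell keeps only its share in RAM. The function name, path format, and cell count are invented for the example.

```python
import hashlib
from collections import Counter

def master_cell_for(path: str, num_cells: int) -> int:
    """Pick the master cell that owns a file's metadata (hypothetical scheme).

    A stable hash (not Python's per-process salted hash()) keeps the
    mapping identical across processes and restarts.
    """
    digest = hashlib.sha256(path.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_cells

NUM_CELLS = 4  # hypothetical number of master cells
paths = [f"/gmail/user{u}/msg{m}" for u in range(100) for m in range(10)]
load = Counter(master_cell_for(p, NUM_CELLS) for p in paths)
for cell in range(NUM_CELLS):
    print(f"master cell {cell}: metadata for {load[cell]} files")
```

Plain modulo hashing remaps most files whenever the number of cells changes; a real design would more likely use consistent hashing or an explicit directory. The sketch makes no claim about what Colossus actually does.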

Colossus has a pool of master servers and a pool of chunk servers, each divided into logical cells. Master cells (up to 8 master servers per cell) relate to chunk-server cells as one to many: one master cell serves one or more chunk-server cells.

Within a data center, the master-server cells and the chunk-server cells they manage together form an autonomous file system, independent of other such groups (hereinafter SCI, Stand-alone Colossus Instance). SCIs are deployed in several Google data centers and interact with each other through a purpose-built protocol.
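The cell hierarchy described in the two paragraphs above can be captured as a small data model. This is an illustrative sketch, not Colossus's actual schema: the class names, fields, and validation rules are assumptions derived only from the article's statements (up to 8 masters per cell, one master cell serving one or more chunk-server cells, an SCI confined to one data center).

```python
from __future__ import annotations
from dataclasses import dataclass

MAX_MASTERS_PER_CELL = 8  # the article: "up to 8 master servers in a cell"

@dataclass
class ChunkCell:
    name: str
    chunk_servers: list[str]

@dataclass
class MasterCell:
    masters: list[str]
    chunk_cells: list[ChunkCell]  # one master cell -> one or more chunk cells

    def __post_init__(self) -> None:
        if not 1 <= len(self.masters) <= MAX_MASTERS_PER_CELL:
            raise ValueError(f"a master cell needs 1..{MAX_MASTERS_PER_CELL} masters")
        if not self.chunk_cells:
            raise ValueError("a master cell must serve at least one chunk cell")

@dataclass
class SCI:
    """Stand-alone Colossus Instance inside one data center (illustrative)."""
    datacenter: str
    master_cells: list[MasterCell]

    def __post_init__(self) -> None:
        # One-to-many: a chunk cell may belong to only one master cell.
        names = [cc.name for mc in self.master_cells for cc in mc.chunk_cells]
        if len(names) != len(set(names)):
            raise ValueError("a chunk cell is assigned to more than one master cell")

    def chunk_servers(self) -> list[str]:
        return [cs for mc in self.master_cells
                for cc in mc.chunk_cells for cs in cc.chunk_servers]

sci = SCI("dc-a", [MasterCell(masters=["m1", "m2", "m3"],
                              chunk_cells=[ChunkCell("cc1", ["cs1", "cs2"]),
                                           ChunkCell("cc2", ["cs3"])])])
print(sci.chunk_servers())  # ['cs1', 'cs2', 'cs3']
```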

Since Google engineers have not published a detailed description of Colossus internals, it is unclear how conflicts are resolved, both between SCIs and within a master cell.

A traditional way to resolve conflicts between peer nodes is a server quorum. But with an even number of participants, a quorum can fail to reach any decision: half vote “for” and half “against”. And since sources on Colossus often mention up to 8 nodes in a master cell, it is questionable whether conflicts there are resolved by a quorum.
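The tie problem is easy to check mechanically. This sketch assumes a simple strict-majority vote among all master servers in a cell (the sources do not say Colossus votes this way) and lists the vote splits in which neither side wins.

```python
from __future__ import annotations

def has_majority(votes: int, cluster_size: int) -> bool:
    """True if `votes` is a strict majority of `cluster_size` nodes."""
    return votes > cluster_size // 2

def tied_splits(cluster_size: int) -> list[tuple[int, int]]:
    """Two-way vote splits in which neither side reaches a majority."""
    return [(a, cluster_size - a) for a in range(cluster_size + 1)
            if not has_majority(a, cluster_size)
            and not has_majority(cluster_size - a, cluster_size)]

for n in (7, 8):
    tolerated = (n - 1) // 2  # node failures a majority quorum survives
    print(f"{n} nodes: majority={n // 2 + 1}, tolerated failures={tolerated}, "
          f"tied splits={tied_splits(n)}")
# 7 nodes: majority=4, tolerated failures=3, tied splits=[]
# 8 nodes: majority=5, tolerated failures=3, tied splits=[(4, 4)]
```

With 8 nodes, a 4:4 split decides nothing, and the cell still tolerates only 3 failed nodes, the same as with 7. This is one reason consensus groups are usually given an odd number of members.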

It is also not at all clear how one SCI knows what data another SCI operates on. If we assume that an SCI does not have this knowledge, then one of the following must be true:

- the client holds it (which is even less likely);

- a (conditional) Supermaster holds it (which, again, is a single point of failure);

- this information (in effect, critical state) resides in storage shared by all SCIs. That raises the expected problems with locking, transactions, and replication. PaxosDB copes well with replication; alternatively, the storage itself implements the Paxos algorithm (or a similar one).
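The third option relies on a consensus protocol to keep the shared critical state consistent. Below is a textbook single-decree Paxos, in memory, with no networking, failures, or persistence. It is not PaxosDB and not anything known about Colossus; the class and function names and the "file-42 -> SCI dc-a" ownership record are hypothetical.

```python
from __future__ import annotations
from dataclasses import dataclass
from typing import Optional

@dataclass
class Acceptor:
    promised: int = -1                    # highest ballot promised so far
    accepted_ballot: int = -1             # ballot of the last accepted value
    accepted_value: Optional[str] = None

    def prepare(self, ballot: int) -> tuple[bool, int, Optional[str]]:
        """Phase 1: promise to ignore lower ballots; report any accepted value."""
        if ballot > self.promised:
            self.promised = ballot
            return True, self.accepted_ballot, self.accepted_value
        return False, -1, None

    def accept(self, ballot: int, value: str) -> bool:
        """Phase 2: accept unless a higher ballot has been promised."""
        if ballot >= self.promised:
            self.promised = ballot
            self.accepted_ballot = ballot
            self.accepted_value = value
            return True
        return False

def propose(acceptors: list[Acceptor], ballot: int, value: str) -> Optional[str]:
    """One single-decree Paxos round; returns the chosen value or None."""
    majority = len(acceptors) // 2 + 1
    granted = [(b, v) for ok, b, v in (a.prepare(ballot) for a in acceptors) if ok]
    if len(granted) < majority:
        return None
    # Safety rule: adopt the value accepted under the highest ballot, if any.
    _, best_value = max(granted, key=lambda bv: bv[0])
    if best_value is not None:
        value = best_value
    acks = sum(a.accept(ballot, value) for a in acceptors)
    return value if acks >= majority else None

acceptors = [Acceptor() for _ in range(5)]
print(propose(acceptors, ballot=1, value="file-42 -> SCI dc-a"))  # file-42 -> SCI dc-a
print(propose(acceptors, ballot=2, value="file-42 -> SCI dc-b"))  # file-42 -> SCI dc-a
print(propose(acceptors, ballot=1, value="file-42 -> SCI dc-c"))  # None
```

The second call shows the property shared storage would rely on: once a majority has accepted a value, a later proposer with a higher ballot learns and re-proposes that value instead of overwriting it, while a proposer with a stale ballot gets nothing.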

Overall, Colossus looks more like a “black box” than a clear architectural blueprint for building geo-distributed file systems that operate on petabytes of data.

Conclusion

As you can see, Colossus changed almost every element of its predecessor, GFS, from the chunk to the cluster composition; still, it preserves continuity with the ideas and concepts embodied in GFS.

One of the most prominent customers of Colossus is Caffeine, the latest infrastructure behind Google's search services.

List of sources*

[1] Sanjay Ghemawat, Howard Gobioff, Shun-Tak Leung. The Google File System. ACM SIGOPS Operating Systems Review, 2003.

[10] Andrew Fikes. Storage Architecture and Challenges. Google Faculty Summit, 2010.

* Complete list of sources used to prepare this series.

Source: https://habr.com/ru/post/206986/
