Google Platform. 10+ years

Storing and processing data is a problem that mankind has been solving, with varying success, for thousands of years. The difficulty lies not only in the physical volume of the data (volume), but also in the rate at which the data changes (velocity) and in the variety of data sources (variety): what Gartner analysts designated in their articles [11, 12] as the "3V".

Computer science has recently come up against the problem of Big Data, and private companies, governments, and the scientific community are all waiting for IT to solve it.

And there is already a company in the world that has been coping with the Big Data problem, with varying success, for 10 years. My sense (a firm claim would require open data, which is not freely available) is that no commercial or non-profit organization works with more data than the company in question.


This company was the main contributor of ideas to the Hadoop platform and to many components of the Hadoop ecosystem, such as HBase, Apache Giraph, and Apache Drill.

As you have guessed, this is about Google.

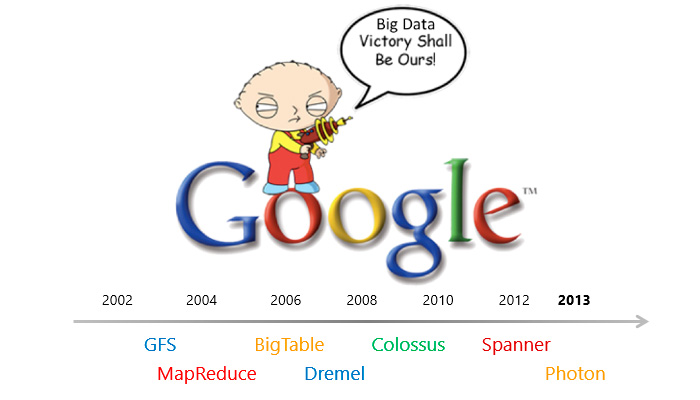

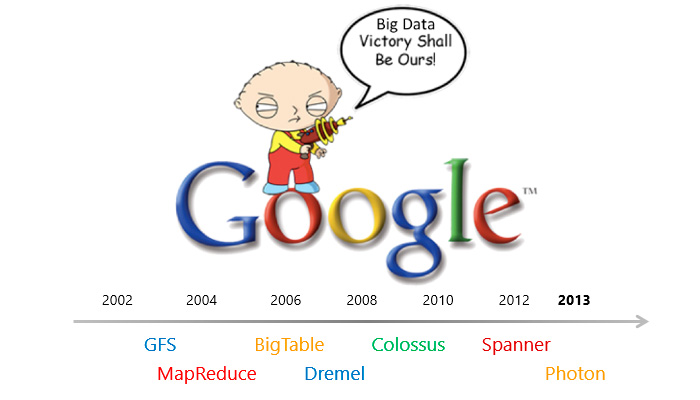

Chronology of Big Data at Google

Conventionally, the history of Big Data solutions at Google can be divided into 2 periods:

- 1st period (2003-2008): a set of principles and concepts was described that is now the de facto standard for processing large amounts of data on commodity hardware.

- 2nd period (from 2009 to the present): data processing technologies were described that, with a high degree of probability, will be used to solve Big Data problems in the near future.

2003-2008

During this period, Google engineers published freely available research papers describing 3 systems that Google uses to solve its problems:

- Google File System (GFS) [1] is a distributed file system;

- Bigtable [3] is a high-performance database for storing petabytes of data;

- MapReduce [2] is a programming model designed for distributed processing of large amounts of data.
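To make the MapReduce programming model from the list above more concrete, here is a minimal single-process sketch in Python. It only illustrates the map, group-by-key, and reduce phases; it is not Google's or Hadoop's implementation, and the names `map_words`, `reduce_counts`, and `run_mapreduce` are hypothetical helpers introduced for this example.

```python
from collections import defaultdict
from typing import Callable, Iterable, Iterator


def map_words(doc_id: str, text: str) -> Iterator[tuple[str, int]]:
    """Map phase: emit an intermediate (word, 1) pair for every word."""
    for word in text.lower().split():
        yield word, 1


def reduce_counts(word: str, counts: Iterable[int]) -> tuple[str, int]:
    """Reduce phase: sum all intermediate values that share one key."""
    return word, sum(counts)


def run_mapreduce(
    inputs: dict[str, str],
    mapper: Callable[[str, str], Iterator[tuple[str, int]]],
    reducer: Callable[[str, Iterable[int]], tuple[str, int]],
) -> dict[str, int]:
    """Run map, group intermediate pairs by key, then reduce.

    A real framework distributes these phases across many machines;
    here everything runs sequentially in one process (an assumption
    made purely to keep the sketch short).
    """
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in inputs.items():
        for out_key, out_value in mapper(key, value):
            groups[out_key].append(out_value)
    return dict(reducer(k, v) for k, v in sorted(groups.items()))


# Hypothetical input documents, used only for illustration.
docs = {"d1": "big data at google", "d2": "big table big query"}
word_counts = run_mapreduce(docs, map_words, reduce_counts)
print(word_counts)
```

The point of the model is that the user writes only the two small functions, while partitioning, grouping, and fault tolerance are left to the framework.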

The impact of the work published by Google on the first steps of the Big Data industry is difficult to overestimate.

The best-known implementation of the concepts described by Google is the Hadoop platform. The prototype of the HDFS file system is GFS; the ideas behind the HBase architecture are taken from Bigtable; and the Hadoop MapReduce computing framework (without YARN) implements the principles of Google's MapReduce framework.

Starting in 2008, Hadoop gained popularity over several years, and by 2010-2011 it had become the de facto standard for working with Big Data.

Today Hadoop is the "locomotive" of the Big Data world and has a huge influence on this segment of IT. But at one time the architectural approaches to building a Big Data platform described by Google had the same huge influence on Hadoop itself.

The Google platform kept developing all this time and adapted to new requirements: the search engine acquired new services, including ones better suited to interactive processing than to batch processing; the chunk sizes in GFS (the analogue of file system clusters) turned out not to be efficient for storing every type of data; and requirements appeared for geo-distribution and support for distributed transactions.

By 2009-2010, both inside Google and in academia, the merits and limitations of the approaches to building a Big Data platform that Google engineers described between 2003 and 2008 had been studied in sufficient detail. The Google platform itself had also evolved considerably by 2009.

2009-2013

So, in what can conditionally be called the 2nd stage of development of the Big Data platform at Google (2009-2013), researchers described the following software systems in varying degrees of detail:

- Colossus (GFS2) is a distributed file system that is a further development of GFS [10].

- Spanner is a scalable geo-distributed storage system with support for data versioning, a further development of Bigtable [8].

- Dremel is a scalable system for processing queries in near real time, designed for analyzing read-only nested data [4].

- Percolator is a platform for incremental data processing that is used to update Google's search indexes [9].

- Caffeine is Google's search infrastructure, which uses GFS2, a next-generation (iterative) MapReduce, and a next-generation Bigtable [6].

- Pregel is a scalable, fault-tolerant, distributed graph processing system [7].

- Photon is a scalable, fault-tolerant, geo-distributed system for processing data streams [5].
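Of the systems listed above, Pregel has a computation model that is easy to show in miniature: each vertex processes incoming messages in synchronized supersteps and goes inactive until new messages arrive. The sketch below is a single-process Python illustration of that vertex-centric idea, propagating the maximum value through a graph. It is an assumption-laden toy rather than Pregel's actual API; `pregel_max` and the sample graph are hypothetical.

```python
def pregel_max(graph: dict[str, list[str]], values: dict[str, int]) -> dict[str, int]:
    """Propagate the largest vertex value along edges, superstep by superstep.

    graph maps each vertex to its out-neighbours; every neighbour must
    itself be a key of graph. The input values are not modified.
    """
    values = dict(values)

    # Superstep 0: every vertex sends its own value to its out-neighbours.
    inbox: dict[str, list[int]] = {v: [] for v in graph}
    for vertex, neighbours in graph.items():
        for n in neighbours:
            inbox[n].append(values[vertex])

    # Later supersteps: only vertices that received messages do any work.
    while any(inbox.values()):
        outbox: dict[str, list[int]] = {v: [] for v in graph}
        for vertex, messages in inbox.items():
            if not messages:
                continue  # no messages: the vertex stays inactive
            best = max(messages)
            if best > values[vertex]:
                # The value improved, so tell the neighbours about it.
                values[vertex] = best
                for n in graph[vertex]:
                    outbox[n].append(best)
            # Otherwise the vertex sends nothing ("votes to halt").
        inbox = outbox

    # No messages in flight: every vertex has halted.
    return values


# Hypothetical 4-vertex chain with edges in both directions.
sample_graph = {"a": ["b"], "b": ["a", "c"], "c": ["b", "d"], "d": ["c"]}
sample_values = {"a": 3, "b": 6, "c": 2, "d": 1}
result = pregel_max(sample_graph, sample_values)
print(result)
```

The loop ends when no messages remain in flight, which mirrors the termination condition of the model: all vertices are inactive and nothing is left to deliver.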

The following articles in this series on the Google platform will review most of the internal Google software products listed above. With them Google successfully stores, structures, and searches data, detects spam, improves the effectiveness of ad impressions in its contextual advertising services, maintains data consistency in the Google+ social network, and so on.

Instead of a conclusion

In place of a conclusion, I will quote a person who has already proven his ability to predict the future of the Big Data industry, Cloudera CEO Mike Olson:

"If you want to know what the large-scale, high-performance data processing infrastructure of the future looks like, my advice would be to read the Google research papers that are coming out right now."

- Mike Olson, Cloudera CEO

List of sources used to prepare the series

Main sources

- [1] Sanjay Ghemawat, Howard Gobioff, Shun-Tak Leung. The Google File System. ACM SIGOPS Operating Systems Review, 2003.

- [2] Jeffrey Dean, Sanjay Ghemawat. MapReduce: Simplified Data Processing on Large Clusters. Proceedings of OSDI, 2004.

- [3] Fay Chang, Jeffrey Dean, Sanjay Ghemawat, Wilson C. Hsieh, Deborah A. Wallach, et al. Bigtable: A Distributed Storage System for Structured Data. Proceedings of OSDI, 2006.

- [4] Sergey Melnik, Andrey Gubarev, Jing Jing Long, Geoffrey Romer, et al. Dremel: Interactive Analysis of Web-Scale Datasets. Proceedings of the VLDB Endowment, 2010.

- [5] Rajagopal Ananthanarayanan, Venkatesh Basker, Sumit Das, Ashish Gupta, Haifeng Jiang, Tianhao Qiu, et al. Photon: Fault-tolerant and Scalable Joining of Continuous Data Streams, 2013.

- [6] Our new search index: Caffeine. Google Official Blog.

- [7] Grzegorz Malewicz, Matthew H. Austern, Aart J. C. Bik, James C. Dehnert, Ilan Horn, et al. Pregel: A System for Large-Scale Graph Processing. Proceedings of the 2010 International Conference on Management of Data, 2010.

- [8] James C. Corbett, Jeffrey Dean, Michael Epstein, Andrew Fikes, Christopher Frost, J. J. Furman, et al. Spanner: Google's Globally-Distributed Database. Proceedings of OSDI, 2012.

- [9] Daniel Peng, Frank Dabek. Large-scale Incremental Processing Using Distributed Transactions and Notifications. Proceedings of the 9th USENIX Symposium on Operating Systems Design and Implementation, 2010.

- [10] Andrew Fikes. Storage Architecture and Challenges. Google Faculty Summit, 2010.

Additional sources

- [11] Doug Laney. 3D Data Management: Controlling Data Volume, Velocity and Variety. META Group (later acquired by Gartner), 2001.

- [12] Christy Pettey, Laurence Goasduff. Gartner Says Solving 'Big Data' Challenge Involves More Than Just Managing Volumes of Data. Gartner, 2011.

- [13] The law of transition of quantitative changes into qualitative ones. Wikipedia, the free encyclopedia.

- [14] Google BigQuery. Google Developers.

- [15] Article resource: 0xCode.in, a blog on {Big Data, Cloud Computing, HPC}.

Post change history

Commit 01 [Dec 23, 2013]. Changed the title of the article.

- Google Platform. 2003-2013

+ Google Platform. 10+ years

Commit 02 [Dec 24, 2013].

+ link to the post with a description of Colossus.

Commit 03 [Dec 25, 2013].

+ link to post with description of Spanner.

Commit 04 [Dec 26, 2013].

+ link to the post with a description of Dremel.

Commit 05 [Dec 27, 2013].

+ link to post with Photon description.


Dmitry Petukhov

MCP, PhD Student, IT Zombies,

a man with caffeine instead of red blood cells.

Source: https://habr.com/ru/post/206972/
