FTCA Data Clustering Algorithm

Foreword

Walking around the English Internet, looking for a solution to one of the urgent topics at work, I came across a very interesting algorithm called “Fast Threshold Clustering Algorithm”. This clustering algorithm, which is remarkable, appeared relatively recently, namely in November of this year and the author is David Varadi. The link to the source will be available at the end of the article.

For a start, what is a clusterizer?

A clusteriser is a program that allows you to create from a certain set of objects - a group (hereinafter - clusters), formed according to its meaning or content, the number of these groups, while it may not be known in advance. These objects can be both documents and numbers, depending on what is required to be grouped.

There are many different clustering algorithms ranging from the usual K-means in a variety of implementations and interpretations, and ending with more difficult to understand algorithms that can include suffix trees (STC) or higher mathematics (Lingo, SVD, etc.). A common problem that overtakes a specific algorithm is an error. Error always has a place to be, especially when we are talking about documents that contain the real speech of a person. After all, the car is not always clear where to stick a fairy tale about Tsar Saltan: to politics, to the history of ancient Russia or to create a cluster? Objects can have many links with other objects for different properties. With this task they still fight.

And how to avoid this error?

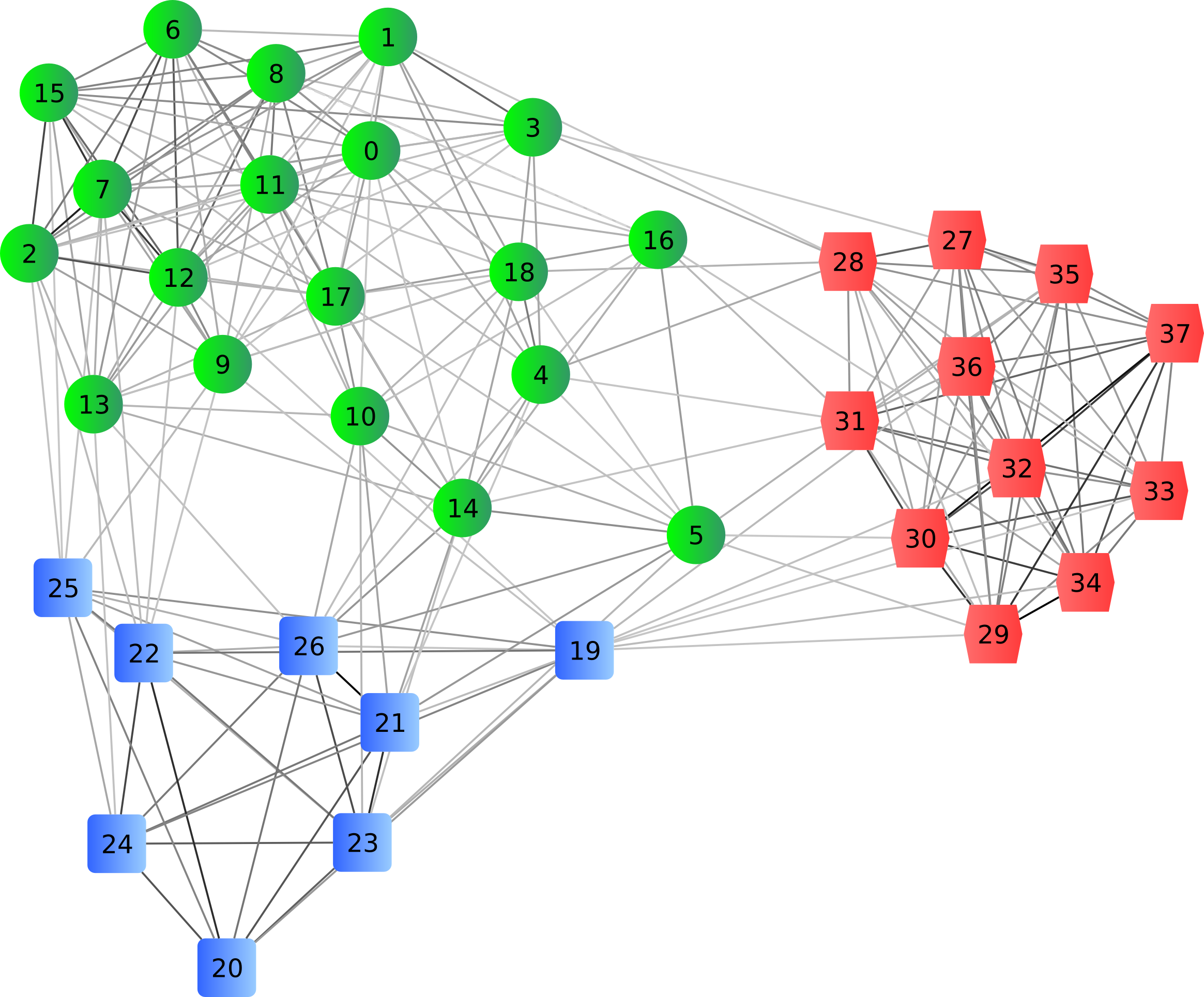

There are many ways to avoid errors. You can clearly highlight the key properties of the object, which by no means can be in one cluster, but can ideally fit the other. For example, in the example above, you can search for the presence of the words "lived-were", "kingdom-state", and the like and not include this document in certain clusters. And you can make a graph of dependencies between objects and already looking at this graph to make further conclusions (This method works, for example, SCT and Lingo). There are many solutions, even the crutch condition for satisfying a property on certain data sets can significantly improve the accuracy of clustering as a whole.

And what is the algorithm you talked about at the beginning?

The algorithm described by David attracted my attention not by its asymptotics or applicability in practice, but by its simplicity. It is built on ordinary human logic and is very similar to other algorithms.

')

To begin with, imagine that we have a set of objects that we want to group and a function that says how much two objects resemble each other in percentage terms:

F (x, y) = F (y, x) = ...%

- Correlation function of two objects.

(Actually, this is all that, as a rule, the human brain uses when grouping objects.)

Next, we introduce the concept of average similarity:

G (x, y1, y2, ..., yn) = SUM (F (x, yk)) / n

- this function shows how much the object looks like a group of other objects.

Algorithm

At the very first (and long) step, it is required, for convenience, to sort all our objects by their average correlation with other objects in ascending order. That is, we will get a list whose first element is the element with the lowest average correlation, and the last, on the contrary, with the highest.

Next we need to decide how many clusters to create and on what basis. What is the principle? And this is our Treshold - the same border above which objects are considered similar enough to be together in one group.

Clusters are created according to the following algorithm:

1) Sort the objects.

2) Take the first and last object from our sorted list.

• If their correlation is greater than our similarity boundary - we create one cluster whose first elements will be these two objects and find all the objects from the remaining ones, the average correlation of which with the whole cluster will be more trashhold.

• If their correlation is less than the similarity boundary, we create two clusters of elements into which will fall on the same principle as described above.

3) If only one object remains, we create a separate cluster and push it there. Otherwise, go back to step 1.

Everything, in the end, you should get a fairly stable cluster. It is noteworthy that this algorithm quite sensibly creates separate clusters for objects that do not fit anywhere and there is an opportunity to adjust the percentage similarity parameter. Thus, these objects create their own habitat and do not allow an error that does not pass through trashhold to get into this habitat and spoil the whole picture.

Also, if we go into logic a little, we can understand that the algorithm is very flexible in the sense that we can quite easily increase the accuracy at a loss of speed or vice versa. For example, adding a mobile trashhold function for each cluster, which will show what is the average connectivity of objects within the cluster. Thus, the error is not something that does not fall, it will decrease (although inserting an element into the cluster will start the recalculation of this treshchold, which will kill the performance). In the opposite direction, you can reverse the accuracy of the algorithm and increase productivity by adding an object to the cluster for similarity only to the standard, the core of the cluster.

Results

Summing up, I want to say that even I still have not understood why David began the name with the word "Fast", which is translated as "Fast." Of course, this algorithm cannot be called fast if you do not use the huge correlation matrix of each object with each + average values for each object, but calculating all of this is a very complicated operation, due to the possible complexity of the correlation function itself. Theoretically, the idea is very good, practically - it can process small amounts of data.

The usual asymptotic complexity of the algorithm is O (n ^ 3) of, possibly, a complex function F (x, y). When using the correlation matrix, we get O (n ^ 2) in speed and O (n ^ 2) in memory, which will clear all the plugs from the RAM. These are the cakes, dear Habrachane.

Next time I will try to tell you about the results of my testing of this algorithm.

Source: cssanalytics.wordpress.com/2013/11/26/fast-threshold-clustering-algorithm-ftca

Source: https://habr.com/ru/post/206904/

All Articles