Patching exdupe.exe, a smart deduplicating archiver

Some time ago I ran into an unpleasant problem: I had several virtual machines to back up. For me, a backup means not just one archive holding the latest copy, but a small set of archives made according to a given rotation scheme. The batch file for archiving was written quickly and worked without complaint, but the size... The backup set turned out to be huge. What made it especially sad was that the virtual machines were almost identical, and so were their backups. That is how I learned the words "deduplication" and "diff" and started looking for a compression utility with deduplication.

Different utilities took different approaches to compressing files with similar content, but they had one thing in common: you pick one source file and point the utility at the others. It computes the difference between the source and each of the others and archives the result. To restore, you specify the source file and the archive with the diff, and the utility reconstructs everything.

In short, the source had to be kept somewhere in unpacked form, all the time: both when archiving and when extracting. Picture it: I have five virtual machines and want to make an archive today containing the difference between today's machines and yesterday's. I have to:

- prepare a full copy of the entire farm as of yesterday,

- then run the utility, which computes the diff and archives it.

Now suppose I want to copy all this somewhere. I am not going to drag yesterday's (source) data around uncompressed, so I have to archive the source as well. If tomorrow I need to make a diff between yesterday and tomorrow, the source must be available uncompressed and untouched: either as a kept copy of yesterday's state or as an archive that has to be extracted first. And if I need to restore onto a new host, I first have to extract the source and only then apply the diff.

Disk space, fine, you can buy more. But time! So much time goes into extracting the source. Eventually I found an archiver that does everything right: it packs the source into an archive, with deduplication, and then makes diffs directly against the compressed source, and archiving and extraction speed is limited only by the speed of the hard disk. Great. But it does not work under Windows 2003. As you can guess, if everything had worked out of the box, I would not have written this article.
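To make the idea of a block-level diff concrete, here is a minimal sketch. It is not exdupe's algorithm, which this article does not describe: the fixed block size, the function names `split_blocks` and `new_blocks`, and the choice to keep whole blocks in a set (a real tool would more likely keep hashes) are all assumptions made for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <unordered_set>
#include <vector>

// Split data into fixed-size blocks (the last block may be shorter).
std::vector<std::string> split_blocks(const std::string& data, std::size_t block_size) {
    assert(block_size > 0);
    std::vector<std::string> blocks;
    for (std::size_t pos = 0; pos < data.size(); pos += block_size) {
        blocks.push_back(data.substr(pos, block_size));
    }
    return blocks;
}

// Return the blocks of `today` that occur neither in `yesterday`
// nor earlier in `today`. Only these would need to be stored in a diff.
std::vector<std::string> new_blocks(const std::string& yesterday,
                                    const std::string& today,
                                    std::size_t block_size) {
    std::unordered_set<std::string> known;
    for (const std::string& b : split_blocks(yesterday, block_size)) {
        known.insert(b);
    }
    std::vector<std::string> result;
    for (const std::string& b : split_blocks(today, block_size)) {
        // insert() reports whether the block was seen for the first time
        if (known.insert(b).second) {
            result.push_back(b);
        }
    }
    return result;
}
```

With nearly identical inputs, such as yesterday's and today's images of the same virtual machine, almost every block is already known, so the list of new blocks stays small.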

So, now to the main part.

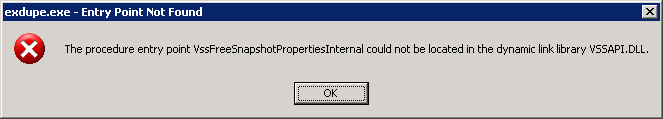

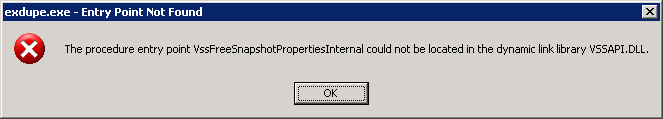

The archiver is called exdupe. It was freeware with partially available source code. Partially, because the deduplication library was linked statically and source was published only for the command-line utility (all of the code has since been published). Everything comes as a Visual Studio 2012 project. The program ran only on 64-bit Windows (mine is Win2003), and at startup it gave an error:

Entry Point Not Found

The procedure entry point VssFreeSnapshotPropertiesInternal could not be located in the dynamic link library VSSAPI.DLL.

I downloaded the source code right away from the program's website (the version I modified is 0.5.0).

The cause of the error is a version mismatch between the VSSAPI.dll library in my Windows and the import library (.lib) linked into the project.

Digging through the source, I realized that the easiest fix is simply to disable Shadow Copy support: remove the calls into the VSS library and stub out the functions responsible for accessing VSS. I must say the code was written by competent hands, albeit with some errors; there were only two such functions, both in the file "shadow\shadow.cpp".

Here is what we do:

- Find the function void unshadow (void).

- Comment out line 342:

      VssFreeSnapshotProperties(&prop.Obj.Snap);

  so that it becomes:

      //removing shadowing
      //VssFreeSnapshotProperties(&prop.Obj.Snap);

- Before line 330:

      ULONG fetched = 0;

  add:

      //remove shadowing
      return 1;

- Find the function int shadow (vector volumes).

- Comment out line 177:

      hr = ::CreateVssBackupComponents(&comp);

  so that it becomes:

      //remove shadowing
      //hr = ::CreateVssBackupComponents(&comp);

- Before line 157:

      // Initialize COM and open ourselves wide for callbacks by
      // CoInitializeSecurity.
      HRESULT hr;

  insert:

      //remove shadowing
      return 1;
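A possible alternative to editing lines by hand is a compile-time switch. The sketch below is hypothetical: `NO_SHADOW` and `shadow_sketch` are made-up names, the parameter type is assumed, and the real shadow.cpp code is not reproduced. It only shows the idea behind the steps above: the VSS calls are excluded from compilation, and the function returns 1 before doing any snapshot work. What the value 1 means to the caller is not stated in this article.

```cpp
#include <string>
#include <vector>

// Hypothetical macro: 1 builds the stub, 0 would build the original code.
#define NO_SHADOW 1

// Illustrative stand-in for a function like shadow(); the signature is assumed.
int shadow_sketch(const std::vector<std::string>& volumes) {
#if NO_SHADOW
    (void)volumes;  // unused in the stubbed build
    // remove shadowing: return early, the same value the manual patch returns
    return 1;
#else
    // The original VSS-based implementation would stay here, including
    // calls such as CreateVssBackupComponents. With NO_SHADOW set to 1
    // none of it is compiled, so no reference to it ends up in the binary.
    (void)volumes;
    return 0;  // placeholder so the sketch compiles either way
#endif
}
```

Unlike commented-out lines, a switch like this keeps the original code in the file, so the same source tree could still produce a build with shadow copy support.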

Before building, I recommend changing the executable name in the project properties so that it is not confused with the author's "exdupe.exe", for example to "exdupe-050-noshadow.exe".

We build, we run: it works!

The algorithm is really smart. It does eat memory and load the CPU cores, but this can be tuned: I run comfortably with two threads using the "-t2" switch. On an ordinary hard drive, deduplicating and compressing the source gave:

- 65.7 GB in 44 min = 24.8 MB/s

- compressed to 24.1 GB = compression ratio 0.37

Deduplication the next day:

- processing time 10 min 20 s = 106 MB/s

- diff compressed to 2.1 GB
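The figures above can be rechecked with a few lines of arithmetic. This sketch assumes decimal units (1 GB = 1000 MB), which reproduces the quoted speeds, and assumes the next-day run processed the same 65.7 GB; the function names are made up for illustration.

```cpp
#include <cassert>

// Average throughput in MB/s, assuming decimal units (1 GB = 1000 MB).
double throughput_mb_per_s(double gigabytes, double seconds) {
    assert(seconds > 0.0);
    return gigabytes * 1000.0 / seconds;
}

// Compressed size divided by original size (smaller is better).
double compression_ratio(double compressed_gb, double original_gb) {
    assert(original_gb > 0.0);
    return compressed_gb / original_gb;
}
```

For the first run, `throughput_mb_per_s(65.7, 44 * 60)` gives just under 24.9 MB/s and `compression_ratio(24.1, 65.7)` gives about 0.37. For the next-day run, `throughput_mb_per_s(65.7, 10 * 60 + 20)` gives about 106 MB/s, and the 2.1 GB diff is roughly 3% of the original size.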

Separately, I will note that the restriction to 64-bit versions of the OS is artificial and can be disabled. Archives created by the 64-bit program are extracted correctly by the 32-bit one, although it sets no speed records (and none should be expected).

I am not publishing the binaries or the full project sources because of license restrictions.

Exdupe utility website - www.exdupe.com

Sources: www.exdupe.com/old

Source: https://habr.com/ru/post/206534/
