Optimal storage architecture for virtual infrastructure backups

How do you design a backup storage architecture for a virtual infrastructure? First, answer the key question: “What is the main priority: minimizing disk space usage, performance, or cost?” The answer determines the entire future investment strategy for the backup infrastructure.

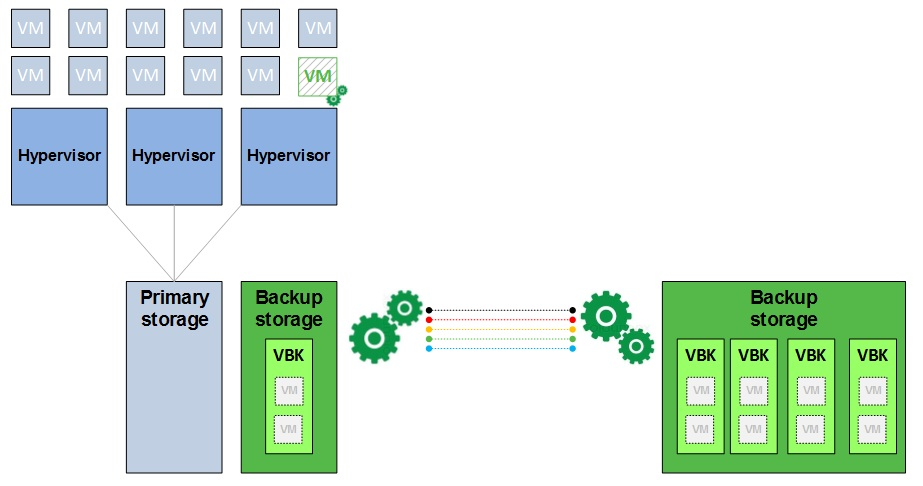

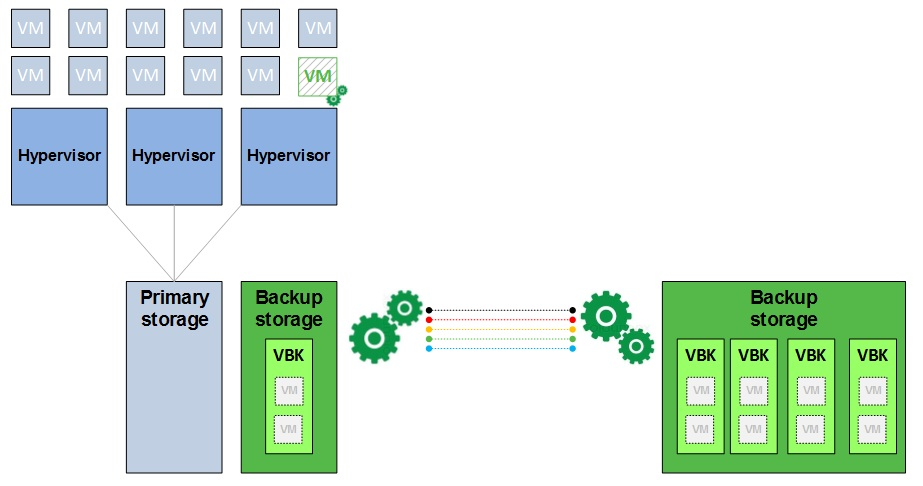

The figure shows one of the best options for a backup infrastructure architecture. The first-line storage system (Backup Storage in the figure, shown to the left of the data line) sits close to the original data on the production network. It should be as fast as possible (for example, built on SSDs) while remaining reasonably priced. To achieve this, size it to hold only the data most likely to be requested for recovery after a system failure or at the request of production network users. For example, if your statistics show that up to 80% of restore requests concern data created, modified, or deleted in the last 30 days, then only that data should be kept on the “first line” storage system. When selecting this storage system, consider the following recommended properties:

- High performance: High-performance storage on the “first line” lets you, on the one hand, copy or replicate changes in the original data as quickly as possible and, on the other hand, quickly recover the required data, which gives a good RPO and RTO, respectively.

- Small volume: High performance costs money, so the first-line storage capacity should not be large. Size this repository by the amount of data most likely to be requested for restoration. To find that amount, analyze your help desk statistics and determine the period within which 80% of the data recovery requests in your organization fall. The answer may be anywhere from 7 to 30 days; backups older than that should be moved to long-term storage (much larger, but slower and cheaper per unit of stored data).

- Storage is the source for subsequent backup tasks: Data must be copied from first-line storage further down the chain: to the long-term repository or even off-site, and possibly to several destinations at once. This storage system therefore needs high speed for read operations as well as for write operations. For more on “backup copy jobs” that use the WAN accelerator, see the webinar recording "Backup copy jobs with built-in WAN acceleration" (in English).
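
The sizing rule from the “Small volume” item can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `retention_window_days`, the sample request ages, and the nearest-rank percentile method are all hypothetical and do not come from any backup product.

```python
def retention_window_days(restore_ages_days, coverage_percent=80):
    """Smallest age N (in days) such that at least `coverage_percent` of
    restore requests asked for data no older than N days.

    `restore_ages_days` is a hypothetical input: for each help desk restore
    request, the age in days of the data that was requested.
    """
    if not restore_ages_days:
        raise ValueError("need at least one restore request")
    if not 1 <= coverage_percent <= 100:
        raise ValueError("coverage_percent must be between 1 and 100")

    ages = sorted(restore_ages_days)
    # Nearest-rank percentile, computed with integer arithmetic
    # (ceiling of coverage_percent * n / 100).
    rank = (coverage_percent * len(ages) + 99) // 100
    return ages[rank - 1]


# Made-up sample: ages (in days) of the data requested in ten restore tickets.
sample_ages = [1, 1, 2, 3, 3, 5, 7, 14, 45, 120]
print(retention_window_days(sample_ages))
```

Backups older than the returned window would be candidates for the long-term repository; in practice you would feed in real ticket data and probably round the result up to a convenient retention setting.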

So, to summarize, the advantages of this scheme for backup are as follows:

- New and changed production data is copied as quickly as possible (good RPO).

- Backups of the data most often requested for recovery sit on fast storage, as close as possible (in network terms) to the original data (good RTO).

- Copies kept off-site or in the long-term repository are created asynchronously from the copies made during the initial backup. The primary backup is therefore free of the additional risks of transferring large amounts of data over the WAN or slow network segments (chiefly the risk that the process drags on and starts to hurt the RPO).

If the repository stores many backups of the same infrastructure, it is wise to use data deduplication, which reduces the disk space required.

Specialized deduplicating storage systems, such as ExaGrid, Data Domain, HP StoreOnce, and others, are good choices for this task. The software deduplication built into Windows Server 2012 is also a good option. Veeam Backup works with both hardware and software deduplication on your storage and also has its own built-in deduplication, so you are free to choose which kind to apply; the choice depends on the structure of your data and the capabilities of your storage system. For example, ExaGrid EX series storage systems automatically keep frequently used data in undeduplicated (“rehydrated”) form, which lets them return that data quickly for read operations, so that network speed is the only limiting factor during recovery from backups. This matters especially when a virtual machine is started on the fly directly from a backup, for example in a sandbox, as with the Instant VM Recovery technology. You can read more about deduplication in backup in the ESG lab report "Virtual Machine Backup Without Compromise" (in English).
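
To make the space saving concrete, here is a minimal sketch of fixed-size block deduplication. It illustrates the general idea only and is not how Veeam, ExaGrid, or any other product mentioned above implements it; the function name `dedup_ratio`, the 4 KiB block size, and the sample data are assumptions chosen for the example.

```python
import hashlib


def dedup_ratio(backups, block_size=4096):
    """Return logical bytes / unique bytes for a list of backups (bytes objects),
    treating blocks with the same SHA-256 digest as duplicates."""
    seen = set()   # digests of blocks already stored
    logical = 0    # bytes written by the backup jobs
    unique = 0     # bytes that actually need disk space
    for data in backups:
        for offset in range(0, len(data), block_size):
            block = data[offset:offset + block_size]
            logical += len(block)
            digest = hashlib.sha256(block).digest()
            if digest not in seen:
                seen.add(digest)
                unique += len(block)
    return logical / unique if unique else 1.0


# Two made-up "full backups" of the same machine: one block out of four changed.
first = b"".join(bytes([i]) * 4096 for i in (0, 1, 2, 3))
second = b"".join(bytes([i]) * 4096 for i in (0, 1, 9, 3))
print(dedup_ratio([first, second]))
```

The more backups of the same infrastructure the repository holds, the more blocks repeat and the higher this ratio tends to be, which is why deduplication pays off mainly on long-term storage.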

Planning disk space for a backup repository is a daunting task, much like estimating a car's fuel consumption: actual mileage varies from case to case and depends on many factors. In the end, this brings us back to the original question: “What matters more: speed, cost, or disk space?” Subsequent questions, priorities, and constraints depend on the specific usage scenarios, the IT infrastructure, and the structure of the data itself.
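
As a starting point for such planning, a rough back-of-the-envelope estimate can still be useful. The sketch below assumes one full backup plus daily incrementals, a constant daily change rate, and a single overall data reduction ratio (compression and deduplication combined). All of these are simplifying assumptions introduced for illustration, and the function name `repository_size_gb` is hypothetical; real results will differ, just like real mileage.

```python
def repository_size_gb(source_gb, daily_change_rate, retention_days, reduction_ratio):
    """Rough repository size: one full backup plus (retention_days - 1)
    daily incrementals, all shrunk by the same reduction ratio."""
    if retention_days < 1:
        raise ValueError("retention_days must be at least 1")
    if reduction_ratio <= 0:
        raise ValueError("reduction_ratio must be positive")

    full = source_gb / reduction_ratio
    incrementals = (
        source_gb * daily_change_rate * (retention_days - 1) / reduction_ratio
    )
    return full + incrementals


# Made-up inputs: 10 TB of VMs, 5% daily change, 30 restore points, 2:1 reduction.
print(repository_size_gb(10_000, 0.05, 30, 2.0))
```

Running the estimate with a few different change rates and reduction ratios shows quickly how sensitive the result is to the structure of the data, which is exactly why the priority question has to be answered first.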

Source: https://habr.com/ru/post/206170/