Sensu - monitoring framework

A bit of history

In 2011, a movement emerged in the DevOps community under the hashtag #monitoringsucks, criticizing existing monitoring systems for their lack of flexibility. This presentation illustrates well what exactly did not suit them.

In short, people wanted a standard API for interaction between monitoring components, and wanted those components to exist in the first place, so that flexible and intelligent monitoring could be assembled from them.

This wave of discontent led to a broad discussion of the problems and drew attention to interesting tools such as Sensu and Riemann.


By 2013, the community's hashtag had changed to #monitoringlove, thanks to the progress of open-source monitoring tools.

Of the new tools, Sensu interests me most. I did not seriously consider Riemann, because at the moment it has no means of ensuring fault tolerance, and I do not like the idea of writing configs in Clojure.

This article is about Sensu: I will describe its basic principles and give an example of solving a typical monitoring task.

Key facts about Sensu:

* Written in Ruby, uses EventMachine (I would prefer Python, but ok).

* Configs in JSON

* Can use Nagios plugins.

* Works through RabbitMQ in PUSH mode: clients send check results to the server themselves, as soon as they are ready.

* There are DEB, RPM and even MSI packages.

* There are modules for Puppet and a cookbook for Chef.

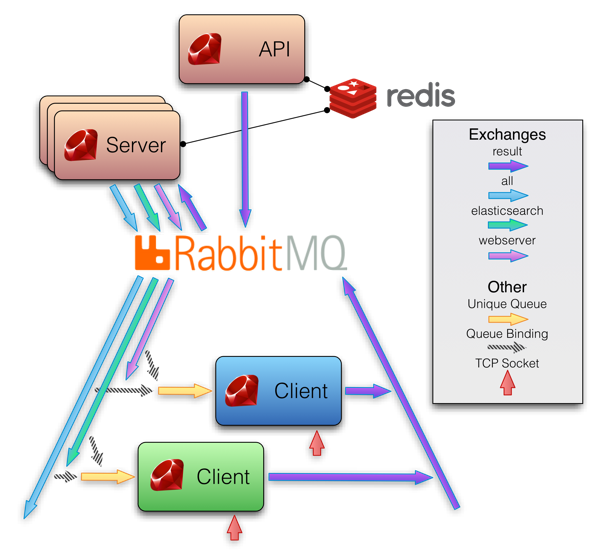

The diagram above shows how Sensu is laid out. In my opinion it is all very logical, and this design provides scaling and fault tolerance out of the box.

The system consists of three main components: sensu-server, sensu-api, and sensu-client on the monitored hosts. A sensu-dashboard is also available. Installation is trivial and covered in detail in the documentation; the latest version at the time of writing is 0.12. As already mentioned, there are DEB, RPM and MSI packages.

Basic concepts

To understand how it all works, you need to know the terminology. I do not intend to translate the official documentation, but I want to introduce the basic concepts so that the rest of the article is clear.

We have the following entities:

Client

This is a server with sensu-client installed and configured. It publishes information about itself to RabbitMQ and is thereby registered in the Sensu monitoring system. It receives a set of checks from the Sensu server, runs them, and puts the results into RabbitMQ.

To identify itself, it needs a configuration that looks something like this (taken from the documentation):

    {
      "client": {
        "name": "i-424242",
        "address": "127.0.0.1",
        "subscriptions": [
          "production",
          "webserver",
          "mysql"
        ]
      }
    }

Everything is fairly obvious except "subscriptions". Subscriptions are the list of roles associated with this server, and they determine which checks are run on it. Finer tuning is described in the official documentation. Two useful options: you can add arbitrary fields whose values can then be used in checks, and you can set the time interval after which an event is generated when the client goes offline.
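The way subscriptions tie clients to checks can be modelled in a few lines. This is only an illustrative sketch of the matching rule described above, not Sensu's actual implementation; the function name `checks_for_client` and the second check in the sample data are made up for the example.

```python
def checks_for_client(client, checks):
    """Return the names of checks whose subscribers overlap the client's subscriptions."""
    roles = set(client.get("subscriptions", []))
    return sorted(
        name
        for name, check in checks.items()
        if roles & set(check.get("subscribers", []))
    )


# Client definition from the example above.
client = {
    "name": "i-424242",
    "address": "127.0.0.1",
    "subscriptions": ["production", "webserver", "mysql"],
}

# Hypothetical check definitions, for illustration only.
checks = {
    "chef_client": {"command": "check-chef-client.rb", "subscribers": ["production"]},
    "redis_memory": {"command": "check-redis-memory.rb", "subscribers": ["redis"]},
}

print(checks_for_client(client, checks))  # prints ['chef_client']
```

The client is not subscribed to "redis", so only the check published to "production" applies to it.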

Check

Checks define the commands that will be run on clients, together with their parameters. They are fully compatible with Nagios plugins: the exit code determines whether the check passed, and STDOUT or STDERR serves as the data source. Checks are defined in the sensu-server configuration. A typical check looks like this (example from the documentation):

    {
      "checks": {
        "chef_client": {
          "command": "check-chef-client.rb",
          "subscribers": [
            "production"
          ],
          "interval": 60,
          "handlers": [
            "pagerduty",
            "irc"
          ]
        }
      }
    }

The interesting parts here are, again, the subscribers and the handlers. Subscribers determine which clients will run this command.

The set of handlers determines which commands will be executed when data from this check is processed. We will talk about them below.

Note that a check can be of type “metric”, in which case the data from its STDOUT is always passed straight to the handlers. This lets you send the metrics somewhere for storage or graphing (for example, to Graphite). Details are in the documentation.
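To make the plugin contract concrete, here is a minimal sketch of what a check and a metric produce. It assumes the usual Nagios convention (exit code 0 for OK, 1 for WARNING, 2 for CRITICAL) and Graphite's plaintext line format (path, value, Unix timestamp). The function names and sample values are hypothetical; real Sensu plugins are typically Ruby scripts.

```python
OK, WARNING, CRITICAL = 0, 1, 2  # exit codes used by Nagios-style plugins


def evaluate(value, warn, crit):
    """Map a measured value onto an exit code and a one-line message."""
    if value >= crit:
        return CRITICAL, "CRITICAL: %d >= %d" % (value, crit)
    if value >= warn:
        return WARNING, "WARNING: %d >= %d" % (value, warn)
    return OK, "OK: %d" % value


def graphite_line(path, value, timestamp):
    """Format one metric in Graphite's plaintext form: path, value, Unix time."""
    return "%s %s %d" % (path, value, timestamp)


# A check prints its message to STDOUT and exits with the status code;
# a real plugin would finish with sys.exit(status).
status, message = evaluate(60000, warn=51200, crit=204800)
print(message)

# A metric check prints lines like this one, which are handed to the handlers.
print(graphite_line("stats.client1.redis.db0.keys", 42, 1386000000))
```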

Handlers

Handlers define the commands that will be run on the monitoring server when data arrives from checks, together with their parameters. For example, this handler runs the command mail -s 'sensu event' email@address.com whenever any check returns a non-zero exit code (example from the documentation):

    {
      "handlers": {
        "mail": {
          "type": "pipe",
          "command": "mail -s 'sensu event' email@address.com"
        }
      }
    }

All straightforward. There are plenty of handlers in the plugin repository: you can send events to PagerDuty, send email, or send GELF messages to Graylog2. Details are in the documentation.
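A "pipe" handler is just a command that receives the event as JSON on its standard input. The sketch below shows the general shape of such a handler. The event layout used here (a "client" object with "name", and a "check" object with "name", "status" and "output") is an assumption for illustration, so verify it against the events your Sensu version actually emits.

```python
import io
import json

STATUS_NAMES = {0: "OK", 1: "WARNING", 2: "CRITICAL"}


def format_event(event):
    """Build a one-line summary from an event dictionary."""
    check = event["check"]
    status = STATUS_NAMES.get(check["status"], "UNKNOWN")
    return "%s %s/%s: %s" % (
        status,
        event["client"]["name"],
        check["name"],
        check["output"].strip(),
    )


def handle(stream):
    """Read one JSON event from a file-like object and summarize it."""
    return format_event(json.load(stream))


# Hypothetical event; a real handler would call handle(sys.stdin) instead.
sample_event = {
    "client": {"name": "client1", "address": "192.168.1.21"},
    "check": {
        "name": "redis_processes",
        "status": 2,
        "output": "Found 0 matching processes\n",
    },
}

print(handle(io.StringIO(json.dumps(sample_event))))
```

The summary string could then be mailed, posted to a chat, or written to a log, which is all a notification handler really does.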

All of the above is already enough to build a working system. There are also mutators, extensions and the API, but they are not important for now.

Getting to the fun

Sensu is positioned as a “monitoring framework”, which means that out of the box it has none of the features familiar from enterprise systems like Zabbix. All functionality is added through plugins.

Let's try to do something useful with a simple task: run Redis checks on the clients and, in case of problems, display an alert on a Dashing panel, send a message to Graylog2 for the record, and email admin@example.com. We will also collect metrics from Redis and send them to Graphite for storage, and then run checks against the aggregated keys.

The clients have addresses 192.168.1.2N. Graphite is deployed at 192.168.1.80:8082, and RabbitMQ and Redis are also at 192.168.1.80. Graylog2 listens on 192.168.1.81, and Dashing is deployed there as well.

Configuration

Let's start with the client configuration.

Suppose we have N servers in the redis role.

The client configuration, placed on each of the N clients, looks like this:

/etc/sensu/config.json

    {
      "client": {
        "graphite_server": "192.168.1.80:8082",
        "address": "192.168.1.2N",
        "name": "clientN",
        "subscriptions": [
          "redis"
        ]
      },
      "rabbitmq": {
        "vhost": "/sensu",
        "host": "192.168.1.80",
        "password": "password",
        "port": 5672,
        "user": "sensu"
      }
    }

All other configuration files are only on the Sensu server.

Basic settings:

/etc/sensu/conf.d/settings.json

    {
      "api": {
        "host": "192.168.1.80",
        "port": 4567
      },
      "redis": {
        "host": "192.168.1.80",
        "port": 6379
      },
      "rabbitmq": {
        "vhost": "/sensu",
        "host": "192.168.1.80",
        "password": "password",
        "port": 5672,
        "user": "sensu"
      },
      "mailer": {
        "mail_from": "sensu@example.com",
        "smtp_port": "25",
        "mail_to": "admin@example.com",
        "smtp_address": "localhost"
      },
      "dashing": {
        "auth_token": "YOUR_AUTH_TOKEN",
        "host": "http://192.168.1.81:8088"
      },
      "gelf": {
        "server": "192.168.1.81",
        "port": "12201"
      }
    }

As you can see, we configured not only Sensu itself but also the parameters for the dashing, gelf and mailer handlers.

Now we define these handlers themselves:

/etc/sensu/conf.d/handlers.json

    {
      "handlers": {
        "default": {
          "type": "set",
          "handlers": [
            "mailer",
            "dashing",
            "gelf"
          ]
        },
        "gelf": {
          "type": "pipe",
          "command": "/etc/sensu/handlers/gelf.rb"
        },
        "mailer": {
          "type": "pipe",
          "command": "/etc/sensu/handlers/mailer.rb"
        },
        "dashing": {
          "type": "pipe",
          "command": "/etc/sensu/handlers/dashing.rb"
        },
        "graphite": {
          "mutator": "only_check_output",
          "type": "amqp",
          "exchange": {
            "durable": true,
            "type": "topic",
            "name": "metrics"
          }
        }
      }
    }

It's simple. Note that we send data to Graphite over AMQP. The handler scripts must be placed on the monitoring server in /etc/sensu/handlers.

Now we will configure the checks that will be performed on the clients:

/etc/sensu/conf.d/checks.json

    {
      "checks": {
        "redis_processes": {
          "interval": 60,
          "command": "/etc/sensu/plugins/processes/check-procs.rb -p redis -c 8 -C 0 -w 7 -W 1",
          "subscribers": [
            "redis"
          ],
          "handlers": [
            "default"
          ]
        },
        "redis_memory": {
          "dependencies": [
            "redis_processes"
          ],
          "command": "/etc/sensu/plugins/redis/check-redis-memory.rb -c 204800 -w 51200",
          "interval": 60,
          "subscribers": [
            "redis"
          ],
          "handlers": [
            "default"
          ]
        },
        "redis_metric": {
          "handlers": [
            "graphite"
          ],
          "interval": 60,
          "dependencies": [
            "redis_processes"
          ],
          "command": "/etc/sensu/plugins/redis/redis-graphite.rb --scheme stats.:::name:::.redis",
          "subscribers": [
            "redis"
          ],
          "type": "metric"
        },
        "redis_keys_from_graphite": {
          "interval": 60,
          "command": "/etc/sensu/plugins/graphite/check-data.rb -s :::graphite_server::: -t stats.:::name:::.redis.db0.keys -w 500 -c 900 -a 120",
          "subscribers": [
            "redis"
          ],
          "dependencies": [
            "redis_processes"
          ],
          "handlers": [
            "default"
          ]
        }
      }
    }

The checks are performed by Sensu plugins, which need to be deployed on the clients in /etc/sensu/plugins. For those familiar with Nagios there is nothing new here. The only interesting part is the redis_metric metric, which puts data into Graphite; the redis_keys_from_graphite check then fetches that data for the last 10 minutes and checks it. In general, almost every plugin has a --help option that gives quite reasonable usage information.
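The `:::name:::` and `:::graphite_server:::` placeholders in the commands are filled in from the fields of each client's definition, which is why `graphite_server` was added to the client config. The substitution can be pictured roughly as follows. This is an illustrative sketch, not Sensu's own code; in particular, raising an error on a missing attribute is a choice made for the example.

```python
import re

# A token is an attribute name wrapped in triple colons, e.g. :::name:::
TOKEN = re.compile(r":::(\w+):::")


def substitute_tokens(command, client):
    """Replace every :::key::: in the command with the client's value for key."""

    def replace(match):
        key = match.group(1)
        if key not in client:
            raise KeyError("client has no attribute %r" % key)
        return str(client[key])

    return TOKEN.sub(replace, command)


# Hypothetical client with N = 1.
client = {"name": "client1", "graphite_server": "192.168.1.80:8082"}

command = "check-data.rb -s :::graphite_server::: -t stats.:::name:::.redis.db0.keys"
print(substitute_tokens(command, client))
```

Each client therefore runs the same check definition with its own name and Graphite address, without any per-host copies of the check.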

That is the whole configuration. Everything is clear, and everything can be kept in a repository, which is great.

Of course, you also need to configure Dashing and Graphite, but I will leave that outside the scope of this article. Instructions for configuring Sensu + Graphite can be found here, and with Dashing everything is straightforward.
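Since the configs are plain JSON edited by hand, a missing or trailing comma is an easy mistake to make, and JSON does not forgive either. A small sanity check before committing the files can help. The sketch below uses only Python's standard `json` module; the function name `json_error` is made up for the example.

```python
import json


def json_error(text):
    """Return None if text is valid JSON, otherwise a short error description."""
    try:
        json.loads(text)
    except json.JSONDecodeError as exc:
        return "line %d, column %d: %s" % (exc.lineno, exc.colno, exc.msg)
    return None


samples = {
    "valid": '{"subscribers": ["redis"], "interval": 60}',
    "trailing comma": '{"subscribers": ["redis", ]}',
    "missing comma": '{"interval": 60 "handlers": ["default"]}',
}

for label, text in samples.items():
    print(label, "->", json_error(text) or "ok")
```

To check real files, read each one from /etc/sensu/conf.d and pass its contents to the function.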

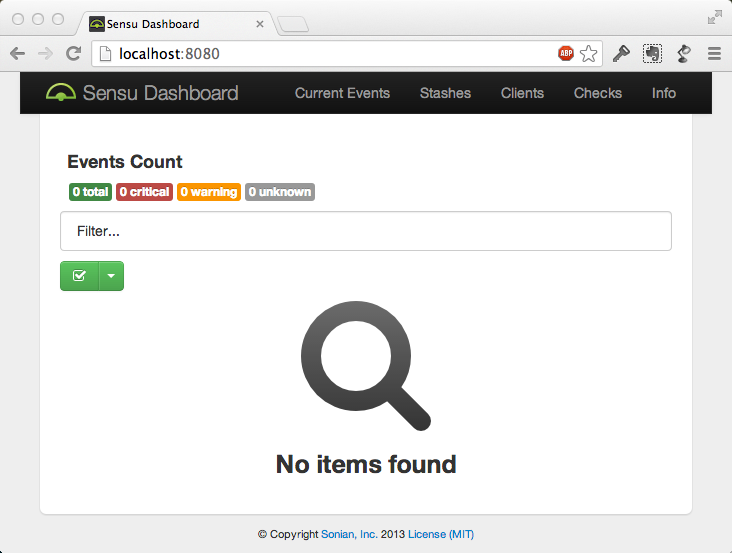

Sensu also has a simple dashboard that shows the list of clients, checks, and triggered alerts. Through the API and the dashboard you can turn off alert generation for any host or check, and see the overall state of the system.

It looks something like this (the screenshot is not mine):

Conclusions

As we can see, Sensu takes on only the role of router and organizer, while all the dirty work is done by external programs. This keeps the source code small and the system simple overall. Registering clients through RabbitMQ removes the need for a client "discovery" mechanism, which is especially convenient in the cloud. Scaling is very simple; an example of HA + load balancing can be seen here.

I have been using Sensu in production, in parallel with Zabbix, for about a month now, after a couple of months of testing. Sensu's flexibility let us set up monitoring of key parameters and project metrics with output to a Dashing panel, while Zabbix, which I have used for a very long time, handles the more comprehensive monitoring. In general, for many projects, especially in the cloud, Sensu would be an excellent choice, because it routes events flexibly and is well adapted to the dynamic nature of cloud environments. In presentations I have seen figures of thousands of servers monitored by Sensu, so performance should not be a problem.

In conclusion, I want to list the cons and pros of Sensu (all IMHO):

Sensu cons

- The available notification handlers offer very few settings. This is currently the main drawback if you do not use a service like PagerDuty. The only solution is to write your own clever handler.

- Configs are in JSON, not YAML.

- An external metrics store is needed, which also has to do the graphing.

- The documentation is not very complete. Fortunately, the project is simple, and you can figure things out just by reading the code.

Sensu advantages

- Configs can be stored in git and deployed with Chef / Puppet.

- Scalable.

- Fault-tolerant.

- Flexibility to choose a storage system.

- Nagios plugin support.

- Clients register automatically.

- Subscription mechanism.

- Data is published by clients as it is generated.

- The results of the checks can be flexibly sent to different handlers.

Source: https://habr.com/ru/post/205804/
