TeamCity setup for newbies

This article will be most useful to those who use the same technology stack as our team: ASP.NET, C#, NUnit, Selenium 2, git and MSBuild. It covers integration with git, building C# projects, running NUnit tests (both unit and UI tests), and deploying to a server. Other readers will probably find something of interest too, except perhaps those who are already old hands at this. Then again, they can point out mistakes in the article or offer advice, for example on how to optimize the deployment stage.

What is “continuous integration” is perfectly described here and here , it is hardly necessary to repeat this topic for the hundredth time.

Well, for starters - what can TeamCity (hereinafter - just TC)? Or maybe it is the following: when changes appear in the specified repository branch (or other event), execute a script that includes, for example, building an application, running tests, executing other scripts, uploading files to a remote server, etc.

The important point is that the “integration server” and the “machine on which this process will take place” are usually (not necessarily) different servers. Moreover, there are several, even many, machines that run assemblies and tests, and all are on different operating systems - in general, there is where to turn.

')

To start the build process, an agent program is used that accepts commands from a TC server, and it can be launched on any of the major operating systems (the glory of Java multiplatform). You can install several agents on one computer and run them in parallel, but it is important to remember that one agent can process only one project at a time. When starting a task, TC selects the first suitable unallocated agent, and you can install “filters”, for example, select an agent only from Windows OS or only with installed .NET version not lower than 4.0, etc.

Now you need to come up with a work script. We use the following branches in our work:

In general, almost standard git-flow, which can be read in more detail, for example, here .

In this regard, our script will look like this:

For the release branch, we are doing the same thing, but at the time of uploading new data, suspend the server and show the stub.

Now go ahead - implement the script!

It all starts with a simple project creation.

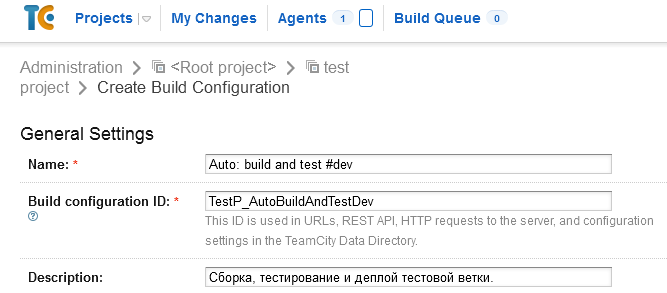

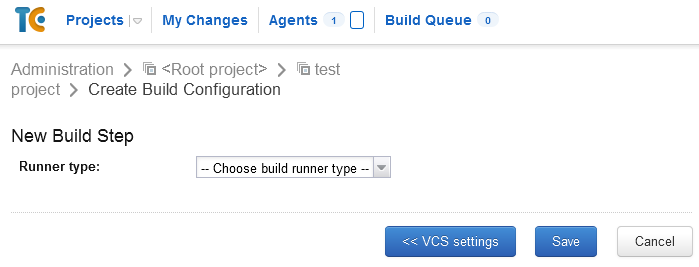

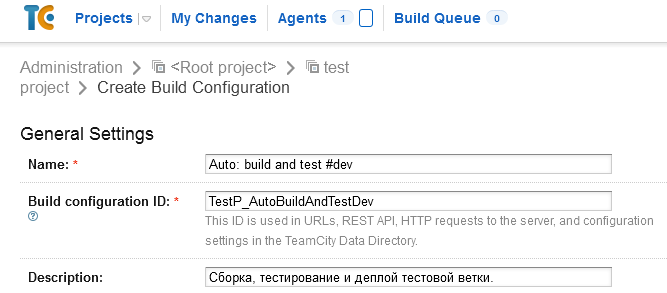

After - create “ build configuration ”. The configuration defines the build script.

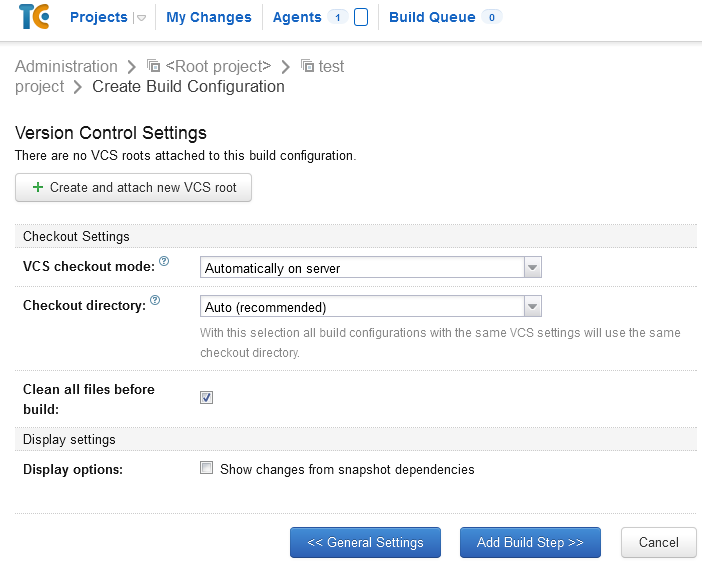

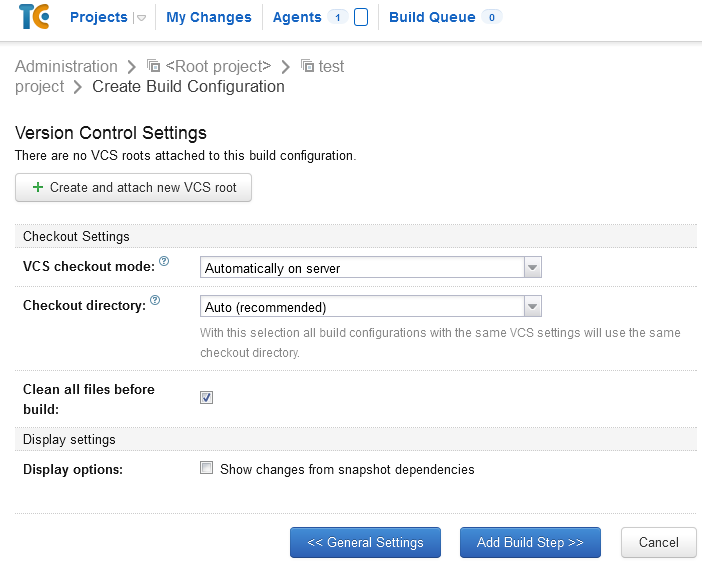

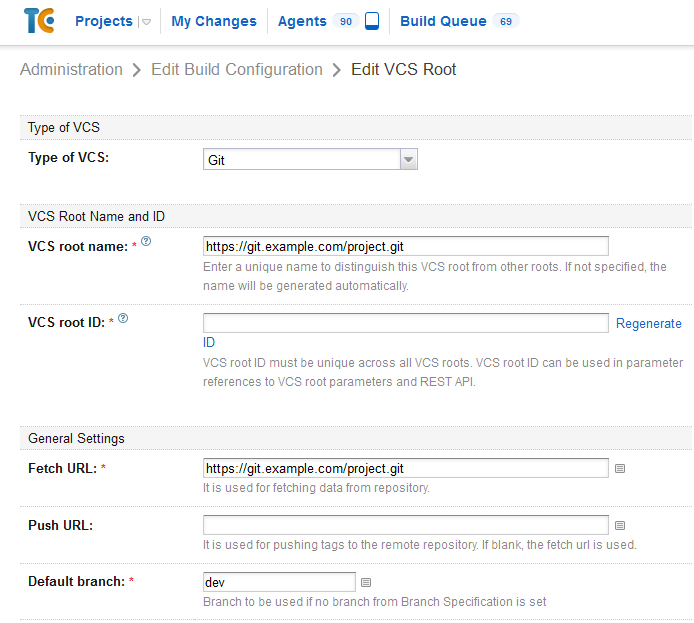

At the second step of creating the configuration, we will be asked about the VCS used, so we answer honestly that we have git here. You may have another VCS - do not get lost. A new repository is added using the Create and attach new VCS root button.

So, the key settings:

The rest of the variety of options - for your taste and color.

Next, you need to configure the automatic start of the task. Go to “ Build Triggers ” (build conditions) and select the VCS Trigger condition - with the default settings it will check for new commits in the repository once a minute, and if there are any, it will launch the task for execution.

Since we have a project on ASP.NET in the form of a Solution from Visual Studio - everything was also simple here.

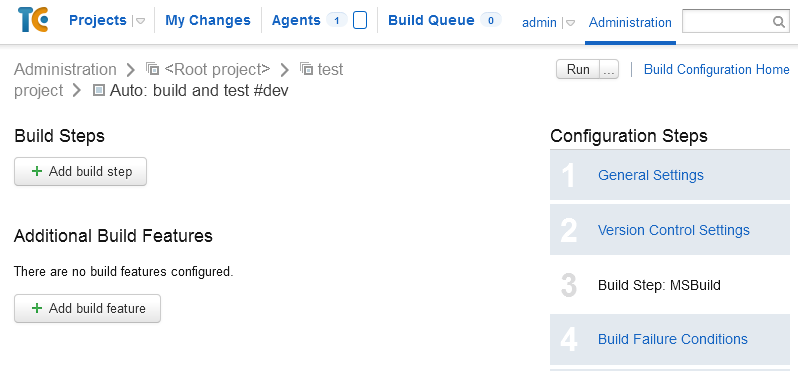

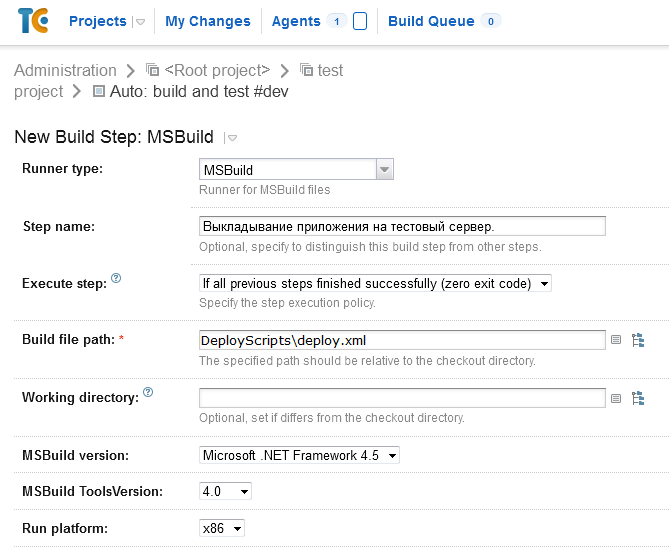

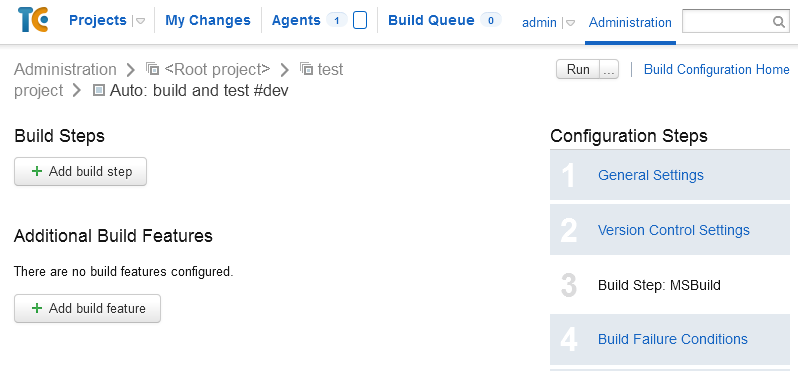

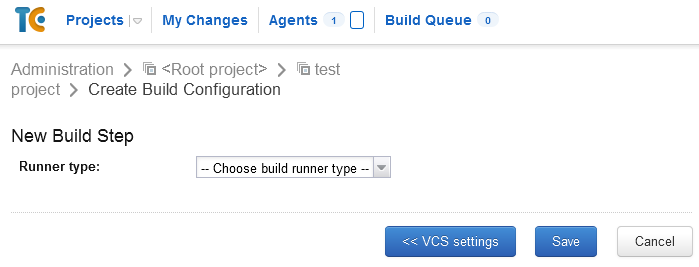

Go to the " Build Steps " menu, select the runner type (and there are really a lot of them here) and stop at MSBuild . Why on it? It provides a fairly simple way to describe the build process, even a fairly complicated one, by adding or removing various steps in a simple XML file.

Then everything is elementary.

Build file path - the path to the sln file.

MSBuild version , MSBuild ToolsVersion and Run platform select the requirements of your project.

If there are several configurations in a project, then you can use the Command line parameters option to enter something like this key:

where Production is replaced by the desired config.

An important point if you are using NuGet packages; if not, you can go directly to the next item.

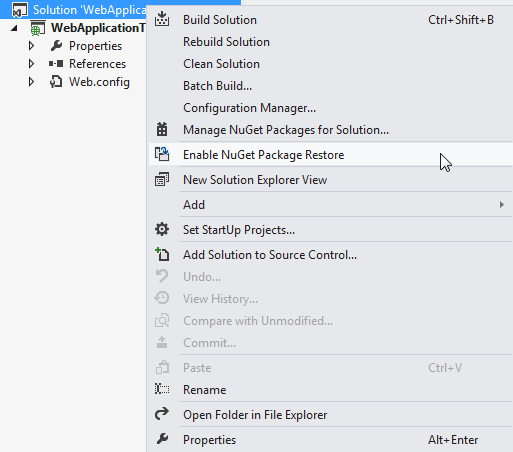

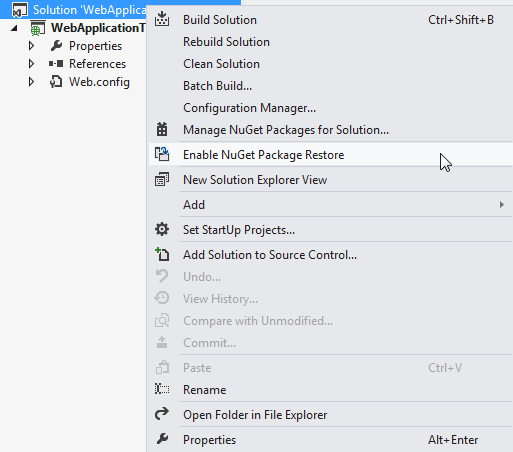

Since NuGet packages weigh a lot, and you don’t feel like storing library binaries unnecessarily, you can use the wonderful option NuGet Package Restore :

In this situation, library binaries are not included in the repository, but are downloaded as needed during the build process.

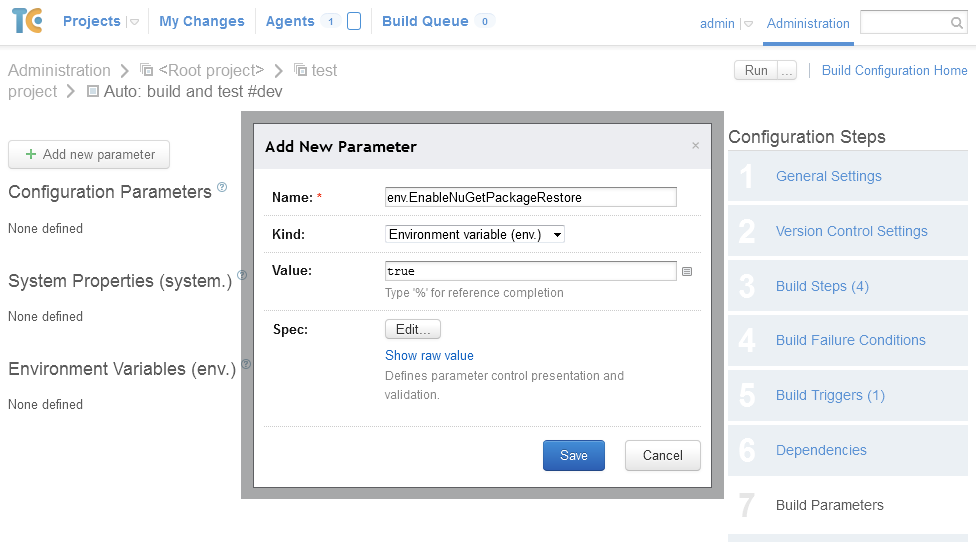

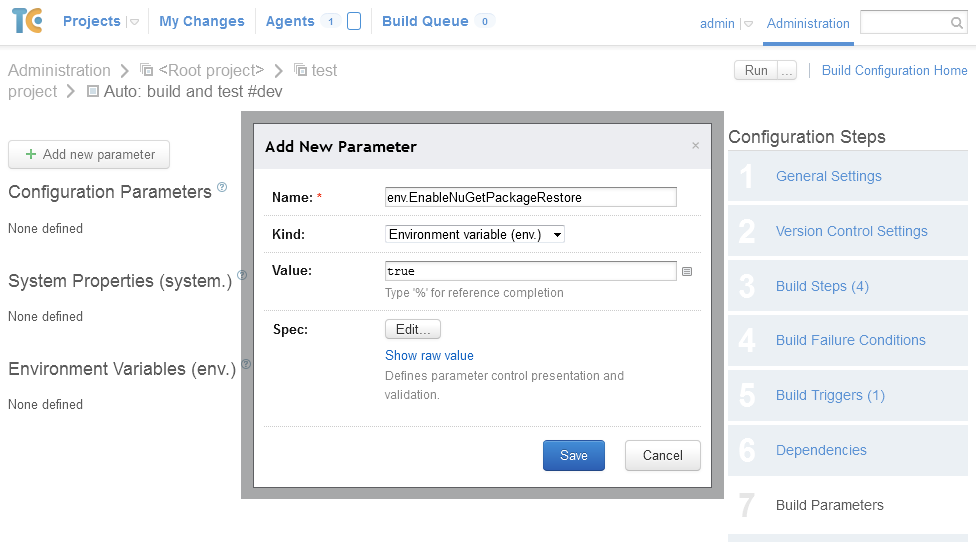

But MSBuild is a real gentleman and will not do extra gestures without permission, so there will be no way to download packages - this process should be allowed to it. To do this, you must either set the Enable NuGet Package Restore environment variable to true on the client, or go to the Build Parameters menu and set it there.

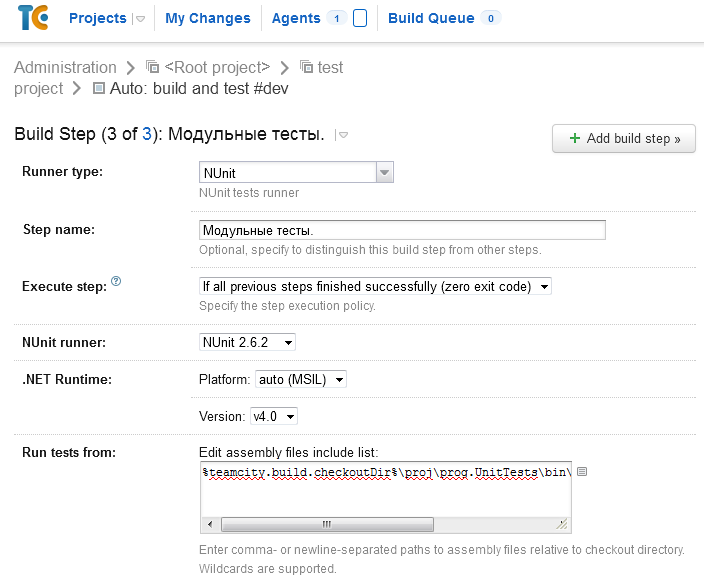

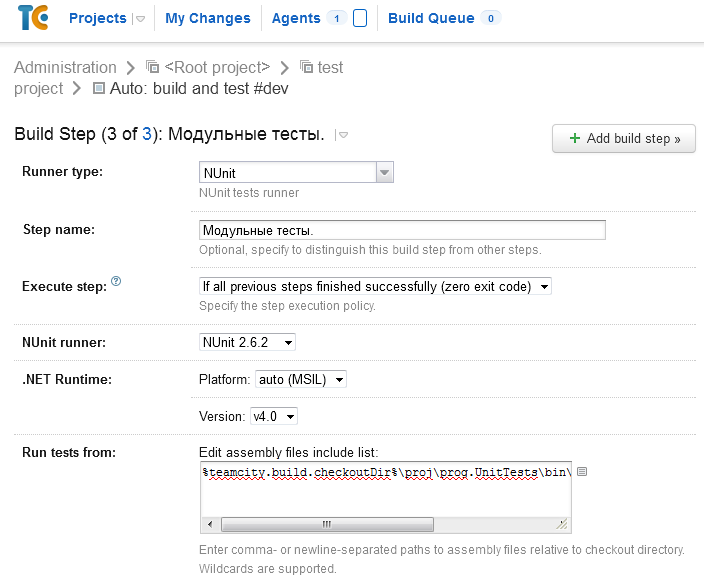

Our unit tests are a separate project within the solution. So at the previous step they were already compiled - it remains to run them.

We add a new step, only now Runner is NUnit . Pay attention to the parameter Execute step : it indicates the conditions under which the step should be performed, and has 4 values:

Here the most important thing is to indicate the correct path to the assembly with tests inside the project in the column Run tests from. Here, for example, it looks like this:

% teamcity.build.checkoutDir% is a variable indicating the folder into which the data is downloaded from the repository. In principle, it is not required to be specified, since by default, the path is relative to this directory, so the path could be shortened to:

I will separately mark the Run recently failed test first option - if in the previous run some tests fell, then they will be the first to be launched in the next run, and you will quickly learn about the success of the last changes.

Everything is much more interesting here than with unit tests. The cap here suggests that, in order to test the project in the browser, it, i.e. project must be run. But here we fooled by running the web server directly from the Selenium test code:

And the launch itself looks exactly the same as on the unit tests stage.

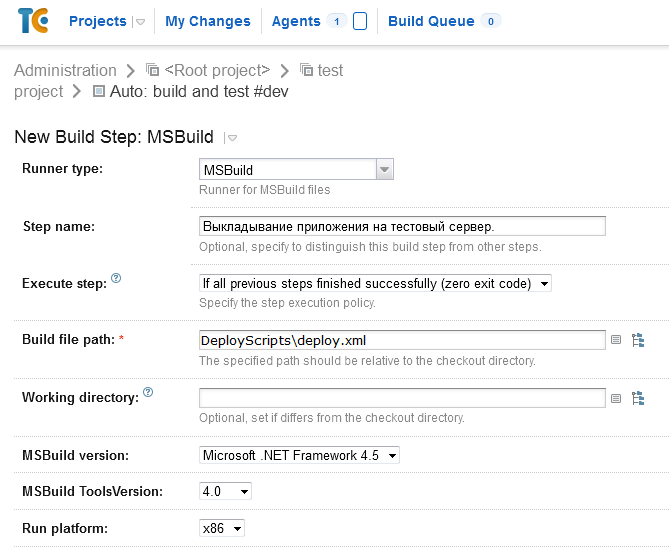

The server on which the TC agent is located and the server on which IIS is installed are different servers, and, moreover, they are located on different networks. Therefore, the files must somehow be delivered to the destination server. And here the solution is chosen, maybe not very elegant, but extremely simple. We use upload via FTP, and MSBuild does this for us.

The scheme is as follows:

And so - the task of his call:

Lack of solution - ALL files are uploaded, not just new ones that have changed. On a project with a bunch of layout files or multiple modules, this can be a problem because of the time it takes to fill.

Another drawback is that when a file is found with a non-Latin name in the name, it falls with an error. Latin letters and numbers are processed normally. The legs of this problem seem to grow out of the specifics of the FTP protocol: it is based on ASCII, but it doesn’t describe how to encode non-ASCII characters, suggesting “do what your server will understand”. Accordingly, to cure the problem, without changing the scheme, you need to patch MSBuild Community Tasks, since the source code is open . Well, or use an alternative way to upload files, for example through WinSCP.

We solve it a little wild, but nice way. IIS has a feature: if you put a file named app_offline.html in the root of the site, the site is chopped off, and when you access all files, the contents of this file will be displayed .

Minus - the appeal is precisely that to all files, including static. So, if you want to make a stub with the design, CSS and images, use inline-styles and data: url, well, or as an option - put them on a separate server.

We enable / disable the server through a WinSCP script and such files:

That is, initially the file is in the root and is called _app_offline.html . When you need to block access for an update, we rename it to app_offline.html . When files are uploaded , the new _app_offline.html file is uploaded , and when finished, the app_offline.html file is deleted . And we get exactly what it was originally.

In the text of the blank page, I highly recommend using the refresh meta-tag, which will periodically refresh the page. If by this time the update process is completed, the user will return back to the service, which will certainly be incredibly happy.

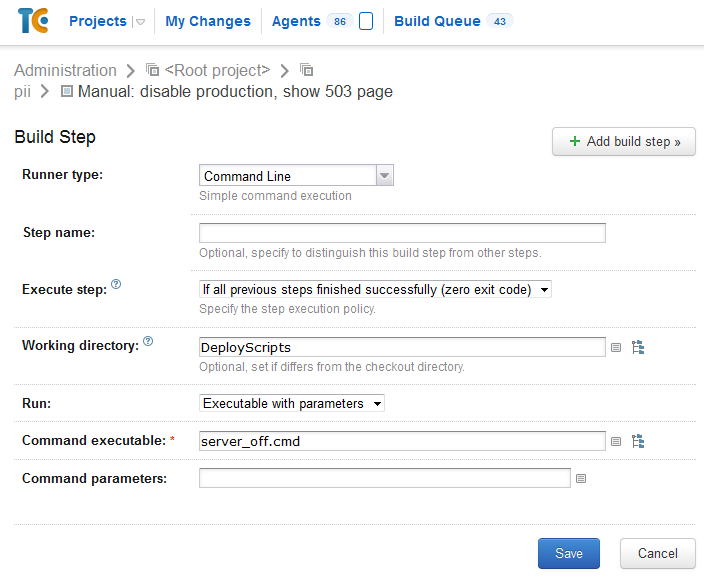

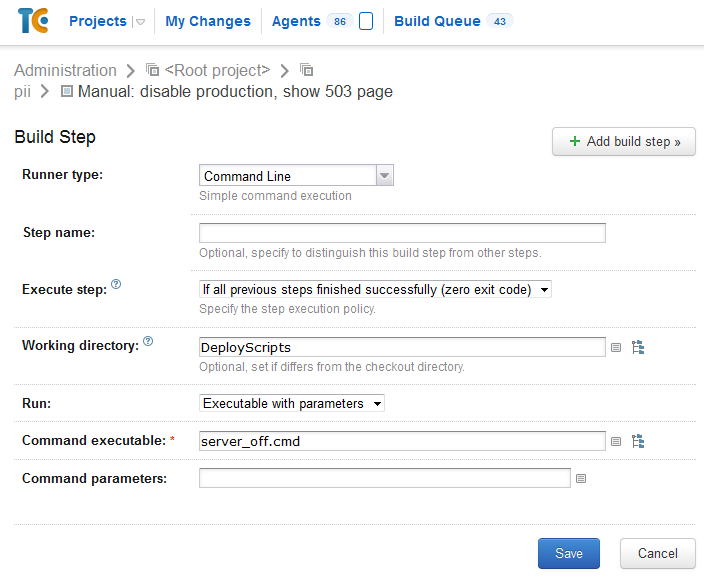

Calling the script to enable the stub (shutting off the stub is similar):

Yes, WinCSP lies as a result right in the repository. Yes, passwords in the clear form are in the file. Yes, not the most elegant solution, but since only developers from our team have access to the repository and the virtual machine with an agent, why not? Yes, it would be possible to store a file with passwords, for example, directly on the agent, but this would not increase security in principle, but, for example, the scanning of a new agent would slow down.

These settings are used by us for about half a year, and so far not a single problem has arisen - everything works like a clock, which is good news.

That's all. I am pleased to hear comments and tips on how to improve these steps, as well as your stories about what features of TC you use in yourself.

Update from November 3, 2014.

Choosing Runner Type "Command line" does not require any screening of spaces - Team City will take care of this on its own.

What continuous integration is has been described well here and here, so there is little point in repeating it for the hundredth time.

To start with: what can TeamCity (hereafter simply TC) do? It can do the following: when changes appear in a specified repository branch (or on some other event), it runs a scenario that may include building the application, running tests, executing other scripts, uploading files to a remote server, and so on.

An important point is that the “integration server” and the “machine on which this process takes place” are usually, though not necessarily, different servers. Moreover, there can be several, even many, machines running builds and tests, all on different operating systems, so there is plenty of room to maneuver.

Builds are run by an agent program that accepts commands from the TC server; it runs on any of the major operating systems (thanks to Java being cross-platform). You can install several agents on one machine and run them in parallel, but keep in mind that one agent can run only one build at a time. When a build starts, TC picks the first suitable free agent. You can also set “filters”, for example to use only agents running Windows, or only agents with .NET 4.0 or later installed.

Now we need to come up with a workflow scenario. We use the following branches:

- release - contains the current code, the working version that is deployed on the production server;

- dev - all new features go here; later it is merged into release;

- a separate branch for each feature, which branches off dev and is merged back into it.

Overall, this is almost standard git-flow, which you can read about in more detail, for example, here.

With that in mind, our scenario looks like this:

- pick up the latest changes from the dev branch of the repository;

- compile the project;

- if the previous step succeeded, run the unit tests;

- if the previous step succeeded, run the functional tests;

- if the previous step succeeded, upload the changes to the test server.

For the release branch we do the same, but while the new files are being uploaded we suspend the server and show a stub page.

Now let's go ahead and implement the scenario!

Pick up fresh changes from the repository

It all starts with simply creating a project.

After that, create a “build configuration”. The configuration defines the build scenario.

At the second step of creating the configuration, we are asked which VCS we use, and we answer honestly that it is git. If you use a different VCS, pick yours. A new repository is added with the Create and attach new VCS root button.

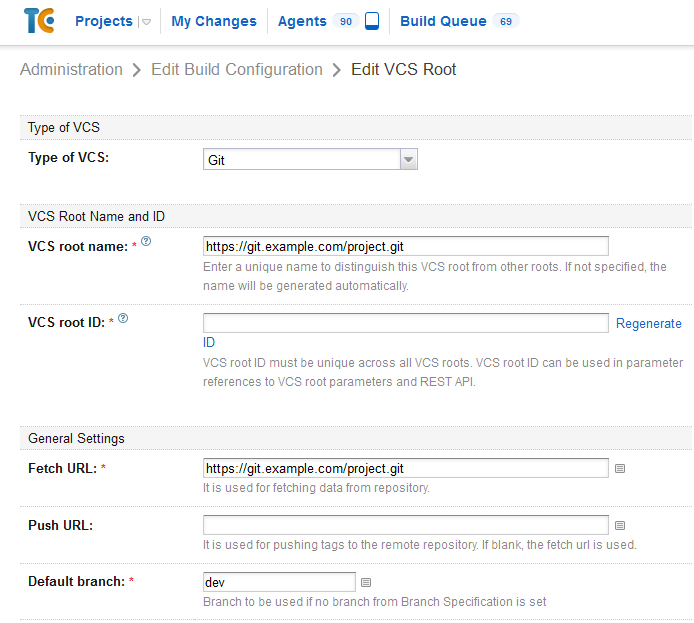

So, the key settings:

- VCS root ID - a unique identifier that you can leave alone. If left blank, it is generated automatically;

- Fetch URL - the address from which the repository contents are fetched;

- Default branch - the branch from which the changes are taken;

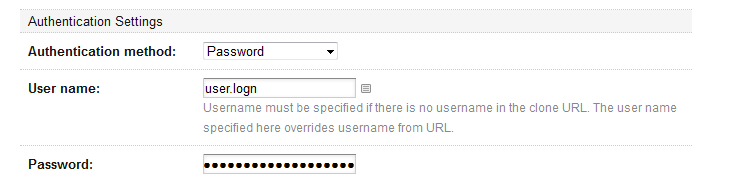

- Authentication method - the most interesting setting. Access can be anonymous (for example, if the repository is on an internal server), by key, or by password. Each option has its own additional fields.

The rest of the many options are a matter of taste.

Next, configure the build to start automatically. Go to “Build Triggers” and add a VCS Trigger. With the default settings, the repository is checked for new commits once a minute, and if any are found, the build is started.

Compile the project

Since our project is an ASP.NET application in the form of a Visual Studio solution, this part was simple too.

Go to the “Build Steps” menu, choose the runner type (there really are a lot of them) and pick MSBuild. Why MSBuild? It offers a fairly simple way to describe even a fairly complex build process by adding or removing steps in a plain XML file.

The rest is straightforward.

Build file path - the path to the .sln file.

MSBuild version, MSBuild ToolsVersion and Run platform - choose these according to your project's requirements.

If the project has several configurations, you can use the Command line parameters option to pass a key like this:

```
/p:Configuration=Production
```

where Production is replaced by the desired configuration.
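To illustrate what this key does: /p: sets an MSBuild property, which any project file can then read as $(Configuration). The fragment below is only a sketch and is not part of our setup; the target name ShowConfiguration and the default value Dev are assumptions made for the example.

```xml
<?xml version="1.0" encoding="utf-8"?>
<Project xmlns="http://schemas.microsoft.com/developer/msbuild/2003" DefaultTargets="ShowConfiguration">
  <PropertyGroup>
    <!-- Fallback used only when no /p:Configuration=... is passed on the command line -->
    <Configuration Condition="'$(Configuration)' == ''">Dev</Configuration>
  </PropertyGroup>

  <!-- Hypothetical target that just prints the effective value -->
  <Target Name="ShowConfiguration">
    <Message Importance="high" Text="Building configuration: $(Configuration)" />
  </Target>
</Project>
```

Run without parameters, such a file would report Dev; run with /p:Configuration=Production, it would report Production, because properties passed on the command line take precedence over those defined in the file.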

Enable NuGet package download

This matters if you use NuGet packages; if you do not, skip to the next section.

Since NuGet packages are large and there is no point in storing library binaries in the repository, you can use the handy NuGet Package Restore option.

With it, library binaries are not included in the repository but are downloaded as needed during the build.

However, MSBuild is a true gentleman and will not do anything extra without permission, so it will not download packages until it is explicitly allowed to. To allow it, either set the EnableNuGetPackageRestore environment variable to true on the agent machine, or set it in the Build Parameters menu.

Run unit tests

Our unit tests live in a separate project within the solution, so they were already compiled at the previous step; all that remains is to run them.

Add a new step, this time with the NUnit runner. Note the Execute step parameter: it specifies the condition under which the step runs and has four values:

- If all previous steps finished successfully (zero exit code) - the step runs only if all previous steps completed without errors. The check is performed on the agent only;

- Only if build status is successful - like the previous one, but the agent also checks the build status with the TC server. This allows finer control over the build logic, for example when a zero exit code of some step still counts as a failure for us;

- Even if some of the previous steps failed - the step runs even if one of the previous steps failed;

- Always, even if build stop command was issued - the step runs even if a command to cancel the build has been issued.

The most important thing here is to specify the correct path to the test assembly in the Run tests from field. For example, in our case it looks like this:

```
%teamcity.build.checkoutDir%\project\project.FuncTests\bin\Dev\project.FuncTests.dll
```

%teamcity.build.checkoutDir% is a variable that points to the folder into which the repository contents are checked out. Strictly speaking, it does not have to be specified, because paths are relative to this directory by default, so the path could be shortened to:

```
project\project.FuncTests\bin\Dev\project.FuncTests.dll
```

I would also single out the Run recently failed tests first option: if some tests failed in the previous run, they are run first in the next one, so you quickly find out whether the latest changes fixed them.

Run interface tests (functional tests)

Things are more interesting here than with unit tests. Captain Obvious tells us that to test a project in the browser, the project has to be running. We cheated a little here and start the web server directly from the Selenium test code:

```csharp
using System;
using System.Diagnostics;
using System.IO;
using NUnit.Framework;

[SetUpFixture]
class ServerInit
{
    private const string ApplicationName = "justtest";
    private Process _iisProcess;

    // Walks three levels up from the test assembly directory
    // to the solution folder and appends the application name.
    private string GetApplicationPath(string applicationName)
    {
        var tmpDirName = AppDomain.CurrentDomain.BaseDirectory.TrimEnd('\\');
        var solutionFolder = Path.GetDirectoryName(Path.GetDirectoryName(Path.GetDirectoryName(tmpDirName)));
        string result = Path.Combine(solutionFolder, applicationName);
        return result;
    }

    // Starts IIS Express once, before any test in the assembly runs.
    [SetUp]
    public void RunBeforeAnyTests()
    {
        // […]
        var applicationPath = GetApplicationPath(ApplicationName);
        var programFiles = Environment.GetFolderPath(Environment.SpecialFolder.ProgramFiles);
        _iisProcess = new Process
        {
            StartInfo =
            {
                FileName = string.Format("{0}/IIS Express/iisexpress.exe", programFiles),
                Arguments = string.Format("/path:\"{0}\" /port:{1}", applicationPath, UrlProvider.Port)
            }
        };
        _iisProcess.Start();
    }

    // Stops IIS Express after all tests have finished.
    [TearDown]
    public void RunAfterAnyTests()
    {
        // […]
        if (_iisProcess.HasExited == false)
        {
            _iisProcess.Kill();
        }
    }
}
```

The launch itself is configured exactly as in the unit tests step.

Upload changes to the test server

The server that hosts the TC agent and the server that runs IIS are different machines and, moreover, are on different networks, so the files have to be delivered to the target server somehow. The solution we chose may not be very elegant, but it is extremely simple: we upload via FTP, and MSBuild does it for us.

The scheme is as follows:

- set up an FTP account on the server for uploading files. For extra security you can deny access from all IP addresses except the internal one, provided, of course, that the TC server is on the internal network;

- install MSBuild Community Tasks on the agent to get the FTP upload task. Download here;

- prepare an MSBuild script file that performs the following actions:

  - builds the application into a temporary folder;

  - substitutes the configuration file;

  - uploads the files via FTP.

This is what the file (deploy.xml) looks like:

```xml
<?xml version="1.0" encoding="utf-8"?>
<Project xmlns="http://schemas.microsoft.com/developer/msbuild/2003" ToolsVersion="4.0" DefaultTargets="Build">
  <UsingTask TaskName="TransformXml" AssemblyFile="$(MSBuildExtensionsPath)\Microsoft\VisualStudio\v11.0\Web\Microsoft.Web.Publishing.Tasks.dll"/>
  <Import Project="$(MSBuildExtensionsPath32)\MSBuildCommunityTasks\MSBuild.Community.Tasks.Targets"/>
  <PropertyGroup>
    <OutputDir>bin</OutputDir>
    <PublishDir>../output</PublishDir>
    <Configuration>Dev</Configuration>
    <TransformInputFile>..\project\project\Web.template.config</TransformInputFile>
    <TransformFile>..\project\project\Web.$(Configuration).config</TransformFile>
    <TransformOutputFile>..\project\output\Web.config</TransformOutputFile>
    <StackTraceEnabled>False</StackTraceEnabled>
  </PropertyGroup>
  <ItemGroup>
    <ProjectToBuild Include="../project/project.sln">
      <Properties>WebProjectOutputDir=$(PublishDir);OutputPath=$(OutputDir);Configuration=Dev</Properties>
    </ProjectToBuild>
  </ItemGroup>
  <Target Name="Build">
    <MSBuild Projects="@(ProjectToBuild)"/>
  </Target>
  <Target Name="CreateWebConfigs" AfterTargets="Build">
    <TransformXml Source="$(TransformInputFile)" Transform="$(TransformFile)" Destination="$(TransformOutputFile)" />
  </Target>
  <Target Name="AfterBuild" AfterTargets="CreateWebConfigs">
    <PropertyGroup>
      <ftpHost>dev.example.com</ftpHost>
      <ftpUser></ftpUser>
      <ftpPass></ftpPass>
      <LocalDirectory>..\project\output</LocalDirectory>
    </PropertyGroup>
    <FtpUploadDirectoryContent ServerHost="$(ftpHost)" Port="21" Username="$(ftpUser)" Password="$(ftpPass)" LocalDirectory="$(LocalDirectory)" RemoteDirectory="" Recursive="true" />
  </Target>
</Project>
```

And this is the build step that calls it:

A drawback of this solution is that ALL files are uploaded, not just those that have changed. On a project with lots of layout files or many modules, the upload time can become a problem.

Another drawback: the upload fails with an error when it encounters a file with non-Latin characters in its name. Latin letters and digits are handled fine. The root of the problem seems to lie in the FTP protocol itself: it is ASCII-based and does not specify how to encode non-ASCII characters, effectively saying “do whatever your server understands”. So, to cure the problem without changing the scheme, you would have to patch MSBuild Community Tasks, which is possible since its source code is open. Or use another way to upload files, for example WinSCP.

Stop and start the server for the release script

We solve this in a slightly wild but neat way. IIS has a feature: if you put a file named app_offline.htm in the root of the site, the site is taken offline, and any request to it returns the contents of this file.

The downside is that this applies to requests for all files, including static ones. So if you want a stub with design, CSS and images, use inline styles and data: URLs or, alternatively, host them on a separate server.

We switch the server off and on with WinSCP scripts and the following files:

server_off.cmd

```
winscp.exe /console /script=server_off.txt
```

server_on.cmd

```
winscp.exe /console /script=server_on.txt
```

server_off.txt

```
option batch abort
option confirm off
open ftp://:@dev.example.com
mv _app_offline.htm app_offline.htm
close
exit
```

server_on.txt

```
option batch abort
option confirm off
open ftp://:@dev.example.com
rm app_offline.htm
close
exit
```

That is, initially the file sits in the site root under the name _app_offline.htm. When access must be blocked for an update, we rename it to app_offline.htm. During the upload, a fresh _app_offline.htm is uploaded along with the other files, and when the upload finishes, app_offline.htm is deleted. We end up exactly where we started.

In the stub page I strongly recommend using the refresh meta tag, which reloads the page periodically. If the update has finished by then, the user is returned to the service, which will no doubt make them very happy.
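As an illustration, a minimal stub page with such a tag might look like the sketch below. The 30-second interval, the wording and the styling are assumptions for the example, not our actual page; the styles are inline because every request to the site returns this one file.

```html
<!DOCTYPE html>
<html>
<head>
  <meta charset="utf-8">
  <!-- Reload the page every 30 seconds (the interval is an assumption) -->
  <meta http-equiv="refresh" content="30">
  <title>Maintenance</title>
</head>
<!-- Inline styles only: external CSS and images would not be served while the stub is active -->
<body style="font-family: sans-serif; text-align: center; margin-top: 10%;">
  <h1>The site is being updated</h1>
  <p>This page reloads automatically. Please wait a moment.</p>
</body>
</html>
```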

Calling the script to enable the stub (disabling it is similar):

Yes, as a result WinSCP sits right in the repository. Yes, the passwords are stored in the file in plain text. Yes, it is not the most elegant solution, but since only developers on our team have access to the repository and to the virtual machine with the agent, why not? Yes, we could store the file with passwords directly on the agent, for example, but that would not really improve security, while setting up a new agent would take longer.

We have been using these settings for about half a year, and so far not a single problem has come up: everything runs like clockwork, which is good news.

That's all. I will be glad to hear comments and tips on how to improve these steps, as well as your stories about which TC features you use.

Update from November 3, 2014.

With the “Command line” runner type, spaces do not need to be escaped: TeamCity takes care of that itself.

Source: https://habr.com/ru/post/205402/
