Windows Azure Blob-storage: CORS support

Windows Azure recently received many updates, among them the long-awaited support for Cross-Origin Resource Sharing (CORS) in storage. I use blob storage (file storage) in my work, and in this post I will describe how to make file uploading easy and enjoyable.

To start working with storage (blob-storage) using CORS, you need to solve the following subtasks:

1. Create a repository

2. Enable CORS support

3. Create a temporary key for writing (Shared Access Signature)

4. Write downloader

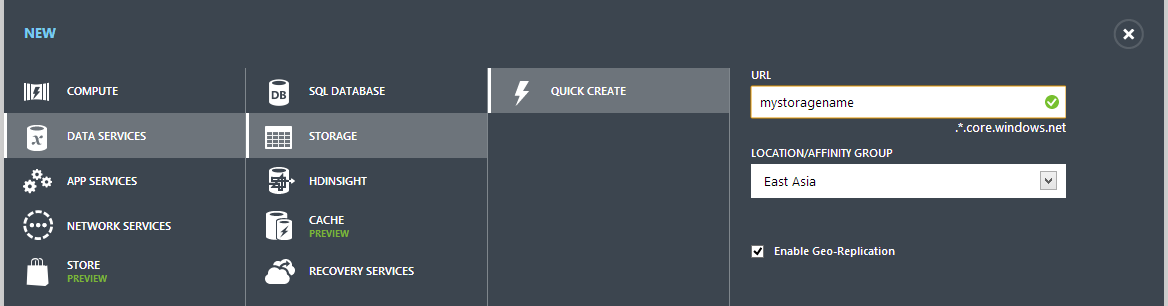

Creating a storage is very simple: you need to go to the Azure management portal and create it.

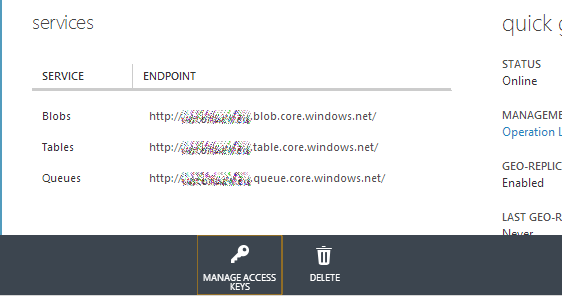

After the storage is created, we need its name, access point and access key.

Now you need to make a container, you can create it in the portal or from the code.

Up to five CORS rules can be created for each storage service (blob, tables and queue). For our purposes, one is enough. The rule has several properties.

')

You can add * to allow access from anywhere. To restrict access from certain domains, it is necessary to add all of them to the list with indication of the protocol and case sensitive (i.e., if you add only http://domain.com, then the request is https://domain.com or http: // Domain.com will not work).

If the request will contain headers not listed, it will not pass. To upload files, we need two standard headers: accept and content-length, and blobs specific: x-ms-blob-type, x-ms-blob-content-type and x-ms-blob-cache-control.

List of methods that will be accepted by the repository. To send files, you need a PUT method.

The time that the browser has to cache the pre-request (preflight request), from which it learns the CORS settings. Considering that all successful requests to the repository are billed, it is worth making it large enough.

If you configure from .net (all of the above and below can be done using the REST-API), you will need the Microsoft.WindowsAzure.Storage library of the third version. The easiest way to extract it is nuget.

With this, everything is also simple: the key is a string passed in the address parameters when accessing the repository. Valid within the object for which permission is given. In our case it will be a container. Formed in the following way:

Now, after all the preparatory activities, you can begin to create a bootloader.

In Windows Azure there are two types of blobs - block and page. The page is optimized for storing streaming data (video, audio, etc.) and cut into pages of 512 bytes. Their maximum size is 1 TB. In this example, block blobs will be used, with a maximum size of 400GB. For the bootloader, it is important that in one download operation it can transfer no more than 64MB. Those. the file is cut into blocks not exceeding 64MB and the final operation blocks are glued together. In the example, files will be loaded in chunks of 512Kb

Accordingly, the algorithm of the loader will be as follows.

The logic of the loader is quite simple, but since everything in our world has become asynchronous, you need to be careful.

We will read files using a new and convenient FileReader.

Create a handler for it, which, after reading the block, will send it to the repository:

And this is what the function of actually reading the file looks like:

When the file has been read and all blocks have been sent, you need to inform the repository about it so that it will glue the blocks together.

That's all. Using the capabilities of CORS, you can now upload files directly to the repositories, almost without involving your servers. And this is good: the server makes less, it takes less, more money is left for everything else.

Project sources: https://github.com/unconnected4/CORSatWindowsAzureBlobStorage

HTML5 File API: multiple file uploads to the server

Put Blob (REST-API) - important in the list of headers

Windows Azure Storage and Cross-Origin Resource Sharing (CORS) - Lets Have Some Fun

Uploading Large Files in Windows Azure Blob Storage Using Shared Access Signature, HTML, and JavaScript

g +

To start working with blob storage over CORS, you need to solve the following subtasks:

1. Create a storage account

2. Enable CORS support

3. Create a temporary key for writing (Shared Access Signature)

4. Write the uploader

Creating a storage account

Creating a storage account is very simple: go to the Azure management portal and create one.

Once the account is created, we need its name, endpoint and access key.

Now you need a container; you can create it in the portal or from code.

C#: create blob container

```csharp
// Requires: using Microsoft.WindowsAzure.Storage; using Microsoft.WindowsAzure.Storage.Blob;
CloudStorageAccount account = CloudStorageAccount.Parse("connectionString");
CloudBlobClient client = account.CreateCloudBlobClient();
CloudBlobContainer container = client.GetContainerReference("containername");

// On first creation, allow anonymous read access to individual blobs
if (container.CreateIfNotExists())
{
    container.SetPermissions(new BlobContainerPermissions
    {
        PublicAccess = BlobContainerPublicAccessType.Blob
    });
}
```

Enabling CORS support for the file storage service

Up to five CORS rules can be created for each storage service (blob, table and queue). For our purposes, one is enough. A rule has several properties.

Allowed domains

You can add * to allow access from any origin. To restrict access to certain domains, list every one of them together with its protocol; matching is case sensitive (i.e., if you add only http://domain.com, a request from https://domain.com or http://Domain.com will not work).
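A minimal sketch of the matching rule described above, assuming plain string comparison. `isOriginAllowed` is a hypothetical helper written only for illustration; the real check is performed by the storage service, not by your code.

```javascript
// Hypothetical helper: mimics the exact, case-sensitive origin matching described above.
function isOriginAllowed(allowedOrigins, origin) {
    // "*" allows any origin; otherwise scheme and host must match character for character
    return allowedOrigins.indexOf('*') !== -1 || allowedOrigins.indexOf(origin) !== -1;
}

var allowed = ['http://domain.com'];
console.log(isOriginAllowed(allowed, 'http://domain.com'));  // true
console.log(isOriginAllowed(allowed, 'https://domain.com')); // false (different protocol)
console.log(isOriginAllowed(allowed, 'http://Domain.com'));  // false (different case)
```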

Allowed headers

If a request contains headers that are not listed, it will not pass. To upload files, we need two standard headers, accept and content-length, and the blob-specific ones: x-ms-blob-type, x-ms-blob-content-type and x-ms-blob-cache-control.
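The rule used later in this post lists the blob-specific headers with a single prefix entry, `x-ms-blob-*`. The following is a rough sketch of how such a list can be read, assuming that an entry ending in `*` is treated as a prefix and that header names are compared without regard to case. `isHeaderAllowed` is a hypothetical helper, not an Azure API.

```javascript
// Hypothetical helper: checks one request header against a list of allowed headers.
// Assumption: an entry ending in "*" matches any header that starts with the prefix.
function isHeaderAllowed(allowedHeaders, header) {
    var name = header.toLowerCase(); // HTTP header names are case-insensitive
    return allowedHeaders.some(function (entry) {
        var rule = entry.toLowerCase();
        if (rule === '*') {
            return true;
        }
        if (rule.charAt(rule.length - 1) === '*') {
            return name.indexOf(rule.slice(0, -1)) === 0;
        }
        return rule === name;
    });
}

var headers = ['x-ms-blob-*', 'content-type', 'accept'];
console.log(isHeaderAllowed(headers, 'x-ms-blob-type'));          // true
console.log(isHeaderAllowed(headers, 'x-ms-blob-cache-control')); // true
console.log(isHeaderAllowed(headers, 'x-ms-meta-author'));        // false
```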

Allowed methods

The list of methods that the storage service will accept. To send files, you need the PUT method.

Lifetime

The time for which the browser may cache the preflight request, from which it learns the CORS settings. Since all successful requests to storage are billed, it is worth making this value large enough.

CORS rule

If you configure this from .NET (everything above and below can also be done through the REST API), you will need version 3 of the Microsoft.WindowsAzure.Storage library. The easiest way to get it is NuGet.

```
PM> Install-Package WindowsAzure.Storage
```

```csharp
// Requires: using System.Collections.Generic; using Microsoft.WindowsAzure.Storage.Shared.Protocol;
CorsRule corsRule = new CorsRule
{
    AllowedOrigins = new List<string> { "http://allowed.domain.com", "https://allowed.domain.com" },
    AllowedHeaders = new List<string> { "x-ms-blob-*", "content-type", "accept" },
    AllowedMethods = CorsHttpMethods.Put,
    MaxAgeInSeconds = 60 * 60
};
```

Connecting the rule to the service

```csharp
ServiceProperties serviceProperties = new ServiceProperties
{
    // Service version that supports CORS
    DefaultServiceVersion = "2013-08-15",
    // Logging and metrics are filled in explicitly; the original note here mentions a NullReference exception
    Logging = new LoggingProperties { Version = "1.0", LoggingOperations = LoggingOperations.None },
    HourMetrics = new MetricsProperties { Version = "1.0", MetricsLevel = MetricsLevel.None },
    MinuteMetrics = new MetricsProperties { Version = "1.0", MetricsLevel = MetricsLevel.None }
};

// Add the rule
serviceProperties.Cors.CorsRules.Add(corsRule);

// Save the settings
client.SetServiceProperties(serviceProperties);
```

Getting a temporary key

This is also simple: the key is a string passed in the query parameters of the URL when accessing storage. It is valid within the object for which permission was granted; in our case that is a container. It is formed as follows:

```csharp
CloudBlobContainer container = client.GetContainerReference("containername");
string key = container.GetSharedAccessSignature(new SharedAccessBlobPolicy
{
    Permissions = SharedAccessBlobPermissions.Write,
    // Valid from one minute ago
    SharedAccessStartTime = DateTime.UtcNow.AddMinutes(-1),
    // Expires in one hour
    SharedAccessExpiryTime = DateTime.UtcNow.AddMinutes(60),
});
```

Uploader

Now, after all the preparatory work, you can start writing the uploader.
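The upload code in this post appends `&comp=...` parameters to a `submitUri` variable, so that variable must already contain the blob address and the temporary key as a query string. A minimal sketch of how it could be assembled is shown here. `buildSubmitUri`, the account name `myaccount` and the key value are all made up for illustration, and the sketch assumes the key may or may not start with `?`.

```javascript
// Hypothetical helper: builds the address that blocks are sent to.
// storageUrl, containerName and sasKey are assumed to be supplied by your server side.
function buildSubmitUri(storageUrl, containerName, fileName, sasKey) {
    var base = storageUrl.replace(/\/+$/, '');                    // drop trailing slashes
    var query = sasKey.charAt(0) === '?' ? sasKey : '?' + sasKey; // make sure the key starts the query
    return base + '/' + containerName + '/' + encodeURIComponent(fileName) + query;
}

var submitUri = buildSubmitUri(
    'https://myaccount.blob.core.windows.net/', // placeholder endpoint
    'containername',
    'photo 1.jpg',
    '?sv=2013-08-15&sr=c&sp=w&sig=PLACEHOLDER'  // placeholder key, not a real signature
);
console.log(submitUri);
// https://myaccount.blob.core.windows.net/containername/photo%201.jpg?sv=2013-08-15&sr=c&sp=w&sig=PLACEHOLDER
```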

In Windows Azure there are two types of blobs: block and page. Page blobs are optimized for storing streaming data (video, audio, etc.) and are cut into pages of 512 bytes. Their maximum size is 1 TB. In this example, block blobs will be used, with a maximum size of 400 GB. For the uploader, it is important that a single upload operation can transfer no more than 64 MB. That is, the file is cut into blocks not exceeding 64 MB, and a final operation glues the blocks together. In the example, files will be uploaded in chunks of 512 KB.
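To make the chunking concrete, here is a small sketch that plans an upload: it computes the byte range of every block and gives each block an id. Both helpers are hypothetical and are not taken from the project sources. The id scheme (a zero-padded counter) is an assumption, chosen because the storage service expects block ids to be Base64 strings of equal length within one blob.

```javascript
// Splits a file of fileSize bytes into [start, end) ranges of at most blockSize bytes.
function blockRanges(fileSize, blockSize) {
    var ranges = [];
    for (var start = 0; start < fileSize; start += blockSize) {
        ranges.push({ start: start, end: Math.min(start + blockSize, fileSize) });
    }
    return ranges;
}

// Hypothetical id scheme: a zero-padded counter in Base64, so every id has the same length.
function makeBlockId(index) {
    var padded = ('000000' + index).slice(-6);
    return typeof btoa === 'function'
        ? btoa(padded)                             // browsers
        : Buffer.from(padded).toString('base64');  // Node.js fallback
}

var ranges = blockRanges(1300 * 1024, 512 * 1024);
console.log(ranges.length);                    // 3
console.log(ranges[2].end - ranges[2].start);  // 282624 (the last block is shorter)
console.log(makeBlockId(0), makeBlockId(12));  // MDAwMDAw MDAwMDEy
```

Each range can be passed to `file.slice(range.start, range.end)` to obtain the chunk to read.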

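Accordingly, the algorithm of the uploader will be as follows.

10 read a block from the file

20 send the block to storage

30 if the file has not ended, go to 10

40 tell storage to assemble the blocks

The logic of the uploader is quite simple, but since everything in our world has become asynchronous, you need to be careful.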

We will read files using the new and convenient FileReader.

```javascript
var reader = new FileReader();
```

Create a handler for it which, after a block has been read, sends it to storage:

```javascript
reader.onloadend = function (e) {
    if (e.target.readyState == FileReader.DONE) { // DONE == 2
        // Block URL: the blob address with the temporary key, plus the id of the block being sent
        var uri = submitUri + '&comp=block&blockid=' + blockIds[blockIds.length - 1];
        var requestData = new Uint8Array(e.target.result);
        $.ajax({
            url: uri,
            type: "PUT",
            data: requestData,
            processData: false,
            // Custom headers make the browser send a preflight request first
            beforeSend: function (xhr) {
                xhr.setRequestHeader('x-ms-blob-type', 'BlockBlob');
                // Browsers set Content-Length themselves and may ignore this call
                xhr.setRequestHeader('Content-Length', requestData.length);
            },
            success: function () {
                bytesUploaded += requestData.length;
                var percentage = ((parseFloat(bytesUploaded) / parseFloat(files[fileIndex].size)) * 100).toFixed(2);
                $('#progress_' + fileIndex).text('Uploaded: ' + percentage + '%');
                processFile();
            },
            error: function () {
                $('#progress_' + fileIndex).text('Upload error');
            }
        });
    }
};
```

And this is what the function that actually reads the file looks like:

```javascript
function processFile() {
    if (bytesRemain > 0) {
        // Generate and remember the id of the next block
        blockIds.push(btoa(blockId()));
        // Read the next chunk of the file
        var fileContent = files[fileIndex].slice(streamPointer, streamPointer + blockSize);
        reader.readAsArrayBuffer(fileContent);
        streamPointer += blockSize;
        bytesRemain -= blockSize;
        if (bytesRemain < blockSize) {
            blockSize = bytesRemain;
        }
    } else {
        // The whole file has been sent, commit the blocks
        commitBlocks();
    }
}
```

When the file has been read and all blocks have been sent, you need to tell storage about it so that it glues the blocks together.

```javascript
function commitBlocks() {
    // Address of the block list commit operation
    var uri = submitUri + '&comp=blocklist';
    var requestBody = '<?xml version="1.0" encoding="utf-8"?><BlockList>';
    for (var i = 0; i < blockIds.length; i++) {
        requestBody += '<Latest>' + blockIds[i] + '</Latest>';
    }
    requestBody += '</BlockList>';
    $.ajax({
        url: uri,
        type: 'PUT',
        data: requestBody,
        beforeSend: function (xhr) {
            // MIME type of the file, stored with the blob
            xhr.setRequestHeader('x-ms-blob-content-type', files[fileIndex].type);
            // Cache lifetime of the blob: one year
            xhr.setRequestHeader('x-ms-blob-cache-control', 'max-age=31536000');
            xhr.setRequestHeader('Content-Length', requestBody.length);
        },
        success: function () {
            // Replace the progress text with a link to the uploaded file, then start the next file
            $('#progress_' + fileIndex).text('');
            ($('<a>').attr('href', storageurl + files[fileIndex].name).text(files[fileIndex].name)).appendTo($('#progress_' + fileIndex));
            fileIndex++;
            startUpload();
        },
        error: function () {
            $('#progress_' + fileIndex).text('Upload error');
        }
    });
}
```

That's all. Using CORS, you can now upload files directly to storage, almost without involving your own servers. And that is good: the server does less work and costs less, so more money is left for everything else.

Project sources: https://github.com/unconnected4/CORSatWindowsAzureBlobStorage

Links to read:

HTML5 File API: multiple file uploads to the server

Put Blob (REST API): important for the list of headers

Windows Azure Storage and Cross-Origin Resource Sharing (CORS) - Lets Have Some Fun

Uploading Large Files in Windows Azure Blob Storage Using Shared Access Signature, HTML, and JavaScript

Source: https://habr.com/ru/post/204966/
