3D VDI acceleration in practice. Part 1


Part 1 - vSGA and vDGA

The lack of hardware graphics acceleration is a significant obstacle to adopting virtualization in companies working in design, engineering, product development and similar fields. Let us look at the new possibilities that appeared with the release of NVIDIA GRID.

Desktop virtualization (VDI) has firmly established itself, primarily in the corporate market, and is confidently making its way into other segments, including in the form of public cloud services (Desktop as a Service). The lack of hardware-accelerated graphics hinders the use of this technology in industries that could appreciate VDI benefits such as remote accessibility, data security and simpler staff outsourcing.


The first steps toward 3D acceleration in VDI were taken quite a long time ago and consisted of passing PCI devices through to virtual machines. This made it possible to assign to VDI machines video cards installed in the server itself or connected to it via external PCIe expansion chassis such as the Dell PowerEdge C410x. The drawbacks of this approach are obvious: higher power consumption, more rack space and high cost.

NVIDIA GRID Technology at a Glance

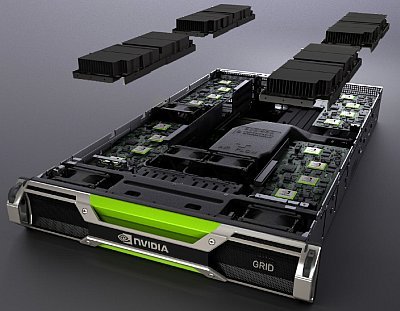

With the announcement of NVIDIA GRID technology last year (it was called NVIDIA VGX at the time), interest in 3D-accelerated VDI has grown significantly. The essence of GRID, which was designed specifically for 3D acceleration in virtual environments, is quite simple and rests on the following principles:

- Several graphics accelerators aggregated on a single PCIe card;

- The ability to virtualize graphics accelerators at the hypervisor level;

- The ability to virtualize graphics accelerators with GRID Virtual GPU technology.

NVIDIA has so far released two cards based on the Kepler architecture, the GRID K1 and the GRID K2. Their characteristics are as follows:

| | GRID K1 | GRID K2 |
|---|---|---|
| Number of GPUs | 4 entry-level Kepler GPUs | 2 high-end Kepler GPUs |
| CUDA cores | 768 | 3072 |
| Total memory | 16 GB DDR3 | 8 GB GDDR5 |
| Maximum power | 130 W | 225 W |
| Card length | 26.7 cm | 26.7 cm |
| Card height | 11.2 cm | 11.2 cm |
| Card width | Dual slot | Dual slot |
| Display outputs | None | None |
| Auxiliary power | 6-pin connector | 8-pin connector |
| PCIe | x16 | x16 |
| PCIe generation | Gen3 (Gen2 compatible) | Gen3 (Gen2 compatible) |
| Cooling | Passive | Passive |
| Technical specifications | GRID K1 board specifications | GRID K2 board specifications |

In effect, the GRID K1 is four Quadro K600-class GPUs on a single PCIe card, and the GRID K2 is two Quadro K5000-class GPUs. Even without virtualization, this significantly increases the density of graphics accelerators in servers.
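Dividing the board totals from the table by the number of GPUs shows what each individual accelerator gets, which is consistent with the Quadro comparison above. A small Python sketch; the class and field names are illustrative only, and the figures are taken straight from the table:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GridBoard:
    """Board-level totals from the GRID specification table (illustrative helper)."""
    name: str
    gpus: int         # physical GPUs on the board
    cuda_cores: int   # total CUDA cores across all GPUs
    memory_gb: int    # total on-board memory across all GPUs

    def cores_per_gpu(self) -> int:
        return self.cuda_cores // self.gpus

    def memory_per_gpu_gb(self) -> float:
        return self.memory_gb / self.gpus


GRID_K1 = GridBoard("GRID K1", gpus=4, cuda_cores=768, memory_gb=16)
GRID_K2 = GridBoard("GRID K2", gpus=2, cuda_cores=3072, memory_gb=8)

if __name__ == "__main__":
    for board in (GRID_K1, GRID_K2):
        # Prints 192 cores / 4 GB for the K1 and 1536 cores / 4 GB for the K2.
        print(f"{board.name}: {board.cores_per_gpu()} CUDA cores and "
              f"{board.memory_per_gpu_gb():g} GB per GPU")
```

Both boards end up with 4 GB per GPU; the difference lies in the number of cores per GPU and in the memory type (DDR3 versus GDDR5).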

Several server vendors now offer GRID-ready platforms that accommodate up to four GRID cards in one server, which eliminates the need for external PCIe expansion chassis.

GRID is supported by the VMware, Citrix and Microsoft hypervisors, as well as by the VMware and Citrix desktop virtualization products (and by Microsoft's, if server-based session sharing is counted).

Description of our test bench

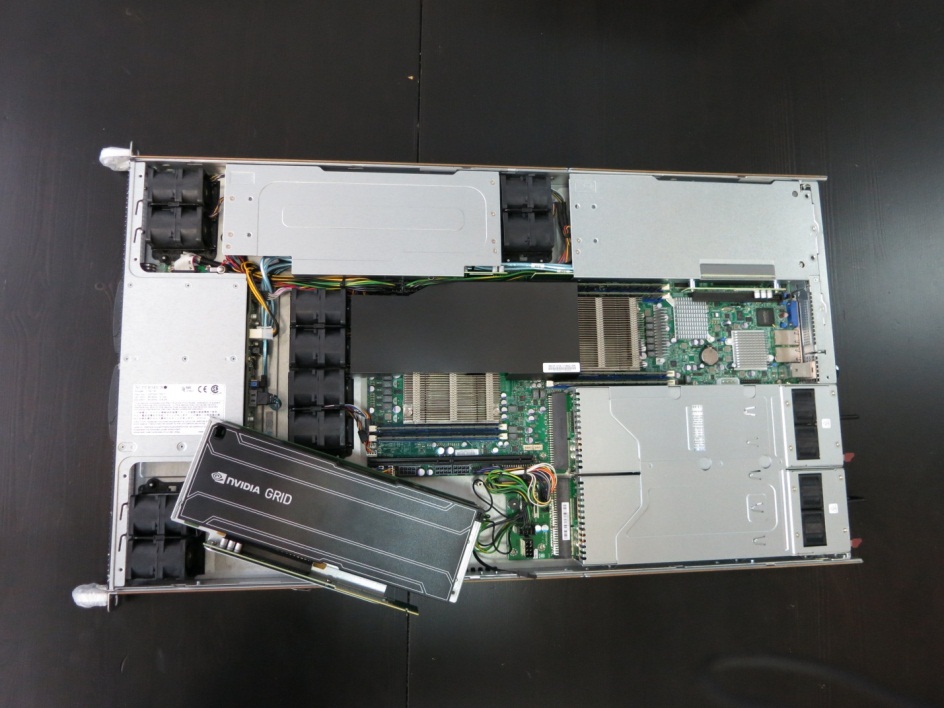

For our test bench, we decided to use the 1U SuperMicro 1027GR-TRFT server.

Its main features are:

- Dual socket R (LGA 2011) supports Intel® Xeon® processor E5-2600 and E5-2600 v2 family

- Up to 512GB ECC DDR3, up to 1866MHz; 8x DIMM sockets

- 3x PCI-E 3.0 x16 slots (support GPU / Xeon Phi cards), 1x PCI-E 3.0 x8 (in x16) low-profile slot

- Intel® X540 10GBase-T Controller

- 4x hot-swap 2.5" SATA3 drive bays

- 1800 W redundant power supplies, Platinum level (94%+)

We chose this server for its high density (up to three GRID cards in 1U) and its built-in 10GBase-T network interfaces.

The SATA drive bays allow inexpensive SSDs to be used for host-based caching of data access, which is very useful for VDI workloads with their characteristic disk activity peaks at the beginning and end of the working day.

At current memory module prices, eight DIMM slots are quite sufficient, since VM density per server is limited by CPU and GPU resources anyway.

We installed an NVIDIA GRID K1 card in this server. Here is a photo of the server with the card ready for installation:

As the virtualization platform we chose VMware vSphere, which we know well. Looking ahead, in the second part of this article we will have to use Citrix XenServer, because at the moment it is the only platform that supports GRID Virtual GPU technology, and only as a Tech Preview.

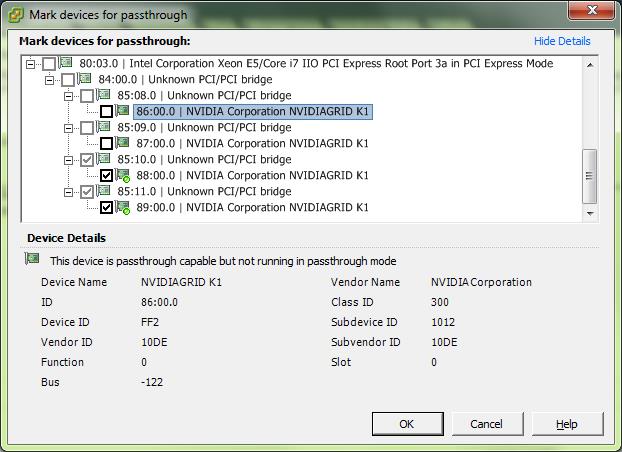

The ESXi hypervisor detects the card as four NVIDIA GRID K1 devices behind a PCI-to-PCI bridge. Each accelerator can therefore be used separately: either as a passthrough device attached to a VM or as the basis for virtualization at the hypervisor level.

The NVIDIA driver is installed in the hypervisor:

```
~ # esxcli software vib list | grep NVIDIA
NVIDIA-VMware_ESXi_5.1_Host_Driver  304.76-1OEM.510.0.0.802205  NVIDIA  VMwareAccepted  2013-03-26
```

All devices that are not put into passthrough mode are initialized and loaded by the NVIDIA driver during the boot process:

```
2013-10-28T06:12:42.521Z cpu7:9838)Loading module nvidia ...
2013-10-28T06:12:42.535Z cpu7:9838)Elf: 1852: module nvidia has license NVIDIA
2013-10-28T06:12:42.692Z cpu7:9838)module heap: Initial heap size: 8388608, max heap size: 68476928
2013-10-28T06:12:42.692Z cpu7:9838)vmklnx_module_mempool_init: Mempool max 68476928 being used for module: 77
2013-10-28T06:12:42.693Z cpu7:9838)vmk_MemPoolCreate passed for 2048 pages
2013-10-28T06:12:42.693Z cpu7:9838)module heap: using memType 2
2013-10-28T06:12:42.693Z cpu7:9838)module heap vmklnx_nvidia: creation succeeded. id = 0x410037000000
2013-10-28T06:12:42.943Z cpu7:9838)PCI: driver nvidia is looking for devices
2013-10-28T06:12:42.943Z cpu7:9838)PCI: driver nvidia claimed device 0000:86:00.0
2013-10-28T06:12:42.943Z cpu7:9838)PCI: driver nvidia claimed device 0000:87:00.0
2013-10-28T06:12:42.943Z cpu7:9838)PCI: driver nvidia claimed 2 devices
NVRM: loading NVIDIA UNIX x86_64 Kernel Module 304.76 Sun Jan 13 20:13:01 PST 2013
2013-10-28T06:12:42.944Z cpu7:9838)Mod: 4485: Initialization of nvidia succeeded with module ID 77.
2013-10-28T06:12:42.944Z cpu7:9838)nvidia loaded successfully.
```

After loading the hypervisor
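Because the host driver claims only the GPUs that are not in passthrough mode, the boot log tells you which accelerators remain available for hypervisor-level sharing. In the log above the driver claimed two devices, 0000:86:00.0 and 0000:87:00.0, so presumably the other two K1 GPUs were reserved for passthrough. A minimal Python sketch that extracts these addresses; it assumes the "PCI: driver nvidia claimed device" line format shown above, and the helper name is hypothetical:

```python
import re

# Matches lines such as
# "...cpu7:9838)PCI: driver nvidia claimed device 0000:86:00.0"
# and captures the PCI address (domain:bus:device.function).
CLAIM_RE = re.compile(
    r"PCI: driver nvidia claimed device "
    r"([0-9a-fA-F]{4}:[0-9a-fA-F]{2}:[0-9a-fA-F]{2}\.[0-7])"
)


def claimed_gpus(log_text: str) -> list[str]:
    """Return the PCI addresses claimed by the host nvidia driver, in log order."""
    return CLAIM_RE.findall(log_text)


SAMPLE_LOG = """\
2013-10-28T06:12:42.943Z cpu7:9838)PCI: driver nvidia is looking for devices
2013-10-28T06:12:42.943Z cpu7:9838)PCI: driver nvidia claimed device 0000:86:00.0
2013-10-28T06:12:42.943Z cpu7:9838)PCI: driver nvidia claimed device 0000:87:00.0
2013-10-28T06:12:42.943Z cpu7:9838)PCI: driver nvidia claimed 2 devices
"""

if __name__ == "__main__":
    print(claimed_gpus(SAMPLE_LOG))
```

The summary line "claimed 2 devices" is deliberately not matched, since it carries no address.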

We use Citrix XenDesktop 7 as the VDI platform; it also runs our production infrastructure that provides VDI services to our customers. The test machines use HDX 3D Pro technology, which efficiently encodes the image rendered on the GPU and delivers it to the client. The test virtual machine has the following configuration: 4 vCPUs at 2 GHz, 8 GB RAM, 60 GB disk.

vSGA testing

vSGA (Virtual Shared Graphics Acceleration) is a VMware technology that virtualizes the GPUs installed in servers running the VMware ESXi hypervisor and uses them to provide 3D acceleration to the virtual video cards assigned to virtual machines.

The technology has many limitations in the performance and functionality of the virtual video cards; however, it allows the highest density of virtual machines per GPU.

In practice, we managed to start machines whose total virtual video memory was almost twice the physical video memory of the GPUs in use.
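The overcommit ratio here is simply the video memory assigned to all VMs divided by the physical video memory behind them. A small Python sketch; the figures in the example (one 4 GB GRID K1 GPU, 512 MB of video memory per VM) are illustrative and are not our measured configuration:

```python
import math


def vram_overcommit(vram_per_vm_mb: int, vm_count: int, physical_vram_mb: int) -> float:
    """Total virtual video memory handed to VMs divided by physical video memory."""
    return vram_per_vm_mb * vm_count / physical_vram_mb


def max_vms_for_ratio(vram_per_vm_mb: int, physical_vram_mb: int, ratio: float) -> int:
    """Largest number of VMs whose combined virtual video memory stays within ratio."""
    return math.floor(physical_vram_mb * ratio / vram_per_vm_mb)


if __name__ == "__main__":
    # Illustrative: one 4096 MB K1 GPU, VMs with 512 MB of video memory each.
    print(max_vms_for_ratio(512, 4096, 2.0))  # 16
    print(vram_overcommit(512, 16, 4096))     # 2.0
```

Without overcommit (ratio 1.0) the same GPU would fit only eight such VMs.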

The virtual video card has the following capabilities:

- Supported APIs: DirectX 9, OpenGL 2.1

- Maximum video memory: 512 MB

- GPU performance: allocated dynamically, not controllable.

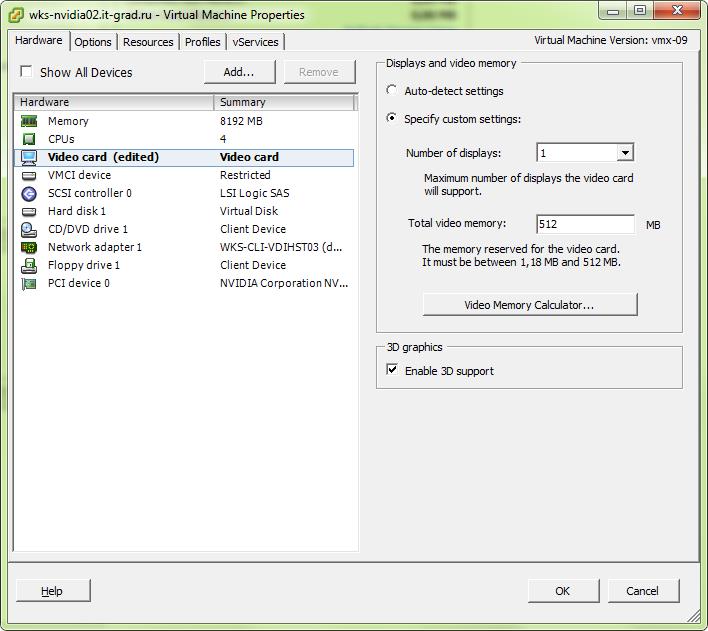

In the case of using VMware View, this virtual machine configuration can be done directly from the View management interface, but in our case, to activate hardware acceleration for a virtual video card, you need to perform two actions:

- enable 3D support

- set the size of the video memory in the properties of the video card in the editing machine:

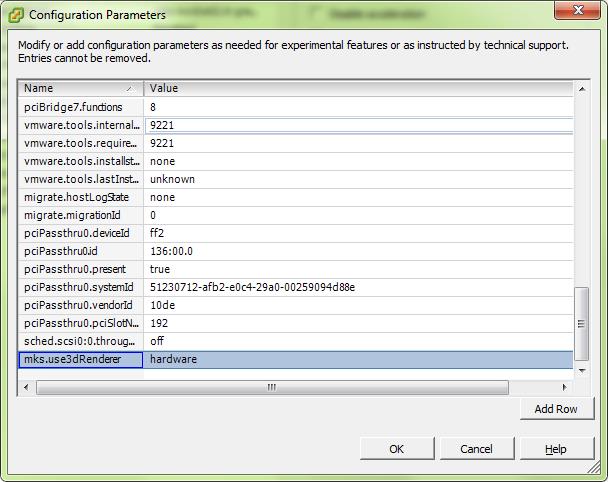

and add the parameter mks.use3dRenderer = hardware to its parameters:
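The mks.use3dRenderer parameter can also be written directly into the VM's .vmx file, preferably while the VM is powered off. A minimal Python sketch that sets a key in .vmx text, replacing an existing entry or appending a new one; it assumes the usual key = "value" line format of .vmx files, and the function name and sample file content are hypothetical:

```python
import re


def set_vmx_option(vmx_text: str, key: str, value: str) -> str:
    """Set key = "value" in .vmx text, replacing an existing entry or appending one."""
    new_line = f'{key} = "{value}"'
    # Match a whole line assigning exactly this key; [ \t]* avoids spanning lines.
    pattern = re.compile(rf"^[ \t]*{re.escape(key)}[ \t]*=.*$", re.MULTILINE)
    if pattern.search(vmx_text):
        # A function replacement avoids backslash/group interpretation in new_line.
        return pattern.sub(lambda _m: new_line, vmx_text, count=1)
    if vmx_text and not vmx_text.endswith("\n"):
        vmx_text += "\n"
    return vmx_text + new_line + "\n"


if __name__ == "__main__":
    # Hypothetical fragment of a test VM's .vmx file.
    vmx = 'displayName = "vdi-test"\nmemSize = "8192"\n'
    print(set_vmx_option(vmx, "mks.use3dRenderer", "hardware"), end="")
```

Replacing in place rather than always appending keeps the file free of duplicate keys when the setting is changed later.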

In the guest OS, this virtual video card appears as “VMware SVGA 3D”. It differs from the regular virtual video card only in the amount of memory and in hardware acceleration of the APIs listed above.

The FurMark results on such a VDI machine make it clear that it is not suitable for gaming (note that during testing the physical video card was used by a single virtual machine, so all of its computing resources, minus virtualization overhead, were available to the test):

AutoCAD 2014 reports the following video card capabilities:

```
Enhanced 3D Performance: Available and on
Smooth display: Available and off
Gooch shader: Available and using hardware
Per-pixel lighting: Available and on
Full-shadow display: Available and on
Texture compression: Available and off
Advanced material effects: Available and on
Autodesk driver: Not Certified
Effect support:
Enhanced 3D Performance: Available
Smooth display: Available
Gooch shader: Available
Per-pixel lighting: Available
Full-shadow display: Available
Texture compression: Available
Advanced material effects: Available
```

As you can see, formally all hardware acceleration features are supported by the driver. We expect support problems to show up only with heavier products that rely on, for example, the CUDA architecture.

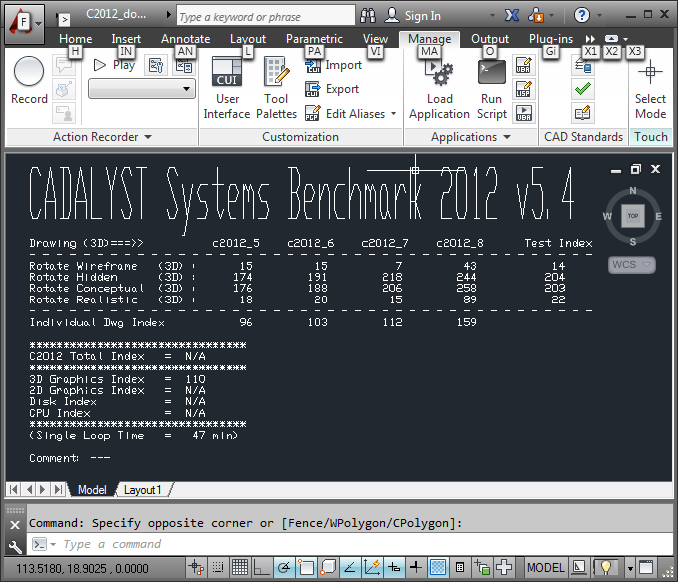

Cadalyst Benchmark test results:

The results are not impressive, but the software is usable. If you do not need high performance or work with complex models (for example, in a classroom), the high density and low cost of such machines may be an advantage.

vDGA testing

vDGA (Virtual Dedicated Graphics Acceleration) is VMware's name for passing a physical video card through to a virtual machine.

For this technology, NVIDIA GRID brings only one advantage: high GPU density, which removes the need for external PCIe expansion chassis.

For example, the server on our test bench can hold three NVIDIA GRID K1 cards, which gives 12 independent Quadro K600-class accelerators. This makes it possible to run 12 virtual machines with dedicated GPUs on one server, making good use of its capacity and, depending on the load profile, keeping GPU resources in balance with CPU resources.
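The density figure follows from simple arithmetic: in vDGA each VM receives a whole physical GPU, and every passthrough VM needs a full memory reservation. A small Python sketch; it assumes every VM is sized like our test machine (4 vCPUs, 8 GB RAM), and the class is an illustrative helper, not part of any VMware or NVIDIA tool:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VdgaHostPlan:
    """Hypothetical sizing helper for vDGA: one physical GPU per VM."""
    cards: int           # GRID boards installed in the server
    gpus_per_card: int   # 4 for GRID K1, 2 for GRID K2
    vcpus_per_vm: int
    ram_gb_per_vm: int

    def vm_count(self) -> int:
        # Each vDGA VM gets one whole physical GPU via passthrough.
        return self.cards * self.gpus_per_card

    def total_vcpus(self) -> int:
        return self.vm_count() * self.vcpus_per_vm

    def reserved_ram_gb(self) -> int:
        # Passthrough VMs need a full memory reservation, so RAM is not overcommitted.
        return self.vm_count() * self.ram_gb_per_vm


if __name__ == "__main__":
    # Three GRID K1 cards, every VM sized like our test machine.
    plan = VdgaHostPlan(cards=3, gpus_per_card=4, vcpus_per_vm=4, ram_gb_per_vm=8)
    print(plan.vm_count(), plan.total_vcpus(), plan.reserved_ram_gb())  # 12 48 96
```

Under these assumptions the host needs 96 GB of reserved RAM, well within the server's 512 GB maximum, which fits the earlier point that CPU and GPU, not memory slots, limit density. Whether 48 vCPUs are acceptable depends on the Xeon E5 models installed. With GRID K2 cards the same server would host six such VMs.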

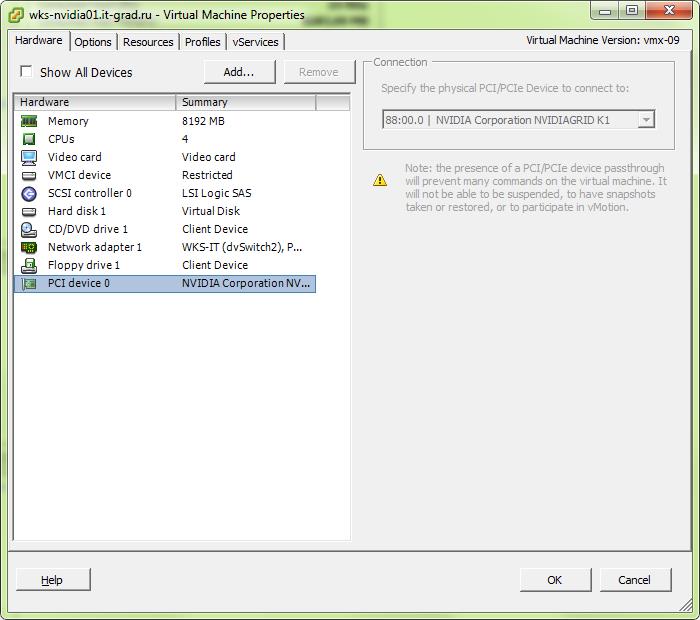

To pass a video card through to a virtual machine, enable passthrough (DirectPath I/O) for this PCIe device in the host configuration and add it as a PCI device to the virtual machine's configuration:

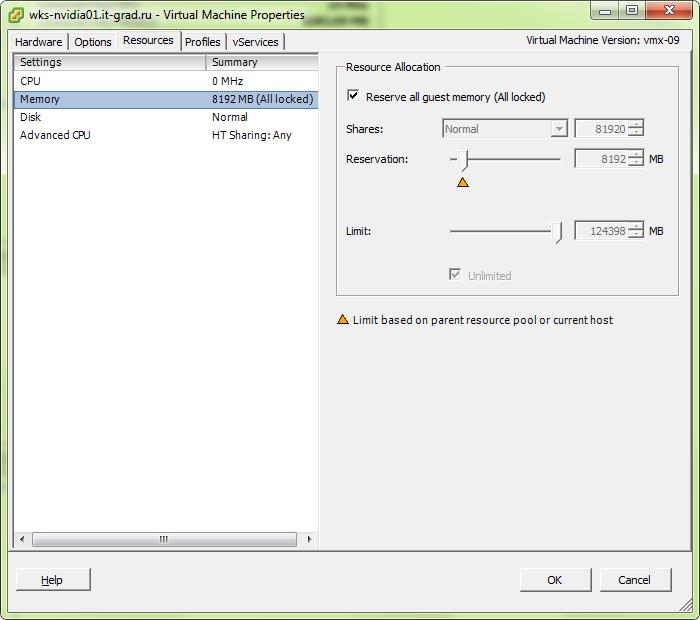

You also need to set a full memory reservation for this virtual machine, since the passed-through device accesses guest memory directly via DMA.

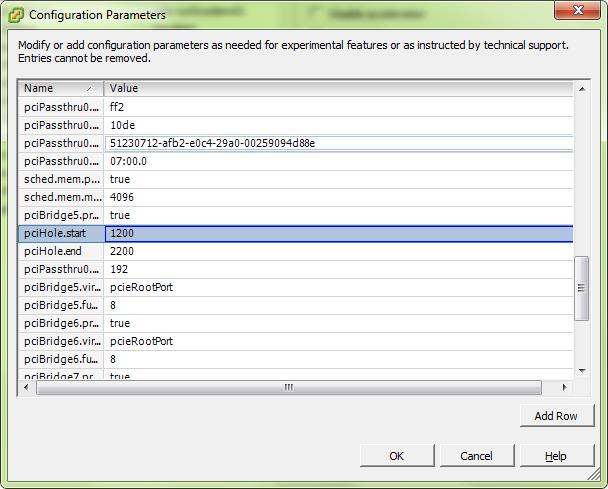

and configure the PCI hole. Opinions on the right values differ; we chose values from 1200 to 2200:
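The vDGA prerequisites described above (a passthrough PCI device, a full memory reservation and a PCI hole) can be checked mechanically before a VM is powered on. A minimal Python sketch over a dict of .vmx settings; the key names memSize, sched.mem.min, pciPassthru0.present, pciHole.start and pciHole.end are our assumption about how these settings appear in the .vmx file and should be verified against the VMware documentation for your ESXi version. Only the 1200 and 2200 values come from our setup:

```python
def vdga_config_problems(vmx: dict[str, str]) -> list[str]:
    """List problems in a VM's .vmx settings that would block a vDGA setup.

    Key names are assumed, not confirmed by this article.
    """
    problems = []

    # Full memory reservation: reserved MB must cover the configured memory size.
    mem_mb = int(vmx.get("memSize", "0"))
    reserved_mb = int(vmx.get("sched.mem.min", "0"))
    if reserved_mb < mem_mb:
        problems.append(f"memory reservation {reserved_mb} MB is below memSize {mem_mb} MB")

    # The GPU must be attached to the VM as a passthrough PCI device.
    if vmx.get("pciPassthru0.present", "").upper() != "TRUE":
        problems.append("no PCI passthrough device attached")

    # The PCI hole must be configured as a valid start/end range.
    start, end = vmx.get("pciHole.start"), vmx.get("pciHole.end")
    if start is None or end is None:
        problems.append("pciHole.start / pciHole.end not set")
    elif int(start) >= int(end):
        problems.append("pciHole.start must be below pciHole.end")

    return problems


if __name__ == "__main__":
    vm = {
        "memSize": "8192",
        "sched.mem.min": "8192",
        "pciPassthru0.present": "TRUE",
        "pciHole.start": "1200",
        "pciHole.end": "2200",
    }
    print(vdga_config_problems(vm))  # []
```

Returning a list of messages instead of raising on the first problem lets one pass report everything that still needs fixing.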

In this case the guest OS sees the video card as a full-fledged NVIDIA device, which requires installing the drivers for the GRID card family.

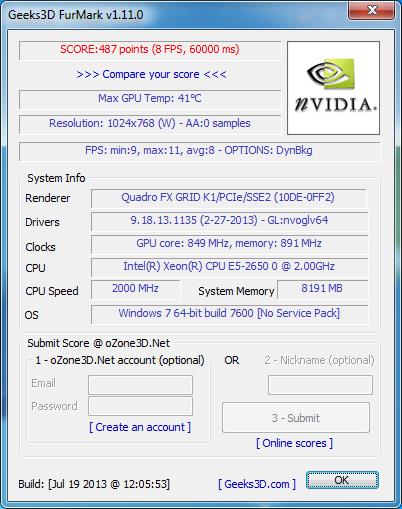

The FurMark results are close to those from the vSGA test, which suggests that the virtualization layer is relatively efficient for this test:

AutoCAD 2014 reports the following:

```
Current Effect Status:
Enhanced 3D Performance: Available and on
Smooth display: Available and off
Gooch shader: Available and using hardware
Per-pixel lighting: Available and on
Full-shadow display: Available and on
Texture compression: Available and off
Advanced material effects: Available and on
Autodesk driver: Not Certified
Effect support:
Enhanced 3D Performance: Available
Smooth display: Available
Gooch shader: Available
Per-pixel lighting: Available
Full-shadow display: Available
Texture compression: Available
Advanced material effects: Available
```

As expected, all features are supported, but the card is not certified: of the GRID series, only the K2 is certified for AutoCAD.

Results of the Cadalyst 2012 benchmark:

As we can see, the passed-through video card scores about four times higher than the virtualized one. Such a machine is already suitable for a designer's work.

If the K1 is not fast enough, you can install a K2 and get a high-end video card inside the virtual machine.

In the second part of the article

We will discuss in detail GPU virtualization with NVIDIA technologies, which promise support for all APIs available on the physical card and performance sufficient for comfortable CAD work. We will describe the test bench, measure the performance of such virtual video cards and sum up the results. To be continued.

Source: https://habr.com/ru/post/204768/
