MPEG-DASH in nginx-rtmp-module: live video in a browser without Flash

What is MPEG-DASH

MPEG-DASH is a new-generation technology for adaptive video streaming. The data is split into fragments that are delivered to the client over HTTP. This makes it possible to deliver video reliably over existing HTTP infrastructure, pass through proxy servers, and ride out network problems, changes of network address, and so on.

DASH stands for Dynamic Adaptive Streaming over HTTP. The standard, ISO/IEC 23009-1:2012, was developed by the MPEG group in 2011. MPEG-DASH is broadly similar to the well-known HLS (HTTP Live Streaming) technology developed by Apple and widely used on mobile devices running iOS and Android. The stream is delivered as a series of small fragments plus a playlist (manifest) that contains stream metadata and links to the fragments.

In HLS, the playlist is stored in m3u8 format (an extension of m3u), and the fragments in MPEG-TS (part of the MPEG-2 standard). In MPEG-DASH, the playlist (manifest) is an XML document, and fragments can be either MPEG-TS or ISO BMFF (in other words, mp4). In practice, client support for MPEG-TS is limited, so you have to focus on the more modern mp4.

What is the advantage of MPEG-DASH? The main one is that browsers are actively adding support for it, which makes it possible to broadcast video without the heavy and rather annoying Flash. MPEG-DASH is also supported by new TV models as part of the HbbTV standard.


Below, I describe the technology itself and how to set up live broadcasting to the browser using nginx-rtmp-module and dash.js.

Manifest

The MPEG-DASH manifest is an XML document. Its specification is given in ISO/IEC 23009-1:2012. The manifest format is fairly complex: it supports periods, time shifts, stream descriptions, and various ways of numbering fragments. For live streaming with constant audio and video channels, we need only a limited set of features:

- video characteristics: dimensions, frame rate, codec, bitrate

- audio characteristics: sample rate, codec, bitrate

- links to the actual audio and video fragments

- links to the initialization fragments of the streams
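The last two items work together: with a SegmentTemplate and a SegmentTimeline, the manifest does not list media fragment URLs one by one; the client builds them by substituting each fragment's start time into the $Time$ placeholder. Below is a minimal Python sketch of that expansion, using a trimmed copy of the video part of the manifest example in this article. It assumes only the subset of the format used there ($Time$ templates and t/d/r attributes; no $Number$, no open-ended r="-1", presentationTimeOffset ignored). The names expand_timeline, fragment_urls and MANIFEST are illustrative, not part of dash.js or nginx-rtmp-module.

```python
import xml.etree.ElementTree as ET

# Namespace of the MPD schema used by the example manifest.
NS = {"mpd": "urn:mpeg:dash:schema:mpd:2011"}

# Trimmed copy of the video AdaptationSet from the example manifest.
MANIFEST = """<?xml version="1.0"?>
<MPD type="dynamic" xmlns="urn:mpeg:dash:schema:mpd:2011">
  <Period start="PT0S" id="dash">
    <AdaptationSet segmentAlignment="true">
      <Representation id="video" mimeType="video/mp4" codecs="avc1.42c028" bandwidth="641000">
        <SegmentTemplate timescale="1000" media="mystream-$Time$.m4v" initialization="mystream-init.m4v">
          <SegmentTimeline>
            <S t="0" d="5888"/>
            <S t="5888" d="6760"/>
            <S t="12648" d="6000"/>
            <S t="18648" d="8680"/>
            <S t="27328" d="6545"/>
          </SegmentTimeline>
        </SegmentTemplate>
      </Representation>
    </AdaptationSet>
  </Period>
</MPD>"""


def expand_timeline(template):
    """Yield (start, duration, url) for every fragment in a SegmentTemplate.

    Times are in units of the template's timescale (milliseconds here).
    """
    media = template.get("media")
    timeline = template.find("mpd:SegmentTimeline", NS)
    t = 0
    for s in timeline.findall("mpd:S", NS):
        t = int(s.get("t", t))  # an explicit t resets the running clock
        d = int(s.get("d"))
        repeat = int(s.get("r", 0))  # r = number of extra fragments with the same d
        if repeat < 0:
            raise NotImplementedError("open-ended r is not handled in this sketch")
        for _ in range(repeat + 1):
            yield t, d, media.replace("$Time$", str(t))
            t += d


def fragment_urls(xml_text):
    """Map each Representation id to its init URL followed by its media URLs."""
    root = ET.fromstring(xml_text)
    result = {}
    for rep in root.findall(".//mpd:Representation", NS):
        template = rep.find("mpd:SegmentTemplate", NS)
        urls = [template.get("initialization")]
        urls += [url for _, _, url in expand_timeline(template)]
        result[rep.get("id")] = urls
    return result


if __name__ == "__main__":
    for rep_id, urls in fragment_urls(MANIFEST).items():
        print(rep_id, urls)
```

Each S entry starts where the previous one ended (0 + 5888 = 5888, 5888 + 6760 = 12648, and so on), so the last fragment ends at 27328 + 6545 = 33873 ms, roughly the 33 seconds between availabilityStartTime and availabilityEndTime in the full example.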

Manifest example

```xml
<?xml version="1.0"?>
<MPD type="dynamic" xmlns="urn:mpeg:dash:schema:mpd:2011"
    availabilityStartTime="2013-11-27T12:40:35+04:00"
    availabilityEndTime="2013-11-27T12:41:08+04:00"
    minimumUpdatePeriod="PT5S"
    minBufferTime="PT5S"
    timeShiftBufferDepth="PT0H0M0.00S"
    suggestedPresentationDelay="PT10S"
    profiles="urn:mpeg:dash:profile:isoff-live:2011">
  <Period start="PT0S" id="dash">
    <AdaptationSet segmentAlignment="true"
        maxWidth="768" maxHeight="576" maxFrameRate="24">
      <Representation id="video" mimeType="video/mp4" codecs="avc1.42c028"
          width="768" height="576" frameRate="24" sar="1:1"
          startWithSAP="1" bandwidth="641000">
        <SegmentTemplate presentationTimeOffset="0" timescale="1000"
            media="mystream-$Time$.m4v" initialization="mystream-init.m4v">
          <SegmentTimeline>
            <S t="0" d="5888"/>
            <S t="5888" d="6760"/>
            <S t="12648" d="6000"/>
            <S t="18648" d="8680"/>
            <S t="27328" d="6545"/>
          </SegmentTimeline>
        </SegmentTemplate>
      </Representation>
    </AdaptationSet>
    <AdaptationSet segmentAlignment="true">
      <AudioChannelConfiguration
          schemeIdUri="urn:mpeg:dash:23003:3:audio_channel_configuration:2011"
          value="1"/>
      <Representation id="audio" mimeType="audio/mp4" codecs="mp4a.40.2"
          audioSamplingRate="48000" startWithSAP="1" bandwidth="125000">
        <SegmentTemplate presentationTimeOffset="0" timescale="1000"
            media="mystream-$Time$.m4a" initialization="mystream-init.m4a">
          <SegmentTimeline>
            <S t="0" d="5888"/>
            <S t="5888" d="6760"/>
            <S t="12648" d="6000"/>
            <S t="18648" d="8680"/>
            <S t="27328" d="6545"/>
          </SegmentTimeline>
        </SegmentTemplate>
      </Representation>
    </AdaptationSet>
  </Period>
</MPD>
```

Fragments

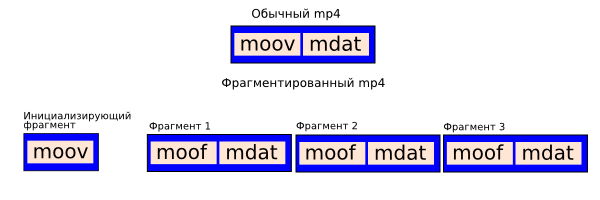

MPEG-DASH fragments are in mp4 format. However, these are not quite the mp4 files we are used to; they are fragmented mp4. A regular mp4 file consists of two main parts, metadata and data. Metadata is stored in the moov atom, and data in the mdat atom. The metadata gives, for each sample, its timestamp, duration, size, offset from the beginning of the file, and so on. In addition, moov stores information about the codecs and a block of codec parameters, without which the stream cannot be decoded.
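This layout is easy to inspect yourself: every atom (box) begins with a 4-byte big-endian size followed by a 4-byte type code, so the top level of a file can be walked in a few lines. The Python sketch below lists top-level boxes only and does not descend into moov or moof. The helpers top_level_boxes and make_box are hypothetical, and the demo builds tiny synthetic byte strings rather than reading real files.

```python
import struct


def top_level_boxes(data):
    """Return (type, size) for each top-level box (atom) in MP4 bytes."""
    boxes = []
    offset = 0
    while offset + 8 <= len(data):
        # 32-bit big-endian size, then a 4-character type code.
        size, kind = struct.unpack_from(">I4s", data, offset)
        header = 8
        if size == 1:  # a 64-bit "largesize" follows the type code
            (size,) = struct.unpack_from(">Q", data, offset + 8)
            header = 16
        elif size == 0:  # the box extends to the end of the data
            size = len(data) - offset
        if size < header:
            raise ValueError(f"corrupt box header at offset {offset}")
        boxes.append((kind.decode("latin-1"), size))
        offset += size
    return boxes


def make_box(kind, payload=b""):
    """Build a box with a 32-bit size header (used only for the demo)."""
    return struct.pack(">I4s", 8 + len(payload), kind.encode("ascii")) + payload


if __name__ == "__main__":
    # Synthetic stand-ins: the payloads are empty or zero-filled,
    # only the box layout matters here.
    regular = make_box("ftyp", b"isom") + make_box("moov") + make_box("mdat", bytes(16))
    fragment = make_box("moof") + make_box("mdat", bytes(16))
    print(top_level_boxes(regular))
    print(top_level_boxes(fragment))
    # For a real file: top_level_boxes(open("some.mp4", "rb").read())
```

A regular mp4 shows ftyp, moov and mdat at the top level. In a DASH media fragment you would instead expect moof followed by mdat, possibly preceded by other small boxes (for example styp or sidx) depending on the packager.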

In a fragmented mp4, the moov atom is stored in a separate initialization fragment and contains only general information about the stream: codec parameters, video dimensions, audio sample rate, and so on. Each media fragment, in turn, consists of two atoms, moof and mdat. The moof atom holds the metadata for the samples contained in that fragment, and its format differs significantly from moov. Because a fragment lacks the codec parameters, it cannot be played as a standalone mp4 file.

MPEG-DASH on the server

Starting with version 1.0.8, nginx-rtmp-module supports live streaming in MPEG-DASH. It works the same way as HLS broadcasting: the module writes DASH fragments and a manifest file to the specified directory. As the broadcast goes on, old fragments are deleted, new ones appear, and the manifest is updated.
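The update cycle described above behaves like a sliding window: each finished fragment is appended to the manifest, and once the window is full the oldest fragment drops out and its files can be removed. Below is a toy Python model of that idea. The class LiveWindow, its max_fragments limit and the immediate deletion are hypothetical illustrations, not nginx-rtmp-module's actual code, directives or defaults; only the mystream-TIME.m4a/.m4v naming follows this article.

```python
from collections import deque


class LiveWindow:
    """Toy model of a live DASH manifest that keeps only the newest fragments.

    Hypothetical: this does not reproduce when or how nginx-rtmp-module
    actually removes files.
    """

    def __init__(self, stream, max_fragments):
        self.stream = stream
        self.max_fragments = max_fragments
        self.fragments = deque()  # (start_ms, duration_ms), oldest first

    def add(self, start_ms, duration_ms):
        """Register a finished fragment; return the file names that expire."""
        self.fragments.append((start_ms, duration_ms))
        expired = []
        while len(self.fragments) > self.max_fragments:
            old_start, _ = self.fragments.popleft()
            # One audio and one video file per fragment, named by start time.
            expired += [f"{self.stream}-{old_start}.{ext}" for ext in ("m4a", "m4v")]
        return expired

    def timeline(self):
        """Return the <S> entries the manifest would currently list."""
        return [f'<S t="{t}" d="{d}"/>' for t, d in self.fragments]


if __name__ == "__main__":
    window = LiveWindow("mystream", max_fragments=3)
    for start, duration in [(0, 5888), (5888, 6760), (12648, 6000),
                            (18648, 8680), (27328, 6545)]:
        print("delete:", window.add(start, duration))
    print("\n".join(window.timeline()))
```

With a window of three, adding the fourth fragment expires mystream-0.m4a and mystream-0.m4v, and the manifest timeline then starts at t="5888".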

To build nginx with nginx-rtmp-module, specify the module with --add-module:

```sh
./configure --add-module=/path/to/nginx-rtmp-module ...
```

After building, set up MPEG-DASH:

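```nginx
rtmp {
    server {
        listen 1935;

        # write DASH fragments and the manifest to /tmp/dash
        application myapp {
            live on;
            dash on;
            dash_path /tmp/dash;
        }
    }
}

...

http {
    server {
        listen 8080;

        # serve the DASH fragments and manifest over HTTP
        location /dash {
            root /tmp;
            add_header Cache-Control no-cache;
        }
    }
}
```

Because nginx appends the request URI to root, a request for /dash/mystream.mpd is served from /tmp/dash/mystream.mpd, the directory set by dash_path.

Here is what the MPEG-DASH output looks like for a stream called mystream:

```text
# initialization fragments
mystream-init.m4a
mystream-init.m4v

# media fragments: mystream-TIME.m4X,
# where TIME is the fragment's start timestamp (the t value in the manifest)
mystream-0.m4a
mystream-0.m4v
mystream-5888.m4a
mystream-5888.m4v
mystream-12648.m4a
mystream-12648.m4v
mystream-18648.m4a
mystream-18648.m4v
mystream-27328.m4a
mystream-27328.m4v

# manifest (playlist)
mystream.mpd

# fragments currently being recorded
mystream-raw.m4a
mystream-raw.m4v
```

MPEG-DASH in the browser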

The main tool for MPEG-DASH playback in browsers today is the dash.js player, the reference client implementation of MPEG-DASH, developed by the DASH Industry Forum. The player relies on the browser's support for Media Source Extensions.

At the moment, the player works well with static video but still has some problems playing live streams. To support live broadcasts in Chrome, I had to modify the player a little. The modified version is in the live branch of my fork of the project. The player's authors have promised to fully solve the problem with live broadcasts in the next version.

Download and install dash.js from the fork:

```sh
# install dash.js into /var/www
cd /var/www
git clone https://github.com/arut/dash.js.git
cd dash.js
git checkout live
```

Open baseline.html in an editor and find the line with the default URL:

```js
url = "http://dash.edgesuite.net/envivio/dashpr/clear/Manifest.mpd",
```

Replace it with our URL:

```js
url = "http://localhost:8080/dash/mystream.mpd",
```

Add a location to the same nginx server (port 8080) to serve the dash.js files, including the test page:

```nginx
location /dash.js {
    root /var/www;
}
```

Now start broadcasting a stream named mystream:

```sh
ffmpeg -re -i ~/Videos/sintel.mp4 -c:v libx264 -profile:v baseline \
    -c:a libfaac -ar 44100 -ac 2 -f flv rtmp://localhost/myapp/mystream
```

Then open this page in the browser:

http://localhost:8080/dash.js/baseline.html

You may have to wait a few seconds for the broadcast manifest to be created and then refresh the page.
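Instead of refreshing by hand, you can check from a script whether nginx is already serving the manifest. Below is a minimal Python sketch using only the standard library. The URL assumes the setup above (nginx listening on 8080, location /dash, a stream published as mystream), and wait_for_manifest with its timeout and interval defaults is a hypothetical helper, not part of dash.js or nginx-rtmp-module.

```python
import time
import urllib.request

# Assumption: matches the nginx config and stream name used in this article.
MANIFEST_URL = "http://localhost:8080/dash/mystream.mpd"


def wait_for_manifest(url, timeout=30.0, interval=1.0):
    """Poll url until it answers successfully; return the body or None on timeout."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with urllib.request.urlopen(url, timeout=interval) as resp:
                return resp.read()
        except OSError:
            # URLError, HTTPError (e.g. 404 before the manifest exists) and
            # socket timeouts are all subclasses of OSError.
            pass
        if time.monotonic() >= deadline:
            return None
        time.sleep(interval)


if __name__ == "__main__":
    body = wait_for_manifest(MANIFEST_URL)
    if body is None:
        print("no manifest yet: is ffmpeg still publishing to rtmp://localhost/myapp/mystream?")
    else:
        print(f"manifest is ready ({len(body)} bytes)")
```

Polling with a short per-request timeout keeps the total wait close to the timeout value even when the server is not reachable at all.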

Browsers

For MPEG-DASH to work via dash.js, the browser must support the Media Source Extensions API. Support for these extensions is steadily improving but is still far from complete.

- Chrome (including mobile Chrome on Android 4.2 and later): supported in the current version

- IE: supported from version 11 on Windows 8

- Firefox: not supported. The developers promise to add Media Source Extensions support in the coming months.

- Safari: not supported. However, Safari is the only desktop browser with native HLS support.

Source: https://habr.com/ru/post/204666/
