ScienceHub #08: Neural Interfaces

Alexander Kaplan, one of Post-Science's favorite experts, not only told us all about neural interfaces, about how the brain can control external devices and interact with them, but also showed us his office and ran an experiment with a member of our film crew. More on this below.

Kaplan's work can be described as developing a new paradigm in psychophysiology. Until recently, scientists studied the brain through its electrical activity, since neurons, and the brain as a whole, run on electricity: nerve cells pass information to one another using current pulses called spikes. Then the idea arose to take this electrical signal and turn it into a command for controlling external devices. This became possible thanks to electroencephalography (EEG), which had previously been used to diagnose diseases. In EEG, the electromagnetic field produced by the electrical activity of cells passes through the skull to the surface of the head, where it is picked up by electrodes pressed against the skin.

Turning this electrical signal into a tool for controlling external equipment is far from a toy. We store and synthesize information about the outside world internally, and our only way of expressing it outwardly is through our muscles. But can everything be conveyed through thought alone?

Alexander Kaplan: “Muscles are the natural channel through which we express an inner world assembled from many inputs. If there were some direct channel for information to leave the brain, we could at least compare the two, or work on a philosophical and neurophysiological problem. We now say that such a channel can appear if the brain's electrical signals are used as the driver. That is the more general aspect, but there is also a pragmatic one. If we obtain a control signal directly from the brain, a person can say something directly, without using muscles. This is exactly the situation in a number of diseases, in particular stroke. After a stroke, people may be immobilized, with the motor system knocked out, yet remain conscious: only the brain areas associated with movement have been destroyed.

So we see two aspects of the new approach. If we take an electrical signal and build a command system out of it, we get a neural interface: on one side the brain, on the other some software and hardware that let the brain's electrical signal be expressed as information, movement, or control. We gain new access to the brain, and the brain gains a new way out into the world. Developing this channel is the subject of one of the most modern branches of neurophysiology and psychophysiology, and you could even say of psychology. The topic is now described by a capacious term: the brain-computer interface, or neural interface.”

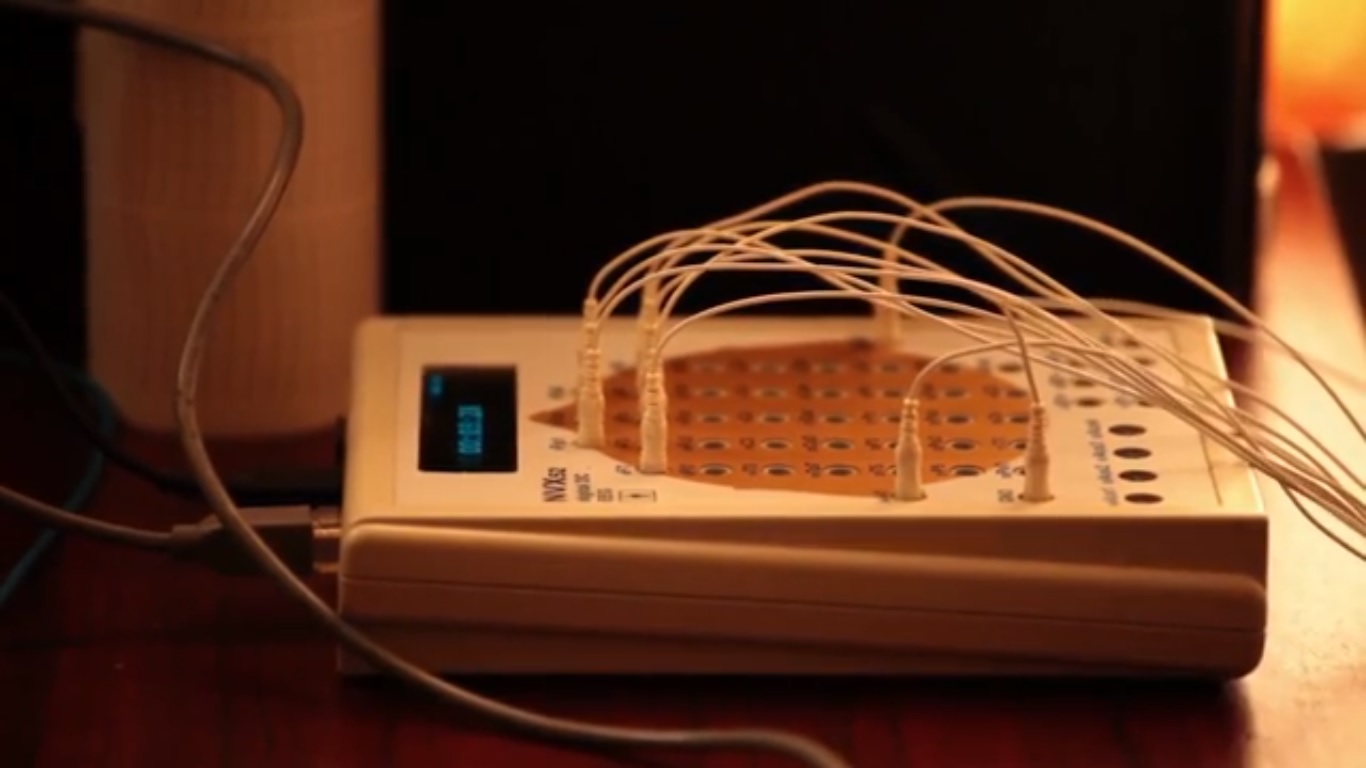

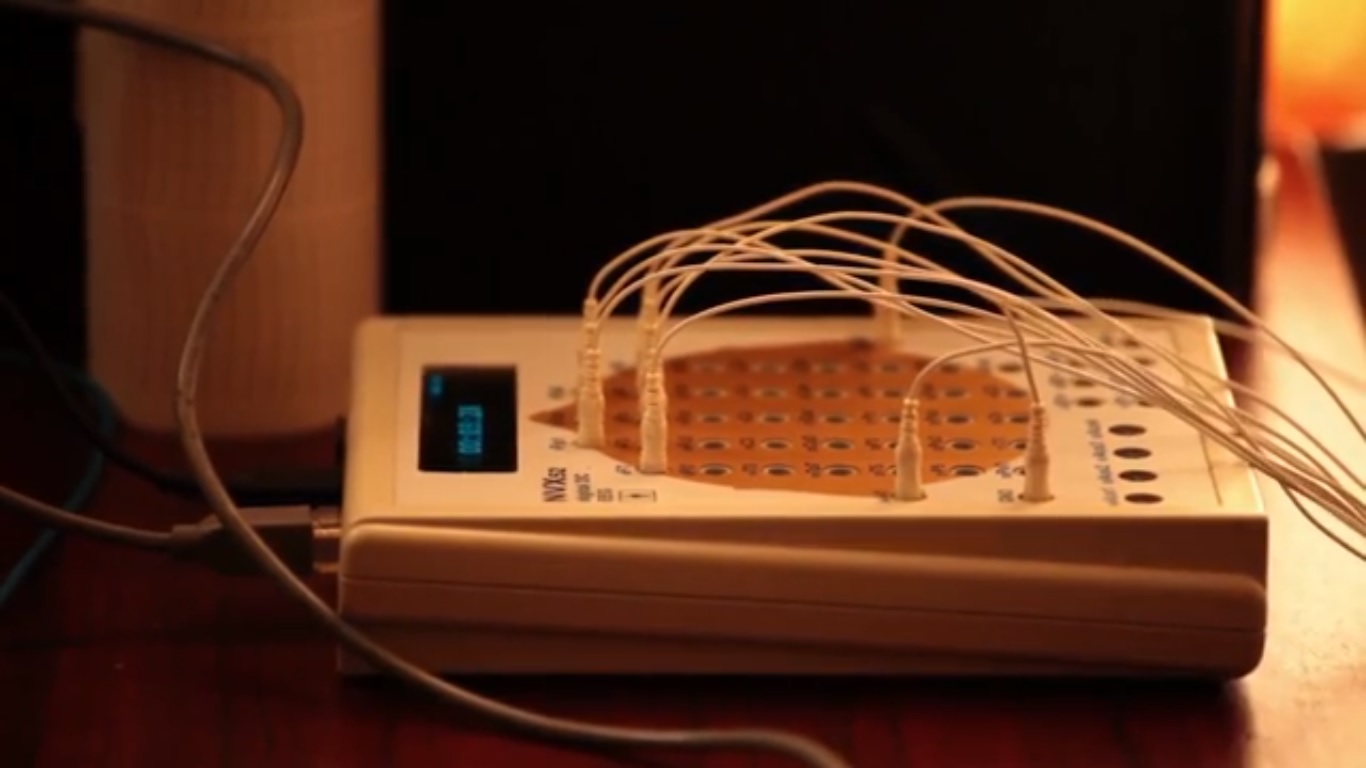

The idea of using the brain's electrical signal as a communication channel arose long ago, but researchers did not immediately see its potential. The main problem was that this channel is slow: to control something, an action has to follow immediately after the thought, and at the time it was not possible to build such a fast channel. Demand for the technology also appeared long ago, above all in the clinic, for patients whose brain is intact but whose motor function is impaired. Another requirement is portability, so that people do not depend on bulky equipment. This created demand for a microchip that records the brain's electrical activity, a microprocessor that processes and decodes it, and finally a wireless link from the recording electrodes.

AK: “The key new question in this field was whether a person can voluntarily change their own electrical activity. It changes naturally, driven by the brain's internal mechanisms. But if I myself can change my electrical activity, a communication channel is in effect already emerging. Now it is no longer signs of disease that must be decoded but signs of a volitional act: ‘I want to change my brain's electrical activity in a certain direction.’ A person needs to learn to make several such ‘movements’ in the EEG. These will serve as a few letters or a few commands, whose meaning we agree on in advance. For example, change ‘A’ would mean turning on the TV, change ‘B’ turning on the refrigerator, and so on. Or you could type letters. But there are more letters: an alphabet or a control panel can have many characters. Is it possible to obtain many distinguishable gradations of change in electrical activity? This is the problem scientists are working on today.

I must say that nobody finds it easy to change their electrical activity. You have to imagine something that produces a clear response in the electrical activity. Say I imagine an orange. Unfortunately, no clear change in electrical activity associated with my thinking about an orange can be identified. What to do? It turned out that only bodily images give a good response. For example, I imagine squeezing my right hand or my left hand. Physically, nothing happens, but in my mind there is already a right-hand squeeze, and this can be decoded.

So I really can voluntarily signal in my encephalogram what I want to do. We agree in advance that when I imagine squeezing my right hand, it means a specific command. But that gives only a few commands.

This is the key achievement since the discovery of the electroencephalogram and the decoding of its diagnostic signs of disease: signs of volitional effort now appear in the encephalogram itself. But there are only four to six of them. How can you type letters? Here scientists took a different route while developing the same principle of how to obtain signs of will.

Scientists did not ask people to produce more mental images. Instead they went the other way and presented the images externally, so that the brain's electrical activity responds to them. Suppose there is a matrix with a symbol or letter in each cell. The cells flash at different times, and in a continuous recording of the brain's electrical activity you can pick out the response to each character's flash.

What researchers found is that if I have chosen a symbol in my mind, the response to that symbol will be slightly different: roughly speaking, stronger. There is nothing unnatural about this. I want this symbol, so the response to it must differ, while the responses to all other characters will be about the same.

Our task is to catch this unusual response quickly. Once we catch it, we know which symbol the person means; if it is a letter, we type it. So, letter by letter, the person shifts attention from one symbol to the next, and each time a different character gives the larger, distinct response. In this way we obtain the brain's intentional responses to a person's will. It turns out that a person's volitional intention, the impulse toward a particular external action, can be obtained bypassing the muscles, simply by recording the brain's electrical activity. This, in essence, is brain-computer interface technology.”
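The flashing-matrix scheme described above matches what is commonly called a P300 speller (the interview does not use that name). A minimal sketch, assuming the EEG has already been cut into fixed-length epochs time-locked to each flash: average the epochs recorded after each symbol's flashes, take the mean amplitude in a window after the flash, and pick the symbol whose averaged response stands out. The window, sampling rate, symbol set, and synthetic data are illustrative assumptions; practical systems use trained classifiers rather than a raw amplitude comparison.

```python
import random
from statistics import mean

FS = 250                 # assumed sampling rate, Hz
WINDOW_S = (0.25, 0.50)  # assumed post-flash window (seconds) where the stronger response is sought


def averaged_epoch(epochs):
    """Average several equal-length epochs sample by sample (improves signal-to-noise)."""
    return [mean(samples) for samples in zip(*epochs)]


def window_amplitude(epoch, fs=FS, window=WINDOW_S):
    """Mean amplitude of an epoch inside the post-flash window."""
    start, stop = int(window[0] * fs), int(window[1] * fs)
    return mean(epoch[start:stop])


def pick_symbol(epochs_by_symbol):
    """epochs_by_symbol maps each symbol to the epochs recorded after its flashes.
    Returns the symbol whose averaged response is largest."""
    scores = {sym: window_amplitude(averaged_epoch(eps))
              for sym, eps in epochs_by_symbol.items()}
    return max(scores, key=scores.get)


def fake_epoch(is_target, rng, n=int(0.8 * FS)):
    """Toy epoch: Gaussian noise, plus a bump in the window if this is the chosen symbol."""
    start, stop = int(WINDOW_S[0] * FS), int(WINDOW_S[1] * FS)
    return [rng.gauss(0, 1) + (2.0 if is_target and start <= t < stop else 0.0)
            for t in range(n)]


if __name__ == "__main__":
    rng = random.Random(0)
    chosen = "D"  # the symbol the user is attending to
    data = {s: [fake_epoch(s == chosen, rng) for _ in range(10)] for s in "ABCDEF"}
    print(pick_symbol(data))  # D
```

Averaging over repeated flashes is what makes the weak response detectable, and it is also why each letter takes time: more repetitions mean fewer errors but slower typing.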

Is it possible to read a person's thoughts?

With decoding and additional devices, one can understand a person's intentions, but only if you agree with them in advance on what each change in the brain's electrical activity means. A person's thoughts cannot be read this way, because nobody has agreed in advance on what those changes would mean.
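The agreement described above can be sketched in two steps: decode which bodily image the person is imagining, then look up the command agreed on beforehand. Kaplan does not say how his lab decodes imagined hand squeezes; this sketch assumes one common approach, event-related desynchronization, in which imagining movement of one hand weakens the mu rhythm (roughly 8 to 12 Hz) over the opposite motor cortex, near electrode sites conventionally labeled C3 (left) and C4 (right). The sampling rate, band, state labels, command names, and synthetic signals are hypothetical. A state with no agreed meaning, such as thinking of an orange, simply maps to nothing.

```python
import math
from typing import Optional

FS = 250               # assumed sampling rate, Hz
MU_BAND = (8.0, 12.0)  # assumed mu-rhythm band, Hz

# Agreed in advance with the user: decoded state -> command (hypothetical names).
COMMANDS = {
    "right_hand": "tv_on",
    "left_hand": "fridge_on",
}


def band_power(signal, fs, band):
    """Average DFT power over the frequency bins that fall inside `band`."""
    n = len(signal)
    lo, hi = band
    powers = []
    for k in range(n // 2 + 1):
        if lo <= k * fs / n <= hi:
            re = sum(x * math.cos(2 * math.pi * k * t / n) for t, x in enumerate(signal))
            im = sum(x * math.sin(2 * math.pi * k * t / n) for t, x in enumerate(signal))
            powers.append((re * re + im * im) / n)
    return sum(powers) / len(powers) if powers else 0.0


def decode_imagery(c3, c4, fs=FS):
    """Assumption: imagined right-hand squeeze suppresses mu power at C3, left-hand at C4."""
    if band_power(c3, fs, MU_BAND) < band_power(c4, fs, MU_BAND):
        return "right_hand"
    return "left_hand"


def command_for(state: str) -> Optional[str]:
    """Return the agreed command, or None if no meaning was agreed for this state."""
    return COMMANDS.get(state)


def toy_channel(mu_amplitude, seconds=1.0, fs=FS):
    """Synthetic signal: 10 Hz mu rhythm plus a weaker 20 Hz component."""
    return [mu_amplitude * math.sin(2 * math.pi * 10 * t / fs)
            + 0.2 * math.sin(2 * math.pi * 20 * t / fs)
            for t in range(int(seconds * fs))]


if __name__ == "__main__":
    # Simulated right-hand imagery: mu rhythm weaker over C3 than over C4.
    state = decode_imagery(toy_channel(0.3), toy_channel(1.0))
    print(state, "->", command_for(state))  # right_hand -> tv_on
    print(command_for("orange"))            # None: no agreed meaning
```

The lookup table also makes the limitation concrete: with only four to six reliably distinguishable states, it can hold only four to six commands.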

AK: “There is a double transformation. First, we agree with the person on what meaning a given mental image will carry (squeezing the right or left hand, or attending to an image presented from outside: a letter, symbol, or picture). That is, we first agree on what external action will follow. For example, if the person selects a picture of a museum, various museums of the world open on the screen. Then, by attending to a particular museum, the person opens the next picture.

The key is that we must correctly recognize the person's volitional intentions, as agreed in advance. This is a decoding problem, and like the decoding of any code, it is not completely reliable: there is always some error, and scientists work to reduce it.

In our laboratory, typing letters by will alone gives an error of three to five percent. That is, a person sits down at the apparatus for the first time, the electrodes are put on, a computer is placed in front of them, and they type with an error of no more than 5%. With practice, some people type without a single error for quite a long time. This can be achieved. The problem is not the error but the speed: how quickly can a person's intentions be decoded? This delay determines how fast they can interact with the outside world.

For typing, it is only 15 letters per minute, the highest speed we reach in our laboratory. That is far less than someone who cannot touch-type, pecking at a keyboard with two fingers: that would be about 90 letters per minute. So the speed here is very low.”
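One standard way to put speed and accuracy on a single scale is the Wolpaw information transfer rate, which the interview does not mention. For N equally likely choices selected with accuracy P, each selection carries B = log2(N) + P log2(P) + (1 - P) log2((1 - P) / (N - 1)) bits. The sketch below applies it to the figures above, assuming a 36-symbol matrix (the interview does not give the symbol count), 95% accuracy at 15 selections per minute, and error-free two-finger typing at 90 characters per minute.

```python
import math


def bits_per_selection(n_choices: int, accuracy: float) -> float:
    """Wolpaw information transfer rate per selection, in bits."""
    if accuracy >= 1.0:
        return math.log2(n_choices)  # error-free case: the P and (1 - P) terms vanish
    p = accuracy
    return (math.log2(n_choices)
            + p * math.log2(p)
            + (1 - p) * math.log2((1 - p) / (n_choices - 1)))


def bits_per_minute(n_choices: int, accuracy: float, selections_per_min: float) -> float:
    return bits_per_selection(n_choices, accuracy) * selections_per_min


if __name__ == "__main__":
    N = 36  # assumed symbol count, e.g. a 6 x 6 matrix
    bci = bits_per_minute(N, 0.95, 15)    # accuracy and speed figures from the interview
    typing = bits_per_minute(N, 1.0, 90)  # two-finger typing, assumed error-free
    print(f"BCI speller: {bci:.1f} bits/min")           # about 69 bits/min
    print(f"Two-finger typing: {typing:.1f} bits/min")  # about 465 bits/min
```

Under these assumptions the gap is roughly sixfold, and it comes almost entirely from the selection rate rather than the error rate, which matches Kaplan's point that speed, not error, is the problem.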

Alexander and his assistant actually ran such an experiment with my colleague Olya. They put a special cap on her head, a rather scary-looking helmet, and connected 8 electrodes. Flickering triangles were displayed in a circle on the screen. After Olya completed a short training session and the system was calibrated to her brain signals, she chose a color and the number that stood for it, concentrated on it, and it appeared on the screen.

Who does what

In all brain-computer interface technologies, psychophysiology is the key science. It provides the methods for analyzing nervous system activity, while neurophysiology deals with the mechanisms of the nervous system: how the brain works, how nerve cells are organized, and how they generate electrical signals.

Catching human responses to external stimuli or to the presentation of internal images is the domain of psychology. The brain itself is the subject of neurophysiology, while the content of the brain (images, our ideas about the outside world, our volitional efforts) is already psychology. But the two have to be combined, because these mental phenomena must be caught in the responses of the neural substrate, that is, in the brain's electrical oscillations. So psychophysiology is born at the junction of physiology and psychology.

Physiologists and psychologists therefore work in this area, joined by specialists from roughly three other disciplines.

1. Bioengineering. These are people who can design devices, for example for recording biopotentials. One could say this is just an ordinary electronics engineer, but recording biological potentials has its own specifics, especially brain potentials, because they are very small. Once the signal is captured, it has to be decoded, and that is a big task.

2. Mathematics. Decoding the signal is the domain of mathematicians. However, a mathematician cannot decode it alone and needs hints, which neuroscientists provide.

3. Programming. Once the engineering part is in place (a good signal and an understanding of how to decode it), it has to be programmed: you need reliable software that carries out all these computations on whatever hardware is available. So we need programmers, and not ordinary ones. They must understand things like execution speed. “We need not just programming skills but the right configurations (the right libraries) that will do the job quickly. Then it all has to run on a microcontroller rather than an ordinary large computer. This has to be an interdisciplinary team, and we work in such a team.”

Future

Looking ahead, neural interface technologies have two branches that may intersect. The first is medical. These technologies are needed by people who are physically unable to act and cannot communicate normally, that is, who have dropped out of society entirely. This area needs specialists who will focus on installing and maintaining these devices and adapting them to each specific category of patients.

The second direction is neurocybernetics.

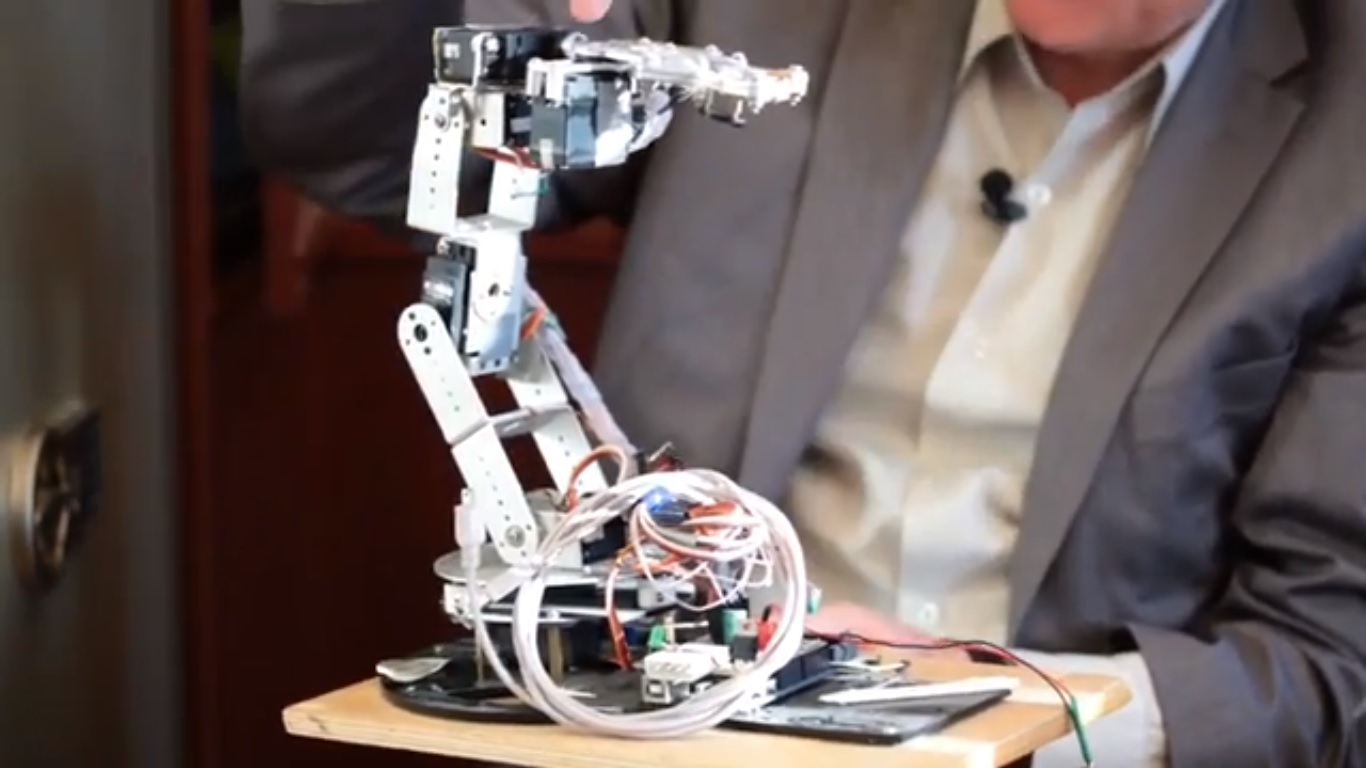

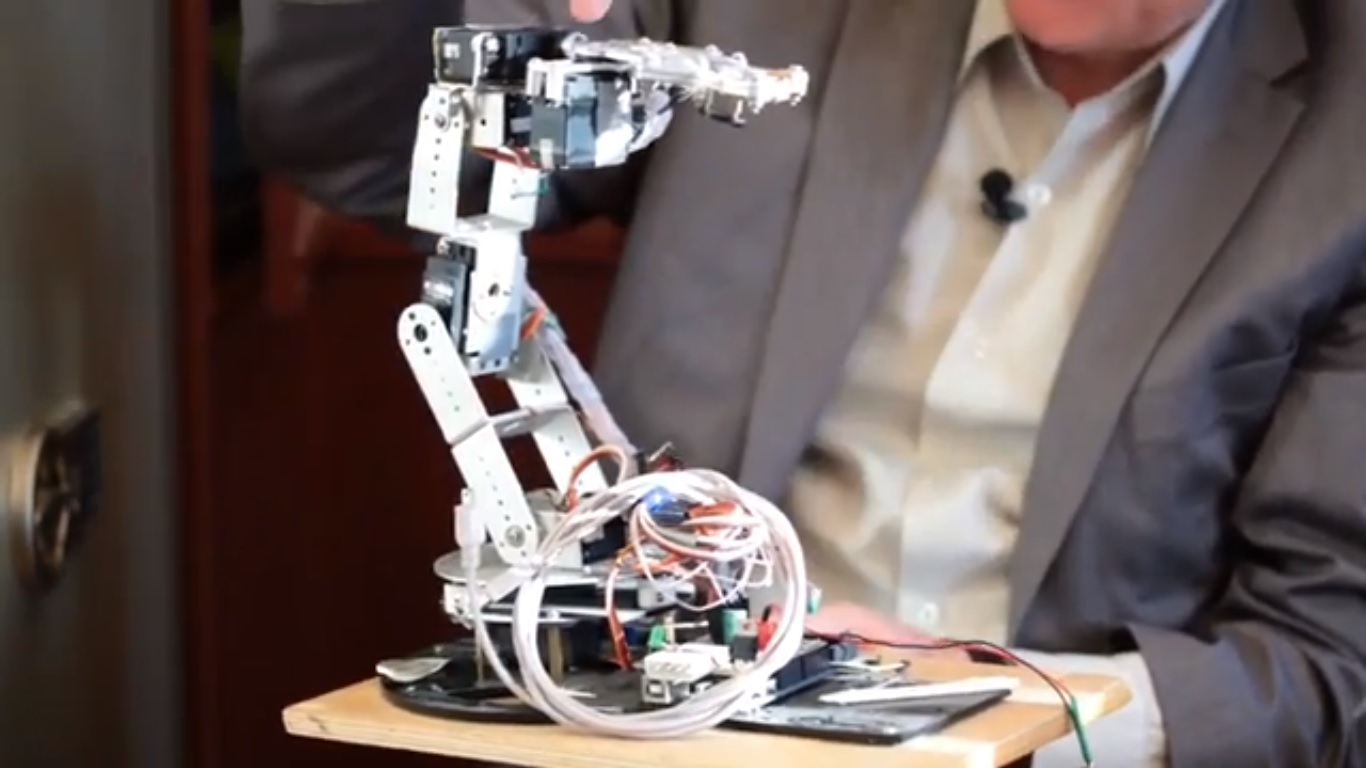

AK: “What I mean is the neurocybernetics of real devices, where the brain is coupled closely enough with computer technology. This is true neurocybernetics, because feedback has to appear. For example, suppose we want to control a manipulator. We have a mock-up of a six-motor manipulator. Using the power of thought, our volitional effort, we must somehow direct these six motors so that it extends its boom, grabs something with its claws, and moves it to another place.

The manipulator must have some intrinsic intelligence of its own, in the form of a sequence for switching on the motors. I should only have to give the mental command ‘forward’, and the motors engage one after another. We have to interact with this manipulator: it must understand what I want from it and organize the action among its own motors. This is real interaction between the brain and an external device. Such devices must be cybernetic; simply turning on a TV involves no cybernetics. And this is our future: truly controllable devices that work in collaboration with our brain. This is not at all the idea from science fiction films like RoboCop, where a person is simply merged into a technical device and cannot live apart from it. No, these are devices like a mobile phone: I can take it out of my pocket and throw it away, and I can live without it. It does impose some restrictions, but they may even be an advantage. It is the same here.”
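The manipulator example splits the work: the brain issues one coarse command such as "forward", and the device's own logic expands it into a sequence of motor actions. A minimal sketch of that division of labor, assuming six motors with hypothetical names and made-up step sequences; a real controller would also read sensor feedback between steps, which this toy version omits.

```python
from typing import Dict, List, Tuple

# Six hypothetical motors of the manipulator mock-up.
MOTORS = ("base", "shoulder", "elbow", "boom", "wrist", "claw")

# The device's own "intrinsic intelligence": each decoded mental command expands
# into an ordered list of (motor, action) steps. The sequences are illustrative.
PLANS: Dict[str, List[Tuple[str, str]]] = {
    "forward": [("shoulder", "raise"), ("boom", "extend"), ("elbow", "lower")],
    "grab": [("wrist", "align"), ("claw", "close")],
    "move": [("shoulder", "raise"), ("base", "rotate"), ("elbow", "lower"), ("claw", "open")],
}


def execute(command: str) -> List[str]:
    """Expand one decoded command into motor steps and return a log of them.
    Unknown commands produce no motion."""
    log = []
    for motor, action in PLANS.get(command, []):
        if motor not in MOTORS:
            raise ValueError(f"unknown motor: {motor}")
        # A real controller would drive the motor here and wait for feedback.
        log.append(f"{motor}:{action}")
    return log


if __name__ == "__main__":
    for cmd in ("forward", "grab", "move"):
        print(cmd, "->", execute(cmd))
```

The point of the design is that the slow brain channel only has to carry a few high-level commands per minute, while the fast, detailed coordination of six motors stays inside the device.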

The ethics problem

The last area concerning neural interfaces is a philosophical and humanistic problem: the problem of the life of the brain. Increasingly, we face situations where the brain dies not of its own causes but because the bodily organs that sustain it fail.

AK: “Of course, the problem of prolonging a person's life arises. The main content of our personality resides in the brain, and the body maintains it. The body contributes, but memory, our memories, are the brain. So the problem of preserving personality becomes the problem of preserving the brain's ability to communicate with external devices.

After all, if we control a manipulator while in a healthy body, it is the brain that controls it. If the body is not healthy (for example, in complete paralysis), the person can still control the manipulator. This already works in the USA: several laboratories build manipulators controlled by completely immobilized people who cannot even speak. The manipulator can pick up a cookie and offer it to you. And this is the person's will: they cannot say anything or move anything, yet they treated you. They can type letters the same way. So what do we do in this situation? Here the body still serves the person, because its organs are healthy; only the motor system is not. But what if a person has a heart or liver that cannot be replaced? Could one then turn to a full-body prosthesis, one that keeps the brain alive but is no longer biological? Only the brain would be biological. How would it interact with the outside world? Through a neural interface. But this is a problem that requires ethical, philosophical, and humanistic reflection.”

All of the above, along with the experiment, can be watched in video format here.

Source: https://habr.com/ru/post/203858/
