Introduction to data deduplication

Introduction

In the field of business continuity, the rapid growth of data in modern IT infrastructures creates many different problems. In my opinion, two main ones stand out:

- How to plan storage capacity for large amounts of data

- How to back up this data

Indeed, data growth of several terabytes per year is a very real scenario for some large organizations today. But what about storing and backing up that data efficiently? After all, a day has at most 24 hours, and the backup window cannot grow indefinitely (unlike the data itself). Today I want to explain how deduplication can help ease this problem.

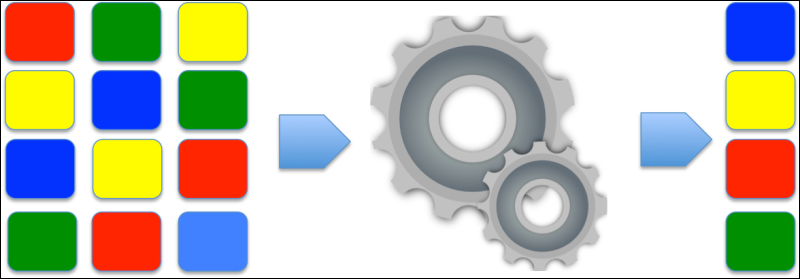

Deduplication

In a broad sense, there are two main types of deduplication:


- File-level deduplication: here, as the name suggests, the unit of deduplication is an individual file, and duplicate files are eliminated from the storage system. Discussions of file-level deduplication often also mention Single-Instance Storage (SIS) technology.

- Block-level deduplication: here the unit of deduplication is a data block of arbitrary length that often repeats across different logical objects in the storage system.

Usually, the finer-grained the deduplication scheme, the greater the space savings in the storage system.

What is SIS? The essence of the method is simple: if there are two absolutely identical files, one of them is replaced by a link to the other. This mechanism is used successfully in mail servers (for example, Exchange) and in databases. For example, if a corporate mail user sends an email with an attachment to several recipients, the attachment is stored in the Exchange database only once.

Sounds great! But only as long as the files are absolutely identical. If even a single byte of one of the files changes, a separate modified copy of it is created, and deduplication efficiency drops.
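To make the SIS idea concrete, here is a minimal Python sketch. It assumes that a SHA-256 digest of the whole file serves as the identity test. The class `SingleInstanceStore` and the file names are hypothetical, and this is not how Exchange or any other product actually implements SIS. The sketch also reproduces the weakness just described: after a one-byte change, the whole file becomes a new instance.

```python
import hashlib


class SingleInstanceStore:
    """Toy file-level (SIS) store: identical files are kept once, names are links.

    Hypothetical illustration only; real products differ in detail.
    """

    def __init__(self) -> None:
        self.blobs = {}  # SHA-256 of the whole file -> the single stored copy
        self.links = {}  # file name -> digest (the "link" to that copy)

    def put(self, name: str, data: bytes) -> None:
        digest = hashlib.sha256(data).hexdigest()
        self.blobs.setdefault(digest, data)  # store contents only if not seen before
        self.links[name] = digest

    def get(self, name: str) -> bytes:
        return self.blobs[self.links[name]]

    def stored_bytes(self) -> int:
        return sum(len(blob) for blob in self.blobs.values())


store = SingleInstanceStore()
attachment = b"quarterly report " * 1000  # 17,000 bytes
for user in ("alice", "bob", "carol"):     # one email, three recipients
    store.put(f"{user}/report.doc", attachment)
print(store.stored_bytes())  # 17000: a single copy serves all three

edited = b"Q" + attachment[1:]             # change just the first byte
store.put("bob/report.doc", edited)
print(store.stored_bytes())  # 34000: the whole file is stored again
```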

Block deduplication works at the level of data blocks written to disk and uses hash functions to decide whether blocks are identical or unique. The deduplication system maintains a hash table of all data blocks it stores. As soon as it finds matching hashes for different blocks, it stores those blocks as a single instance plus a set of links to it. Blocks from different computers can also be compared (global deduplication). This further increases efficiency, because the disks of different computers running the same operating system may hold a lot of repeated data. Note that efficiency is highest when the block size is small and the share of repeated blocks is as large as possible. Accordingly, there are two methods of block deduplication: with a fixed (predetermined) block length, and with a variable block length that is matched dynamically to the specific data.
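The Python sketch below illustrates the two block-level methods. It rests on several assumptions:

- SHA-256 is the block hash.
- Fixed-length blocks are 4 KiB.
- The variable-length case uses a heavily simplified content-defined chunker built on a gear-style rolling hash, in the spirit of schemes such as FastCDC but not any vendor's algorithm.

All function names and parameters are hypothetical. The sketch deduplicates a file together with a copy of it that has one byte inserted at the front. With fixed-length blocks, every block shifts and nothing matches. With content-defined boundaries, the chunking realigns after the first chunk.

```python
import hashlib
import random

BLOCK = 4096
_rng = random.Random(0)
GEAR = [_rng.getrandbits(32) for _ in range(256)]  # fixed pseudo-random value per byte


def fixed_chunks(data: bytes, size: int = BLOCK) -> list:
    """Constant-length blocks: boundaries depend only on the offset."""
    return [data[i:i + size] for i in range(0, len(data), size)]


def cdc_chunks(data: bytes, mask: int = 0x0FFF, min_size: int = 1024,
               max_size: int = 16384) -> list:
    """Variable-length blocks: cut where (hash & mask) == 0.

    With the default mask the test looks at the low 12 bits of the rolling
    hash, which depend only on the last 12 bytes, so boundaries follow the
    content and resynchronize after an insertion or deletion.
    """
    chunks, start, h = [], 0, 0
    for i, byte in enumerate(data):
        h = ((h << 1) + GEAR[byte]) & 0xFFFFFFFF
        length = i - start + 1
        if (length >= min_size and (h & mask) == 0) or length >= max_size:
            chunks.append(data[start:i + 1])
            start, h = i + 1, 0
    if start < len(data):
        chunks.append(data[start:])
    return chunks


def dedup(streams, chunker):
    """Hash table of unique blocks plus, per stream, an ordered list of links."""
    table, recipes = {}, []
    for data in streams:
        recipe = []
        for chunk in chunker(data):
            digest = hashlib.sha256(chunk).digest()
            table.setdefault(digest, chunk)  # keep a single instance
            recipe.append(digest)
        recipes.append(recipe)
    return table, recipes


size = 128 * 1024
original = random.Random(42).getrandbits(8 * size).to_bytes(size, "little")
modified = b"!" + original  # one byte inserted at the front

stored = {}
for name, chunker in (("fixed", fixed_chunks), ("variable", cdc_chunks)):
    table, recipes = dedup([original, modified], chunker)
    assert b"".join(table[d] for d in recipes[1]) == modified  # lossless restore
    stored[name] = sum(len(c) for c in table.values())
    print(f"{name:>8}: {stored[name]} of {2 * size + 1} bytes kept")
```

The fixed-length run should keep practically every byte. The variable-length run should keep roughly one copy plus a chunk or two around the insertion. Passing two streams from different machines to `dedup` in the same way gives a toy version of global deduplication.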

Deduplication scopes

Most vendors of products with deduplication support target the backup market. There, backup copies can, over time, take up two to three times more space than the original data itself. For this reason, backup products have long used file-level deduplication, which, however, may not be sufficient under certain conditions. Adding block-level deduplication can significantly improve storage utilization and make it easier to meet resiliency requirements.

Another option is to use deduplication on production servers. This can be done by the OS itself, by additional software, or by the storage hardware. It calls for care. For example, Windows 2008, which is marketed as supporting data deduplication, performs only SIS. Storage systems, on the other hand, can deduplicate at the block level while presenting the file system to the user in its expanded (original) form, hiding all the details of deduplication. Suppose a storage system holds 4 GB of data that deduplicates to 2 GB. A user accessing this storage will still see 4 GB of data, and that is exactly the amount that will end up in backup copies.

Reduction percentages and high expectations

The percentage of disk space saved is the most important figure, and also the one most easily manipulated in claims such as "95% reduction in backup file sizes". However, the method used to calculate this ratio may not be relevant to your particular situation. The first variable to consider is file type. Formats such as ZIP, CAB, JPG, MP3 and AVI already contain compressed data, which deduplicates less well than uncompressed data. Equally important are how often the data changes and how much historical data is retained. If you are using a product that deduplicates existing data on a file server, you need not worry about this. But if deduplication is part of a backup system, you need to answer the following questions:

- How often does the data change?

- Are the changes substantial, or do they affect only a few blocks in a file?

- How often are backups performed, and how many backup copies are retained?

Online calculators make it easy to estimate deduplication savings, but they cannot tell you what the result will be in your particular situation. As you can see, the percentage depends on many factors: in theory it can reach 95%, but in practice it may amount to only a few percent.
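A back-of-the-envelope model shows how the same technology can report 95% in one environment and a few percent in another. This Python sketch uses a deliberately simplified scenario that is not any vendor's formula. It models a chain of full backups of the same dataset: the first backup is stored entirely, and each later one adds only its changed data. It also converts a reduction ratio into the percentage that is usually advertised. The function names and input figures are hypothetical.

```python
def ratio_to_percent(ratio: float) -> float:
    """A reduction ratio of N:1 means (1 - 1/N) of the space is saved."""
    return 100 * (1 - 1 / ratio)


def savings_percent(full_gb: float, daily_change: float, backups_retained: int) -> float:
    """Estimated savings for a chain of full backups (simplified model).

    Assumes the first full backup is stored in full and every later one adds
    only daily_change * full_gb of new unique blocks; compression and
    duplicates inside a single backup are ignored.
    """
    logical = full_gb * backups_retained  # space the backups would need without dedup
    physical = full_gb * (1 + daily_change * (backups_retained - 1))
    return 100 * (1 - physical / logical)


print(round(ratio_to_percent(20), 1))             # 95.0: "95% reduction" is a 20:1 ratio
print(round(savings_percent(1000, 0.01, 30), 1))  # 95.7: 30 fulls, 1% daily change
print(round(savings_percent(1000, 0.90, 2), 1))   # 5.0: 2 fulls of fast-changing data
print(round(savings_percent(1000, 0.01, 1), 1))   # 0.0: a single backup has nothing to match
```

The two inputs that matter most, change rate and retention, are exactly the questions listed above.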

Time is everything

When it comes to backup deduplication, it is important to know how quickly it runs. Depending on where and when deduplication takes place, there are three main types:

- source (on the data source side);

- target (or post-process deduplication);

- continuous (or transit deduplication).

Source deduplication

This type runs on the device where the source data resides. All data marked for backup is divided into blocks, and a hash is calculated for each block. There are three points to consider here.

- The first problem is that deduplication consumes the source machine's resources, so you need to make sure it has enough CPU and RAM. There is no good reason to run deduplication on an already heavily loaded mail server. Of course, some vendors stress how lightweight their solutions are, but that does not change the fact that the performance of the source environment will be affected, which may be unacceptable.

- The second problem is where to store the hash tables. They can be kept on the source server itself or on a central server on the network (which is necessary if global deduplication is used), but the latter adds load to the network.

- Despite these drawbacks, source deduplication has its place. One example is a company with a small IT infrastructure of only a few servers, where global deduplication would make little sense.
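Here is a rough Python sketch of source deduplication. It assumes fixed 4 KiB blocks, SHA-256 hashes, and the variant where the hash table lives on a central repository. The class and function names are hypothetical.

The sketch shows the trade-offs from the list above:

- Hashing runs on the source, which is the CPU and RAM cost from the first point.
- The digest lookup travels over the network, which is the extra load from the second point.
- In exchange, only blocks the repository has never seen are sent.

```python
import hashlib
import random


class Repository:
    """Central repository holding the hash table (needed for global deduplication)."""

    def __init__(self) -> None:
        self.blocks = {}   # digest -> block
        self.backups = []  # each backup is an ordered list of digests

    def missing(self, digests):
        """Answer the source's query: which of these digests are unknown here?"""
        return {d for d in digests if d not in self.blocks}

    def commit(self, digests, new_blocks) -> None:
        self.blocks.update(new_blocks)
        self.backups.append(digests)


def source_backup(data: bytes, repo: Repository, block_size: int = 4096) -> int:
    """Split and hash on the source machine; return the number of payload bytes sent."""
    blocks = [data[i:i + block_size] for i in range(0, len(data), block_size)]
    digests = [hashlib.sha256(b).digest() for b in blocks]  # costs source CPU and RAM
    unknown = repo.missing(digests)                          # network round trip
    new_blocks = {d: b for d, b in zip(digests, blocks) if d in unknown}
    repo.commit(digests, new_blocks)
    return sum(len(b) for b in new_blocks.values())


repo = Repository()
day1 = random.Random(1).getrandbits(8 * 262144).to_bytes(262144, "little")  # 64 blocks
day2 = day1[:8192] + b"x" * 4096 + day1[12288:]                           # one block changed
print(source_backup(day1, repo))  # 262144: the first backup sends everything
print(source_backup(day2, repo))  # 4096: only the changed block is sent
```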

Target (or post-process) deduplication

Suppose data from all computers is sent to a single backup repository. Once the data arrives, the repository can build a hash table of its blocks. The first advantage of this approach is the larger volume of data: the larger the data pool, the larger the hash table and, accordingly, the greater the chance of finding identical blocks. The second advantage is that the entire process takes place outside the production network.
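A minimal Python sketch of post-process target deduplication follows. It assumes 4 KiB blocks and SHA-256, and all names are hypothetical. Three servers that share most of their data (for example, identical OS files; the data set here is made up) send full backups. The repository first lands them as-is and later deduplicates across the whole pool. The printed numbers also show how much landing space is needed before deduplication runs.

```python
import hashlib
import random


class PostProcessRepository:
    """Write incoming backups as-is, deduplicate the whole pool afterwards."""

    def __init__(self, block_size: int = 4096) -> None:
        self.block_size = block_size
        self.landing = []  # raw backups written to the repository disk first
        self.blocks = {}   # digest -> single stored instance
        self.backups = []  # per backup: ordered list of digests

    def receive(self, data: bytes) -> None:
        self.landing.append(data)  # ingest does no hashing at all

    def deduplicate(self) -> None:
        """Later pass: read landed data back, hash it, keep unique blocks only."""
        for data in self.landing:
            recipe = []
            for i in range(0, len(data), self.block_size):
                block = data[i:i + self.block_size]
                digest = hashlib.sha256(block).digest()
                self.blocks.setdefault(digest, block)
                recipe.append(digest)
            self.backups.append(recipe)
        self.landing.clear()


def random_bytes(seed: int, n: int) -> bytes:
    return random.Random(seed).getrandbits(8 * n).to_bytes(n, "little")


shared = random_bytes(0, 128 * 1024)  # data common to all servers (hypothetical)
servers = [shared + random_bytes(seed, 4096) for seed in (1, 2, 3)]

repo = PostProcessRepository()
for backup in servers:
    repo.receive(backup)
landing_peak = sum(len(d) for d in repo.landing)
repo.deduplicate()
print(landing_peak)                               # 405504 bytes land first
print(sum(len(b) for b in repo.blocks.values()))  # 143360 bytes remain
```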

However, this approach does not solve every problem. Several points need to be taken into account.

- The first is the dependence on free space. Backup data lands on the repository before it is deduplicated, so in an extensive infrastructure the amount of space required can be very large.

- The second drawback of target deduplication is the demand it places on the repository's disk subsystem. Typically, data must be written to the repository disk before it is split into blocks, and only then does hashing and deduplication begin. This makes the disk subsystem the bottleneck of the architecture.

- The third drawback is that every hash function has some probability of a hash collision, that is, a situation where the same hash is computed for two different blocks. A collision corrupts the original data, because one of the blocks is replaced with a link to a block with different contents. To prevent this, you need a hash algorithm with a minimal collision probability, which in turn requires more computing power. This is usually not a problem, since target deduplication runs on hardware that can handle the load. It should be said that the collision probability of modern hash functions is quite small.

- A fourth potential drawback is that the full volume of production data must be transferred over the network without placing significant load on the network or on the production systems themselves. This can be solved by using nighttime or other off-peak hours, or by isolating this traffic on a separate network (a common practice in medium and large companies).
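The collision risk from the third point can be estimated with the birthday bound. For n distinct blocks and a b-bit hash, p ≈ 1 - exp(-n(n-1) / 2^(b+1)). The Python sketch below is an illustration, not any product's check.

- It first compares a 256-bit hash (such as SHA-256) with a 32-bit hash for a hypothetical 1 PiB of 4 KiB blocks.
- It then uses a deliberately truncated 16-bit hash, a hypothetical helper `weak_hash`, to show how a collision silently corrupts data.

```python
import hashlib
import math
import random


def collision_probability(n_blocks: int, hash_bits: int) -> float:
    """Birthday-bound approximation: chance that two of n distinct blocks share a hash."""
    return -math.expm1(-n_blocks * (n_blocks - 1) / 2 ** (hash_bits + 1))


n = 2 ** 50 // 2 ** 12  # 1 PiB split into 4 KiB blocks = 2**38 blocks
print(collision_probability(n, 256))  # about 3e-55 for a 256-bit hash such as SHA-256
print(collision_probability(n, 32))   # 1.0: a 32-bit hash is certain to collide


def weak_hash(block: bytes) -> bytes:
    """Deliberately weak 16-bit hash: the first 2 bytes of SHA-256."""
    return hashlib.sha256(block).digest()[:2]


rng = random.Random(7)
blocks = [rng.getrandbits(512).to_bytes(64, "little") for _ in range(3000)]
table = {}
for block in blocks:
    table.setdefault(weak_hash(block), block)  # a colliding block becomes a link to the wrong data
corrupted = sum(table[weak_hash(b)] != b for b in blocks)
print(corrupted)  # nonzero: these blocks would be restored with another block's contents
```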

Transit deduplication

Transit deduplication is described as a process that takes place while data is being transferred from source to target. The term is a bit confusing: data is not really deduplicated "on the wire". In fact, it means that data collected in the target device's memory is deduplicated there before it is written to disk. This takes disk seek time out of the equation. Transit deduplication can be considered the best form of target deduplication. It has all the advantages of a global view of the data, along with offloading the hashing process from the source, but none of the drawbacks of slow disk I/O.

However, it still generates a lot of network traffic and remains exposed to hash collisions. Of all the methods listed, it requires the most computing resources (CPU and memory).
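For contrast with post-process deduplication, here is a hedged Python sketch of transit (inline) deduplication. Incoming blocks are hashed while still in memory. Only blocks missing from the in-memory hash table are ever written to the repository disk, which is represented here by a `bytearray`. The class name, the 4 KiB block size, and the three-server data set are assumptions for illustration. The hash table must stay in RAM for lookups, which reflects the higher memory and CPU demands mentioned above.

```python
import hashlib
import random


class InlineRepository:
    """Deduplicate in RAM before anything is written to disk."""

    def __init__(self, block_size: int = 4096) -> None:
        self.block_size = block_size
        self.index = {}          # in-memory hash table: digest -> (offset, length) on "disk"
        self.disk = bytearray()  # stands in for the repository disk

    def ingest(self, data: bytes) -> list:
        recipe = []
        for i in range(0, len(data), self.block_size):
            block = data[i:i + self.block_size]
            digest = hashlib.sha256(block).digest()  # computed while the block is in memory
            if digest not in self.index:
                self.index[digest] = (len(self.disk), len(block))
                self.disk += block  # only unique blocks ever reach the disk
            recipe.append(digest)
        return recipe

    def restore(self, recipe: list) -> bytes:
        return b"".join(bytes(self.disk[off:off + size])
                        for off, size in (self.index[d] for d in recipe))


def random_bytes(seed: int, n: int) -> bytes:
    return random.Random(seed).getrandbits(8 * n).to_bytes(n, "little")


shared = random_bytes(0, 128 * 1024)  # data common to all servers (hypothetical)
servers = [shared + random_bytes(seed, 4096) for seed in (1, 2, 3)]
repo = InlineRepository()
recipes = [repo.ingest(backup) for backup in servers]
print(len(repo.disk))  # 143360 bytes written; the 405504 incoming bytes are never staged
```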

Summarizing

Deduplication technologies can help reduce storage costs, but the type of deduplication should be chosen carefully. Ultimately, deduplication lets a company slow the rise in the cost of storing its ever-growing data.


Source: https://habr.com/ru/post/203614/
