Data Modeling in Cassandra 2.0 in CQL3

The article is intended for people trying to create their first "table" in the database Cassandra.

For posting several releases of Cassandra, the developers took the right vector to ease the use of this database. Considering its advantages, such as speed and fault tolerance, it was difficult both to administer and write for it. Now, the number of dances with a tambourine that must be carried out before starting and starting to develop has been minimized - several commands in bash or one .msi in Windows.

Moreover, the recently updated CQL (Query Language) has made life easier for developers, replacing the binary and rather complicated language Thrift.

Personally, I was faced with the problem of the absence of Russian-language Kassandra manuals. In my opinion, I would like to raise the most difficult topic in this article. How to design a database then?

')

Cassandra was created as a distributed database with an emphasis on maximum write and read speed. You need to simulate “tables” depending on the

In SQL, we used to distribute tables, links between them, and then

We have employees of some company. Create a table (which is actually called the Column Family, but for ease of transition from SQL to CQL use the word table) to CQL and fill in the data:

Tables in C * are required to have a PRIMARY KEY. It is used to find the node where the string is stored.

Read the data:

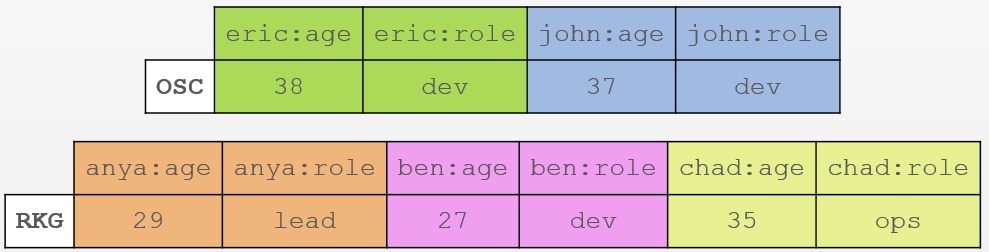

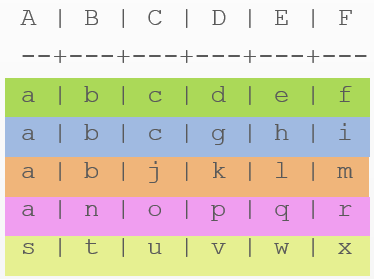

This picture is a hand-painted cqlsh output.

It looks like a regular table from a relational database. C * will create two lines.

Attention! These are two internal row structures , not tables. If you listen a little, you can say that each row is like a small table. Further clearer.

Complicate. Add a company name.

Read the data:

Focus on PRIMARY KEY. The first of the parameters - the

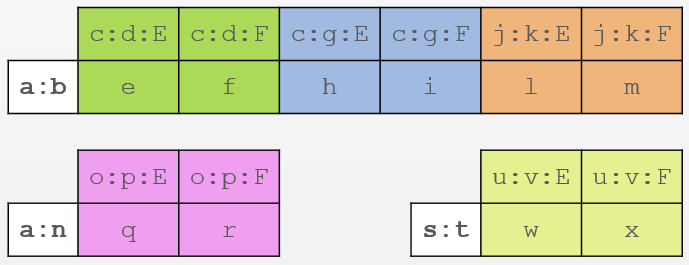

This is how the internal structure now looks.

As you can see with us:

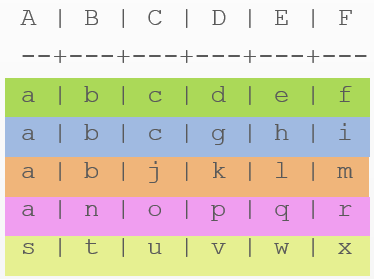

Even harder. Capital letter - the name of the column. Lower case - data.

Read the data:

Now our distribution key is composite -

The internal structure has become more complicated. Such data as

This is the fastest way to record and store infinite amounts of data in a distributed database. C * was just designed with an emphasis on write / read speed. Here, for example, comparison of speeds of MongoDB, HBase and C * .

We have some events that occur 1000 times a second. For example, indicators are taken from noise level sensors. 10 sensors. Each of them sends data 100 times per second. We have 3 tasks:

We need to install several nodes, make each standalone. It may even carry one of them to the cloud.

We will store the data of one day in one line.

Since the distribution key is a unique combination of day + sensor, data for one day will be stored for each sensor in a separate line. Thanks to the reverse sorting inside the string, we get the most important data for us (the last) "at the tip of the fingers."

Since the search for a distribution key (day) is a very fast operation in C *, the third item can be considered completed.

Of course, we can do a search for the day / days, and within the day we can already compare the timestamp. But days can be very much.

We have only 10 sensors. Could you take advantage of this? You can, if you imagine that one sensor - one line. In this case, C * will cache the location of all ten lines on the disk in memory.

Create a second table, where we will store the same data, but excluding days.

And when we insert the data, we limit the lifetime of each cell in order not to increase 2 billion columns. We each sensor gives no more than 100 readings per second. From here:

It is necessary to ensure that in 248 days the oldest data quietly and imperceptibly delete.

In the application code, you will need to set a condition that if the requested data goes beyond the last 248 days, then we use the

“What nonsense! Why the second table? ”- you think. I repeat: in the database C *, storing the same value several times is the norm, the correct model. The wins are as follows:

I recommend to view it in this order.

UPD: Terminology fix. Replaced the words "master key" with "distribution key" in the right places. Added here the concept of "cluster key".

For posting several releases of Cassandra, the developers took the right vector to ease the use of this database. Considering its advantages, such as speed and fault tolerance, it was difficult both to administer and write for it. Now, the number of dances with a tambourine that must be carried out before starting and starting to develop has been minimized - several commands in bash or one .msi in Windows.

Moreover, the recently updated CQL (Query Language) has made life easier for developers, replacing the binary and rather complicated language Thrift.

Personally, I was faced with the problem of the absence of Russian-language Kassandra manuals. In my opinion, I would like to raise the most difficult topic in this article. How to design a database then?

Disclaimer

- The article is NOT intended for people who see the word Cassandra for the first time.

- The article does NOT serve as a promotional material of a technology.

- The article does NOT seek to prove anything to anyone.

- If the speed of writing / reading is not so important, and if “100% uptime” is not much needed, and if you have only a few million entries, then probably this article, and the whole Cassandra as a whole, is not what you want. need to.

')

Likbez

- Cassandra (hereinafter C * ) is a distributed NoSQL database, so all the “why so and not like this” decisions are always made with an eye to clustering.

- CQL is a SQL-like language. Abbreviation for C assandra Q uery L anguage.

- Node (node) - instans C *, or java process in terms of operating systems. On one machine, you can run multiple nodes, for example.

- The primary storage unit is a string . The entire string is stored on the nodes, i.e. There are no situations when half-lines are on one node, half-lines are on another. A row can dynamically expand to 2 billion columns. It is important.

- cqlsh - command line for CQL. All the examples below are performed exactly in it. It is part of the C * distribution.

The basic rule of data modeling in C *

Cassandra was created as a distributed database with an emphasis on maximum write and read speed. You need to simulate “tables” depending on the

SELECT queries of your application .In SQL, we used to distribute tables, links between them, and then

SELECT ... JOIN ... what we want and how we want. JOINs are the main problem with performance in RDBMS. They are not in CQL.First example.

We have employees of some company. Create a table (which is actually called the Column Family, but for ease of transition from SQL to CQL use the word table) to CQL and fill in the data:

CREATE TABLE employees ( name text, -- age int, -- - role text, -- - PRIMARY KEY (name)); -- INSERT INTO employees (name, age, role) VALUES ('john', 37, 'dev'); INSERT INTO employees (name, age, role) VALUES ('eric', 38, 'ceo'); Tables in C * are required to have a PRIMARY KEY. It is used to find the node where the string is stored.

Read the data:

SELECT * FROM employees; This picture is a hand-painted cqlsh output.

It looks like a regular table from a relational database. C * will create two lines.

Attention! These are two internal row structures , not tables. If you listen a little, you can say that each row is like a small table. Further clearer.

The second example.

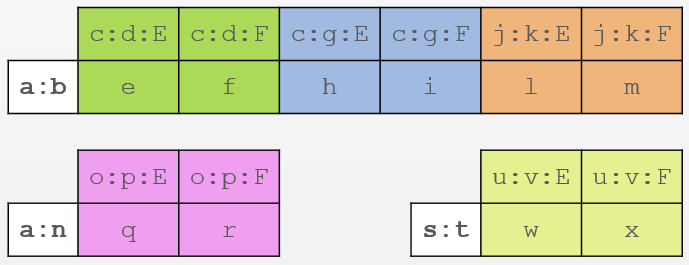

Complicate. Add a company name.

CREATE TABLE employees ( company text, name text, age int, role text, PRIMARY KEY (company,name) -- : company name ); INSERT INTO employees (company, name, age, role) VALUES ('OSC', 'eric', 38, 'ceo'); INSERT INTO employees (company, name, age, role) VALUES ('OSC', 'john', 37, 'dev'); INSERT INTO employees (company, name, age, role) VALUES ('RKG', 'anya', 29, 'lead'); INSERT INTO employees (company, name, age, role) VALUES ('RKG', 'ben', 27, 'dev'); INSERT INTO employees (company, name, age, role) VALUES ('RKG', 'chan', 35, 'ops'); Read the data:

SELECT * FROM employees;

Focus on PRIMARY KEY. The first of the parameters - the

company - is the distribution key, it will be used to search for the node from now on. The second key name is the cluster key . He turns into a column. Those. we turn the data into a column name. Was 'eric' the usual four bytes, and became part of the column name.This is how the internal structure now looks.

As you can see with us:

- Two companies -

OSCandRKG. There are only two lines. - Green

ericstores its age and role in two cells. Similarly, all the rest. - It turns out with such a structure we can store 1 billion employees in each company (line). Remember, however, that the limit on the number of columns is 2 billion?

- It may seem that we once again store the same data. This is true, but in C * this design is the correct modeling pattern.

- Extending lines is the main feature when modeling in C *.

The third example.

Even harder. Capital letter - the name of the column. Lower case - data.

CREATE TABLE example ( A text, B text, C text, D text, E text, F text, PRIMARY KEY ((A,B), C, D)); -- (A,B) (C,D) INSERT INTO example (A, B, C, D, E, F) VALUES ('a', 'b', 'c', 'd', 'e', 'f'); INSERT INTO example (A, B, C, D, E, F) VALUES ('a', 'b', 'c', 'g', 'h', 'i'); INSERT INTO example (A, B, C, D, E, F) VALUES ('a', 'b', 'j', 'k', 'l', 'm'); INSERT INTO example (A, B, C, D, E, F) VALUES ('a', 'n', 'o', 'p', 'q', 'r'); INSERT INTO example (A, B, C, D, E, F) VALUES ('s', 't', 'u', 'v', 'w', 'x'); Read the data:

SELECT * FROM example;

Now our distribution key is composite -

(A,B) . The cluster key is also composite - C, D.The internal structure has become more complicated. Such data as

c, d, g, k, o, p, u, v participate in the column names along with E and F:

- As you can see, now each unique combination of A and B is the key to the string.

- We have only three unique distribution keys -

a:b,a:nands:t. - Columns multiplied due to cluster keys. In the

a:b, we have three unique combinations —c:d,c:g,j:k— that store the actual data in columns E and F —eandf,handi,landm. - Similarly, the other two lines.

Why so hard?

This is the fastest way to record and store infinite amounts of data in a distributed database. C * was just designed with an emphasis on write / read speed. Here, for example, comparison of speeds of MongoDB, HBase and C * .

Real life example

We have some events that occur 1000 times a second. For example, indicators are taken from noise level sensors. 10 sensors. Each of them sends data 100 times per second. We have 3 tasks:

- Continue recording if the database server (node) stops working.

- Manage to record 1000 new records per second no matter what.

- Provide a schedule of any sensor for any day for a couple of milliseconds.

- Provide a schedule of any sensor for any period of time as quickly as possible.

The first and second points are easy.

We need to install several nodes, make each standalone. It may even carry one of them to the cloud.

The third point is the main trick.

We will store the data of one day in one line.

CREATE TABLE temperature_events_by_day ( day text, -- Text of the following format: 'YYYY-MM-DD' sensor_id uuid, event_time timestamp, temperature double, PRIMARY KEY ((day,sensor_id), event_time) -- . (day,sensor_id) (event_time) ) WITH CLUSTERING ORDER BY event_time DESC; -- Since the distribution key is a unique combination of day + sensor, data for one day will be stored for each sensor in a separate line. Thanks to the reverse sorting inside the string, we get the most important data for us (the last) "at the tip of the fingers."

Since the search for a distribution key (day) is a very fast operation in C *, the third item can be considered completed.

Fourth point

Of course, we can do a search for the day / days, and within the day we can already compare the timestamp. But days can be very much.

We have only 10 sensors. Could you take advantage of this? You can, if you imagine that one sensor - one line. In this case, C * will cache the location of all ten lines on the disk in memory.

Create a second table, where we will store the same data, but excluding days.

CREATE TABLE temperature_events ( sensor_id uuid, event_time timestamp, temperature double, PRIMARY KEY (sensor_id, event_time) -- (sensor_id) (event_time) ) WITH CLUSTERING ORDER BY event_time DESC; -- And when we insert the data, we limit the lifetime of each cell in order not to increase 2 billion columns. We each sensor gives no more than 100 readings per second. From here:

2**31 / (24 * 60 * 60 * 100 /) = 2147483648 / (24 * 60 * 60 * 100) = 248.55It is necessary to ensure that in 248 days the oldest data quietly and imperceptibly delete.

INSERT INTO temperature_events (sensor_id, event_time, temperature) VALUES ('12341234-1234-1234-123412', 2535726623061, 36.6) TTL 21427200; -- 248 days in seconds In the application code, you will need to set a condition that if the requested data goes beyond the last 248 days, then we use the

temperature_events_by_day table, if not - temperature_events . Searching for the latter will be a few milliseconds faster.“What nonsense! Why the second table? ”- you think. I repeat: in the database C *, storing the same value several times is the norm, the correct model. The wins are as follows:

- Writing data to the second table is faster than the first. Cassandra does not have to look for the node (s) in which to add a new value. She will know in advance.

- Reading data is also very fast. For example, it is several times larger than a normal indexed, normalized SQL database.

Sources

I recommend to view it in this order.

- Webinar - Understanding How to Get Data .

- Webinar - The Data Model is Dead, Long Live the Data Model

- Webinar - Become a Super Modeler

- Webinar - The World's Next Top Data Model

- Full CQL3 Documentation - Cassandra Query Language (CQL) v3

Next article of the cycle .

UPD: Terminology fix. Replaced the words "master key" with "distribution key" in the right places. Added here the concept of "cluster key".

Source: https://habr.com/ru/post/203200/

All Articles