Contactless control: a look inside

In the previous post we met the INCOS project by Belarusian developers. In this post we look inside the plastic box and get acquainted with the prototyping stages and the gesture recognition algorithms used.

So, I give the floor to the developers:

Several years ago, our INCOS team had the idea of trying a project in the field of contactless control.

At first we did not have a specific device in mind, but gradually we wanted to do something more, and this ultimately led to the creation of the INCOS system. Existing devices that let you control on-screen objects directly with gestures are often not universal: they cannot be connected directly to an arbitrary device, say via a USB port. This shortcoming was our creative impetus. Unlike other devices, our system can be connected, for example, to a TV that supports Smart TV.

In this case, INCOS is detected as a HID device. In fact, the system can be connected not only to a computer or a TV but to any device that has a USB port, Wi-Fi, Ethernet, or Bluetooth and can treat INCOS as a mouse and keyboard. Another advantage is that INCOS is not limited to a fixed set of programs. For example, you can use it to control any game, including those released before 2000 (I'm not a gamer, but I sometimes like to play StarCraft). A version of INCOS that connects to several devices simultaneously is currently in development.
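To make the "INCOS as a mouse" idea concrete, here is a minimal sketch of how a tracked hand position could be turned into a HID mouse report. It assumes the common 3-byte boot-protocol mouse layout (button bitmask, signed X delta, signed Y delta); the function names, the `gain` parameter, and the "pinch means left click" rule are illustrative assumptions, not part of the INCOS firmware.

```python
import struct


def clamp(value, low=-127, high=127):
    """Keep a movement delta inside the signed 8-bit range of the report."""
    return max(low, min(high, value))


def mouse_report(dx, dy, left=False, right=False, middle=False):
    """Pack a 3-byte boot-protocol mouse report: buttons, dx, dy."""
    buttons = (left << 0) | (right << 1) | (middle << 2)
    return struct.pack("<Bbb", buttons, clamp(dx), clamp(dy))


def hand_motion_to_report(prev, curr, gain=2.0, pinch=False):
    """Hypothetical mapping: hand displacement in image pixels becomes
    relative cursor movement, and a pinch gesture presses the left button."""
    dx = round((curr[0] - prev[0]) * gain)
    dy = round((curr[1] - prev[1]) * gain)
    return mouse_report(dx, dy, left=pinch)


report = hand_motion_to_report((100, 100), (110, 95))
print(report.hex())
```

Because the host only sees standard mouse reports, any program that accepts a mouse would work unchanged, which is the point the paragraph above makes about old games.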

For this purpose, we plan to use a software-controlled USB switch, so that each device is available at a different time.

INCOS also provides a “smart remote” function: switching channels without the TV remote. Gesture and voice commands can be assigned to the buttons of the remote, after INCOS has been trained on the remote's key presses using the TV remote and the built-in infrared receiver.

Contactless control systems such as Kinect are usually based on so-called ToF (time-of-flight) cameras, i.e. they use infrared radiation to build a depth map. However, after analyzing the available ToF cameras and their prices (the models most suitable for us cost hundreds to thousands of euros), we settled on a simpler, cheap-and-cheerful solution: a stereo pair.

Of course, it has its drawbacks. For example, a device based on a stereo pair works better in the light than in the dark (for devices that project an infrared grid it is usually the other way around). However, with the right approach many problems can be avoided; this one, in particular, was solved with additional infrared illumination for the cameras.

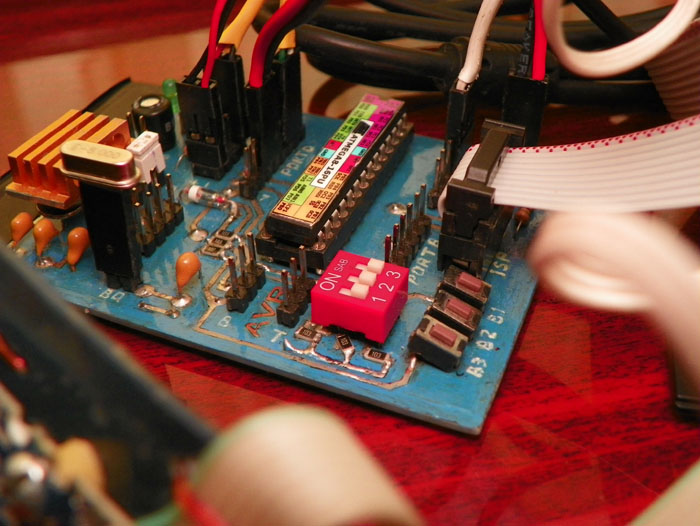

Initially, the Raspberry Pi board was considered as the base for the device. However, for performance reasons we chose a different platform (although connecting to a Raspberry Pi remains possible).

Currently, the core of the system is the Cubieboard mini-PC (based on the AllWinner A10 processor); however, with the release of new Cubieboard models, we are also considering a move to a more powerful processor.

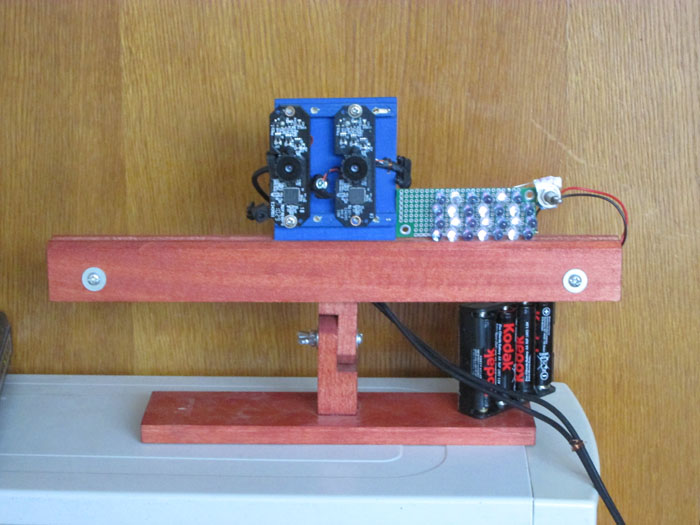

Our test bench with extra infrared light

The image resolution is currently 320x240 or 640x480 pixels (limited by the camera frame rate and the processing speed), and the frame rate is 60 fps or more; work is underway to increase the resolution. The maximum operating distance is 3.5-4 meters and the minimum is 20-30 cm, although this can be adjusted. The camera viewing angle (with special wide-angle lenses) is about 80°.
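The working range quoted above follows from basic stereo geometry: depth is inversely proportional to disparity, Z = f·B/d. The sketch below shows that relation. The 6 cm baseline is purely an assumed value (the article does not state the camera spacing), and the viewing angle is assumed to be a horizontal angle of roughly 80 degrees.

```python
import math


def focal_length_px(image_width_px, horizontal_fov_deg):
    """Focal length in pixels for a pinhole camera with the given field of view."""
    return (image_width_px / 2) / math.tan(math.radians(horizontal_fov_deg) / 2)


def disparity_px(depth_m, focal_px, baseline_m):
    """Disparity (in pixels) of a point at the given depth: d = f * B / Z."""
    return focal_px * baseline_m / depth_m


def depth_m(disparity, focal_px, baseline_m):
    """Depth (in meters) recovered from a disparity: Z = f * B / d."""
    if disparity <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity


ASSUMED_BASELINE_M = 0.06  # assumption: not given in the article
focal = focal_length_px(320, 80)  # 320 px wide frame, assumed 80 degree angle

for distance in (0.25, 1.0, 4.0):
    d = disparity_px(distance, focal, ASSUMED_BASELINE_M)
    print(f"{distance} m -> {d:.1f} px disparity")
```

Under these assumptions a hand at the far end of the range moves by only a few pixels between the two images, which is one reason a higher resolution helps at long distances.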

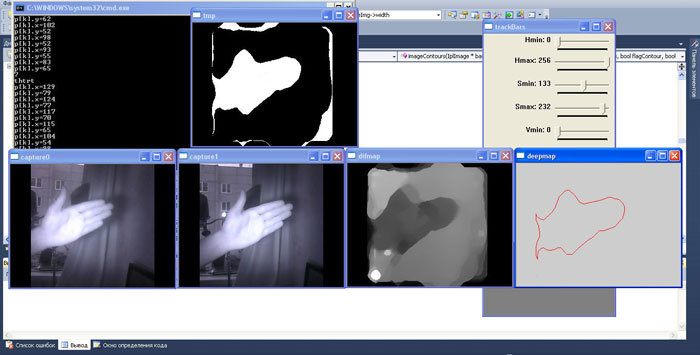

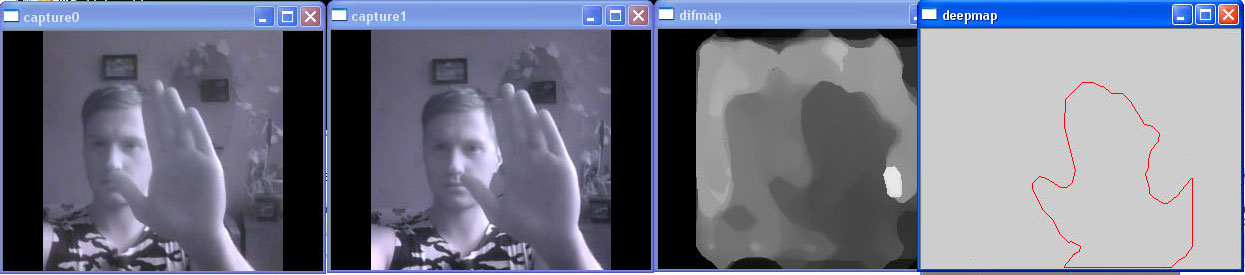

For the software component of INCOS we chose the OpenCV library, and CGAL for skeletonizing the extracted contours. Both libraries are open source. The two camera images are processed with an algorithm that uses sliding sums of absolute differences between pixels of the left and right images (Kurt Konolige's algorithm), which produces a depth map. Noise in the resulting image is then removed by specially designed software filters.

Images from the left and right cameras and the depth map on the test bench.
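The matching step described above can be sketched in a few lines of plain Python. This is a simplified illustration of sum-of-absolute-differences (SAD) block matching, not the INCOS code: it recomputes every window sum from scratch, whereas the real algorithm reuses sliding sums for speed, and it omits the pre- and post-filtering. The function names and the tiny synthetic image pair are assumptions made for the example.

```python
def sad(left, right, y, x, d, half):
    """Sum of absolute differences between the window centred at (x, y) in the
    left image and the window shifted d pixels to the left in the right image."""
    total = 0
    for dy in range(-half, half + 1):
        for dx in range(-half, half + 1):
            total += abs(left[y + dy][x + dx] - right[y + dy][x + dx - d])
    return total


def disparity_map(left, right, max_disparity=4, half=1):
    """For each pixel, pick the shift with the lowest SAD cost.
    Border pixels that cannot hold a full window keep disparity 0."""
    height, width = len(left), len(left[0])
    result = [[0] * width for _ in range(height)]
    for y in range(half, height - half):
        for x in range(half + max_disparity, width - half):
            costs = [sad(left, right, y, x, d, half)
                     for d in range(max_disparity + 1)]
            result[y][x] = costs.index(min(costs))
    return result


# Synthetic pair: the left image is the right image shifted by 2 pixels,
# so every interior pixel should be matched at disparity 2.
right = [[x * x + 3 * y for x in range(16)] for y in range(8)]
left = [[row[x - 2] if x >= 2 else 0 for x in range(16)] for row in right]
disp = disparity_map(left, right)
print(disp[4][8])
```

In practice one would more likely call OpenCV's block matcher (`cv2.StereoBM_create`) than hand-roll the loop; the pure-Python version is only meant to show what the cost function does.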

To pick out regions at the right distance from the camera (the human body is closer to the camera than the background), we currently use thresholding. To detect edges and recognize the contours of the human body, we use moment-based contour analysis. The skeleton model is then built with CGAL. The main goal of the device is control not only, and not so much, with the hands as with the whole body; therefore, in the skeleton, a hand is represented only by the thumb and by the remaining fingers treated as a single group. Next, the geometric characteristics of the skeleton branches are analyzed and the branches are divided into two classes, those that contain feature points and those that do not, using classifiers and machine learning methods (in particular, a support vector machine). Once the feature points have been determined, they are tracked. To recognize a gesture, a sequence of frames is analyzed; recognition is currently performed with the nearest neighbor method.
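Two of the simpler stages in this pipeline, thresholding the depth map and nearest-neighbor gesture matching, can be sketched as follows. The skeletonization (CGAL) and the SVM branch classifier are deliberately left out. All names, the template gestures, and the assumption that trajectories have already been resampled to the same number of points are illustrative, not taken from the INCOS source.

```python
import math


def threshold_mask(disparity, min_disparity):
    """Keep pixels that are close to the camera (large disparity)."""
    return [[1 if d >= min_disparity else 0 for d in row] for row in disparity]


def raw_moment(mask, p, q):
    """Raw image moment M_pq = sum of x^p * y^q over the foreground pixels."""
    return sum((x ** p) * (y ** q) * v
               for y, row in enumerate(mask)
               for x, v in enumerate(row))


def centroid(mask):
    """Centre of the foreground region, from the moments M10/M00 and M01/M00."""
    m00 = raw_moment(mask, 0, 0)
    if m00 == 0:
        return None
    return (raw_moment(mask, 1, 0) / m00, raw_moment(mask, 0, 1) / m00)


def trajectory_distance(a, b):
    """Sum of point-to-point distances between two equal-length trajectories."""
    return sum(math.dist(p, q) for p, q in zip(a, b))


def classify_gesture(trajectory, templates):
    """Nearest neighbor: return the name of the closest stored template."""
    return min(templates,
               key=lambda name: trajectory_distance(trajectory, templates[name]))


# Hypothetical templates: tracked feature-point positions over three frames.
TEMPLATES = {
    "swipe_right": [(0, 0), (1, 0), (2, 0)],
    "swipe_up": [(0, 0), (0, -1), (0, -2)],
}

mask = threshold_mask([[0, 0, 0, 0], [0, 5, 6, 0], [0, 5, 6, 0]], min_disparity=4)
print(centroid(mask))
print(classify_gesture([(0, 0), (0.9, 0.1), (2.1, 0)], TEMPLATES))
```

A real tracker would feed the centroid (or the skeleton's feature points) from consecutive frames into the trajectory, and would normalize it for position and scale before comparing it with the templates.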

The image shows the hand lit by infrared illumination, the binarized image (top), and the outline of the hand (display of the skeleton and the feature points is disabled).

Skeleton and feature point display enabled

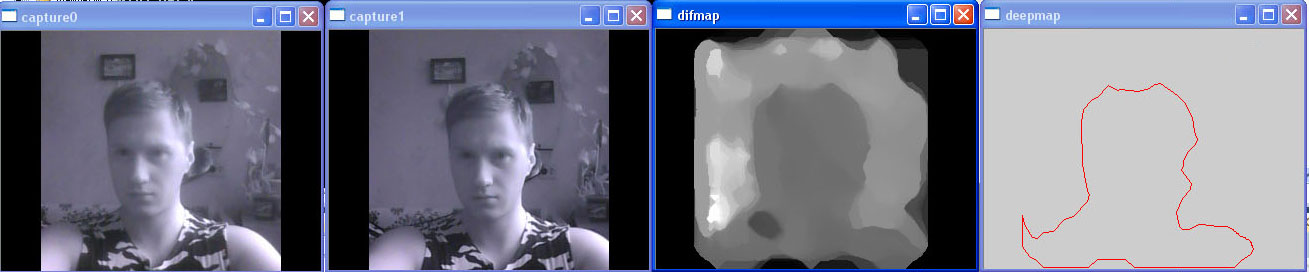

Recognition of the contour of the upper body

In the figure, the arm is closer than the body.

As you know, no voice recognition system currently performs this task with 100% accuracy, and ours is no exception. It should be emphasized that the system mainly recognizes isolated speech, or rather individual voice commands. This makes it possible to “bind” a command in any language to an action, since the meaning and phonetic content of the word do not matter to the device. To implement voice control we considered the CMUSphinx library as well as some of our own work on neural networks. We are now also looking at the Julius library. The system currently uses a Kohonen neural network. Preprocessing applies Hamming window smoothing, a discrete Fourier transform, and logarithmic compression of the spectrum.
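The preprocessing chain named above (Hamming window, discrete Fourier transform, logarithmic compression) might look roughly like this for a single audio frame. It is a sketch under assumptions: the frame length, the use of `log1p` for compression, and the function names are illustrative choices, and a naive O(n²) DFT is used only to keep the example dependency-free.

```python
import cmath
import math


def hamming_window(n):
    """Symmetric Hamming window of length n (n >= 2)."""
    if n < 2:
        raise ValueError("window length must be at least 2")
    return [0.54 - 0.46 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]


def dft_magnitudes(samples):
    """Magnitudes of the non-negative frequency bins of a naive DFT."""
    n = len(samples)
    return [abs(sum(s * cmath.exp(-2j * cmath.pi * k * i / n)
                    for i, s in enumerate(samples)))
            for k in range(n // 2 + 1)]


def log_spectrum(frame):
    """Window the frame, take the DFT, then compress magnitudes with log(1 + m)."""
    window = hamming_window(len(frame))
    windowed = [s * w for s, w in zip(frame, window)]
    return [math.log1p(m) for m in dft_magnitudes(windowed)]


# Test tone: 4 full cycles in a 32-sample frame, so the peak lands in bin 4.
frame = [math.sin(2 * math.pi * 4 * i / 32) for i in range(32)]
features = log_spectrum(frame)
print(features.index(max(features)))
```

Feature vectors of this kind, one per frame, are the sort of input a Kohonen network could then cluster into voice commands.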

Integration of INCOS with the Smart Home system is under development. At this stage INCOS provides an Ethernet connector, since these devices are controlled over a local network, which also allows the system to be configured through a web interface.

We can see that at this stage there is already a working prototype on which contactless control algorithms can be tested. Unfortunately, the materials presented above are still far from what is promised in the project on Boomstarter. But the team is working on the project, using new components, and moving steadily towards its goal. I hope that our joint support on Boomstarter will give life to such an interesting and promising project as INCOS.

Source: https://habr.com/ru/post/202724/
