Clustering web applications hosted on Amazon Web Services

High-load applications are a well-worn topic. I decided to add my two cents anyway and share our experience of building a high-load application on the AWS infrastructure.

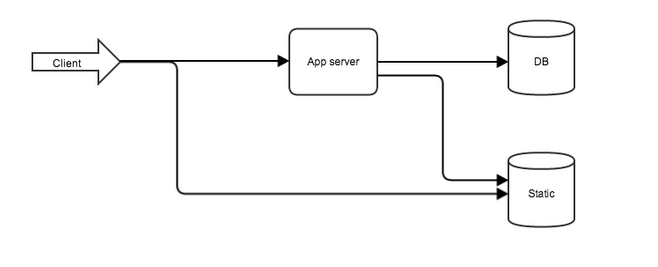

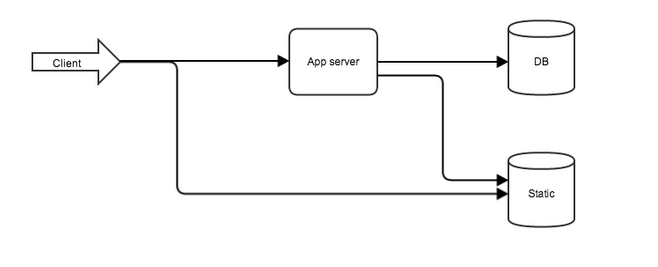

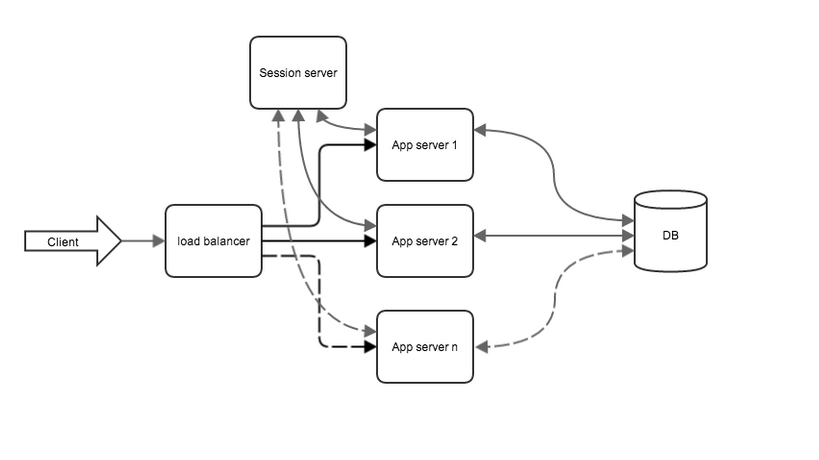

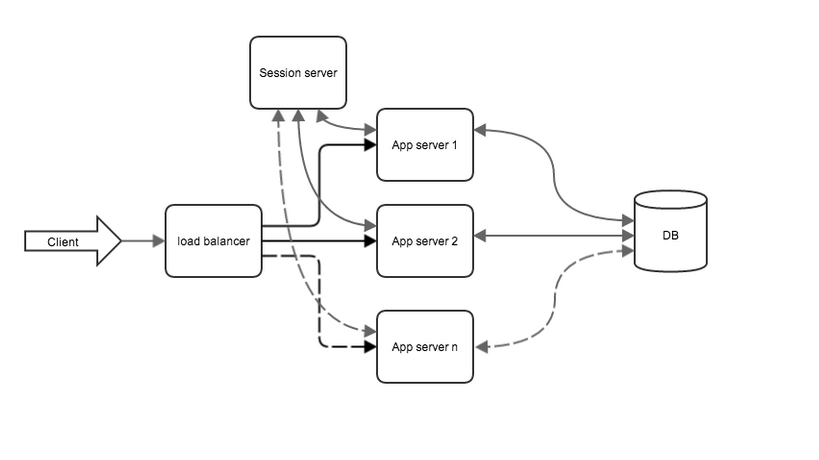

First, let me be unoriginal and repeat some well-known truths. There are two ways to scale an application:

1) vertical scaling, which increases the performance of each individual component of the system (processor, RAM, other components);

2) horizontal scaling, in which several machines are joined together so that the system as a whole consists of a set of compute nodes solving a common problem. This increases the overall reliability and availability of the system, and performance grows by adding more nodes.

The first approach is not bad, but it has a significant drawback: the capacity of a single compute node is limited. You cannot increase the clock frequency of a processor core or the bus bandwidth indefinitely.

Therefore, horizontal scaling clearly beats its vertical sibling: when performance falls short, you can add a node (or a group of nodes) to the system.

Recently, we once again experienced all the delights of horizontal scaling in practice: we built a highly reliable social service for American football fans that withstands a peak load of 200,000 requests per minute. So I want to describe our experience of creating a highly scalable system on the Amazon Web Services infrastructure.
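
To get a feel for what such a peak means, here is a rough back-of-the-envelope sketch in Java. The peak figure comes from this article; the per-node capacity of 35,000 requests per minute is purely a hypothetical number chosen for illustration, not something we measured.

```java
// Back-of-the-envelope capacity estimate for horizontal scaling.
final class CapacityEstimate {
    /** Converts a per-minute request rate to requests per second. */
    static double perSecond(long requestsPerMinute) {
        return requestsPerMinute / 60.0;
    }

    /** Smallest number of identical nodes whose combined capacity covers the peak. */
    static long nodesNeeded(long peakPerMinute, long perNodePerMinute) {
        if (perNodePerMinute <= 0) {
            throw new IllegalArgumentException("per-node capacity must be positive");
        }
        return (peakPerMinute + perNodePerMinute - 1) / perNodePerMinute; // ceiling division
    }

    public static void main(String[] args) {
        long peak = 200_000;   // peak load from the article, requests per minute
        long perNode = 35_000; // HYPOTHETICAL capacity of one node, not a measured value
        System.out.printf("%.0f requests per second at peak%n", perSecond(peak));
        System.out.println(nodesNeeded(peak, perNode) + " nodes needed");
    }
}
```

In practice the per-node figure has to come from load testing, and it rarely scales perfectly linearly, so an estimate like this is only a starting point.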

Usually, the web application architecture looks like this:

Fig. 1. Typical web application architecture

- the user is first “met” by the web server, which is responsible for delivering static resources and passing requests on to the application;

- the baton then passes to the application, which handles all the business logic and the interaction with the database.

Most often, the bottlenecks of the system are the application code and the database, so both must be designed so that they can be parallelized. We used:

- Java 7 and Jersey (REST)

- application server: Tomcat 7

- database: MongoDB (NoSQL)

- cache system: memcached

How it went, or through thorns to high load

Step One: Divide and Conquer

First of all, we optimize the code and the database queries as far as possible, and then we split the application into several parts according to the nature of the tasks they perform. We move the following onto separate servers:

- application server;

- database server;

- server with static resources.

Each element of the system deserves an individual approach, so for each one we choose a server whose parameters best match the nature of its tasks.

Application server

The application is best served by a machine with the largest number of processor cores (to serve a large number of concurrent users). Amazon provides a family of Compute Optimized instances that fits this purpose.

Database server

What does a database do? Numerous disk I/O operations (reading and writing data). The best option here is a server with the fastest disk (for example, an SSD). Again, Amazon obliges and offers Storage Optimized instances, but a General Purpose server (large or xlarge) will also do, since I am going to scale the database later.

Static resources

Static resources need neither a powerful processor nor a large amount of RAM, so here the choice falls on Amazon Simple Storage Service (S3).

After splitting the application, I arrived at the scheme shown in Fig. 1.

Advantages of separation:

- each element of the system runs on the machine best suited to its needs;

- the database can now be clustered;

- the elements of the system can be tested separately to find weak points.

But the application itself is still not clustered, and there are no servers yet for the cache or for session replication.

Step Two: Experiments

For accurate experiments and performance testing, we need one or more machines with a sufficiently wide network channel. User actions are emulated with Apache JMeter. It is convenient because it can use real access logs from the server as test data, or you can record a scenario by proxying your browser through it and then replay that scenario in several hundred parallel threads.
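
As an illustration of the "real access logs as test data" idea, here is a minimal Java sketch that pulls the HTTP method and path out of access-log lines so they could be replayed. It is not how JMeter does it internally; it assumes the log is in Common Log Format, and the class and method names are made up for this example.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class AccessLogReplay {
    // Matches the quoted request part of a Common Log Format line,
    // for example "GET /some/path HTTP/1.1" (assumed log format).
    private static final Pattern REQUEST = Pattern.compile("\"([A-Z]+) (\\S+) [^\"]*\"");

    /** Returns "METHOD path" for every line that contains a request; other lines are skipped. */
    static List<String> extractRequests(List<String> logLines) {
        List<String> requests = new ArrayList<String>();
        for (String line : logLines) {
            Matcher m = REQUEST.matcher(line);
            if (m.find()) {
                requests.add(m.group(1) + " " + m.group(2));
            }
        }
        return requests;
    }
}
```

The resulting list preserves the order and the proportions of real traffic, which is the main reason to prefer recorded logs over hand-written scenarios.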

Step Three: Load Balancing

The experiments showed that the resulting performance was still insufficient, and the application server was loaded at 100% (the weak link was the application code itself). So I parallelize. Two new elements enter the game:

- load balancer

- session server

Load balancing

As it turned out, the application cannot cope with the load on a single server, so the load has to be shared among several servers.

As a load balancer, you could start another server with a wide channel and install dedicated software on it (haproxy [], nginx [], DNS []), but since we work in the Amazon infrastructure, we use the ELB service (Elastic Load Balancer) that already exists there. It is very easy to set up and performs well. First, the existing application machine must be cloned so that a pair of machines can be added to the balancer. Cloning is done with an Amazon AMI. The ELB automatically monitors the state of the machines in its distribution list. For this, the application should implement a simple ping resource that responds to requests with a 200 status code; the address of this resource is specified in the balancer settings.
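
A ping resource of this kind can be very small. In our stack it would be a Jersey resource; to keep the example self-contained, the sketch below uses the HTTP server built into the JDK instead. The `/ping` path and the `OK` body are assumptions for illustration, not the values from the real application.

```java
import com.sun.net.httpserver.HttpExchange;
import com.sun.net.httpserver.HttpHandler;
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;

final class PingEndpoint {
    /** Starts an HTTP server exposing /ping; passing port 0 lets the OS pick a free port. */
    static HttpServer start(int port) throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress(port), 0);
        server.createContext("/ping", new HttpHandler() {
            @Override
            public void handle(HttpExchange exchange) throws IOException {
                byte[] body = "OK".getBytes("UTF-8");
                // A 200 status tells the balancer that this node is alive.
                exchange.sendResponseHeaders(200, body.length);
                OutputStream out = exchange.getResponseBody();
                out.write(body);
                out.close();
            }
        });
        server.start();
        return server;
    }
}
```

It is worth keeping such a resource cheap: the balancer calls it on every node at a fixed interval, so it should not touch the database or do any heavy work unless you deliberately want a failing dependency to take the node out of rotation.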

After configuring the balancer to work with the two application servers, I point the DNS at the balancer.

Session replication

This step can be skipped if the application does not rely on the HTTP session or is a simple REST service. Otherwise, all application instances behind the balancer need access to a common session store. To store sessions, one more large instance is started and an in-memory memcached store is configured on it. Session replication is handled by a module for Tomcat: memcached-session-manager [5].
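
The idea of a common session store can be sketched in a few lines of Java. This is not how memcached-session-manager is implemented; an in-process map stands in for the memcached server, and the `SharedSessionStore` and `AppNode` classes are hypothetical names used only for this illustration.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

/** Stand-in for the memcached server: one store shared by every application node. */
final class SharedSessionStore {
    private final Map<String, Map<String, String>> sessions =
            new ConcurrentHashMap<String, Map<String, String>>();

    /** Returns the attribute map of a session, creating it on first access. */
    Map<String, String> session(String sessionId) {
        Map<String, String> s = sessions.get(sessionId);
        if (s == null) {
            sessions.putIfAbsent(sessionId, new ConcurrentHashMap<String, String>());
            s = sessions.get(sessionId);
        }
        return s;
    }
}

/** An application node that keeps no session state of its own. */
final class AppNode {
    private final SharedSessionStore store;

    AppNode(SharedSessionStore store) {
        this.store = store;
    }

    void login(String sessionId, String user) {
        store.session(sessionId).put("user", user);
    }

    String currentUser(String sessionId) {
        return store.session(sessionId).get("user");
    }
}
```

Because neither node holds the session itself, a user who logs in through one node is still recognized when the balancer sends the next request to another node.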

Now the system looks like this (the static server is omitted to simplify the scheme):

Fig. 2. View of the system after application clustering

Application clustering results:

- increased system reliability: even if one of the application servers fails, the balancer excludes it from the list and the system continues to function;

- to increase system performance, you simply add another compute node with the application;

- by adding extra nodes, we reached a peak performance of 70,000 requests per minute.
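
The first point, excluding a failed server from the list, can be illustrated with a simplified round-robin balancer in Java. This is only a sketch of the general technique and makes no claim about how ELB works internally; the node names used with it are hypothetical.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

final class RoundRobinBalancer {
    private final List<String> nodes = new ArrayList<String>();
    private final Set<String> unhealthy = new HashSet<String>();
    private int next = 0;

    synchronized void addNode(String node) {
        nodes.add(node);
    }

    /** Called when a health check (for example, the ping resource) fails. */
    synchronized void markUnhealthy(String node) {
        unhealthy.add(node);
    }

    /** Called when a previously failed node answers health checks again. */
    synchronized void markHealthy(String node) {
        unhealthy.remove(node);
    }

    /** Returns the next healthy node in rotation, or throws if none is available. */
    synchronized String pick() {
        for (int i = 0; i < nodes.size(); i++) {
            String candidate = nodes.get(next);
            next = (next + 1) % nodes.size();
            if (!unhealthy.contains(candidate)) {
                return candidate;
            }
        }
        throw new IllegalStateException("no healthy nodes");
    }
}
```

Adding capacity is then just another `addNode` call, which mirrors the second point in the list above.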

As the number of application servers grows, so does the load on the database, and eventually it can no longer cope. The load on the database has to be reduced.

Step Four: Optimizing Work with the Database

Load testing is run again with Apache JMeter, and this time everything is limited by database performance. To optimize work with the database, I apply two approaches: caching query results and replicating the database for read requests.

Caching

The basic idea of caching is to keep the most frequently requested data in RAM. When a request is repeated, we first check whether the requested data is in the cache; only if it is not there do we query the database and then place the result in the cache. For caching, an additional server with memcached and sufficient RAM was deployed.
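
This check-then-load-then-store sequence can be sketched in Java as follows. An in-process map stands in for memcached, and the `Database` interface is a hypothetical abstraction over the real data source (MongoDB in our case), so treat it as an outline of the pattern rather than our production code.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

final class CacheAside {
    /** Hypothetical abstraction over the slow data source. */
    interface Database {
        String load(String key);
    }

    // Stand-in for the memcached server.
    private final Map<String, String> cache = new ConcurrentHashMap<String, String>();
    private final Database database;

    CacheAside(Database database) {
        this.database = database;
    }

    String get(String key) {
        String value = cache.get(key);      // 1. check the cache first
        if (value == null) {
            value = database.load(key);     // 2. on a miss, query the database
            if (value != null) {
                cache.put(key, value);      // 3. store the result for later requests
            }
        }
        return value;
    }
}
```

The sketch deliberately leaves out expiry and invalidation: once data is written to the database, the cached copy has to be refreshed or dropped, otherwise readers keep seeing the old value.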

Database replication

Our application reads data much more often than it writes it.

Therefore, we cluster the database for reading, and this is where database replication helps. Replication in MongoDB is organized as follows: the database servers are divided into a master and slaves. Data is written directly only to the master, which then synchronizes it to the slaves, while reads are allowed from both the master and the slaves.

Fig. 3. DB clustering for reading
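
The routing rule behind this scheme (writes to the master only, reads from any server) can be expressed as a small Java sketch. It is a simplified illustration and not the MongoDB driver API; the `ReplicaRouter` class and the server names used with it are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

final class ReplicaRouter {
    private final String master;
    private final List<String> readable = new ArrayList<String>(); // master plus all slaves
    private int nextRead = 0;

    ReplicaRouter(String master, List<String> slaves) {
        this.master = master;
        readable.add(master);
        readable.addAll(slaves);
    }

    /** Writes always go to the master. */
    String nodeForWrite() {
        return master;
    }

    /** Reads rotate over the master and the slaves. */
    synchronized String nodeForRead() {
        String node = readable.get(nextRead);
        nextRead = (nextRead + 1) % readable.size();
        return node;
    }
}
```

One consequence to keep in mind: because the master synchronizes data to the slaves after the write, a read served by a slave may briefly return slightly older data than the master holds.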

Final: 200K requests per minute

As a result of this work, we achieved our goal: the system handles 200,000 requests per minute.

Source: https://habr.com/ru/post/202280/