Our tanks. The history of load testing at Yandex

Today I want to recall how load testing at Yandex came about, how it developed, and how it is organized now.

By the way, if you like this story, come to Test Environment at our St. Petersburg office on November 30 (register). There I will tell you more about game mechanics in testing and will be happy to talk with you in person. Now, to the story.

In 2005-2006, part of Yandex's non-search infrastructure began to experience rapidly growing load. We needed to test the performance of services related to search, first and foremost the banner rotator. Timur Khayrullin, who was in charge of load testing at the time, set about finding a suitable tool.

But the open-source solutions available at the time were either very primitive (ab, siege) or not performant enough (JMeter). Among commercial tools, HP LoadRunner stood out, but the high cost of licenses and the proprietary nature of the software did not appeal to us. So Timur, together with Zhenya Mamchits, the developer of the high-performance web server phantom, came up with a clever trick: they taught the server to work in client mode. That is how the phantom-benchmark module came about. The phantom code is now open source and can be downloaded here, and a video of a talk about phantom is available here.

Back then, phantom was very simple: it could only measure a server's maximum throughput, and the only thing we could limit was the number of threads. Even so, our tool already outperformed its peers, so more and more services came to us for load testing. From 2006 to 2009, the load testing team grew to ten people. The nickname "tankers" stuck to us very quickly: we load "belts" with "cartridges" and "fire" from "tanks" at "targets". The tank theme is still with us. To save resources, we set up a special "proving ground", also known as the "chicken coop", where we kept virtual machines for load testing. Back then the virtualization platform was OpenVZ; now we have completely switched to LXC because of its better support for new kernels and Ubuntu Server releases. Respect to the LXC community!

Along with the influx of new services and the growing popularity of load testing (or rather, as service teams became more aware of performance), we came to understand the limitations of our tool.

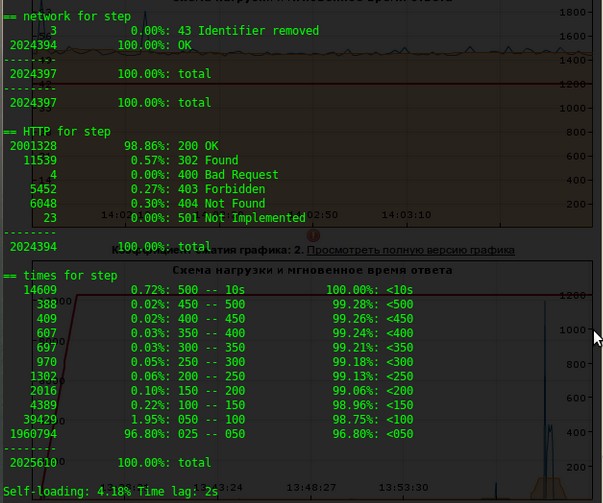

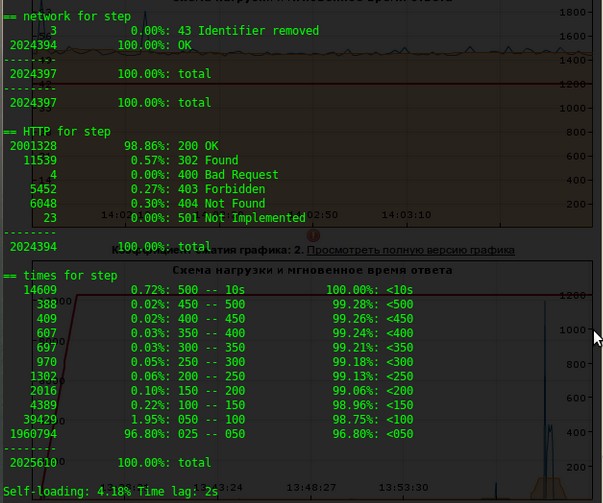

With the help of developers and under the leadership of "tanker" Andrei (baabaka) Kuzmichev, we began turning phantom into a full-fledged load testing framework: Lunapark. Previously, because reporting was poorly organized, results were stored haphazardly: in the wiki, JIRA, email, and so on. This was very inconvenient, so we invested a lot of work in this sore point, and gradually we got a real web interface with dashboards and graphs, where every test was tied to a JIRA ticket and all reports finally became uniform and clearly laid out. The web interface learned to display percentiles, timings, average times, response codes, the amount of received and transmitted data, and about 30 different graphs and tables. In addition, Lunapark was integrated with email, Jabber, and other services. The phantom load generator itself changed too, learning many things it could not do before. For example, it could send requests according to a schedule (linear, stepwise, decreasing load) and even (!) generate zero and fractional load. The console output gained aggregated results with percentiles, as well as monitoring of data volume, errors, and responses. This is what the console output looked like in 2009:

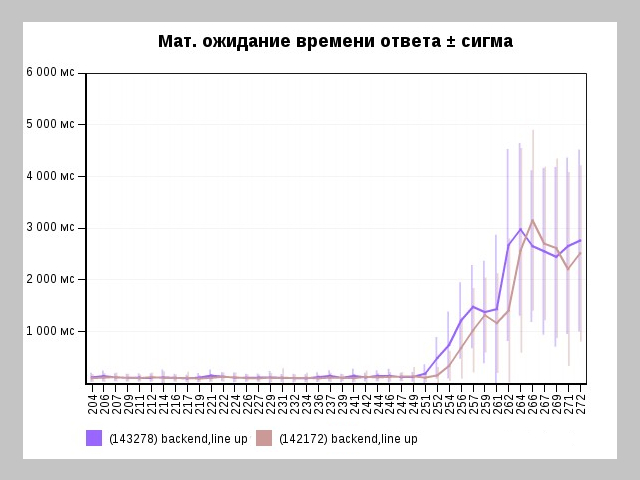
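The article does not show how phantom turns a schedule into request timings, so here is a minimal sketch under our own assumptions. A schedule is a list of segments whose request rate changes linearly from `start` to `end` rps over `duration` seconds, and the k-th request of a segment is sent when the cumulative request count (the area under the rate curve) reaches k. Constant, stepwise, zero, and fractional load then fall out as special cases. The names `Segment`, `line`, `const`, `step`, and `send_times` are hypothetical and only echo the vocabulary of the paragraph above; this is not phantom's code.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Segment:
    """Hypothetical segment: rate changes linearly from `start` to `end` rps over `duration` s."""
    start: float
    end: float
    duration: float

    def count(self) -> int:
        # Total requests = area under the rate curve (a trapezoid), rounded down.
        return int((self.start + self.end) / 2 * self.duration)

    def offset(self, k: int) -> float:
        """Seconds from segment start at which the cumulative request count reaches k."""
        a, b, d = self.start, self.end, self.duration
        slope = (b - a) / d
        if slope == 0:
            return k / a  # constant rate: evenly spaced requests
        # Solve a*t + slope*t**2/2 = k for its non-negative root.
        return (-a + math.sqrt(a * a + 2 * slope * k)) / slope


def line(start: float, end: float, duration: float) -> List[Segment]:
    return [Segment(start, end, duration)]


def const(rps: float, duration: float) -> List[Segment]:
    # rps may be 0 (a pause) or fractional (0.5 = one request every 2 s).
    return [Segment(rps, rps, duration)]


def step(start: float, end: float, increment: float, step_duration: float) -> List[Segment]:
    # Assumes a non-zero increment with the same sign as (end - start).
    n = int(round((end - start) / increment)) + 1
    return [const(start + i * increment, step_duration)[0] for i in range(n)]


def send_times(*parts: List[Segment]) -> List[float]:
    """Flatten schedule parts into absolute send times, in seconds from test start."""
    times: List[float] = []
    t0 = 0.0
    for part in parts:
        for seg in part:
            times.extend(t0 + seg.offset(k) for k in range(seg.count()))
            t0 += seg.duration
    return times


if __name__ == "__main__":
    # 0.5 rps for 10 s, a 5 s pause (zero load), then 2 rps for 1 s.
    print(send_times(const(0.5, 10), const(0, 5), const(2, 1)))
    # [0.0, 2.0, 4.0, 6.0, 8.0, 15.0, 15.5]
```

Precomputing absolute send times, rather than waiting for each response before sending the next request, keeps the offered load independent of how quickly the server answers.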

Gradually it became clear that we needed not only to test some services constantly, but also to follow them year after year, from release to release, and to be able to compare tests with each other. This is how two Lunapark pages essential to a load tester's work appeared: test comparison and regression. Their first versions are shown below.

A tester, developer, or manager could find out at any time how the product's performance was evolving. We are now working on improving these pages and making them the centerpiece of the framework. At the current stage of development, what matters most to us is not individual tests but performance trends.

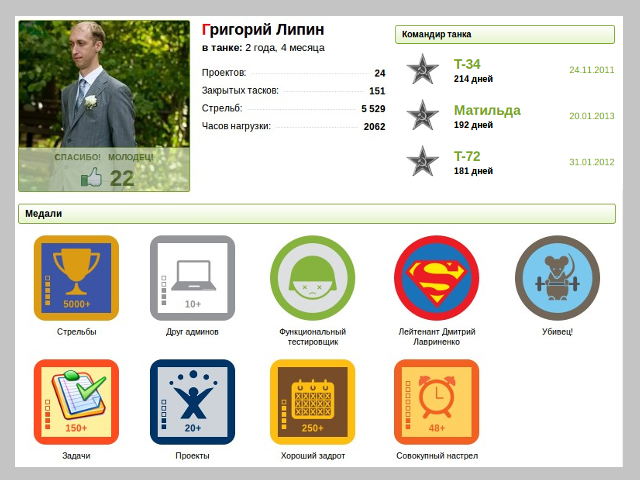

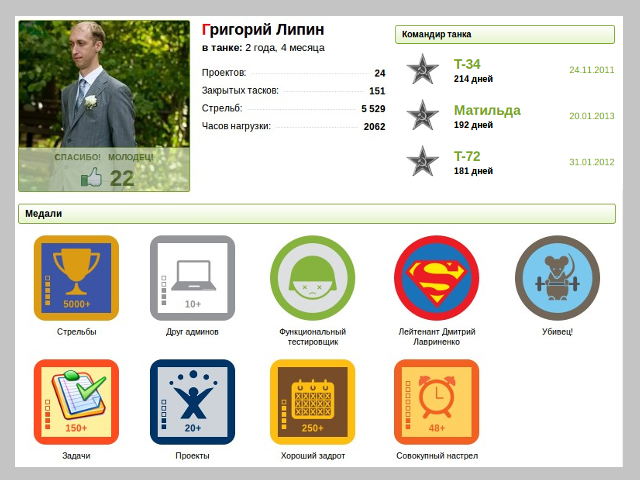
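The article does not describe how the comparison and regression pages decide that performance has degraded. As a hypothetical illustration: compute the same percentiles of response time for a baseline run and a new run, and flag every percentile that grew by more than a chosen tolerance. The nearest-rank percentile definition, the 10% tolerance, and the function names below are our assumptions, not Lunapark's.

```python
import math
from typing import Dict, Sequence, Tuple


def percentile(samples: Sequence[float], q: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least q% of samples <= it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(q * len(ordered) / 100))
    return ordered[rank - 1]


def compare_runs(
    baseline: Sequence[float],
    candidate: Sequence[float],
    quantiles: Sequence[float] = (50, 95, 99),
    tolerance: float = 0.10,
) -> Dict[float, Tuple[float, float, bool]]:
    """For each quantile, return (baseline value, candidate value, regressed?).

    A quantile counts as regressed (hypothetical rule) if the candidate's
    response time exceeds the baseline's by more than `tolerance`.
    """
    report = {}
    for q in quantiles:
        old, new = percentile(baseline, q), percentile(candidate, q)
        report[q] = (old, new, new > old * (1 + tolerance))
    return report


if __name__ == "__main__":
    baseline = list(range(1, 101))                 # response times, ms
    candidate = list(range(1, 96)) + [200] * 5     # same median, heavier tail
    for q, (old, new, bad) in compare_runs(baseline, candidate).items():
        print(f"p{q}: {old} -> {new} {'REGRESSION' if bad else 'ok'}")
```

Comparing several percentiles rather than the mean is what exposes the regression in this example: the median is unchanged, and only the tail (p99) has grown.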

In 2011, an important event happened: we became the first team at Yandex to introduce real gamification of the workflow, which I will cover in a separate talk at Test Environment. Even now this is rare in the most advanced IT companies. On the Lunapark page we placed a "Hall of Fame", and we are very proud of this part of the framework. For a specific test, you can give the load tester a real, friendly "Thank you!". Each tester receives badges for particular events, can become a "tank commander" (like a mayor on Foursquare), and even earns a "rank" based on the number of tests performed. Amid routine work, every achievement or thank-you is worth its weight in gold. It is very motivating.

We consider 2011 a turning point in our load testing. The development team was headed by Andrei (undera) Pokhilko. The tester community knows him as the developer of great plugins for JMeter. Andrei brought fresh ideas and approaches that still help us a lot in our work.

First, we realized that developing the tool in the old "no time to explain, just code" paradigm does not work, and we switched to modular development, where a large monolith is broken into components that are developed separately without threatening the whole project. Second, since load testing requests began to come from services for which HTTP traffic was irrelevant, we needed a tool that could test SMTP, POP3, FTP, DNS, and other protocols. Writing dedicated phantom modules for each such service seemed too expensive, so we decided to embed an ordinary JMeter into Lunapark. This way, with little effort, we learned to support dozens of new protocols in load testing. Embedding JMeter let us keep our standard web interface instead of switching to the JMeter GUI. Besides JMeter support, our tank eventually learned to handle SSL, IPv6, UDP, Elliptics, "multitests" against several addresses from one generator, loads of several million rps from distributed generators, and much more.
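The article does not say how results from the embedded JMeter ended up in Lunapark's usual charts. One plausible approach, sketched here under our own assumptions, is to read JMeter's CSV results file (JTL) and reduce it to the same per-second metrics a native run produces: request rate, average response time, and response codes. We assume the file was saved as CSV with a header row using JMeter's default field names (`timeStamp` in epoch milliseconds, `elapsed` in milliseconds, `responseCode`); the function name `aggregate_jtl` is hypothetical.

```python
import csv
import io
from collections import Counter, defaultdict
from typing import Dict, TextIO


def aggregate_jtl(stream: TextIO) -> Dict[int, dict]:
    """Group JMeter CSV samples into per-second buckets (hypothetical helper).

    Assumes a header row with `timeStamp` (epoch ms), `elapsed` (ms)
    and `responseCode` columns.
    """
    buckets: Dict[int, dict] = defaultdict(
        lambda: {"count": 0, "elapsed_ms": 0, "codes": Counter()}
    )
    for row in csv.DictReader(stream):
        second = int(row["timeStamp"]) // 1000  # bucket by whole second
        bucket = buckets[second]
        bucket["count"] += 1
        bucket["elapsed_ms"] += int(row["elapsed"])
        bucket["codes"][row["responseCode"]] += 1
    return {
        second: {
            "rps": b["count"],
            "avg_ms": b["elapsed_ms"] / b["count"],
            "codes": dict(b["codes"]),
        }
        for second, b in sorted(buckets.items())
    }


if __name__ == "__main__":
    sample = io.StringIO(
        "timeStamp,elapsed,label,responseCode\n"
        "1000,10,login,200\n"
        "1500,30,login,500\n"
        "2100,5,login,200\n"
    )
    print(aggregate_jtl(sample))
```

Normalizing foreign results into one per-second format is what would let a single web interface chart phantom and JMeter tests side by side.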

At a certain point, orders for load testing fell so much that it became obvious to us: we will not be able to double the staff every year without removing the routine and regression tests from the load tester. To solve this problem, we analyzed all our current daily work, and it turned out that services need to be approached with varying degrees of testing depth. We came up with the following scheme of work:

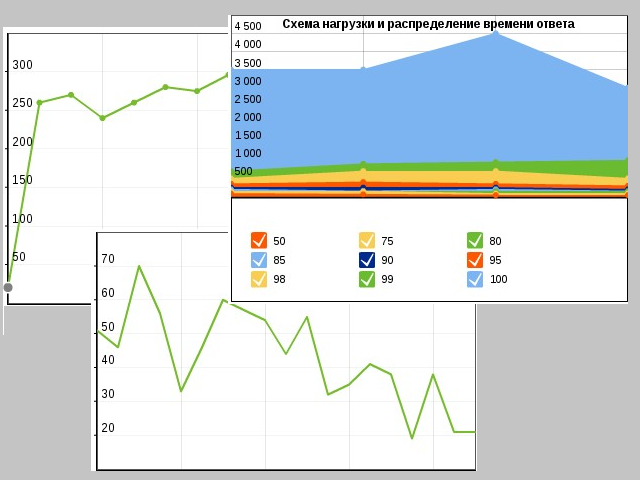

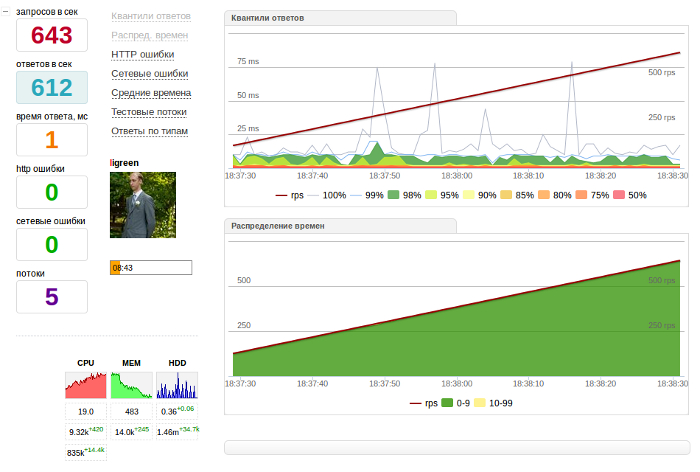

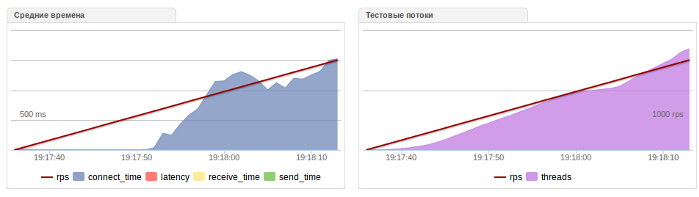

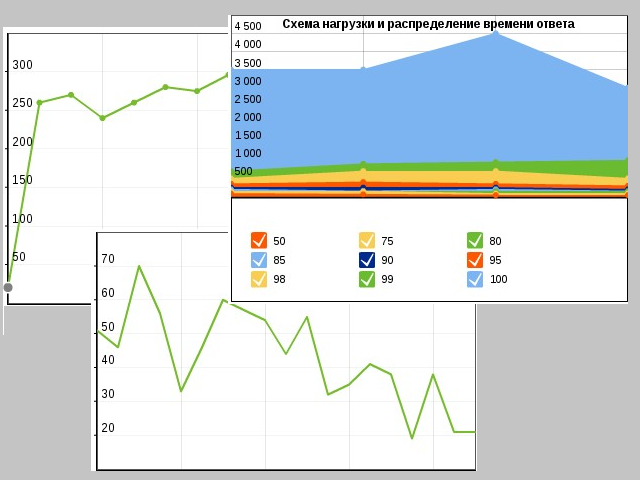

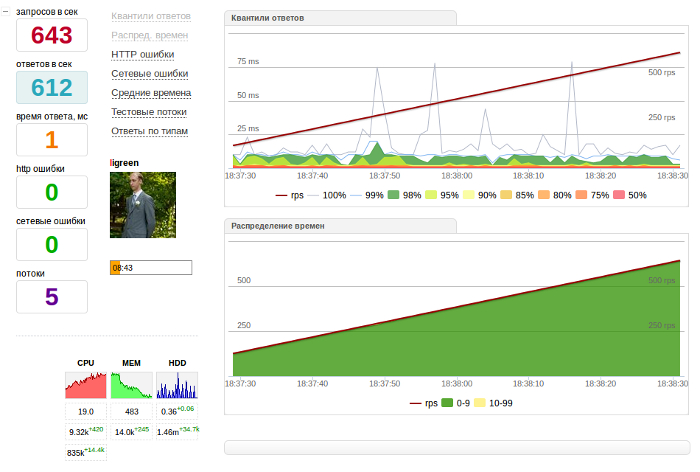

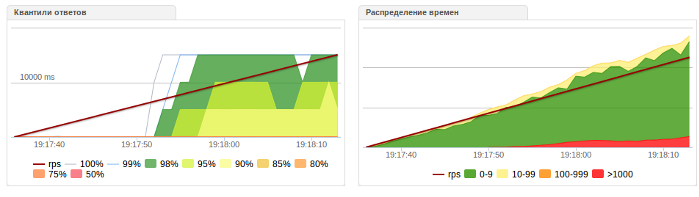

An example of an online test page.

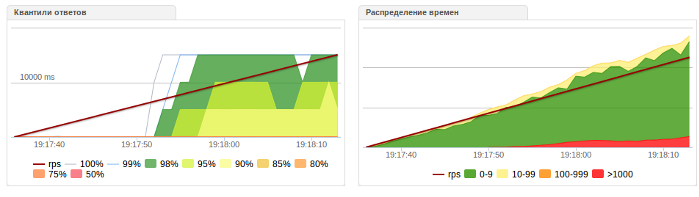

For analysis, you can include additional graphs, for example, response times.

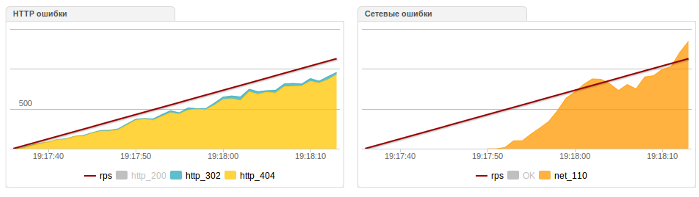

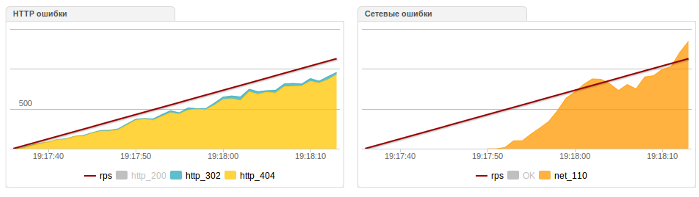

HTTP and network errors:

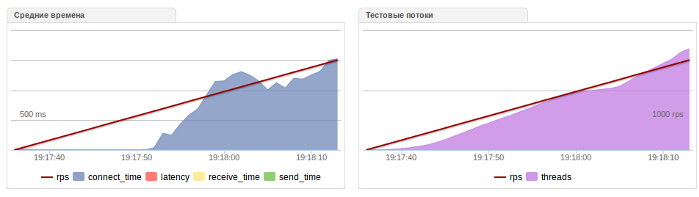

Times at different stages of interaction and flows:

For regression tests, or for tests, the acceptance of the results of which is carried out by developers or administrators, we did the so-called automatic and semi-automatic tests. About this you need to tell separately.

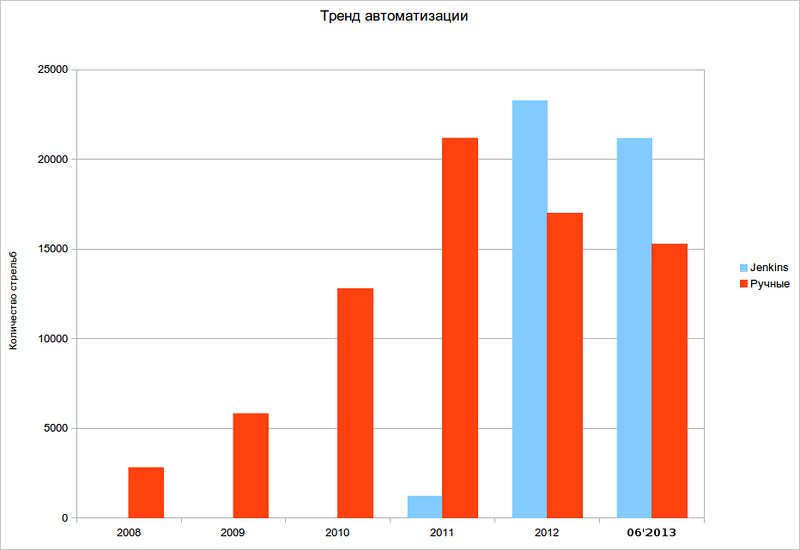

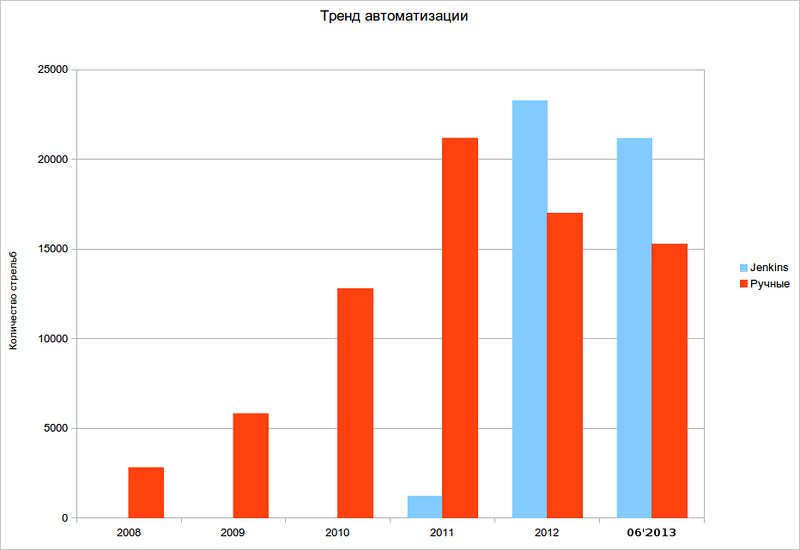

At the end of 2011, we realized that in fact all the test operations can be done by scripts, calls, or, more simply, by some executing mechanism. Ideologically, CI frameworks that are able to build projects, run a test suite, report events and pass a verdict are the closest to such activities. We looked at the options for these tools and found that there are not so many open frameworks. Jenkins seemed to us most convenient for extending the functionality with the help of plug-ins and, having tested it near Lunapark, introduced it into testing. With the help of external calls to a special API and built-in scheduler, we were able to shift all the routine work of the tester to Jenkins. The developers received the cherished button “Test my service now!”, The load users received dozens or even hundreds of tests a day without their participation, managers and system administrators received regression performance charts from build to build. At the moment, automatic tests account for about 70% of the total flow, and this figure is constantly growing. This saves us dozens of people in the staff and allows us to concentrate the tester's intelligence on manual and research tests.

The attentive reader will notice that Lunapark gradually became a separate structure: an alienable generator generator, a backend for statistics and telemetry, as well as a separate automation framework. Having looked it over and knowing that from YAC'10, where baabaka told about Lunapark, testers of the entire Runet at every opportunity troll us open Lunapark outside, we decided to put part of Lunapark in opensource. In the summer of 2012, at one of the Yandex.Subbotnik in Moscow, we presented a load generator to the testers community. Now Yandex.Tank with light graphics, with built-in support for jmeter and ab, develops only on the external githabe, we answer user questions in the strawberry and accept external pull requests from the developers.

We know that the community of load testers in RuNet is small, the available knowledge is very scarce and superficial, but nevertheless, interest in the topic of performance is only growing. Therefore, we will be happy to share our accumulated experience and knowledge in this area and promise to periodically publish articles on the topic of workloads, tools and testing methods.

By the way, if you like this story, come to the Test Environment in our St. Petersburg office on November 30 ( register ) - there I will tell you more about the game mechanics in testing and will be happy to talk to you live. So.

')

In 2005-2006, part of the non-search infrastructure of Yandex began to experience loads growing like a rune. There is a need to test the performance of related services with the search, in the first place - a banner twister. Timur Khayrullin, who at the time was in charge of load testing, was puzzled by the search for a suitable tool.

But the open solutions existing at that time were either very primitive (ab / siege) or not productive enough (jmeter). HP Load Runner stood out from commercial utilities, but the high cost of licenses and the proprietary software package did not make us happy. Therefore, Timur, together with the developer of the high-performance web server phantom Zhenya Mamchits, came up with a clever trick: they taught the server to work in client mode. So the phantom-benchmark module turned out. The phantom code itself is now open and can be downloaded from here , and you can watch a video about the phantom from the presentation here .

Then Phantom was very simple, able to measure only the maximum performance of the server, and we could only limit the number of threads. But already at that time, our utility was more productive than its peers. Therefore, for load testing, they began to contact us more and more and more services. From 2006 to 2009, the load testing team grew to ten people. The name “tankers” attached to us very quickly; they charge “tapes” with “cartridges” and “shoot” from “tanks” at “targets”. The tank theme is still with us. To save resources, we created a special "landfill" or "chicken coop", where we kept virtual machines for load testing. The virtualization platform at that time was on openvz, and now we have completely switched to lxc due to better support for new kernels and distributions of ubuntu server. Respect the lxc community!

In parallel with the incoming services and the growing popularity of load testing, or rather, with the growth of self-awareness of service teams, an understanding of the limitations of the tool’s capabilities came.

With the assistance of the developers and under the leadership of “tankman” Andrei baabaka Kuzmichev, we began to develop the phantom into a real framework to support load testing - Lunapark. Previously, due to poor organization of reports, the results were stored haphazardly - in Wiki, JIRA, mail, etc. This was very inconvenient, we invested a lot of work in this sore point and gradually we had a real web interface with dashboards and graphs, where all the tests were tied to tickets in JIRA, all reports finally received uniformity and clear design. The web interface has learned to display percentiles, timings, average times, response codes, the amount of received and transmitted data and about 30 different graphs and tables. In addition, Lunapark was tied with mail, jabber and other services. The changes did not bypass the Phantom load generator itself - it learned how to do a lot of things that it could not do before. For example, to submit requests on a schedule - linear, stepwise, reduce the load and even (!) Submit zero and fractional load. An aggregation output with percentiles, monitoring the amount of data, errors, responses were added to the console output. It looked like a console output sample of 2009.

Gradually, it became clear that it was necessary not only to constantly test some services, but also to accompany them from year to year from release to release, and also to be able to compare tests with each other. This is how the Lunapark pages important for the work of a load tester appeared: comparison of tests and regression. It looked like their first versions.

A tester, developer or manager at any time could find out how the product is developing in terms of performance. Now we are working on improving and bringing these pages to the head of the framework. At the current stage of development, it is not the unit tests that are most important for us, but performance trends.

In 2011, an important event happened - we became the first team in Yandex, which launched the real gamification of the workflow, about which I will have a separate report on the Test Environment. This even now is rarely found in the most advanced IT companies. On the Lunapark page we placed the “Hall of Fame” and are very proud of this part of the framework. For a specific test, you can tell the load tester a real friendly “Thank you!”. Each tester receives different badges for a particular event, becomes a “tank commander” (a la mayor in Forskverik) and even a “rank”, which is given for the number of tests performed. Among the routine work any achivka or thanks for its weight in gold. It is very cool motivating.

We consider 2011 as a turning point in our load testing. The development team was headed by Andrew undera Pokhilko. The tester community knows it as a developer of great plug-ins for Jmeter . Andrei brought fresh ideas and approaches that are now very helpful in the work.

First, we realized that developing the tool in the old paradigm “no time to explain, encode” does not work, and switched to a modular development paradigm where a large monolith is broken down into components and developed into separate parts without creating a threat to the whole project. Secondly, since orders for load testing began to come from services for which http traffic was not interesting, we needed a tool that could be used to test SMTP / POP3 / FTP / DNS and other protocols. We thought it was expensive to write phantom-loggers for each such service, and we decided to build an ordinary Jmeter into Lunapark. Thus, with small efforts, we learned to support dozens of new protocols in load testing. Embedding helped us leave the standard web interface without switching to the Jmeter GUI. In addition to Jmeter support, our tank eventually learned to support SSL, IPv6, UDP, elliptics, “multitests” to several addresses from one generator, load loading of several million rps from distributed generators and much more.

At a certain point, orders for load testing fell so much that it became obvious to us: we will not be able to double the staff every year without removing the routine and regression tests from the load tester. To solve this problem, we analyzed all our current daily work, and it turned out that services need to be approached with varying degrees of testing depth. We came up with the following scheme of work:

- To test prototypes or experimental builds, we made an alienable version of the load generator and, in order not to distract customers from more prepared projects, began recommending it to developers or system administrators for debugging load tests of their prototype. The results obtained are compatible with the Lunapark framework and there are no situations “I tested my prototype using ab, it gave out 1000 rps, and in Lunapark only 500!”.

- To test one-time or event services, where the load can suddenly grow several times (Sport, EGE, News, Promo Projects), we keep high-speed virtual machines where ready packaged services roll out. Tests are held in manual mode with the removal of remote telemetry from the load object. Sometimes the process is coordinated in special chat rooms where up to 5-10 people can sit: “tankers”, developers, managers. According to our internal statistics, 50% of manual tests (!) Catch various performance problems - from incorrectly constructed indexes, “spikes” on file operations, an insufficient number of workers and so on. The final results are documented in JIRA.

Source: https://habr.com/ru/post/202020/
