How we test search at Yandex: screenshot-based testing of result blocks

The larger and more complex a service becomes, the more time testing takes, so it is entirely natural to want to automate and formalize the process.

Most often, Selenium WebDriver is used to automate the testing of web services. As a rule, functional tests are written with it. But, as everyone is well aware, functional tests cannot solve the problem of testing the layout of a service, which requires additional manual, often cross-browser, checks. How can a test assess the correctness of the layout? In order to detect the regression errors of the layout, the test will need a certain standard, which can be the image of a correct layout, taken, for example, from the production version of the service. This approach is called screenshot-based testing. This approach is rarely used, and most often the layout is still manually tested. The reason for this is a series of fairly stringent requirements for the service, for the test run environment, and for the tests themselves.

The extended answers of Yandex services in the search results - we call them “witches” in our old tradition inside - an additional link in which something can break.

')

Using the example of testing witches in the search, we will describe what features the testing service should have, what problems we have when using screenshot-based testing, and how we solve them.

It takes most of the time allotted to regression testing of desktop search to check witches. It is important to make sure that the witches are correctly displayed in all major browsers (Firefox, Chrome, Opera, IE9 +). Whatever qualitative functional tests we wrote, we did not manage to significantly reduce the regression time. Fortunately, thanks to some features, the witches are quite similar to screenshot-based testing:

For testing to be effective, Selenium Grid should have as many browsers of different versions as possible. The benefit of each test is multiplied by the number of browsers in which it runs. It takes a lot of time and resources to create screenshot-based tests, so you should try to carry them out with the maximum degree of efficiency. Otherwise, the gain in time compared with manual testing may be quite insignificant. For testing automation, we deployed Selenium Grid, which provides thousands of browsers of the types we need.

Another problem that you need to think "on the shore" - the stability of the service as a whole. When a service lives and develops rapidly (design, functionality changes significantly), the fight against this noise level will require support and may not pay off from the upgrade to the upgrade of the service. As noted above, warlockers are fairly stable.

So, we want to test the witches with the help of screenshots, while emulating user actions: click on the active elements, enter text in the input fields, switch tabs and so on. But, besides the witcher himself, there are other elements on the page, including non-static ones: snippets, advertisements, and vertical bars. In the overwhelming majority of cases, beta and search search have visible differences. Hence, the comparison page is completely meaningless. But all these elements do not affect the functionality of the witcher. It was possible to hide individual elements of the page, but since we have too many of them, we decided to hide all the elements of the page, except for the testee, with the help of JavaScript. This has an indirect benefit: the page is “compressed”, the screenshot is taken and transmitted over the network faster, it takes up less memory space. In addition, again with the help of JavaScript, we learned to determine the coordinates of the area in which the sorcerer is directly located, and to make a comparison only of this area.

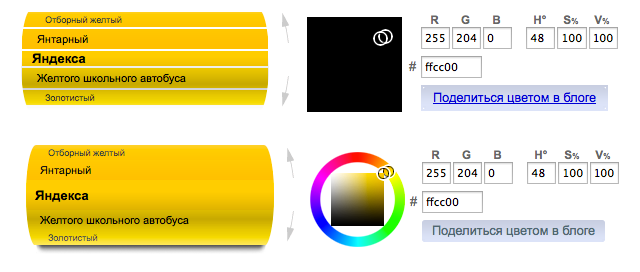

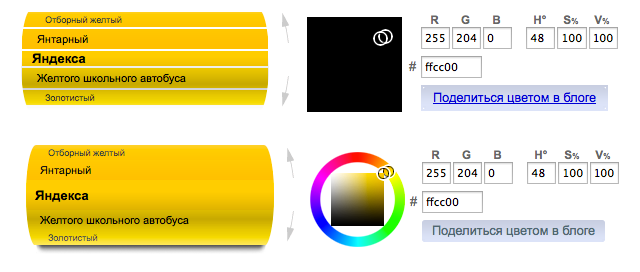

But even the same for the human eye screenshots were distinguished by pixel by pixel comparison. Without going into the reasons for this behavior of browsers, we introduced an experimental threshold for differences in RGB channels, in which the triggering occurs only for differences in the display visible to the human eye.

On the way to full cross-browser testing, many problems were solved, caused primarily by the features of OperaDriver and IEDriver (the description of which is beyond the scope of this article).

But, despite all the efforts, in a significant percentage of cases the tests gave false positives for random reasons: network lag, delays in the execution of JavaScript and AJAX. Although such errors occur with functional tests, in the screenshot-based tests their effect is higher: if the functional test checks element A and the problem occurs in element B, then a false positive may not occur, which is not the case with the screenshot-based test.

Let's give an example. When choosing a different cocktail in the bartender’s bargainer, the drawing of a new recipe does not happen instantly: it takes time to retrieve data over the network using AJAX and in JavaScript redrawing of the barrageer's elements. As a result, on beta, the script did not bring the witcher to the desired state:

Whereas in the production of the script, there were no problems and the witcher looks different:

To eliminate the element of randomness, we restart the tests several times until we are convinced of the stable reproduction of the problem. This leads to another requirement for the Selenium Grid: you must have many browsers of every type. Because only a parallel run will be able to give an acceptable duration of the tests. In our case, more than three hours at a sequential launch turned into 12-15 minutes after parallelization. We also recommend that long scenarios be divided into independent short ones: the probability of random responses will decrease, and it will become easier to analyze the report.

There are also special requirements for this report: when the test returns a lot of screenshots, it is important to present them correctly. Endless clicks on report sub-pages will take almost as much time as a manual service check. There is no universal recipe for the report, we stopped at the following:

The report includes only the expected test outcomes: success, finding differences, inability to execute the script. It is necessary to exclude any test errors that do not allow to come to one of these outcomes.

Comparing the image-states of the witches, we were able to detect various types of bugs (hereafter, the first screenshot is beta, the second is production):

As a bonus, we were able to find changes in translations. The search is presented in Russian, Ukrainian, Belarusian, Kazakh, Tatar, English, Turkish. It is not easy to keep track of the correctness of all versions, and in the screenshots the differences in the texts are immediately visible.

So, screenshot-based testing can be quite useful. But be careful in evaluations: not every service allows you to apply this approach, and your efforts may be wasted. If you manage to find suitable functionality, there is every chance to reduce the time for manual testing.

November 30 in St. Petersburg, we will hold a test environment - its first event specifically for testers. There we will tell you how our testing is arranged, what we have done to automate it, how we work with errors, data and graphs, and much more. Participation is free, but only 100 seats, so you need to have time to register .

Selenium WebDriver is the tool most often used to automate the testing of web services, and it is usually used to write functional tests. But functional tests cannot check a service's layout, which still requires additional manual checks, often across browsers. How can a test judge whether the layout is correct? To detect layout regressions, a test needs a reference, such as an image of the correct layout taken from the production version of the service. This approach is called screenshot-based testing. It is rarely used, and layout is still most often tested manually, because the approach imposes fairly strict requirements on the service, on the test environment, and on the tests themselves.

Rich answers from Yandex services in the search results (by an old internal tradition we call them “witches”) are one more link where something can break.

Using the testing of witches in search as an example, we will describe what screenshot-based testing requires from a service, what problems we ran into with it, and how we solved them.

Testing witches in search

Checking witches takes most of the time allotted to regression testing of desktop search. We have to make sure that witches are displayed correctly in all major browsers (Firefox, Chrome, Opera, IE9+). However good the functional tests we wrote, they did not significantly reduce regression time. Fortunately, several properties make witches well suited to screenshot-based testing:

- A witch is a fairly isolated piece of page functionality and depends little on neighboring elements.

- Most witches are static.

- Witches change relatively rarely, so in most cases the production version of search can serve as the reference.

For testing to be effective, the Selenium Grid should offer as many browsers of different versions as possible: the benefit of each test is multiplied by the number of browsers it runs in. Screenshot-based tests take a lot of time and resources to create, so they should be used as efficiently as possible; otherwise, the time saved compared with manual testing may be negligible. To automate testing, we deployed a Selenium Grid that provides thousands of browsers of the types we need.
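A minimal sketch of why each extra browser multiplies the value of a scenario: every (scenario, browser) pair becomes an independent Grid job. The scenario names, the browser list, and the helper names `build_jobs` and `open_remote_browser` are hypothetical. The session call assumes the Selenium 4 Python API (`webdriver.Remote` with an `options` object), and the Grid URL is a placeholder.

```python
from itertools import product


def build_jobs(scenarios, browsers):
    """Pair every scenario with every browser; each pair is one independent Grid job.

    The number of checks is len(scenarios) * len(browsers), so adding a browser
    multiplies the coverage of every scenario already written.
    """
    return list(product(scenarios, browsers))


def open_remote_browser(grid_url, browser):
    """Open a browser session on a Selenium Grid (Selenium 4 Python API).

    grid_url is an assumption, e.g. "http://grid.example:4444".
    """
    # Imported lazily so job planning works even where selenium is not installed.
    from selenium import webdriver

    option_classes = {
        "firefox": webdriver.FirefoxOptions,
        "chrome": webdriver.ChromeOptions,
    }
    return webdriver.Remote(command_executor=grid_url, options=option_classes[browser]())


# Hypothetical scenarios for two witches, run in two browsers: 4 jobs.
JOBS = build_jobs(["math: type 2+2", "cocktails: switch recipe"], ["firefox", "chrome"])
```

Because the jobs are independent, a Grid with many browsers of each type can run them all at once.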

Another thing to think through in advance is the stability of the service as a whole. When a service evolves rapidly (its design and functionality change significantly), fighting the resulting noise will require constant maintenance, and the tests may not pay off from one release to the next. As noted above, witches are fairly stable.

So, we want to test the witches with the help of screenshots, while emulating user actions: click on the active elements, enter text in the input fields, switch tabs and so on. But, besides the witcher himself, there are other elements on the page, including non-static ones: snippets, advertisements, and vertical bars. In the overwhelming majority of cases, beta and search search have visible differences. Hence, the comparison page is completely meaningless. But all these elements do not affect the functionality of the witcher. It was possible to hide individual elements of the page, but since we have too many of them, we decided to hide all the elements of the page, except for the testee, with the help of JavaScript. This has an indirect benefit: the page is “compressed”, the screenshot is taken and transmitted over the network faster, it takes up less memory space. In addition, again with the help of JavaScript, we learned to determine the coordinates of the area in which the sorcerer is directly located, and to make a comparison only of this area.
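The isolation step can be sketched as follows. This assumes the witch is addressable by a CSS selector and that WebDriver's `execute_script` is available. The JavaScript walks from the block up to `<body>` and sets `display: none` on every sibling along the way. Only the block and its ancestors stay visible, and the page collapses around them. The script then returns the block's page coordinates. The names `ISOLATE_BLOCK_JS`, `isolate_block`, and `crop` are hypothetical. The screenshot is modeled as rows of RGB tuples; decoding the PNG (for example with Pillow) is left out.

```python
# JavaScript for WebDriver's execute_script; arguments[0] is a CSS selector.
ISOLATE_BLOCK_JS = """
var target = document.querySelector(arguments[0]);
if (!target) { return null; }
// Walk up to <body>, hiding every sibling of the block and of its ancestors.
for (var node = target; node && node !== document.body; node = node.parentElement) {
  var parent = node.parentElement;
  if (!parent) { break; }
  for (var i = 0; i < parent.children.length; i++) {
    if (parent.children[i] !== node) { parent.children[i].style.display = 'none'; }
  }
}
// Page coordinates of the block once the page has collapsed around it.
var r = target.getBoundingClientRect();
return {x: Math.round(r.left + window.pageXOffset), y: Math.round(r.top + window.pageYOffset),
        width: Math.round(r.width), height: Math.round(r.height)};
"""


def isolate_block(driver, selector):
    """Hide everything except the block; return its rectangle dict, or None if not found."""
    return driver.execute_script(ISOLATE_BLOCK_JS, selector)


def crop(pixels, rect):
    """Cut the block out of a screenshot given as a list of rows of (r, g, b) tuples.

    Assumes the screenshot starts at the page origin and one CSS pixel equals
    one image pixel (no device-pixel-ratio scaling).
    """
    x, y, w, h = rect["x"], rect["y"], rect["width"], rect["height"]
    return [row[x:x + w] for row in pixels[y:y + h]]
```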

But even screenshots that looked identical to the human eye differed in a pixel-by-pixel comparison. Without going into why browsers behave this way, we introduced an empirically chosen threshold for differences in the RGB channels, so that a difference is reported only when it is visible to the human eye.
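A minimal sketch of the tolerant comparison. It assumes both screenshots are already cropped to the witch and decoded into rows of (r, g, b) tuples. A pixel counts as different only if one of its channels differs by more than the threshold. The article does not give its threshold value, so the 16 below is an arbitrary placeholder to be tuned empirically.

```python
def channel_delta(p, q):
    """Largest absolute difference between corresponding RGB channels of two pixels."""
    return max(abs(a - b) for a, b in zip(p, q))


def differs(beta, prod, threshold=16):
    """Return True if two screenshots differ visibly.

    Channel deviations of at most `threshold` (e.g. rendering noise the eye
    cannot see) are ignored. A change in size is always a difference.
    """
    if len(beta) != len(prod):
        return True
    for row_beta, row_prod in zip(beta, prod):
        if len(row_beta) != len(row_prod):
            return True
        if any(channel_delta(p, q) > threshold for p, q in zip(row_beta, row_prod)):
            return True
    return False
```

The per-pixel maximum over channels is used here so that a strong shift in a single channel is not diluted by the other two.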

On the way to full cross-browser testing, we solved many problems, caused mainly by quirks of OperaDriver and IEDriver (describing them is beyond the scope of this article).

Despite all our efforts, a significant share of test runs gave false positives for random reasons: network lag and delays in JavaScript and AJAX execution. Functional tests suffer from such errors too, but screenshot-based tests are affected more. If a functional test checks element A and the problem occurs in element B, the functional test does not produce a false positive, whereas a screenshot-based test, which captures both elements, does.

Here is an example. When you pick a different cocktail in the bartender witch, the new recipe is not drawn instantly: it takes time to fetch data over the network via AJAX and for JavaScript to redraw the witch's elements. As a result, on beta the script did not bring the witch to the desired state, whereas in production the script ran without problems, so the two screenshots of the witch differed.
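One complementary safeguard, not described in the article and shown here only as an illustration, is to wait until the witch stops changing before taking the reference screenshot. In this sketch, `wait_until_stable` and the `capture` callable are hypothetical. `capture` could wrap any function that returns the current image of the block. The clock and sleep functions are injectable so the logic can be checked without a browser.

```python
import time


def wait_until_stable(capture, timeout=5.0, interval=0.25,
                      clock=time.monotonic, sleep=time.sleep):
    """Capture the witch repeatedly until two consecutive captures are equal.

    `capture` is any callable returning the current screenshot of the block
    (hypothetical; for example, a wrapper around a WebDriver screenshot call).
    Returns the stable capture, or None if the block was still changing at the timeout.
    """
    deadline = clock() + timeout
    previous = capture()
    while clock() < deadline:
        sleep(interval)
        current = capture()
        if current == previous:
            return current
        previous = current
    return None
```

A block that stays in a loading state across two captures still passes this check, so it narrows the problem but does not remove the need for reruns.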

To rule out randomness, we rerun the tests several times until we are sure the problem reproduces consistently. This leads to another requirement for the Selenium Grid: it must have many browsers of every type, because only parallel runs keep the test duration acceptable. In our case, a sequential run of more than three hours shrank to 12-15 minutes after parallelization. We also recommend splitting long scenarios into independent short ones: random failures become less likely, and the report becomes easier to analyze.
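The rerun policy and the parallel run can be sketched with the standard library. The policy below is an assumption, since the text does not say how many reruns were used: a difference is reported only if all three attempts reproduce it. The names `confirmed_difference` and `run_all` are hypothetical, and in a real setup each worker would drive its own Grid browser. For scale, going from more than 180 minutes to 12-15 minutes is a speedup of at least 12x (180 / 15).

```python
from concurrent.futures import ThreadPoolExecutor


def confirmed_difference(check, attempts=3):
    """Rerun a screenshot check; treat a difference as real only if every attempt shows it.

    `check` returns True when beta and production differ. One clean attempt is
    enough to dismiss the difference as random (network lag, slow AJAX).
    """
    for _ in range(attempts):
        if not check():
            return False
    return True


def run_all(checks, workers=8, attempts=3):
    """Run independent checks in parallel, e.g. one Grid browser per worker.

    `checks` maps a scenario name to its check callable; returns name -> verdict.
    """
    names = list(checks)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        verdicts = pool.map(lambda name: confirmed_difference(checks[name], attempts), names)
        return dict(zip(names, verdicts))
```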

The report has special requirements too: when a test returns many screenshots, it is important to present them well. Endless clicking through report sub-pages takes almost as much time as checking the service manually. There is no universal recipe for the report; we settled on the following:

- The report is a single HTML page.

- Witches are grouped into blocks whose contents can be collapsed. Blocks with errors come first.

- Scenarios are shown inside each witch's block. Successful scenarios are collapsed.

- Scenario logs are available, showing which elements were interacted with, so that the problem can be reproduced.

- When you hover the mouse pointer over a screenshot, the beta and production images are shown alternately, so that a person can quickly spot the difference.
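A minimal sketch of a report generator with these properties, using only the Python standard library. The input schema, the names `render_report` and `EXAMPLE_BLOCKS`, and the use of HTML `<details>` elements for collapsing are assumptions, not the team's actual implementation. A CSS `:hover` rule swaps the beta image for the production one, so no JavaScript is needed.

```python
from html import escape

# Beta is shown by default; hovering over the screenshot swaps in production.
CSS = (
    ".shot img.prod{display:none}"
    ".shot:hover img.beta{display:none}"
    ".shot:hover img.prod{display:inline}"
)


def has_errors(scenarios):
    return any(not s["ok"] for s in scenarios)


def render_report(blocks):
    """Render the whole report as one HTML page.

    `blocks` maps a witch name to a list of scenario dicts with the hypothetical
    keys "name", "ok", "log", "beta_png", "prod_png" (image URLs).
    """
    parts = ["<!DOCTYPE html><html><head><meta charset='utf-8'><style>", CSS,
             "</style></head><body>"]
    # Witches with errors first (False sorts before True), then by name.
    for witch in sorted(blocks, key=lambda w: (not has_errors(blocks[w]), w)):
        scenarios = blocks[witch]
        # <details> gives collapsible blocks without any JavaScript.
        parts.append(f"<details{' open' if has_errors(scenarios) else ''}>"
                     f"<summary>{escape(witch)}</summary>")
        for sc in sorted(scenarios, key=lambda item: item["ok"]):
            # Successful scenarios stay collapsed; failed ones are expanded.
            parts.append(f"<details{'' if sc['ok'] else ' open'}>"
                         f"<summary>{escape(sc['name'])}</summary>"
                         f"<pre>{escape(sc['log'])}</pre>")
            if not sc["ok"]:
                parts.append("<span class='shot'>"
                             f"<img class='beta' src='{escape(sc['beta_png'])}'>"
                             f"<img class='prod' src='{escape(sc['prod_png'])}'></span>")
            parts.append("</details>")
        parts.append("</details>")
    parts.append("</body></html>")
    return "".join(parts)


# Hypothetical results for two witches.
EXAMPLE_BLOCKS = {
    "cocktails": [{"name": "switch recipe", "ok": True, "log": "click .recipe-tab",
                   "beta_png": "beta/cocktails.png", "prod_png": "prod/cocktails.png"}],
    "math": [{"name": "type expression", "ok": False, "log": "type <2+2> into input",
              "beta_png": "beta/math.png", "prod_png": "prod/math.png"}],
}
```

Calling `render_report(EXAMPLE_BLOCKS)` puts the failing math witch first and expands it, while the passing cocktails witch stays collapsed.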

The report includes only the expected test outcomes: success, differences found, or failure to execute the scenario. Any test error that prevents a run from reaching one of these outcomes must be eliminated.
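One way to enforce this rule is to reduce every run to one of the three outcomes and treat anything else as a defect of the test rather than a result. In this sketch, the exception class `ScenarioStepError`, the function `classify`, and the retry count are hypothetical. How a real framework separates "the page lacks the element" from "the browser crashed" depends on its own error types.

```python
from enum import Enum


class Outcome(Enum):
    PASSED = "no differences"
    DIFFERENT = "differences found"
    NOT_EXECUTABLE = "scenario could not be executed"


class ScenarioStepError(Exception):
    """A scenario step could not be performed on the page (e.g. a missing input field)."""


def classify(run_scenario, retries=2):
    """Reduce one run to an expected Outcome.

    `run_scenario` returns True if the beta and production screenshots differ.
    Any exception other than ScenarioStepError (Grid timeout, browser crash, a bug in
    the test itself) is not a result: the run is retried, and if the error persists
    it is re-raised so that it never reaches the report as an outcome.
    """
    for attempt in range(retries + 1):
        try:
            differs = run_scenario()
        except ScenarioStepError:
            return Outcome.NOT_EXECUTABLE
        except Exception:
            if attempt == retries:
                raise
            continue
        return Outcome.DIFFERENT if differs else Outcome.PASSED
```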

Examples of bugs found

By comparing the witches' states as images, we were able to detect various types of bugs (here and below, the first screenshot is beta, the second is production):

- Removed text (postal code witch);

- Changed image scaling (event poster witch);

- CSS regression (black frame around the input fields of the math witch);

- Data regression (translation witch).

As a bonus, we were able to catch changes in translations. Search is available in Russian, Ukrainian, Belarusian, Kazakh, Tatar, English, and Turkish. Keeping every version correct is hard, but differences in text are immediately visible in screenshots.

So, screenshot-based testing can be quite useful. But evaluate it carefully: not every service lends itself to this approach, and your efforts may be wasted. If you find suitable functionality, you have every chance of reducing the time spent on manual testing.

On November 30 in St. Petersburg, we will hold Test Environment, our first event specifically for testers. There we will talk about how our testing is organized, what we have done to automate it, how we work with bugs, data, and graphs, and much more. Participation is free, but there are only 100 seats, so be sure to register in time.

Source: https://habr.com/ru/post/200968/
