How Groupon migrated from a monolithic Rails application to a new Node.js infrastructure

I-Tier: dismantling the monolith

We recently completed a year-long project to migrate Groupon's US web traffic from a monolithic Ruby on Rails application to a new Node.js stack, and the results have been significant.

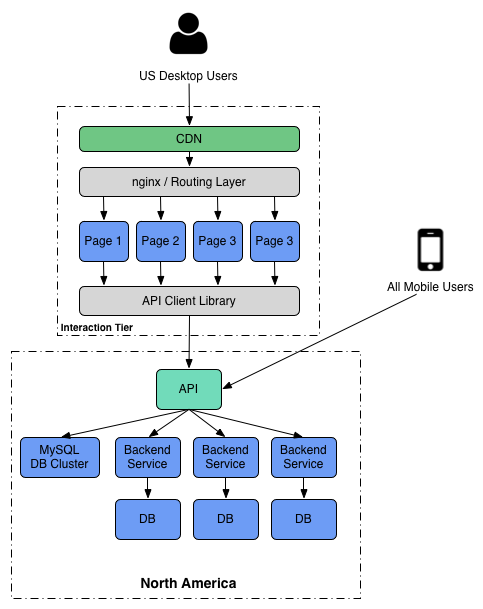

From the very beginning, the entire Groupon US web frontend was a single Ruby codebase. That codebase grew quickly, which made it hard to maintain and slowed down the shipping of new features. To solve the problem of this giant monolith, we decided to re-architect the frontend by splitting it into smaller, independent, and more manageable pieces. At the core of the project, we broke the monolithic website into several independent Node.js applications. We also reworked the infrastructure so that all of these applications work together. The result is the Interaction Tier (I-Tier).

Here are some of the highlights of this global architectural migration:


• Pages on the site load much faster.

• Our development teams can build and ship new features faster and with less dependence on other teams.

• We can avoid re-implementing the same features in every country where Groupon operates.

This post is the first in a series about how we restructured the site and the large benefits we are seeing, which will power Groupon's future growth.

A brief history

Initially, Groupon was a single-page site that showed a daily deal to people in Chicago. A typical deal might be a discount at a local restaurant or tickets to a local concert.

Each deal had a "tipping point": the minimum number of people who had to buy it for the deal to become active. If enough people bought the deal to reach the tipping point, everyone got the discount. Otherwise, nobody did.

The site was originally built as a Ruby on Rails application. Rails was a good choice to start with: for our team of developers, it was one of the easiest ways to get a website up and running quickly. It also made implementing new features easy, which was a huge plus at the time because the feature set was constantly growing.

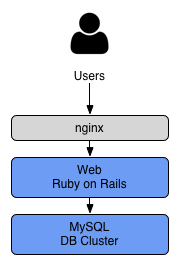

The original Rails architecture was fairly simple:

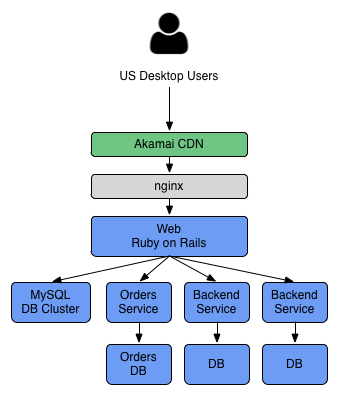

However, we quickly outgrew the ability to serve all of our traffic with a single Rails application backed by a single database cluster. We added more frontend servers and database replicas and put everything behind a CDN, but this only worked until database writes became the bottleneck. Order processing generated a large share of those writes, so we moved that code out of the Rails application into a new service with its own database cluster.
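To see why adding replicas stopped helping, it can help to look at where each query ends up. The following is a minimal, hypothetical TypeScript sketch: the cluster names, table names, and round-robin policy are assumptions for illustration, not Groupon's actual setup. Reads can be spread across any number of replicas, but every write lands on a single primary; moving order processing into its own service with its own cluster takes that write load off the main primary.

```typescript
// Hypothetical illustration only: cluster and table names are invented.
type QueryKind = "read" | "write";

interface Query {
  kind: QueryKind;
  table: string;
}

// Read replicas of the main database (adding more of these scales reads only).
const REPLICAS: string[] = ["main-replica-1", "main-replica-2", "main-replica-3"];

// Tables assumed to be owned by the separate orders service and its own cluster.
const ORDER_TABLES = new Set<string>(["orders", "order_items"]);

let nextReplica = 0;

function chooseCluster(q: Query): string {
  // Order data lives in the orders service's cluster, so order writes
  // no longer compete with everything else on the main primary.
  if (ORDER_TABLES.has(q.table)) {
    return q.kind === "write" ? "orders-primary" : "orders-replica";
  }
  // All other writes still go to the one main primary: this is the
  // bottleneck that extra replicas cannot relieve.
  if (q.kind === "write") {
    return "main-primary";
  }
  // Reads are spread round-robin across the replicas.
  const replica = REPLICAS[nextReplica % REPLICAS.length];
  nextReplica += 1;
  return replica;
}

// Example: a deal-page read and an order write hit different clusters.
console.log(chooseCluster({ kind: "read", table: "deals" }));   // main-replica-1
console.log(chooseCluster({ kind: "write", table: "orders" })); // orders-primary
```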

We kept following this pattern, breaking existing backend functionality out into new services, but everything else on the website (views, controllers, assets, etc.) remained part of the original Rails application.

This architecture change bought us some time, but we knew it would not last long. The codebase was still small enough for our development team of the time to manage, and the new services let us keep the site up during peak traffic.

Globalization

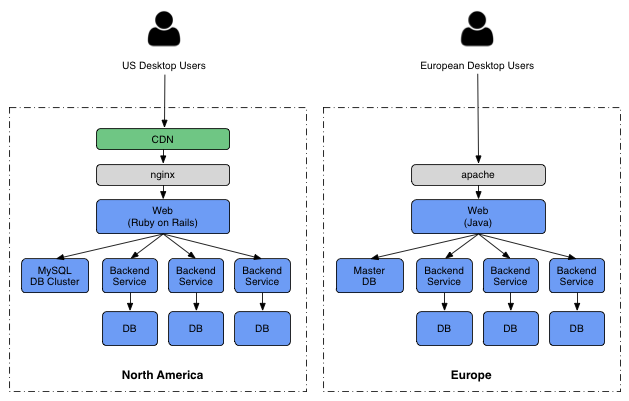

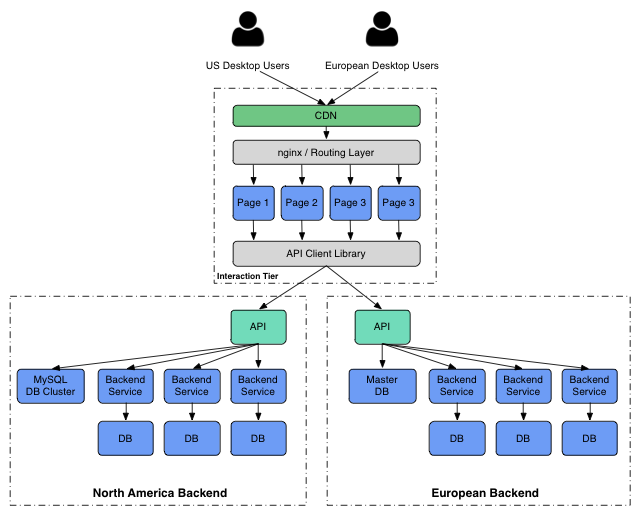

Around this time, Groupon began to expand globally. In a short period, we went from operating only in the United States to operating in 48 countries. Along the way we also acquired several international companies, such as CityDeal. Each acquisition came with its own software stack.

CityDeal's architecture was similar to Groupon's, but it was a completely independent implementation built by a different team. As a result, there were differences in design and technology: Java instead of Ruby, Apache instead of nginx, PostgreSQL instead of MySQL.

As is common in fast-growing companies, we had to choose between slowing down to integrate the different stacks and keeping both systems running, knowing that we were taking on technical debt that would have to be repaid later. Initially, we made a deliberate decision to keep the US and European implementations separate in exchange for growing the business faster. And as more acquisitions followed, each one added more complexity to the architecture.

Mobile applications

We also built mobile clients for iPhone, iPad, Android, and Windows Mobile, and we definitely did not want to build a separate mobile app for each country where Groupon operated. Instead, we built an API layer on top of each of our backend platforms, and our mobile clients called whichever API endpoint corresponded to the user's country.
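As a rough illustration of the per-country endpoint selection, here is a hypothetical TypeScript sketch. The hostnames, the `/deals?city=` path, and the list of countries served by the European platform are all invented, and it assumes both API layers expose the same request shape, so a single app can work everywhere by switching only the host.

```typescript
// Hypothetical sketch: hostnames, paths, and the country list are invented.
const US_API = "https://api-us.example.com";
const EU_API = "https://api-eu.example.com";

// Countries assumed (for this example) to be served by the European platform.
const EU_COUNTRIES = new Set<string>(["DE", "FR", "IT", "ES"]);

// Pick the API layer that sits on top of the backend platform for a country.
function apiBaseUrl(countryCode: string): string {
  const code = countryCode.toUpperCase();
  if (code === "US") return US_API;
  if (EU_COUNTRIES.has(code)) return EU_API;
  throw new Error(`no API platform configured for country ${code}`);
}

// The app builds the same request everywhere; only the host changes.
function dealsUrl(countryCode: string, city: string): string {
  return `${apiBaseUrl(countryCode)}/deals?city=${encodeURIComponent(city)}`;
}

console.log(dealsUrl("us", "chicago")); // https://api-us.example.com/deals?city=chicago
console.log(dealsUrl("DE", "berlin"));  // https://api-eu.example.com/deals?city=berlin
```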

That worked well for our mobile team. They could build a mobile app that worked in all of our countries.

But there was still a snag. Whenever we built a new product or feature, we built it for the web first and only later built an API for it, so that the feature could be used on mobile.

Now that about half of our US business is mobile, we need to think mobile first. Accordingly, we want an architecture in which a single backend can serve both mobile and desktop clients with minimal development effort.

Multiple monoliths

As Groupon kept developing and launching new products, the Ruby frontend codebase kept growing. Too many developers were working in the same codebase. It reached the point where it was difficult for developers to run the application locally. Test suites slowed down, and flaky tests became a real problem. And because it was a single codebase, the whole application had to be deployed at once. When a production bug required a rollback, every team's changes were rolled back, not just the one broken feature. In short, the monolithic codebase caused us a lot of problems.

And we did not have just one such monolith. It was not only the US codebase we had to deal with: our European codebase had many of the same problems. We needed to completely restructure the frontend.

Rewriting everything

Rebuilding the entire frontend is risky. It takes a lot of time and involves a lot of people, and there is a good chance the result will be no better than the old system. Worse, you might give up halfway through, having spent a great deal of effort with nothing to show for it.

But we had had great success in the past when rebuilding smaller parts of our infrastructure. For example, both our mobile website and our client portal had been rebuilt with excellent results. That experience gave us a good starting point, and from it we set the goals for this project.

Goal 1: Unify our frontends

With several software stacks implementing the same features in different countries, we could not move as quickly as we wanted. We needed to eliminate the redundancy in our stack.

Goal 2: Put mobile on par with the web

Since roughly half of our US business had become mobile, we could not afford to build separate desktop and mobile versions. We needed an architecture in which the web was just another client using the same API as our mobile apps.

Goal 3: Make the site faster

Our site was slower than we wanted. While we were rushing to keep up with the site's growth, the US frontend accumulated technical debt that made it hard to optimize. We wanted a solution that did not require a lot of code to serve a request. We wanted something simple.

Goal 4: Allow teams to progress independently

When Groupon first launched, the site was really simple. Since then we have added many new products, with development teams around the world to support them. We wanted each team to be able to build and deploy its features quickly and independently of other teams. We needed to remove the interdependencies between teams that existed only because everything lived in the same codebase.

Approach

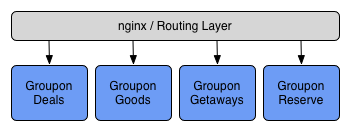

First, we decided to split each major feature of the site into a separate web application.

To make it easy for our teams to build these individual applications, we built a Node.js framework that provides the common functionality every application needs.

Note: why Node.js?

Before building our new frontend layer, we evaluated several different software stacks to see which one would be best for us.

We were looking for a solution to one specific problem: efficiently handling many incoming HTTP requests, making parallel API calls to backend services for each of those requests, and rendering the results as HTML. We also wanted something we could confidently monitor, deploy, and maintain.

We wrote and tested prototypes in several stacks. We will share the specifics in a later post, but in short, we found Node.js to be a very good fit for this particular problem.
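The workload described in this aside (one incoming page request fanning out to several backend API calls, followed by HTML rendering) maps naturally onto Node.js's asynchronous model. Here is a minimal TypeScript sketch of that pattern. The `Deal` and `User` shapes and the injected fetch functions are hypothetical stand-ins for real service clients; this is not Groupon's framework.

```typescript
// Minimal sketch of the fan-out pattern. Data shapes and fetchers are hypothetical.
interface Deal { title: string; price: number }
interface User { name: string }

type Fetcher<T> = () => Promise<T>;

async function handleDealPage(
  fetchDeal: Fetcher<Deal>,
  fetchUser: Fetcher<User>,
): Promise<string> {
  // Both calls start immediately; total latency is roughly that of the
  // slowest call rather than the sum of both.
  const [deal, user] = await Promise.all([fetchDeal(), fetchUser()]);
  return renderDealPage(deal, user);
}

// Escape text before interpolating it into HTML.
function escapeHtml(s: string): string {
  return s
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

function renderDealPage(deal: Deal, user: User): string {
  return `<h1>${escapeHtml(deal.title)}</h1>` +
    `<p>$${deal.price.toFixed(2)}</p>` +
    `<p>Hi, ${escapeHtml(user.name)}</p>`;
}

// Example with stubbed services standing in for real HTTP calls.
handleDealPage(
  () => Promise.resolve({ title: "Pizza & Pasta", price: 25 }),
  () => Promise.resolve({ name: "Ana" }),
).then((html) => console.log(html));
// <h1>Pizza &amp; Pasta</h1><p>$25.00</p><p>Hi, Ana</p>
```

While one request waits on its backend calls, the event loop is free to start work on other incoming requests, which is what makes this style attractive for an I/O-bound frontend tier.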

Approach, continued

Next, we added a routing layer on top that sends each user to the appropriate application based on the page they are visiting.

We built the Groupon routing service (which we call Grout) as an nginx module. This lets us do many interesting things, such as running A/B tests between different implementations of the same application on different servers.
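Grout itself is an nginx module, and its internals are not described here. Purely to illustrate the kind of decision such a routing layer makes, here is a hypothetical TypeScript sketch: it maps a URL path to an application by longest matching prefix and, for one route, deterministically sends a fixed share of users to an alternative implementation for an A/B test. The app names, path prefixes, 10% split, and the use of FNV-1a for hashing are all assumptions.

```typescript
// Hypothetical sketch of a routing decision; not Grout's actual logic or config.
interface Route {
  prefix: string;
  app: string;
  // Optional A/B test: send `fraction` of users to an alternative implementation.
  experiment?: { alternativeApp: string; fraction: number };
}

const ROUTES: Route[] = [
  { prefix: "/deals/", app: "deal-page" },
  { prefix: "/browse", app: "browse", experiment: { alternativeApp: "browse-v2", fraction: 0.1 } },
  { prefix: "/checkout", app: "checkout" },
  { prefix: "/", app: "homepage" },
];

// 32-bit FNV-1a hash, so a given user always lands in the same bucket.
function fnv1a(s: string): number {
  let h = 0x811c9dc5;
  for (let i = 0; i < s.length; i++) {
    h ^= s.charCodeAt(i);
    h = Math.imul(h, 0x01000193) >>> 0;
  }
  return h;
}

function routeRequest(path: string, userId: string): string {
  // Longest matching prefix wins, so "/" acts as the catch-all.
  const matches = ROUTES.filter((r) => path.startsWith(r.prefix))
    .sort((a, b) => b.prefix.length - a.prefix.length);
  const route = matches[0];
  if (!route) throw new Error(`no route for ${path}`);
  if (route.experiment) {
    const bucket = (fnv1a(userId) % 10000) / 10000; // in [0, 1)
    if (bucket < route.experiment.fraction) return route.experiment.alternativeApp;
  }
  return route.app;
}

console.log(routeRequest("/deals/pizza-place", "user-1")); // deal-page
console.log(routeRequest("/about", "user-1"));             // homepage
```

Hashing the user ID, rather than picking a variant at random on every request, keeps each user in the same variant across page views, which keeps their experience consistent and the test results clean.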

To make all of these independent web applications work well together, we built separate services for sharing layouts and styles, maintaining common configuration, and managing A/B test treatments. We will share more details about these services in a later post.

All of this sits in front of our API, and nothing in the frontend layer is allowed to talk directly to a database or backend service. This lets us build a single, unified API layer that serves both our desktop and mobile applications.

We are working on unifying our backend systems, but in the short term we still need to support both our US and European backends. So we designed the frontend to work with both backends at the same time.
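One common way to let a single frontend talk to two backends is an adapter per backend behind a shared interface. The sketch below is hypothetical TypeScript: the response field names for the US and European platforms, and the rule that every non-US country uses the European backend, are invented for illustration. Page code depends only on `DealsBackend`, so when the backends are eventually unified, only the adapters need to change.

```typescript
// Hypothetical sketch: all field names below are invented for illustration.

// The shape the frontend applications work with.
interface Deal {
  id: string;
  title: string;
  priceCents: number;
}

// What every backend adapter must provide.
interface DealsBackend {
  getDeal(id: string): Promise<Deal>;
}

// Raw responses as each platform's API might return them.
interface UsDealResponse { deal_id: string; name: string; price_cents: number }
interface EuDealResponse { id: number; headline: string; price: string } // e.g. "19.90"

class UsBackend implements DealsBackend {
  private readonly fetchRaw: (id: string) => Promise<UsDealResponse>;
  constructor(fetchRaw: (id: string) => Promise<UsDealResponse>) {
    this.fetchRaw = fetchRaw;
  }
  async getDeal(id: string): Promise<Deal> {
    const raw = await this.fetchRaw(id);
    return { id: raw.deal_id, title: raw.name, priceCents: raw.price_cents };
  }
}

class EuBackend implements DealsBackend {
  private readonly fetchRaw: (id: string) => Promise<EuDealResponse>;
  constructor(fetchRaw: (id: string) => Promise<EuDealResponse>) {
    this.fetchRaw = fetchRaw;
  }
  async getDeal(id: string): Promise<Deal> {
    const raw = await this.fetchRaw(id);
    // Normalize the numeric id and the decimal price string to the shared shape.
    return {
      id: String(raw.id),
      title: raw.headline,
      priceCents: Math.round(parseFloat(raw.price) * 100),
    };
  }
}

// Assumption for the example: US traffic uses the US backend, everything else the EU one.
function backendFor(country: string, us: DealsBackend, eu: DealsBackend): DealsBackend {
  return country.toUpperCase() === "US" ? us : eu;
}
```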

Results

We have just finished moving our US frontend from Ruby to the new Node.js infrastructure. The old monolithic frontend was split into about 20 separate web applications, each of which was completely rewritten. We currently serve an average of 50,000 requests per minute, and we expect that traffic to rise during the holiday season. The number will grow much further as we move over traffic from our other countries.

Here are the benefits we have seen so far:

- Pages load faster, typically by about 50%. This is partly due to the change in technology and partly because we had the chance to rewrite all of our web pages with performance in mind. We still expect significant further gains here as we make additional changes.

- We serve the same amount of traffic with less hardware than the old stack needed.

- Teams can deploy changes to their applications independently.

- We can ship changes to an individual feature much faster than we could with the old architecture.

Overall, this migration lets our development teams ship pages faster and with fewer interdependencies, and it removed some of the performance limitations of our old platform. We have many more improvements planned and will share the details in upcoming posts.

[Original article at engineering.groupon.com]

Source: https://habr.com/ru/post/200906/
