Building a motion detector, or OpenCV made simple

We have to live up to the company name and do at least something related to video. As you may have gathered from the previous post, we are not only building the kettle but also working on "smart lighting" for a smart home. This week I was busy poking at OpenCV, a library of algorithms for computer vision: finding objects in images, recognizing characters, and all that jazz.

In fact, getting something done with it is not that hard, even for a non-programmer. So let me show you how.

Fair warning: this article may contain truly terrible code (bydlokod, as we call it), which may scare you or cost you sleep for the rest of your life.

If that has not scared you off, read on.

Introduction

So, what was the idea? I wanted to get rid of switching lights on by hand altogether. We have an apartment, and there are people who move around in it. People need light. Everything else in the apartment does not. Objects do not move, but people do (if a person is not moving, they are either dead or asleep, and neither the dead nor the sleeping need light). So only the places in the apartment where something is moving need to be lit. Once movement stops, the light can be switched off half an hour later.
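The rule "light follows movement, then goes off after half an hour" can be sketched in a few lines of C. This is only an illustration: the `zone_t` structure, the function names and the `LIGHT_OFF_DELAY_SEC` constant are hypothetical and are not taken from the project source.

```c
#include <stdbool.h>
#include <time.h>

/* Assumed value: the half-hour delay mentioned above, in seconds. */
#define LIGHT_OFF_DELAY_SEC (30 * 60)

/* Hypothetical per-zone state; not part of the original project. */
typedef struct {
    time_t last_motion;   /* time of the most recent detected movement */
    bool   seen_motion;   /* false until the first movement is reported */
} zone_t;

/* Record that movement was detected in the zone at time `now`. */
void zone_report_motion(zone_t *zone, time_t now)
{
    zone->last_motion = now;
    zone->seen_motion = true;
}

/* The light stays on while the last movement is less than the delay ago. */
bool zone_light_on(const zone_t *zone, time_t now)
{
    if (!zone->seen_motion)
        return false;
    return difftime(now, zone->last_motion) < LIGHT_OFF_DELAY_SEC;
}
```

One such structure per lit area is enough: whatever detects the movement calls `zone_report_motion`, and a periodic check of `zone_light_on` decides whether the lamp should be on.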

So how do we detect movement?

About sensors

You can detect it with sensors like these:

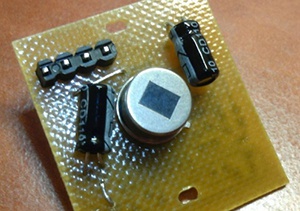

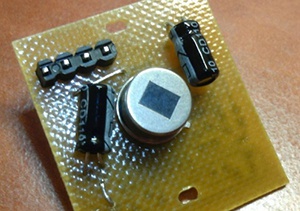

They are called PIR sensors, for Passive Infrared (or, by another reading, pyroelectric rather than passive). In short, each one is built around what is essentially a single pixel of a thermal imager: a cell that produces a signal when far-infrared radiation hits it.

A simple circuit behind it outputs a pulse only when the signal changes sharply, so it does not react to a hot kettle but does react to a warm object that moves.
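The "react to change, not to level" idea can be shown as a software analogy. The sketch below is not how the analog circuit in a real sensor is built; the `change_detector_t` type, the threshold and the units are all assumptions made for illustration.

```c
#include <stdbool.h>
#include <stdlib.h>

/* Hypothetical detector state: the previous sample and an assumed threshold. */
typedef struct {
    int  previous;    /* last sensor reading, arbitrary units */
    int  threshold;   /* minimum jump between readings that counts as motion */
    bool primed;      /* false until the first reading has been stored */
} change_detector_t;

/* Returns true only when the reading jumps by at least `threshold`
   relative to the previous one; a constant level never triggers. */
bool change_detector_update(change_detector_t *d, int reading)
{
    bool triggered = d->primed && abs(reading - d->previous) >= d->threshold;
    d->previous = reading;
    d->primed = true;
    return triggered;
}
```

A steadily hot kettle produces a high but constant reading, so consecutive samples differ by less than the threshold and nothing fires; a warm body entering the field of view produces a jump.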

Such detectors are installed in 99% of alarm systems, and I think you have all seen them: they are the things that hang from the ceiling.

The same sensors, with more elaborate supporting circuitry, are used in contactless thermometers, the kind that measure temperature in a couple of seconds at the forehead or in the ear.

They are also used in pyrometers, which are the same kind of thermometer but with a wider range.

But I digress. Such sensors are a good thing, of course. They have one drawback, though: they report movement anywhere in the observed volume without telling you where it happened, near or far. And I have a big room, and I want to turn on the light only in the part where someone is working. I could, of course, have installed five such sensors, but I dropped that idea: if one camera does the job for about the same money, why install a bunch of sensors?

')

Well, I wanted to scratch OpenCV, not without it, yes. So I found a camera in the bins, picked up a single-board ( CubieBoard2 on the A20) and drove off.

Installation

Naturally, to use OpenCV, you must first install. In most modern systems (I’m talking about * nix), it is installed using a single command like apt-get install opencv. But we'll go the easy way, right? Yes, and for example in the system for single-board, which I use it is not.

A comprehensive installation guide can be found here , so I will not dwell on it in great detail.

We put cmake and GTK (here I’ve put it with a clear conscience apt-get install cmake libgtk2.0-dev).

Go to the offsite and download the latest version. But if we go to SourceForge using the link from the manual for Robocraft, then we will download not the latest version (2.4.6.1), but 2.4.6, in which image reception from the camera via v4l2 does not work completely unexpectedly. I did not know this, so for 4 days I tried to make this version work. If only they wrote somewhere.

Further - standard:

You can collect examples that come with:

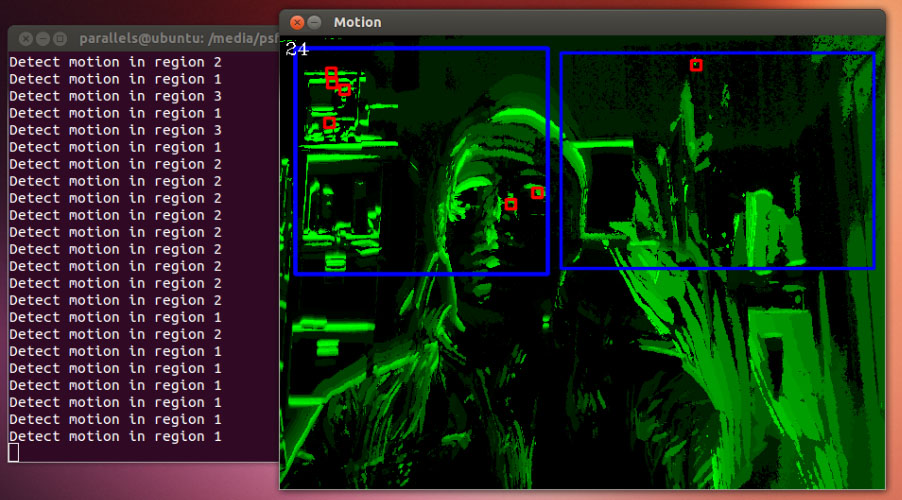

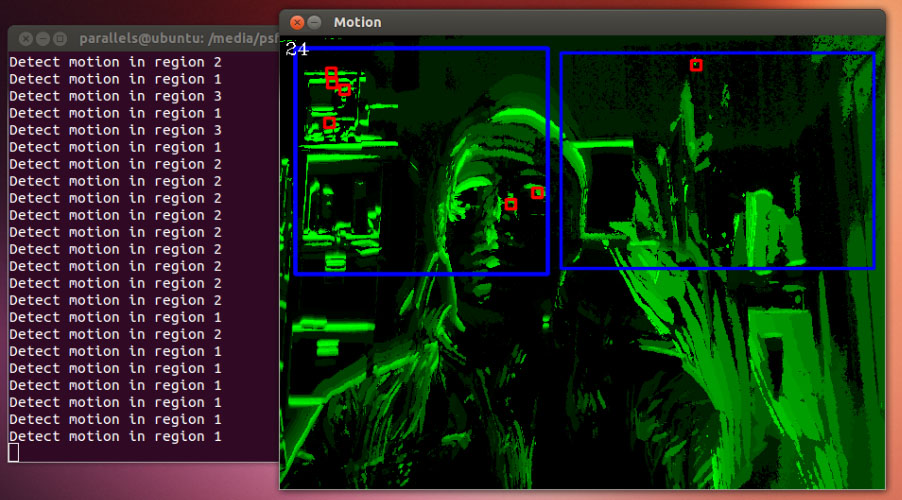

Actually, most of my code is taken from an example called motempl - this is exactly the program that implements the functionality of motion detection in a frame. It looks like this:

Dopilka

It works, but how to apply it to turn on the light? It shows movement on the screen, but we need to know about this controller, which controls the lighting. And it is desirable that he learn not the coordinates of the point, but the place where the light should be turned on.

For a start, let's understand a little how this thing works. To show the video from the camera in the window, much is not required:

You can copy this program into the test.c file and compile it like this:

It starts up and shows you the video from the camera. But you can't even get out of it - the program is stuck in an endless loop and only Ctrl + C interrupts its meaningless life. Add a button handler:

And the FPS counter:

Now we have a program that shows video from the camera. We need to somehow indicate to her those parts of the screen in which we need to define movement. Do not pens them in pixels to set.

Here's how it works:

Regions are switched by number buttons.

But we will not, every time we start the program, set monitoring regions manually? Let's save to file.

We bind these functions, for example, on the w and r buttons, and when pressed, we save and open the array.

There is only a small amount left - actually, the definition in which region the movement occurred. We transfer our achievements to the motempl.s source, and find where we can go.

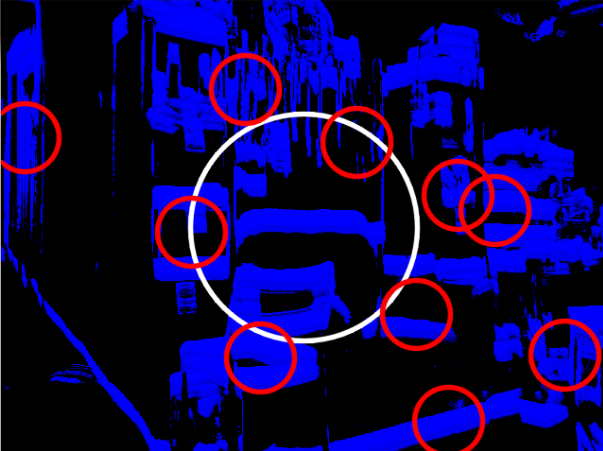

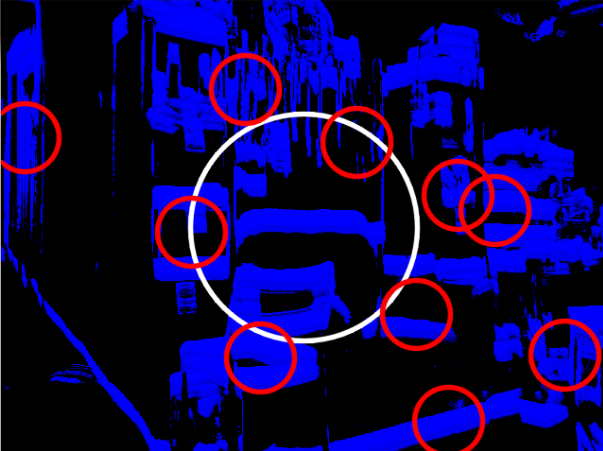

Here is the function that draws circles at the location of motion detection:

And the coordinates of the center are determined like this:

We paste our code into this piece:

Works:

It remains a little: send the output not to the console, but to the UART, connect to any MK relays that will control the light. The program detects movement in the region, sends the region number to the controller, and the latter lights the lamp assigned to it. But about this - in the next series.

I put the project source on github, and I will not mind if someone finds time to correct errors and improve the program:

github.com/vvzvlad/motion-sensor-opencv

I remind you, if you do not want to miss the epic with a kettle and want to see all the new posts of our company, you can subscribe to on the company page (button "subscribe")

on the company page (button "subscribe")

And yes, I again wrote a post at 5 am, so I will accept error messages. But - in a personal.

In fact, doing something in it is not such a difficult task, even for a non-programmer. So I'll tell you how.

Immediately I say: the article can meet the most terrible bydlokod, which can scare you or deprive you of sleep until the end of life.

If you are not afraid yet - then welcome further.

Introduction

Actually, what was the idea. I wanted to completely get rid of the manual inclusion of light. We have an apartment, and there are people who move along it. People need light. All other objects in the apartment do not need light. Objects do not move, but people move (if a person does not move - he either died or sleeps. The dead and the sleeping also do not need light). Accordingly, it is necessary to cover only those places in the apartment, where there is some movement. Movement stopped - you can turn off the light in half an hour.How to determine the movement?

About sensors

Here you can define such detectors:

They call it PIR - Passive Infrared Sensor. Or not passive, but pyroelectric. In short, it is based on, in fact, a single pixel of the imager - the same cell that issues a signal if the far infrared hits it.

A simple scheme after it gives a pulse only if the signal changes dramatically - so it will not beep to the hot kettle, but there will be a moving warm object.

Such detectors are installed in 99% of the alarms, and you think them all, saw them - these are the things that hang from the ceiling:

Still the same things, but with strapping are more difficult to stand in contactless thermometers - those that measure the temperature in a couple of seconds on the forehead or in the ear.

And in the pyrometers, the same thermometers, but with a large range:

Although I was distracted. Such sensors, of course, a good thing. But they have a minus - it shows the movement in the whole volume of observation, without specifying where it happened - close, far. And I have a big room. And I want to turn on the light only in the part where the person works. It was possible, of course, to put 5 such sensors, but I abandoned this idea - if you can get by with one camera for about the same amount, why put a bunch of sensors?

')

And yes, I admit it, I also simply wanted to poke at OpenCV. So I dug a camera out of the parts bin, grabbed a single-board computer (a CubieBoard2 on the A20) and off I went.

Installation

Naturally, to use OpenCV you first have to install it. On most modern systems (I am talking about *nix) it is installed with a single command, something like `apt-get install opencv`. But we are not looking for the easy way, are we? Besides, the system on the single-board computer I use, for example, does not have such a package.

A comprehensive installation guide already exists, so I will not go into great detail.

Install cmake and GTK (these I installed with a clear conscience via `apt-get install cmake libgtk2.0-dev`).

Go to the official site and download the latest version. Note that if you follow the SourceForge link from the Robocraft manual, you get not the latest version (2.4.6.1) but 2.4.6, in which, quite unexpectedly, capturing images from the camera via v4l2 does not work. I did not know this, so I spent four days trying to make that version work. If only someone had written that down somewhere.

From here on it is standard:

```sh
tar -xjf OpenCV-*.tar.bz2 && cd OpenCV-* && cmake -D CMAKE_BUILD_TYPE=RELEASE -D CMAKE_INSTALL_PREFIX=/usr/local ./ && make && make install
```

You can also build the examples that ship with it:

```sh
cd samples/c/ && chmod +x build_all.sh && ./build_all.sh
```

Most of my code is taken from the example called motempl, which is exactly the program that implements motion detection in a frame.

Tweaking

It works, but how do we use it to turn on the light? It shows movement on the screen, but what we need is for the controller that drives the lighting to know about it. And preferably it should learn not the coordinates of a point but the place where the light should be turned on.

To start with, let's get a rough idea of how this thing works. Showing video from the camera in a window does not take much:

```c
#include <cv.h>
#include <highgui.h>
#include <stdlib.h>
#include <stdio.h>

int main(int argc, char* argv[])
{
    CvCapture* capture = cvCaptureFromCAM(0);  // open camera 0; capture is the handle to it
    IplImage* image = cvQueryFrame(capture);   // grab the first frame into image
    cvNamedWindow("image window", 1);          // create a window named "image window"
    for (;;)                                   // endless loop
    {
        image = cvQueryFrame(capture);         // grab the next frame into image
        cvShowImage("image window", image);    // show the frame in the window
        cvWaitKey(10);                         // wait 10 ms; the window is only redrawn while this call runs
    }
}
```

You can copy this program into a file named test.c and compile it like this:

```sh
gcc -ggdb `pkg-config --cflags opencv` -o `basename test.c .c` test.c `pkg-config --libs opencv`
```

Again, to be honest, I do not fully understand what this command does. It builds the program, fine. But why exactly like this?

The program starts and shows you video from the camera. But you cannot even exit it: it is stuck in an endless loop, and only Ctrl+C ends its meaningless life. Let's add a key handler:

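```c
char c = cvWaitKey(10);               // wait up to 10 ms for a key press
if (c == 113 || c == 81)              // 113 and 81 are the codes of "q" and "Q"
{
    cvReleaseCapture(&capture);       // release the camera
    cvDestroyWindow("image window");  // close the window (use the same name it was created with)
    return 0;                         // exit the program
}
```

And an FPS counter: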

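```c
CvFont font;                 // font used to draw the text
cvInitFont(&font, CV_FONT_HERSHEY_COMPLEX_SMALL, 1.0, 1.0, 1, 1, 8);  // face, horizontal and vertical scale, shear, thickness, line type
struct timeval tv0;          // current time; gettimeofday needs <sys/time.h>
int fps = 0;                 // frames counted in the current second
int fps_sec = 0;             // the second being counted
char fps_text[16] = "0";     // text to draw; large enough for any int
int now_sec = 0;
// ...
gettimeofday(&tv0, 0);       // read the current time
now_sec = tv0.tv_sec;        // keep only the seconds
if (fps_sec == now_sec)      // still within the same second
{
    fps++;                   // count one more frame
}
else
{
    fps_sec = now_sec;       // a new second has started
    snprintf(fps_text, sizeof(fps_text), "%d", fps);  // turn the count into text
    fps = 0;                 // start counting again
}
cvPutText(image, fps_text, cvPoint(5, 20), &font, CV_RGB(255,255,255));  // draw the text in white at (5, 20) on the frame
```

Full text of the program: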

```c
#include "opencv2/video/tracking.hpp"
#include "opencv2/highgui/highgui.hpp"
#include "opencv2/imgproc/imgproc_c.h"
#include <time.h>
#include <sys/time.h>   // gettimeofday
#include <stdio.h>
#include <ctype.h>
#include <sys/types.h>
#include <sys/stat.h>
#include <fcntl.h>
#include <string.h>
#include <errno.h>

int main(int argc, char** argv)
{
    IplImage* image = 0;
    CvCapture* capture = 0;

    struct timeval tv0;
    int fps = 0;
    int fps_sec = 0;
    int now_sec = 0;
    char fps_text[16] = "0";   // was char[2] with a 254-byte snprintf limit, which overflows

    CvFont font;
    cvInitFont(&font, CV_FONT_HERSHEY_COMPLEX_SMALL, 1.0, 1.0, 1, 1, 8);

    capture = cvCaptureFromCAM(0);
    cvNamedWindow("Motion", 1);

    for (;;)
    {
        image = cvQueryFrame(capture);
        if (!image)                      // no frame (camera missing or unplugged)
            break;

        gettimeofday(&tv0, 0);
        now_sec = tv0.tv_sec;
        if (fps_sec == now_sec)
        {
            fps++;
        }
        else
        {
            fps_sec = now_sec;
            snprintf(fps_text, sizeof(fps_text), "%d", fps);
            fps = 0;
        }

        cvPutText(image, fps_text, cvPoint(5, 20), &font, CV_RGB(255,255,255));
        cvShowImage("Motion", image);

        if (cvWaitKey(10) >= 0)          // any key exits
            break;
    }

    // The frame returned by cvQueryFrame belongs to the capture, so it is not released here.
    cvReleaseCapture(&capture);
    cvDestroyWindow("Motion");
    return 0;
}
```

Now we have a program that shows video from the camera. We need some way to tell it which parts of the frame to watch for movement. Surely we are not going to type them in pixel by pixel by hand.

```c
int dig_key = 0;                 // number of the currently selected region
int region_coordinates[10][4];   // ten regions, each stored as x1, y1, x2, y2
// ...
char c = cvWaitKey(20);          // wait up to 20 ms for a key press
if (c <= 57 && c >= 48)          // codes 48..57 are the digit keys
{
    dig_key = c - 48;            //key "0123456789": select the region with that number
}

cvSetMouseCallback("Motion", myMouseCallback, (void*) image);  // call myMouseCallback on mouse events in the Motion window, passing it image

// Only the first corner is set: mark it with a dot.
if (region_coordinates[dig_key][0] != 0 && region_coordinates[dig_key][1] != 0 &&
    region_coordinates[dig_key][2] == 0 && region_coordinates[dig_key][3] == 0)
    cvRectangle(image,
                cvPoint(region_coordinates[dig_key][0], region_coordinates[dig_key][1]),
                cvPoint(region_coordinates[dig_key][0]+1, region_coordinates[dig_key][1]+1),
                CV_RGB(0,0,255), 2, CV_AA, 0);

// Both corners are set: draw the rectangle.
if (region_coordinates[dig_key][0] != 0 && region_coordinates[dig_key][1] != 0 &&
    region_coordinates[dig_key][2] != 0 && region_coordinates[dig_key][3] != 0)
    cvRectangle(image,
                cvPoint(region_coordinates[dig_key][0], region_coordinates[dig_key][1]),
                cvPoint(region_coordinates[dig_key][2], region_coordinates[dig_key][3]),
                CV_RGB(0,0,255), 2, CV_AA, 0);

void myMouseCallback(int event, int x, int y, int flags, void* param)  // called on every mouse event
{
    IplImage* img = (IplImage*) param;   // the image passed in; not used yet
    switch (event) {
        case CV_EVENT_MOUSEMOVE:
            break;   // nothing to do; for debugging you can print the position: printf("%dx %d\n", x, y);

        case CV_EVENT_LBUTTONDOWN:
            // The first corner is already set and the second is not: this click is the second corner.
            if (region_coordinates[dig_key][0] != 0 && region_coordinates[dig_key][1] != 0 &&
                region_coordinates[dig_key][2] == 0 && region_coordinates[dig_key][3] == 0)
            {
                region_coordinates[dig_key][2] = x;   // dig_key is the selected region
                region_coordinates[dig_key][3] = y;
            }
            // Nothing is set yet: this click is the first corner.
            if (region_coordinates[dig_key][0] == 0 && region_coordinates[dig_key][1] == 0)
            {
                region_coordinates[dig_key][0] = x;
                region_coordinates[dig_key][1] = y;
            }
            break;
    }
}
```

Regions are selected with the number keys: press a digit, then click the two corners of that region.
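One caveat with two-click selection: the first click is stored as x1, y1 and the second as x2, y2, so any later test of the form x1 <= x <= x2 silently assumes the first click was the top-left corner. A possible way around that, sketched here with a hypothetical helper `normalize_region` that is not in the project source, is to sort the corners once the second click arrives:

```c
/* Reorders a region stored as x1, y1, x2, y2 so that (x1, y1) is the
   top-left corner and (x2, y2) the bottom-right one. */
void normalize_region(int region[4])
{
    if (region[0] > region[2]) {   /* swap x1 and x2 */
        int tmp = region[0];
        region[0] = region[2];
        region[2] = tmp;
    }
    if (region[1] > region[3]) {   /* swap y1 and y2 */
        int tmp = region[1];
        region[1] = region[3];
        region[3] = tmp;
    }
}
```

Calling `normalize_region(region_coordinates[dig_key])` right after the second corner is stored would be one place to use it.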

Full text of the program:

```c
#include "opencv2/video/tracking.hpp"
#include "opencv2/highgui/highgui.hpp"
#include "opencv2/imgproc/imgproc_c.h"
#include <time.h>
#include <sys/time.h>   // gettimeofday
#include <stdio.h>
#include <ctype.h>
#include <sys/types.h>
#include <sys/stat.h>
#include <fcntl.h>
#include <string.h>
#include <errno.h>

int dig_key = 0;                 // currently selected region
int region_coordinates[10][4];   // x1, y1, x2, y2 for each region

void myMouseCallback(int event, int x, int y, int flags, void* param)
{
    IplImage* img = (IplImage*) param;
    switch (event) {
        case CV_EVENT_MOUSEMOVE:
            //printf("%dx %d\n", x, y);
            break;

        case CV_EVENT_LBUTTONDOWN:
            //printf("%dx %d\n", region_coordinates[dig_key][0], region_coordinates[dig_key][1]);
            if (region_coordinates[dig_key][0] != 0 && region_coordinates[dig_key][1] != 0 &&
                region_coordinates[dig_key][2] == 0 && region_coordinates[dig_key][3] == 0)
            {
                region_coordinates[dig_key][2] = x;
                region_coordinates[dig_key][3] = y;
            }
            if (region_coordinates[dig_key][0] == 0 && region_coordinates[dig_key][1] == 0)
            {
                region_coordinates[dig_key][0] = x;
                region_coordinates[dig_key][1] = y;
            }
            break;

        case CV_EVENT_RBUTTONDOWN:
            break;

        case CV_EVENT_LBUTTONUP:
            break;
    }
}

int main(int argc, char** argv)
{
    IplImage* image = 0;
    CvCapture* capture = 0;

    struct timeval tv0;
    int fps = 0;
    int fps_sec = 0;
    int now_sec = 0;
    char fps_text[16] = "0";   // was char[2] with a 254-byte snprintf limit, which overflows

    CvFont font;
    cvInitFont(&font, CV_FONT_HERSHEY_COMPLEX_SMALL, 1.0, 1.0, 1, 1, 8);

    capture = cvCaptureFromCAM(0);
    cvNamedWindow("Motion", 1);

    for (;;)
    {
        image = cvQueryFrame(capture);
        if (!image)                      // no frame (camera missing or unplugged)
            break;

        gettimeofday(&tv0, 0);
        now_sec = tv0.tv_sec;
        if (fps_sec == now_sec)
        {
            fps++;
        }
        else
        {
            fps_sec = now_sec;
            snprintf(fps_text, sizeof(fps_text), "%d", fps);
            fps = 0;
        }

        cvSetMouseCallback("Motion", myMouseCallback, (void*) image);

        if (region_coordinates[dig_key][0] != 0 && region_coordinates[dig_key][1] != 0 &&
            region_coordinates[dig_key][2] == 0 && region_coordinates[dig_key][3] == 0)
            cvRectangle(image,
                        cvPoint(region_coordinates[dig_key][0], region_coordinates[dig_key][1]),
                        cvPoint(region_coordinates[dig_key][0]+1, region_coordinates[dig_key][1]+1),
                        CV_RGB(0,0,255), 2, CV_AA, 0);

        if (region_coordinates[dig_key][0] != 0 && region_coordinates[dig_key][1] != 0 &&
            region_coordinates[dig_key][2] != 0 && region_coordinates[dig_key][3] != 0)
            cvRectangle(image,
                        cvPoint(region_coordinates[dig_key][0], region_coordinates[dig_key][1]),
                        cvPoint(region_coordinates[dig_key][2], region_coordinates[dig_key][3]),
                        CV_RGB(0,0,255), 2, CV_AA, 0);

        cvPutText(image, fps_text, cvPoint(5, 20), &font, CV_RGB(255,255,255));
        cvShowImage("Motion", image);

        char c = cvWaitKey(20);
        if (c <= 57 && c >= 48)
        {
            dig_key = c - 48;   //key "0123456789"
        }
        if (c == 113 || c == 81)   // "q" or "Q": leave the loop so the cleanup below actually runs
            break;
    }

    // The frame returned by cvQueryFrame belongs to the capture, so it is not released here.
    cvReleaseCapture(&capture);
    cvDestroyWindow("Motion");
    return 0;
}
```

But surely we are not going to set up the monitored regions by hand every time the program starts? Let's save them to a file.

Reading:

```c
FILE* fd = fopen("regions.bin", "rb");   // open the file; "rb" means read, binary
if (fd == NULL)
{
    printf("Error opening file for reading\n");   // most likely the file does not exist yet
    fd = fopen("regions.bin", "wb");               // so create it
    if (fd == NULL)
    {
        printf("Error opening file for writing\n");
    }
    else
    {
        fwrite(region_coordinates, 1, sizeof(region_coordinates), fd);   // write the (still empty) array
        fclose(fd);
        printf("File created, please restart program\n");
    }
    return 0;
}
size_t result = fread(region_coordinates, 1, sizeof(region_coordinates), fd);   // read the whole array
if (result != sizeof(region_coordinates))   // fewer bytes than expected
    printf("Error size file\n");
fclose(fd);
```

Writing:

```c
FILE* fd = fopen("regions.bin", "wb");   // open the file; "wb" means write, binary
if (fd == NULL)
{
    printf("Error opening file for writing\n");
}
else   // write only if the file was actually opened
{
    fwrite(region_coordinates, 1, sizeof(region_coordinates), fd);   // write the whole array
    fclose(fd);
}
```

Bind these two pieces to keys, say w and r, so that pressing them saves and loads the array.
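For binding to keys it may be more convenient to wrap the two fragments into functions that report success or failure instead of printing and exiting. The sketch below is one possible shape; the names `save_regions` and `load_regions` and the `REGION_COUNT` constant are my own and do not come from the project source.

```c
#include <stdio.h>
#include <string.h>

#define REGION_COUNT 10   /* same ten regions as region_coordinates[10][4] */

/* Writes the whole table to `path`. Returns 0 on success, -1 on failure. */
int save_regions(const char *path, int regions[REGION_COUNT][4])
{
    FILE *fd = fopen(path, "wb");
    if (fd == NULL)
        return -1;
    size_t written = fwrite(regions, sizeof(regions[0]), REGION_COUNT, fd);
    if (fclose(fd) != 0 || written != REGION_COUNT)
        return -1;
    return 0;
}

/* Reads the whole table from `path`. On any failure the caller's table
   is left untouched and -1 is returned. */
int load_regions(const char *path, int regions[REGION_COUNT][4])
{
    int tmp[REGION_COUNT][4];
    FILE *fd = fopen(path, "rb");
    if (fd == NULL)
        return -1;
    size_t got = fread(tmp, sizeof(tmp[0]), REGION_COUNT, fd);
    fclose(fd);
    if (got != REGION_COUNT)
        return -1;
    memcpy(regions, tmp, sizeof(tmp));
    return 0;
}
```

With these, the key handler reduces to calls such as `save_regions("regions.bin", region_coordinates)`. Keep in mind that a raw dump of an `int` array is only readable on a machine with the same `int` size and byte order.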

Only a little remains: actually working out in which region the movement occurred. We move what we have built into the motempl.c source and look for a place to hook in.

Here is the call that draws a circle where motion was detected:

```c
cvCircle( dst, center, cvRound(magnitude*1.2), color, 3, CV_AA, 0 );
```

And the coordinates of its center are computed like this:

```c
center = cvPoint( (comp_rect.x + comp_rect.width/2), (comp_rect.y + comp_rect.height/2) );
```

We insert our code right at this point:

```c
int i_mass;                               // region index
for (i_mass = 0; i_mass <= 9; i_mass++)   // go through all ten regions
{
    // Is the center of the moving component inside this region?
    if (comp_rect.x + comp_rect.width/2  <= region_coordinates[i_mass][2] &&
        comp_rect.x + comp_rect.width/2  >= region_coordinates[i_mass][0] &&
        comp_rect.y + comp_rect.height/2 <= region_coordinates[i_mass][3] &&
        comp_rect.y + comp_rect.height/2 >= region_coordinates[i_mass][1])
    {
        // Outline the region in which motion was detected.
        cvRectangle(dst,
                    cvPoint(region_coordinates[i_mass][0], region_coordinates[i_mass][1]),
                    cvPoint(region_coordinates[i_mass][2], region_coordinates[i_mass][3]),
                    CV_RGB(0,0,255), 2, CV_AA, 0);
        printf("Detect motion in region %d\n", i_mass);   // report the region number
    }
}
```

It works.
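The same test can be pulled out of the OpenCV code into a plain function, which makes it easy to check without a camera. This is a sketch with a hypothetical name, `find_region`, and it differs from the loop above in two labeled ways: it skips regions that are still all zeros, and it returns only the first matching region instead of reporting every match.

```c
#define REGION_COUNT 10   /* same ten regions as region_coordinates[10][4] */

/* Returns the index of the first region containing the point (x, y),
   or -1 if no region contains it. Regions are stored as x1, y1, x2, y2
   with (x1, y1) assumed to be the top-left corner. */
int find_region(int regions[REGION_COUNT][4], int x, int y)
{
    for (int i = 0; i < REGION_COUNT; i++) {
        const int *r = regions[i];
        /* An all-zero entry means the region was never set; skip it. */
        if (r[0] == 0 && r[1] == 0 && r[2] == 0 && r[3] == 0)
            continue;
        if (x >= r[0] && x <= r[2] && y >= r[1] && y <= r[3])
            return i;
    }
    return -1;
}
```

In the motempl loop it would be called with the center of the component, for example `find_region(region_coordinates, center.x, center.y)`.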

Not much is left: send the output to a UART instead of the console, and hook relays that switch the lights up to any microcontroller. The program detects movement in a region and sends the region number to the controller, which turns on the lamp assigned to it. But more on that in the next installment.
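Until then, here is a rough idea of what the sending side could look like on Linux using the POSIX termios interface. Everything specific in it is an assumption rather than the project's actual design: the 9600 baud 8N1 settings, the one-digit-plus-newline message format, and the function names `open_uart` and `send_region`.

```c
#include <fcntl.h>
#include <termios.h>
#include <unistd.h>

/* Opens a serial device for writing in raw 8N1 mode at 9600 baud
   (assumed settings). Returns a file descriptor, or -1 on failure. */
int open_uart(const char *device)
{
    int fd = open(device, O_WRONLY | O_NOCTTY);
    if (fd < 0)
        return -1;

    struct termios tio;
    if (tcgetattr(fd, &tio) != 0) {   /* also fails if this is not a terminal */
        close(fd);
        return -1;
    }
    tio.c_iflag = 0;                      /* no input processing */
    tio.c_oflag = 0;                      /* no output processing */
    tio.c_lflag = 0;                      /* no echo, no line editing */
    tio.c_cflag = CS8 | CREAD | CLOCAL;   /* 8 data bits, ignore modem lines */
    cfsetispeed(&tio, B9600);
    cfsetospeed(&tio, B9600);
    if (tcsetattr(fd, TCSANOW, &tio) != 0) {
        close(fd);
        return -1;
    }
    return fd;
}

/* Sends a region number 0..9 as one ASCII digit followed by a newline
   (assumed protocol). Returns 0 on success, -1 on failure. */
int send_region(int fd, int region)
{
    if (region < 0 || region > 9)
        return -1;
    char msg[2] = { (char)('0' + region), '\n' };
    return write(fd, msg, sizeof(msg)) == (ssize_t)sizeof(msg) ? 0 : -1;
}
```

The `printf("Detect motion in region %d\n", ...)` call would then be replaced by `send_region(fd, i_mass)`, with `fd` obtained once at startup from `open_uart` and a device path that depends on the board (something like `/dev/ttyS0`, which is only an example).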

I have put the project source on GitHub, and I would not mind at all if someone found the time to fix bugs and improve the program:

github.com/vvzvlad/motion-sensor-opencv

A reminder: if you do not want to miss the kettle saga and want to see all of our company's new posts, you can subscribe on the company page (the "subscribe" button).

And yes, once again I wrote this post at 5 a.m., so I will gladly accept error reports. But by private message, please.

Source: https://habr.com/ru/post/200804/
