Working with mdadm: changing the array type and chunk size, and expanding an array

Introduction

I was prompted to write this article by the lack of clear information in Russian about working with mdadm, the utility that implements various RAID levels on Linux. The basics of using it are, in principle, covered in enough detail; you can read about them, for example, here, here, and here.

Convert RAID1 to RAID5

What prompted me was the need to convert a two-disk RAID1 array, created when installing Ubuntu, to RAID5. As usual, I started by googling and came across an earlier article on Habr. Unfortunately, when I tested it on virtual machines, that method reliably broke booting from the root partition. I did not find out whether the cause was a newer version of mdadm or the specifics of my test setup (the root partition is on the array, so the work has to be done from a live CD).

System version

    root@u1:/home/alf# uname -a
    Linux u1 3.2.0-23-generic #36-Ubuntu SMP Tue Apr 10 20:39:51 UTC 2012 x86_64 x86_64 x86_64 GNU/Linux

The disk layout in my case could not have been simpler. I modeled it on a test virtual machine, allocating 20 GB for each disk.


The root filesystem lives on /dev/sda2 and /dev/sdb2, which are assembled into an array named /dev/md0 in the installed system. There is no separate /boot partition: /boot sits on the root array, which spans both disks.

In mdadm v3.2.5, the version Ubuntu installs by default, an array can be converted from RAID1 to RAID5 but not back. The conversion is done with the grow command, --grow (-G). Before converting, insert a USB flash drive and mount it to hold the backup file that mdadm writes during the reshape; if something fails, the array can be recovered from it. I mount it at /mnt/sdd1:

    mkdir /mnt/sdd1
    mount /dev/sdd1 /mnt/sdd1
    mdadm --grow /dev/md0 --level=5 --backup-file=/mnt/sdd1/backup1

The operation is usually quick and painless. Note that the resulting two-disk RAID5 array is effectively a RAID1 array that can be expanded; it becomes a full-fledged RAID5 only once at least one more disk is added.
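A minimal sketch of the conversion as a bash function, assuming the device names used in this article (/dev/md0, /dev/sdd1, /mnt/sdd1). The run() helper and the DRY_RUN switch are hypothetical conveniences, not part of mdadm: by default the function only prints the commands, and it executes them only with DRY_RUN=0 (as root). The update-grub call is the bootloader step explained in the next paragraph.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print each command, and run it only when DRY_RUN=0.
run() {
    echo "+ $*"
    if [ "${DRY_RUN:-1}" != 1 ]; then
        "$@"
    fi
}

# Hypothetical wrapper around the RAID1 -> RAID5 conversion steps.
convert_raid1_to_raid5() {
    local array=${1:-/dev/md0}
    local usb_dev=${2:-/dev/sdd1}
    local mnt=${3:-/mnt/sdd1}

    # The backup file must live outside the array being reshaped.
    run mkdir -p "$mnt" || return
    run mount "$usb_dev" "$mnt" || return
    # Change the level in place; on two disks the RAID5 still behaves like a mirror.
    run mdadm --grow "$array" --level=5 --backup-file="$mnt/backup1" || return
    # Regenerate the GRUB config so it loads the RAID5 module for /boot.
    run update-grub
}
```

For example, `DRY_RUN=1 convert_raid1_to_raid5` only prints the four commands; defaulting to a dry run keeps an accidental invocation harmless.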

This step is critical: since /boot lives on this array, after a reboot GRUB will not load the module it needs to read it unless told to. To fix this, update the bootloader:

    update-grub

If you forgot to run the update and after rebooting you got

    cannot load raid5rec
    grub rescue>

do not despair. Boot from a LiveCD, copy the /boot directory to the USB flash drive, reboot from the main disk back into grub rescue>, and load the RAID5 module from the USB flash drive following any of the common GRUB rescue guides. In those steps, add the line

    insmod /boot/grub/raid5rec.mod

After booting into the main system, do not forget to run update-grub.

RAID5 array expansion

Growing the converted array is described in every source and poses no problems. Do not cut the power during the process.

- Clone the partition layout of an existing disk onto the new one

    sfdisk -d /dev/sda | sfdisk /dev/sdc

- Add the new partition to the array

    mdadm /dev/md0 --add /dev/sdc2

- Grow the array onto the new disk

    mdadm --grow /dev/md0 --raid-devices=3 --backup-file=/mnt/sdd1/backup2

- Wait for the reshape to finish, watching its progress

    watch cat /proc/mdstat

- Install the boot sector on the new disk

    grub-install /dev/sdc

- Update the bootloader

    update-grub

- Grow the filesystem to fill all the free space; this works on a live, mounted filesystem

    resize2fs /dev/md0

- Inspect the resulting array

    mdadm --detail /dev/md0

- Check the free space

    df -h
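The expansion steps can be collected into one function. This is a sketch under the same assumptions as before: run() and DRY_RUN are hypothetical helpers, and partition 2 is assumed to be the array member on every disk, so the new member is the new disk name plus "2" (which only works for names like /dev/sdc). Instead of `watch`, it uses `mdadm --wait`, which blocks until the reshape is done.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print each command, and run it only when DRY_RUN=0.
run() {
    echo "+ $*"
    if [ "${DRY_RUN:-1}" != 1 ]; then
        "$@"
    fi
}

# Hypothetical wrapper: grow a RAID5 array onto one more disk.
expand_raid5() {
    local array=$1 src_disk=$2 new_disk=$3 new_devices=$4 backup=$5

    # Copy the partition table of an existing member to the new disk.
    run sh -c "sfdisk -d $src_disk | sfdisk $new_disk" || return
    # Assumes partition 2 is the array member, as on the existing disks.
    run mdadm "$array" --add "${new_disk}2" || return
    run mdadm --grow "$array" --raid-devices="$new_devices" --backup-file="$backup" || return
    # Block until the reshape ends; mdadm returns non-zero if there was nothing to wait for.
    run mdadm --wait "$array" || true
    run grub-install "$new_disk" || return
    run update-grub || return
    # Grow the ext filesystem to fill the larger array (works while mounted).
    run resize2fs "$array" || return
    run mdadm --detail "$array"
    run df -h
}
```

With the article's devices this would be called as `expand_raid5 /dev/md0 /dev/sda /dev/sdc 3 /mnt/sdd1/backup2`, first as a dry run and then with DRY_RUN=0.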

Changing the Chunk Size of an existing RAID5 array

RAID1 has no notion of chunk size, because a chunk is the unit of data written to each disk in turn, and RAID1 simply mirrors. Once the array is converted to RAID5, this parameter appears in the detailed array information:

    mdadm --detail /dev/md0
    /dev/md0:
            Version : 1.2
      Creation Time : Tue Oct 29 03:57:36 2013
         Raid Level : raid5
         Array Size : 20967352 (20.00 GiB 21.47 GB)
      Used Dev Size : 20967352 (20.00 GiB 21.47 GB)
       Raid Devices : 2
      Total Devices : 2
        Persistence : Superblock is persistent

        Update Time : Tue Oct 29 04:35:16 2013
              State : clean
     Active Devices : 2
    Working Devices : 2
     Failed Devices : 0
      Spare Devices : 0

             Layout : left-symmetric
         Chunk Size : 8K

               Name : u1:0  (local to host u1)
               UUID : cec71fb8:3c944fe9:3fb795f9:0c5077b1
             Events : 20

        Number   Major   Minor   RaidDevice State
           0       8        2        0      active sync   /dev/sda2
           1       8       18        1      active sync   /dev/sdb2

In this case the chunk size is chosen by the following rule: the greatest common divisor of the array size (here 20967352K) and the maximum automatically chosen chunk size (64K). In my case that gives 8K, since 20967352/8 = 2620919 is odd and cannot be divided by 2 any further.
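The rule above is a plain greatest-common-divisor calculation. A small bash sketch (the function names are hypothetical) reproduces the 8K value from the output:

```shell
#!/usr/bin/env bash
# Euclid's algorithm on non-negative integers.
gcd() {
    local a=$1 b=$2 t
    while [ "$b" -ne 0 ]; do
        t=$(( a % b ))
        a=$b
        b=$t
    done
    echo "$a"
}

# Hypothetical helper: chunk size (KiB) picked by the rule described above,
# gcd(array size in KiB, 64 KiB maximum).
default_chunk_kib() {
    local array_kib=$1 max_chunk_kib=${2:-64}
    gcd "$array_kib" "$max_chunk_kib"
}
```

`default_chunk_kib 20967352` prints 8, matching the Chunk Size line above. Because 64 is a power of two, the result is simply the largest power of two, up to 64, that divides the array size.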

If you plan to add disks later and grow the array, you should change the chunk size at some point. There is an interesting article on how chunk size affects performance.

To change the chunk size, the array size must first be aligned to a multiple of the chunk size times the number of data disks. Before that, it is important to shrink the filesystem: if you cut the array down underneath a filesystem that fills it, you will most likely wipe the superblock and are guaranteed to corrupt the filesystem.

How do you calculate the size needed for a given chunk size? Take the current array size in kilobytes:

    mdadm --detail /dev/md0 | grep Array

Divide it, keeping only the integer part, by the desired chunk size and by (the number of disks in the array minus 1, for RAID5). Multiply the result back by the chunk size and by (the number of disks minus 1); that is the size we need. For three disks and a 256K chunk:

    (41934704 / 256) / 2 = 81903;  81903 * 256 * 2 = 41934336
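The same calculation as a bash sketch. The function names are hypothetical, array_size_kib() assumes the "Array Size : N" line format shown in the mdadm --detail output above, and all sizes are in KiB. per_device_kib() anticipates the per-disk value that `mdadm --grow --size` expects later on.

```shell
#!/usr/bin/env bash
# Extract the "Array Size" value (KiB) from `mdadm --detail` output on stdin.
array_size_kib() {
    awk '/Array Size/ { print $4; exit }'
}

# Round the array size down to a whole number of RAID5 stripes.
aligned_array_kib() {
    local array_kib=$1 chunk_kib=$2 disks=$3
    if [ "$disks" -lt 2 ]; then
        echo "RAID5 needs at least 2 disks" >&2
        return 1
    fi
    # Data held by one full stripe: one chunk on each non-parity disk.
    local stripe_kib=$(( chunk_kib * (disks - 1) ))
    echo $(( array_kib / stripe_kib * stripe_kib ))
}

# Space used on each member disk for a given total RAID5 size.
per_device_kib() {
    local array_kib=$1 disks=$2
    echo $(( array_kib / (disks - 1) ))
}
```

`aligned_array_kib 41934704 256 3` prints 41934336 and `per_device_kib 41934336 3` prints 20967168. On a live system the input would come from `mdadm --detail /dev/md0 | array_size_kib`. Dividing once by the full stripe gives the same result as the two integer divisions above.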

resize2fs can only shrink an unmounted filesystem, and since this is our root filesystem, we reboot from any LiveCD (I like RescueCD; it loads mdadm automatically).

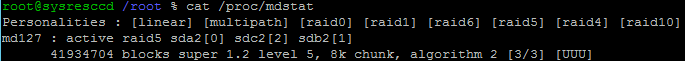

- Check which arrays are running

    cat /proc/mdstat

We find that our array is in read-only (RO) mode.

- Reassemble it as usual

    mdadm --stop /dev/md127
    mdadm --assemble --scan

- Check the filesystem and repair any errors (-f forces a full check even if the filesystem is marked clean)

    e2fsck -f /dev/md127

- Shrink the filesystem. -S 256 sets the RAID stride so the utility knows the filesystem sits on a RAID array (note that resize2fs counts the stride in filesystem blocks), and the K suffix on the new size keeps the utility from reading the number as a count of filesystem blocks

    resize2fs -p -S 256 /dev/md127 41934336K

- Resize the array, again with mdadm's --grow option. The size is set per member disk, so pass not the total array size but the per-disk size, that is, the total divided by (the number of disks - 1). The operation is instantaneous

    41934336 / 2 = 20967168
    mdadm --grow /dev/md127 --size=20967168

- Reboot into the normal system, mount the USB flash drive for the backup file, change the chunk size, and wait for the array to resynchronize

    mdadm --grow /dev/md0 --chunk=256 --backup-file=/mnt/sdd1/backup3
    watch cat /proc/mdstat

This operation takes much longer than adding a disk, so be prepared to wait. As usual, do not cut the power.
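The two phases of the chunk change can be sketched as bash functions under the same assumptions as before: run() is a hypothetical helper, and DRY_RUN defaults to printing only. The sizes are the values calculated earlier, and the stride argument is passed through unchanged, as in the article.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print each command, and run it only when DRY_RUN=0.
run() {
    echo "+ $*"
    if [ "${DRY_RUN:-1}" != 1 ]; then
        "$@"
    fi
}

# Phase 1, from the LiveCD: array assembled, filesystem NOT mounted.
shrink_for_chunk() {
    local array=$1 fs_kib=$2 per_dev_kib=$3 stride=$4
    # e2fsck exits non-zero if it changed anything; stop and inspect in that case.
    run e2fsck -f "$array" || return
    # The K suffix makes resize2fs read the size as KiB, not filesystem blocks.
    run resize2fs -p -S "$stride" "$array" "${fs_kib}K" || return
    # --size is the space used on each member disk, in KiB.
    run mdadm --grow "$array" --size="$per_dev_kib"
}

# Phase 2, after rebooting into the installed system with the USB stick mounted.
change_chunk() {
    local array=$1 chunk_kib=$2 backup=$3
    run mdadm --grow "$array" --chunk="$chunk_kib" --backup-file="$backup" || return
    # Block until the reshape finishes (non-zero if there was nothing to wait for).
    run mdadm --wait "$array" || true
}
```

With the article's values: `shrink_for_chunk /dev/md127 41934336 20967168 256` from the LiveCD, then `change_chunk /dev/md0 256 /mnt/sdd1/backup3` after the reboot, each with DRY_RUN=0 once the printed commands look right.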

Array recovery

If something failed at some stage of working with the array via mdadm, you can restore the array from the backup file (you did remember to specify one?). Boot from a LiveCD and reassemble the array, listing the member disks in their correct order and pointing mdadm at the backup file:

    mdadm --assemble /dev/md0 /dev/sda2 /dev/sdb2 /dev/sdc2 --backup-file=/mnt/sdd1/backup3

In my case, the last operation, changing the chunk size, put the array into the reshape state, but the reshape itself did not start for a long time. I had to reboot from the LiveCD and restore the array as shown. After that the reshape started, and when it finished the chunk size was the new 256K.
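A sketch of the recovery step, again with the hypothetical run() helper and DRY_RUN switch. The member partitions are passed after the array name and backup file, in their original order; the final `cat /proc/mdstat` is only there to show whether the reshape has resumed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print each command, and run it only when DRY_RUN=0.
run() {
    echo "+ $*"
    if [ "${DRY_RUN:-1}" != 1 ]; then
        "$@"
    fi
}

# Hypothetical wrapper: reassemble an interrupted reshape from its backup file.
# Usage: recover_reshape ARRAY BACKUP_FILE MEMBER...
recover_reshape() {
    local array=$1 backup=$2
    shift 2
    # "$@" now holds the member partitions, in their original order.
    run mdadm --assemble "$array" "$@" --backup-file="$backup" || return
    run cat /proc/mdstat
}
```

For this article's array: `recover_reshape /dev/md0 /mnt/sdd1/backup3 /dev/sda2 /dev/sdb2 /dev/sdc2`.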

Conclusion

I hope this article helps you change your home storage systems painlessly, and perhaps convinces someone that mdadm is not as incomprehensible as it seems.

Further reading

www.spinics.net/lists/raid/msg36182.html

www.spinics.net/lists/raid/msg40452.html

enotty.pipebreaker.pl/2010/01/30/zmiana-parametrow-md-raid-w-obrazkach

serverhorror.wordpress.com/2011/01/27/migrating-raid-levels-in-linux-with-mdadm

lists.debian.org/debian-kernel/2012/09/msg00490.html

www.linux.org.ru/forum/admin/9549160

For the truly curious, the mdadm 3.3 Grow.c source: fossies.org/dox/mdadm-3.3/Grow_8c_source.html

Source: https://habr.com/ru/post/200194/
