How Yandex's clouds work: Elliptics

Over the past few years, a fashionable trend has emerged in the IT world: building new products on everything "cloud". There are not many public cloud providers, and the most popular among them is Amazon. However, many companies are unwilling to entrust their private data to a third party but still want to store it reliably, so they set up small private clouds of their own.

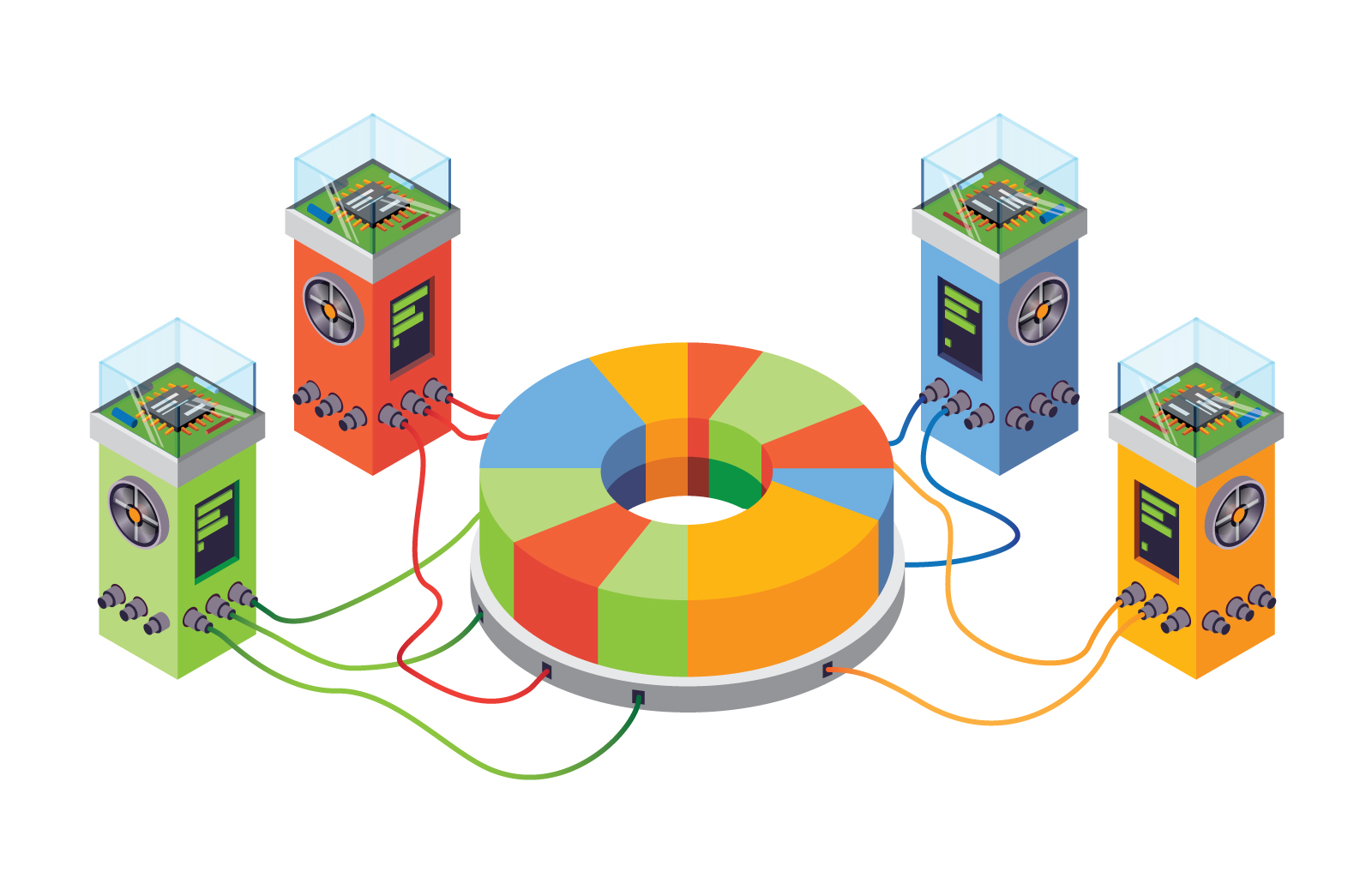

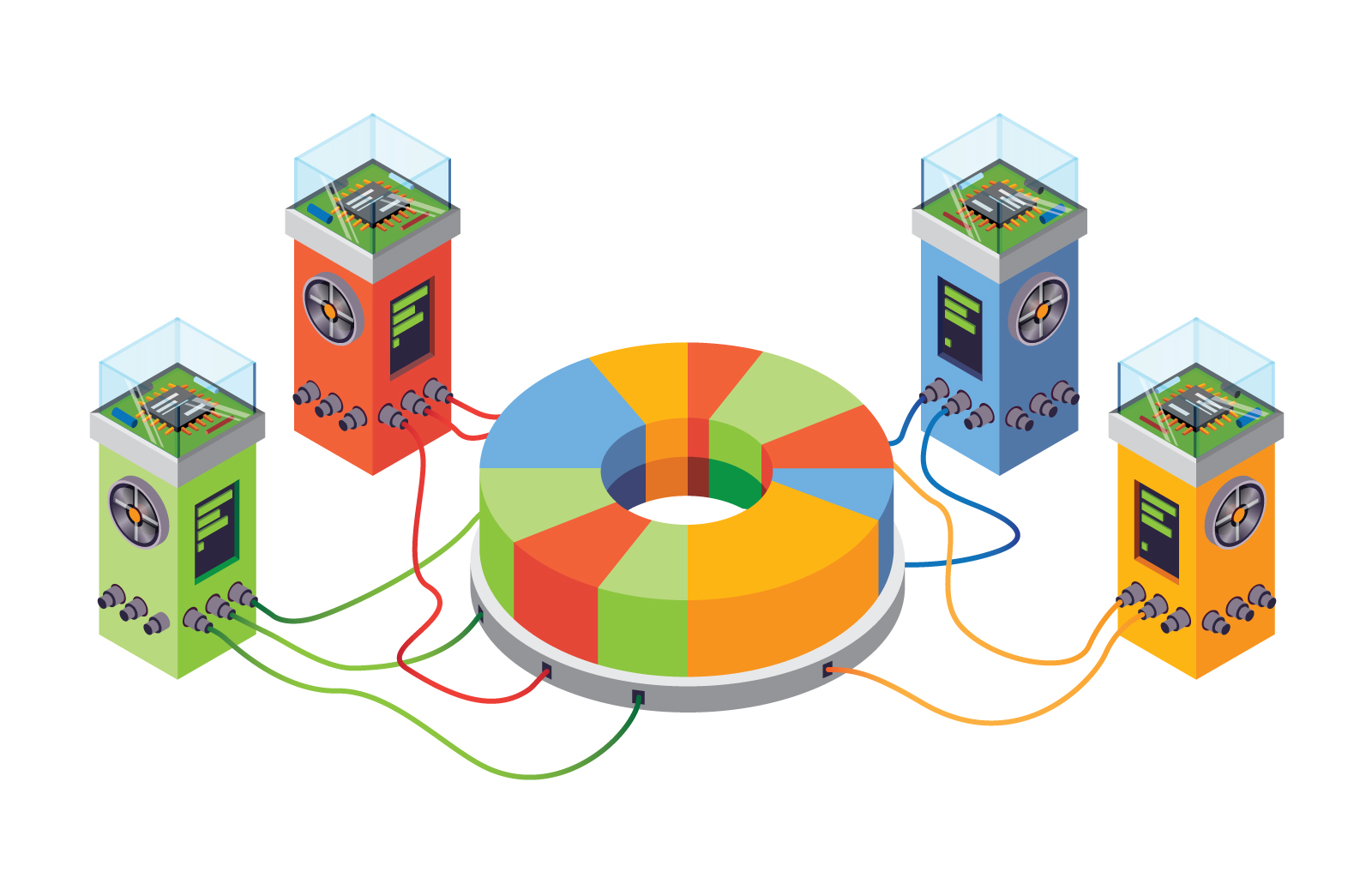

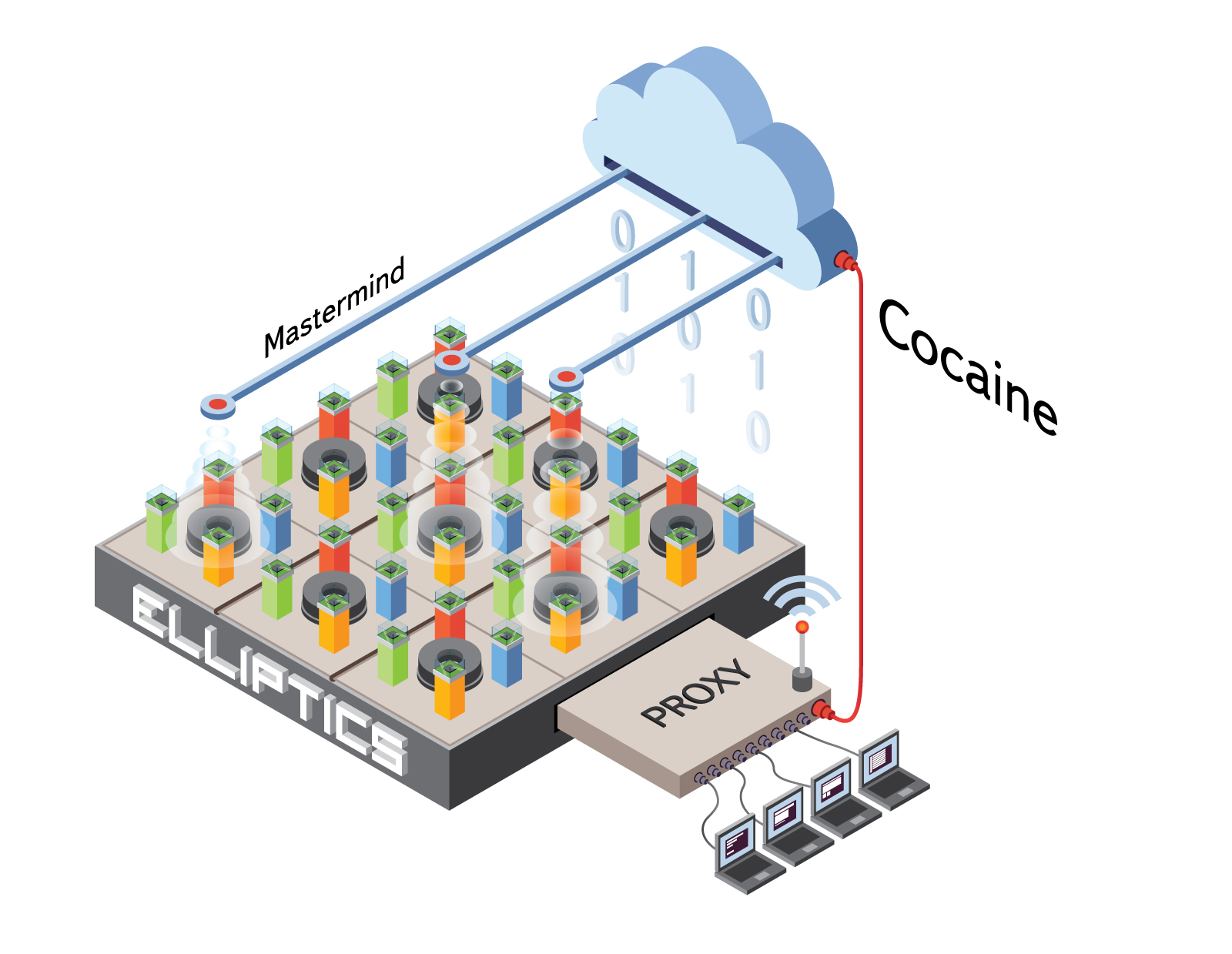

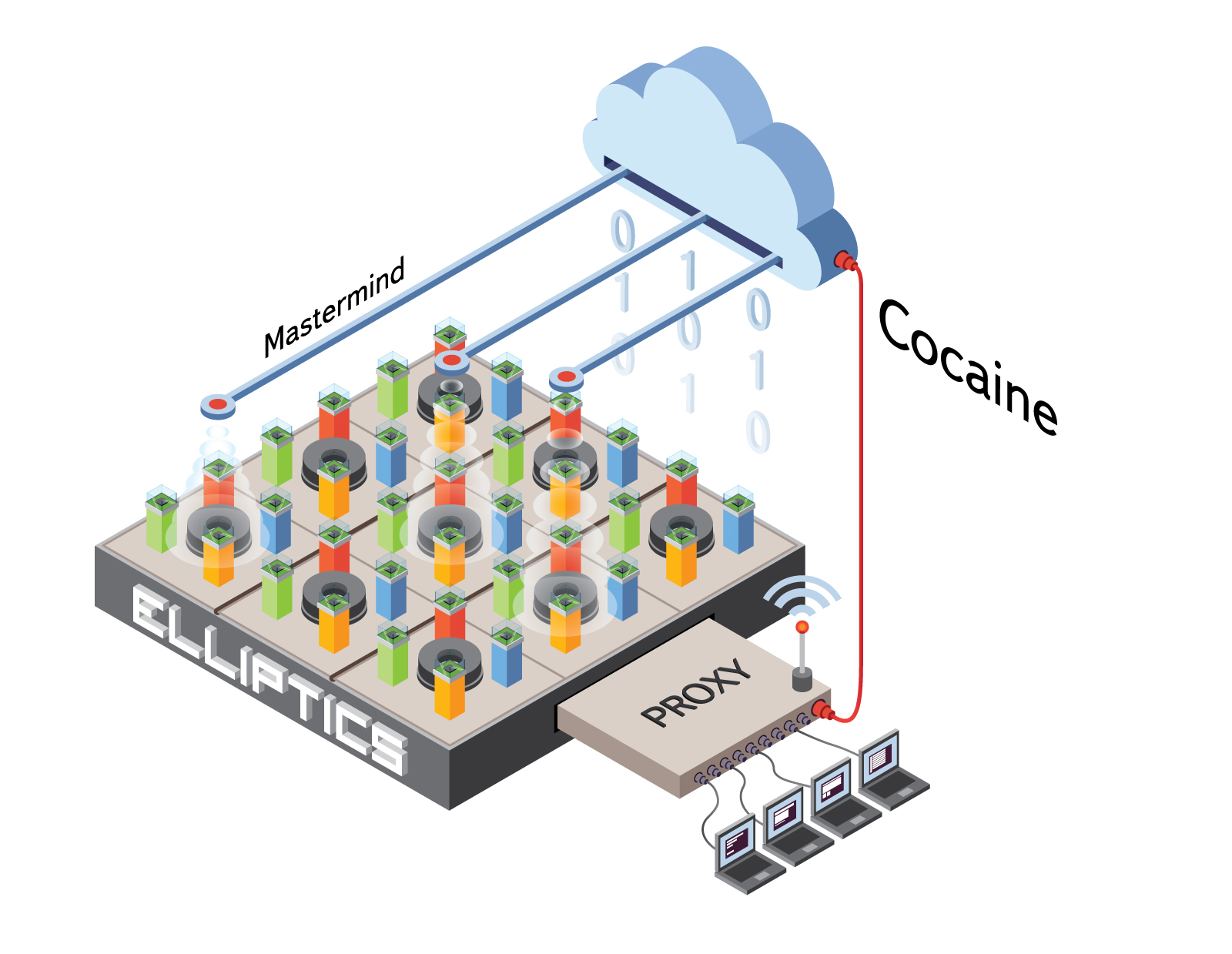

Any cloud consists of two main components: a Single Entry Point (SEP) and Cloud Magic (CM). Consider the Amazon S3 cloud storage: the REST API plays the role of the SEP, and the Cloud Magic is provided by elves who run on dollars. Companies that want to host small video files or a database in S3 first use the pricing calculator to estimate how much they will pay per month at the planned load.

This article is about a different cloud storage, one in which the elves feed on the Spirit of Freedom and electricity, and also need a little bit of "Cocaine".

This storage system is called Elliptics.

Its history goes back to 2007, when it began as part of POHMELFS. The following year Elliptics was split off into a separate project, and many different approaches to distributed data storage were tried in it. Many of them did not work out because they were too complex or too impractical in real life. Eventually its author, Yevgeny Polyakov, arrived at Elliptics in its modern form.


Fault tolerance

This is the first of the Five Elements that Elliptics is designed to preserve. Machines break down constantly, hard drives fail, and from time to time the elves go on strike and dump core. A data center can also drop out of our world at any moment through Black Magic and Sorcery, the most obvious forms of which are Cable Break and Power Loss.

A sad story

One rainy day a little kitten got cold and decided to warm up. There was no shelter nearby except a transformer box, built strictly according to GOST, with a 10-centimeter opening at the bottom. The transformer short-circuited: there was one kitten fewer, and the data center was left without electricity. It happened on a Saturday, so the staff electricians were not at work and could not restore power. A diesel generator was of course available and working, but it had fuel for only a few hours. The security service refused to let the urgently summoned fuel truck onto the data center grounds. In the end everything was resolved safely, but no one is immune to such situations.

Everyone has long known what to do when a disk or a server goes down. But few people think about what will happen to their system if a data center or an Amazon region fails, or some other large-scale event occurs. Elliptics was designed from the start to solve this class of problems.

In Elliptics, every document is stored under a 512-bit key obtained by applying the SHA-512 function to the document's name. All keys can be pictured as a Distributed Hash Table (DHT): a ring with values ranging from 0 to 2^512. The ring is divided randomly among all the machines of one group, and each key can be stored in several different groups at once. Roughly speaking, one group corresponds to one data center. As a rule, Yandex keeps 3 copies of every document, so if a machine fails we lose only one copy of a part of the ring. Even if an entire data center fails, no information is lost: every document still exists in at least two copies, and the elves can keep bringing joy to people.
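The key scheme described above can be sketched in a few lines of Python. This is only an illustration, not the Elliptics implementation: the names `document_key`, `Group` and `replicas`, the machine names, and the `points_per_machine` parameter are all made up, and the ring positions are derived from hashes so that the example is reproducible, whereas the text says the real split is random.

```python
import bisect
import hashlib

KEY_SPACE = 2 ** 512  # number of possible SHA-512 keys on the ring


def document_key(name: str) -> int:
    """Return the 512-bit key of a document name as an integer."""
    return int.from_bytes(hashlib.sha512(name.encode("utf-8")).digest(), "big")


class Group:
    """One group (roughly one data center) with its own split of the ring."""

    def __init__(self, group_id: int, machines: list[str], points_per_machine: int = 4):
        self.group_id = group_id
        # Assumption: interval starts come from hashing "<group>/<machine>/<i>";
        # this stands in for the random split so the example is deterministic.
        self._ring = sorted(
            (document_key(f"{group_id}/{machine}/{i}"), machine)
            for machine in machines
            for i in range(points_per_machine)
        )
        self._starts = [start for start, _ in self._ring]

    def machine_for(self, key: int) -> str:
        """Return the machine that owns the interval containing the key."""
        # The owner is the last interval start <= key; an index of -1
        # wraps around to the last interval, which closes the ring.
        index = bisect.bisect_right(self._starts, key) - 1
        return self._ring[index][1]


def replicas(name: str, groups: list[Group]) -> dict[int, str]:
    """Map each group id to the machine that holds the document in that group."""
    key = document_key(name)
    return {group.group_id: group.machine_for(key) for group in groups}


groups = [
    Group(1, ["dc1-a", "dc1-b"]),
    Group(2, ["dc2-a", "dc2-b"]),
    Group(3, ["dc3-a", "dc3-b"]),
]
print(replicas("photo.jpg", groups))
```

With three groups, every document name maps to exactly one machine per group, so losing a machine or a whole group still leaves the copies held by the other groups.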

Extensibility

Administrator elves have always wanted to be able to plug in additional machines, and Elliptics lets them! When a new machine joins a group, it takes over random intervals of those 2^512 keys, and all subsequent requests for those keys go to that machine.
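A toy model can show why adding a machine only affects the keys in the intervals it claims. The sketch below is an assumption-laden illustration rather than Elliptics code: the helper names (`key_of`, `owner`, `add_machine`), the node names, and the choice of 8 intervals per machine are hypothetical.

```python
import bisect
import hashlib
import random

KEY_SPACE = 2 ** 512


def key_of(name: str) -> int:
    """SHA-512 of the document name, as an integer on the ring."""
    return int.from_bytes(hashlib.sha512(name.encode("utf-8")).digest(), "big")


def owner(ring: list[tuple[int, str]], key: int) -> str:
    """ring is a sorted list of (interval_start, machine) pairs."""
    starts = [start for start, _ in ring]
    # Last interval start <= key; index -1 wraps around the ring.
    return ring[bisect.bisect_right(starts, key) - 1][1]


def add_machine(ring, machine: str, intervals: int, rng: random.Random):
    """Return a new ring in which the machine has claimed random interval starts."""
    new_points = [(rng.randrange(KEY_SPACE), machine) for _ in range(intervals)]
    return sorted(ring + new_points)


rng = random.Random(42)  # fixed seed so the run is repeatable
ring = sorted(
    (rng.randrange(KEY_SPACE), machine)
    for machine in ("node-a", "node-b", "node-c")
    for _ in range(8)
)
bigger = add_machine(ring, "node-d", 8, rng)

names = [f"doc-{i}" for i in range(1000)]
moved = [n for n in names if owner(ring, key_of(n)) != owner(bigger, key_of(n))]

# Every key that changed owner now belongs to the new machine;
# all other keys stay on the machine that already held them.
assert all(owner(bigger, key_of(n)) == "node-d" for n in moved)
print(f"{len(moved)} of {len(names)} keys now belong to node-d")
```

In this model the old machines never exchange keys with each other; data only flows towards the newcomer.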

If we have three groups of hundreds of machines each, adding new machines triggers rebalancing, and as a result large volumes of data have to be moved over the network to restore the layout. For those who cannot accept this, we developed a load-balancing system, Mastermind, which runs on the Cocaine cloud platform. Mastermind is a set of peer super-nodes that decide which groups a particular file will be stored in, based on the load on each server. There is some difficult Mathematical Magic here too: free space, disk load, CPU load, switch load, drop rate, and much more are taken into account. If any Mastermind node fails, everything keeps working as before. If groups are kept small, then when new machines are added it is enough to assign them to a new group and tell Mastermind about it. In this case, information about the groups in which the file should be looked up is appended to the file identifier. This mechanism makes storage expansion truly unlimited and very simple.
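The idea of choosing groups by load and recording them in the file identifier might look roughly like the following sketch. Everything specific here is an assumption made for illustration: the `GroupStats` fields, the weights in `score`, and the `1:4:3/photo.jpg` identifier format are invented and say nothing about how Mastermind actually computes or encodes its decisions.

```python
from dataclasses import dataclass


@dataclass
class GroupStats:
    group_id: int
    free_space: float  # fraction of disk space still free, 0..1
    disk_load: float   # 0..1
    cpu_load: float    # 0..1


def score(stats: GroupStats) -> float:
    """Hypothetical score: more free space and less load is better."""
    return stats.free_space - 0.5 * stats.disk_load - 0.5 * stats.cpu_load


def choose_groups(all_stats: list[GroupStats], copies: int = 3) -> list[int]:
    """Pick the best-scoring groups, one per copy of the file."""
    ranked = sorted(all_stats, key=score, reverse=True)
    return [stats.group_id for stats in ranked[:copies]]


def make_file_id(name: str, group_ids: list[int]) -> str:
    """Attach the chosen groups to the identifier (made-up format)."""
    return ":".join(str(g) for g in group_ids) + "/" + name


def parse_file_id(file_id: str) -> tuple[list[int], str]:
    """Recover the groups to query and the original name."""
    prefix, name = file_id.split("/", 1)
    return [int(g) for g in prefix.split(":")], name


stats = [
    GroupStats(1, free_space=0.9, disk_load=0.2, cpu_load=0.1),
    GroupStats(2, free_space=0.1, disk_load=0.8, cpu_load=0.7),
    GroupStats(3, free_space=0.6, disk_load=0.3, cpu_load=0.3),
    GroupStats(4, free_space=0.5, disk_load=0.1, cpu_load=0.1),
]
file_id = make_file_id("photo.jpg", choose_groups(stats))
print(file_id)                 # 1:4:3/photo.jpg
print(parse_file_id(file_id))  # ([1, 4, 3], 'photo.jpg')
```

Because the identifier itself names the groups, a reader does not need to know the full cluster layout, and a newly added group only starts receiving new files.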

Data integrity

Let's look at what the system does with old data that ended up on the wrong machines after rebalancing or was lost altogether.

For this case Elliptics provides a recovery system. It works as follows: it goes through all the machines and, if data is sitting on a machine it does not belong to, moves it to the right one. If it turns out that some document exists in fewer than three copies, the document is replicated to the necessary machines during the same pass. If the system works with Mastermind, the numbers of the groups where the file should reside are taken from the meta-information. The recovery speed depends on many factors, so an exact per-key figure cannot be given, but load testing shows that the bottleneck is the network link between the machines over which the data is transferred.
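The two recovery steps described above (move misplaced data, then restore missing copies) can be modelled with plain dictionaries. This is a simplified sketch under stated assumptions, not the actual recovery tool: `owner` uses a made-up modulo placement rule instead of the ring, keys are small integers, and every group is expected to hold every document.

```python
def owner(machines: list[str], key: int) -> str:
    """Hypothetical placement rule standing in for the ring lookup."""
    return machines[key % len(machines)]


def recover(groups: dict[int, dict[str, dict[int, str]]]) -> tuple[int, int]:
    """Repair the layout in place.

    groups maps group id -> machine name -> {key: data}.
    Returns (keys moved inside a group, copies restored across groups).
    """
    moved = copied = 0

    # Step 1: inside each group, move keys that sit on the wrong machine.
    for storage in groups.values():
        machines = sorted(storage)
        for machine in machines:
            for key in list(storage[machine]):
                right = owner(machines, key)
                if right != machine:
                    storage[right][key] = storage[machine].pop(key)
                    moved += 1

    # Step 2: collect every known document, then copy it into any group
    # that is missing it.
    all_docs: dict[int, str] = {}
    for storage in groups.values():
        for data in storage.values():
            all_docs.update(data)
    for storage in groups.values():
        machines = sorted(storage)
        for key, value in all_docs.items():
            target = storage[owner(machines, key)]
            if key not in target:
                target[key] = value
                copied += 1

    return moved, copied


groups = {
    1: {"a": {0: "x"}, "b": {1: "y"}},  # healthy group
    2: {"a": {1: "y"}, "b": {}},        # key 1 misplaced, key 0 missing
    3: {"a": {}, "b": {}},              # freshly replaced, empty group
}
print(recover(groups))  # (1, 3)
```

In a real deployment each of these dictionary copies would be a network transfer, which is consistent with the observation that the link between machines is the bottleneck.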

Speed

Elliptics has low overhead and can perform up to 240,000 read operations per second on a single machine when serving from the cache. When working with the disk, the speed naturally drops and is limited by the disk's read speed.

The HistoryDB project is built on Elliptics. We use it to store logs arriving from various external events. During testing, a group of three machines calmly handled a load of 10 thousand requests per second while logs from 30 million users were being written, with 10% of the users generating 80% of all the data. In total, the 30 million users produced about 500 million updates per day. But simply storing this data would be too easy. So the system was tested so that, while under load, it could return a list of all users active during a given period (day / month), show the logs of any user for any day, and allow any other secondary indexes to be added to the user data.

Yandex has also built services such as Yandex.Music, Photos, Maps, Market, vertical searches, people search, and mail backups on top of Elliptics. Yandex.Disk is preparing to move as well.
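As a quick sanity check, the HistoryDB figures can be related to each other with a little arithmetic. The sketch assumes that one update corresponds to one request and that the load is spread evenly over the day; neither assumption comes from the test description.

```python
SECONDS_PER_DAY = 24 * 60 * 60

users = 30_000_000
updates_per_day = 500_000_000
tested_rps = 10_000

# Average load if updates were spread evenly over the day (assumption).
average_rps = updates_per_day / SECONDS_PER_DAY
# How far the tested rate sits above that average.
headroom = tested_rps / average_rps
# Average number of updates per user per day.
updates_per_user = updates_per_day / users

print(f"average load: {average_rps:.0f} updates/s")       # 5787
print(f"tested rate is {headroom:.2f}x the average")      # 1.73x
print(f"updates per user per day: {updates_per_user:.1f}")  # 16.7
```

Under these assumptions the tested rate of 10 thousand requests per second is a bit under twice the daily average, which leaves some room for peak hours.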

Simplicity of architecture

In Elliptics, clients connect directly to servers, which makes it possible to:

- keep the architecture simple;

- ensure that transactions are performed correctly;

- always know which machine in the group is responsible for a particular key.

Users of the storage service have the following APIs with which they can easily write, delete, read, and process data:

- an asynchronous C++11 library

- Python bindings

- FastCGI and TheVoid HTTP frontends (using boost::asio)

However, there is no silver bullet, and Elliptics has its limitations and problems:

- Eventual consistency. Because Elliptics is fully distributed, under various failures a server may return a version of a file that is older than the current one. In some cases this is unacceptable. Then, at the cost of a longer response time, you can use more reliable methods of querying data.

- Because client data is written to several servers in parallel, the network between the client and the servers can become a bottleneck.

- The API may be inconvenient for high-level queries. At the moment we do not provide convenient SQL-like data queries.

- Elliptics also has no high-level transaction support, so it cannot guarantee that a group of commands will either all be executed or none at all.
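The eventual-consistency trade-off from the first item can be illustrated with a small model. This is not the Elliptics client API: the `Version` record, the use of a timestamp to order versions, and the `read_fast` / `read_latest` helpers are assumptions made purely for the sake of the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Version:
    timestamp: float  # assumed ordering of writes
    data: bytes


Replica = dict[str, Version]  # one group's view of the stored keys


def read_fast(replicas: list[Replica], key: str) -> Optional[Version]:
    """Return the first copy found. Quick, but the copy may be stale."""
    for replica in replicas:
        if key in replica:
            return replica[key]
    return None


def read_latest(replicas: list[Replica], key: str) -> Optional[Version]:
    """Ask every replica and keep the newest copy. Slower, but fresher."""
    found = [replica[key] for replica in replicas if key in replica]
    return max(found, key=lambda version: version.timestamp, default=None)


replicas = [
    {"report": Version(1.0, b"old")},  # this group missed the last write
    {"report": Version(2.0, b"new")},
    {},                                # this group has no copy yet
]
print(read_fast(replicas, "report").data)    # b'old'
print(read_latest(replicas, "report").data)  # b'new'
```

The second function has to wait for every replica to answer, which is the longer response time mentioned in the list.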

In the following articles we will give an example of using Elliptics and describe more technical details: the internal structure of the cache, how our eblob backend works, data streaming, secondary indexes, and much more.

Source: https://habr.com/ru/post/200080/
