Nine-year router optimization

I want to tell the story of the server life in the campus network of the Novosibirsk University, which began in the distant 2004, as well as the stages of its optimization and downgrade .

Many things in the article will seem well-known, if only for the reason that we are talking about the events of almost a decade ago, although at that time they were advanced technologies. For the same reason, some things have lost their relevance at all, but not all, since the server still lives and maintains a grid of 1000 machines.

The network itself has existed since 1997 - this is the date when all the hostels were united into a single network and got access to the Internet. Until 2004, the campus network was built entirely on copper, the links between the hostels were forwarded with the P270 cable (good, the distance between the hostels did not exceed 350m, and the link, using 3c905 cards, went up "on the weave"). Each building had its own server, which had 3 network cards. Two of them "looked" at the neighboring servers, the "lokalka" of the hostel was connected to the third. In total, all six (and so much was a hostel at our university) were closed in a ring, and the routes between them were built using the OSPF protocol, which allowed, if the line was broken, to start traffic bypassing the dropped link. And cliffs happened often: either the thunderstorm would break out and the link would fall down, then the electricians would nakhimyat. Yes, and it was not very convenient to maintain the servers themselves, especially since they were all motley: in different years from 486DX4 (yes, from 8MB of RAM, on the 2.0.36 core and 3com cards she pulled two 100Mbps links, though the download was to the ceiling on bare routing without any ipfwadm) to AMD K6-2 and even P4 2.8Ghz.

The disadvantages of such an organization (besides the very unreliable by today's standards link) are obvious: it is very inconvenient to manage the user base. Addresses are white, their number is limited. The contract was tied to IP for life, that is, for the entire duration of the student’s education. The network is cut into segments, the dimensions of which were originally made taking into account the number of students in each dormitory. But then the students moved from the dorm to the dormitory, then the Faculty of Automation took noticeably more addresses than philologists did - in general, it was horror.

Access restrictions were on a pair of MAC-IP, because at the time of “conceiving” the network, changing the MAC address of a network card without having a programmer at hand (or even a soldering iron) was very problematic, if not impossible. Therefore, it was enough to keep the file / etc / ethers up-to-date and to escape from 99% of lovers of freebies. Only dreamed of managed switches at that time, and certainly it was too expensive to put them as subscriber equipment (as the network developed 100% for the money of the students themselves, and the students, as we know, are not rich people)

In 2004, a good opportunity turned up: one of the city providers offered, in exchange for peering between its network and the campus network, to connect all buildings on optics for free. Well, how to connect - the initiative group of students was directly involved in the installation of optics, and the techies of the provider just missed it. As a result, using this optics, we managed to build not a ring, but a star!

And then the idea was born - to put one good server, with several gigabit network cards, link all the links in one bridge and make one flat network, which would get rid of the headache with the address space cut on the subnet, and also allowed to control access from one places.

Since the PCI bus could not pump such traffic, and the required 6-8 Gigabit ports could not be obtained due to the lack of such a number of PCI slots on the motherboards, it was decided to use 2x Intel Quad Port Server Adapter on the PCI-X 133Mhz bus. These network cards had to take the Supermicro X6DHE-XG2 motherboard due to the presence of as many as three PCI-X 133, well, processors for it, Xeon 3Ghz 2 pcs (these are the ones that can be found on ark.intel.com in the Legacy Xeon section)

And it started: RHELAS 2.1 is installed on the server, the bridge is started up, the network is glued together into one large / 22. And here it turns out that if you restrict access to a couple of hundreds of addresses using rules like:

then the load on the server bounces to indecent values. The server can not cope?

Search on the Internet suggests only the project that has appeared - ipset . Yes, it turned out that this is exactly what you need. In addition to the fact that it was possible to get rid of a large number of similar records in iptables, it became possible to bind IP-MAC using macipmap.

One of the peculiarities of the bridge was that the packet passing through the bridge in some cases fell into the FORWARD chain, and in some cases it did not. It turned out that packets that are routed between interfaces fall into FORWARD, and packets that “bridge” (that is, those entering into br0 right there and leaving br0) do not fall.

The solution was to use the mangle table instead of filter.

It also turned out to make the binding of a specific address not only to the MAC, but also to the hostel in which the network user lived. It was done using the iptables physdev module and looked like this:

Since the optical "star" was built using opto-converters, then each building was "watched" by its own network card. And it was necessary to add only the MAC-IP pairs of the first hostel users to the IPMAC_H1 set, the second hostel set to the IPMAC_H2 set, and so on.

The order of the rules themselves inside iptables tried to make such that the above were those rules describing hostels, where users are more active, which allowed packages to pass chains faster.

')

As a result, all the intercommunication and external traffic eventually began to pass through the server, an idea emerged if the subscriber is disconnected, or if the IP-MAC pair does not match - to display the user with a certain page with information explaining why network does not work. It seemed that it is not difficult. Instead of DROP packages going to port 80, it was necessary to make a MARK package, and then redirect the marked packages using DNAT to the local web server.

The first problem turned out to be that if you simply redirect the packages to a web server, the web server in 99% of cases responds that the page was not found. Because, if the user went to ark.intel.com/products/27100 , and you wrapped it on your web server, it is unlikely that there will be a products / 27100 page, and you will at best get an error 404. Therefore, it was written simple a demon on C that issued to any request

Later this crutch was replaced by a more beautiful solution with mod_rewrite.

The second, and most significant, problem was that as soon as the nat module was loaded into the kernel, the load jumped again. Of course, the conntrack table is to blame, and with so many connections and pps, the existing iron did not take out at peak load hours.

The server can not cope?

We start to think. The goal is quite interesting, but does not work on the existing hardware. Using

which simply replaced the gateway address and sent the packet further, bypassing the rest of the chains.

In general, it was very convenient to send “objectionable” packets in this way for processing to a third-party server, not worrying about this package passing other chains of the filter type, but with a change in the architecture of the kernel, this patch from patch-o-matic became unsupported.

Solution: mark the necessary packets with the 0x1 brand, then, using ip rule fw, send the packet to the “other” routing table, where the only route is our NAT server

As a result, the “good” traffic was passed, and the “bad” users were shown a page with information about blocking. And also, in the event of an IP-MAC mismatch, the user could enter a username / password to renegotiate his current MAC.

The action takes place at the time of pomegbyte traffic in the hostel. That is, in a cashless, internet-active and IT-advanced user environment. This means that simple IP-MAC bindings are no longer enough, and cases of Internet traffic theft are becoming ubiquitous.

The only sane cost option is vpn. But, given that by that time the campus network had a free peering with half a dozen city operators, it would not work to drive peering traffic through a vpn server, it simply would not take out. Of course, a widely spread method was possible: to the Internet - via vpn, to peering and LAN - a batch file with routes. But the batch file seemed to me a very ugly decision. The option with RIPv2 was considered, which at that time was “embedded” in most of the operating systems used, but there remained an open question about the authenticity of the announcements. Without additional configuration, anyone could send out routes, and in the then popular WindowXP and its “RIP Listener” there was no configuration at all.

Then an “asymmetric VPN” was “invented”. The client for accessing the Internet establishes the usual vpn-pptp connection to the server with a login / password, while unchecking the checkbox “Use gateway in remote network” in the settings. The client’s end of the tunnel was given the address 192.0.2.2, and all clients are the same, and as will be shown later, it didn’t matter at all.

On the VPN server side, the / etc / ppp / ip-up script has been modified, performed after authentication and interface raising

That is, in the PEERIP variable from the database, the script pulled out the IP address that the user should have with PEERNAME (the login under which he connected), and if this address matches the IP from which the connection occurred (LOGDEVICE) to the VPN server, then All traffic to this IP is routed to the IFNAME interface via table 101. Also, in table 101, the default gateway is 127.0.0.1

All routed traffic is wrapped in table 101 by rule

As a result, we find that the traffic that arrives at the vpn server and the next one is NOT on the vpn server (it will go to the local table, which is the default) goes to table 101. And there it will “spread out” on ppp-tunnels. And if you do not find the right, then just drop it.

An example of what is obtained in the result in the plate 101 (ip r sh ta 101)

Now it only remains to turn all traffic from the “Internet” interface to the vpn gateway on the central router, and users will not have it without connecting to the VPN Internet. And the rest of the traffic (peer-to-peer) will run IPoE (that is, in the “normal” way), and will not load the VPN server. When additional peering networks appear, the user will not have to edit any bat files. Again, access to some internal resources, even though IP, even though ports, can be done via VPN, it’s enough to wrap the package on the VPN server.

When using this technique, an attacker of course can send traffic to the Internet by replacing IP-MAC, but cannot get anything back, since the vpn-tunnel is not raised. What almost completely kills the meaning of the substitution - “sitting on the Internet” from someone else's IP is now impossible.

In order for client computers to receive packets through a vpn-tunnel, it was necessary to set the key IPEnableRouter = 1 in the registry in Windows, and rp_filter = 0 in linux. Otherwise, the OS did not accept replies from other interfaces to which the requests were sent.

The cost of the implementation is almost zero, at ~ 700 simultaneous connections to the vpn hawatlo server of the celeron 2Ghz level, since the Internet traffic inside ppp at the time of the megabyte tariffs was not very large. At the same time, the peering traffic ran at speeds up to 6 Gbit / s in total (via Xeon on S604)

It worked all this miracle for about 8 years. In 2006, RHELAS 2.1 was replaced with a freshly hung CentOS 4. The central switches in the buildings were changed to DES-3028, DES-1024 remained subscriber. Access control on the DES-3028 could not be done properly. In order to bind the ip-mac to the port using the ACL, there were not enough 256 entries, because in some hostels there were more than 300 computers. Changing equipment became a problem, since the university “legalized” the network, and now it was necessary to pay for it at the university’s cash desk, when money was not allocated for equipment back, and if it was allocated, it was very sparingly, after a year and through the competition (when people do not buy what is needed, but what is cheaper, or where the rollback is greater).

And then the server broke. Rather, the motherboard burned (according to the conclusion from the workshop - the north bridge died). Need to collect something to replace, no money. That is nice to be free. And so that you can insert a PCI-X network card. Fortunately, an acquaintance gave a server decommissioned from a bank, it was just a couple of PCI-X133 slots. But the motherboard is single-processor, and there is not Socket 478 Pentium 4 3Ghz in it

We throw the screws, network cards. We start - it seems to work.

But softirq “eats” 90% in total from two pseudo-cards (there is one core in the processor, and hyper-trading is turned on), ping jumps to 3000, even the console “tupit” to impossibility.

It would seem that here it is, the server is outdated, it's time to rest.

I arm myself with oprofile, I start “mowing the excess”. In general, oprofile in the process of “communication” with this server was used quite often, and more than once it was rescued. For example, even using ipset I try to use ipmap, and not iphash (if possible), since with oprofile you can see how dramatic the difference in performance is. According to my data, it turned out to be two orders of magnitude, that is, it swam from 200 to 400 times. Also, when calculating traffic at different times, I switched from ipcad-ulog to ipcad-pcap, and then to nflow, focusing on profiling. ipt_NETFLOW no longer used, so entered the century of “unlimited Internet”, and our top-level netflow provider writes for SORM or not - its problems. Actually, using oprofile revealed that ip_conntrack was the main resource eater when turning on nat.

In general, oprofile this time tells me that 60% of the processor cycles are taken by the e1000 kernel module (network card). So what to do with it? Recommended in e1000.txt

inscribed in 2005.

A quick look at git.kernel.org/cgit/linux/kernel/git/stable/linux-stable.git for any significant changes in the e1000 did not produce results (that is, there are of course some changes, but either fixing errors or placing gaps in code). In any case, the kernel still updated, but it did not give results.

In the kernel,

Oprofile also claims that the bridge module occupies a rather large proportion of cycles. And, it would seem, there is nowhere without it, since IP addresses are spread out in disarray across several buildings, and it is no longer possible to split them into segments again (the option to merge everything into one segment without a server is not considered, as control is lost)

I'm thinking of breaking the bridge, and using proxy_arp. Especially since long ago I wanted to do this, after detecting bugs in DES-3028 with flood_fdb. In principle, it is possible to load all addresses in the routing table in the form:

because it is known which subscriber should be where (stored in the database)

But I also wanted to realize the IP-MAC binding not only to the building, but also to the nodal switch port on the building (I repeat, there are DES-1024 subscribers for subscribers)

And then reach the hands to sort out with dhcp-relay and dhcp-snooping.

On switches included:

On the server, I brought interfaces out of the bridge, removed the IP addresses on them (interfaces without IP), turned on arp_proxy

Isc-dhcp setup

In the dhcp-event file, agent.circuit-id, agent.remote-id, IP and MAC are checked for validity, and if everything is ok, they will add them to this address through the necessary interface

Primitive example dhcp-event:

here, only $ ARGV [3] is checked (that is, agent.remote-id, or the MAC switch, through which the DHCP request is received), but you can also check all other fields by getting their valid values, for example, from the database

As a result, we obtain:

1) a client not requesting an address via DHCP - does not go beyond its unmanaged switch, it is not passed by IP-MAC-PORT-BINDING;

2) a client whose MAC is known (available in the database), but the request does not match the port or the switch — will receive an IP associated with this MAC, but the route to it will not be added, respectively, proxy_arp will “respond” that the address is already taken and the address will be immediately released;

3) a client whose MAC is not known will receive an address from the temporary pool. These addresses are redirected to a page with information, here you can re-register your MAC using a login / password;

4) and finally the client, whose MAC is known, and matched to the switch and the port, will receive its address. Dhcp-snooping will add a dynamic binding to the impb table on the switch, the server will add a route to this address through the necessary interface of the former bridge.

When the lease is terminated or the address is released, the / etc / dhcp / dhcp-release script is called, the contents of which are utterly primitive:

There is a small flaw in security, specifically in p.2. If you use a non-standard dhcp-client that does not check whether the address issued by the dhcp server is busy, the address will not be released. Of course, the user will not be able to access the external network, beyond the router, since the server will not add a route to this address through the necessary interface, but the switch unlocks this MAC-IP pair on its port.

You can bypass this flaw by using classes in dhcpd.conf, but this significantly complicates the configuration file, and, accordingly, increases the load on our old server. Because for each subscriber you have to create your own class, with a rather complicated condition for hitting it, and then your own pool. Although plans to try in practice, how much it will increase the load.

Thus, it turned out that the compliance of the IP-MAC pair is now "monitored" by DHCP when issuing the address, access from "invalid" MAC-IP is limited by the switch. From the server, it was now possible to remove not only the bridge, but also macipmap, replacing 6 sets (from “Optimization 1”) with one ipmap, which contains all open IP addresses. As well as removing -m physdev.

More server interfaces moved from promisc mode to normal, which also slightly reduced the load.

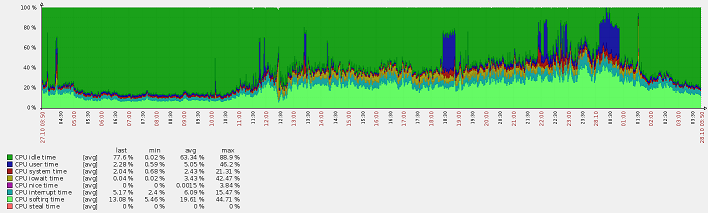

Namely, the whole procedure with disassembling the bridge reduced the total load on the server by almost 2 times ! Softirq is now not 50-100%, but 25-50%. At the same time, access control to the network has only become better.

After the last optimization, although the load dropped noticeably, a strange thing was noticed: iowait increased. Not that much, from 0-0.3% to 5-7%. This is taking into account the fact that there are practically no disk operations on this server at all - it simply throws sachets.

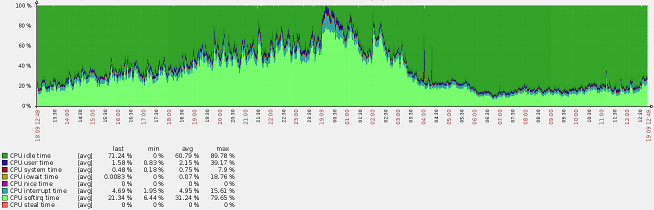

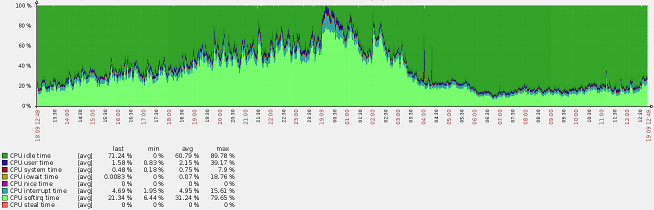

(blue user time - kernel compilation)

iostat showed that there is a constant load on the disk in 800-820 Blk_wrtn / s

Started the search for processes that could be engaged in writing. Performance

gave a strange result: the culprits were

Ext3 is in mode

I started to scroll through the steps in my head what I was doing and what could have influenced iowait. As a result, I stopped syslog - and iowait plummeted to ~ 0%.

In order for dhcp not to clutter up the messages with its messages, I sent them to the local6 log-facility, and wrote in syslog.conf:

it turned out that when writing through syslog, sync is done for each line. There are quite a lot of requests to the dhcp server, a lot of events are generated that get to the log, and a lot of sync is called.

Fix on

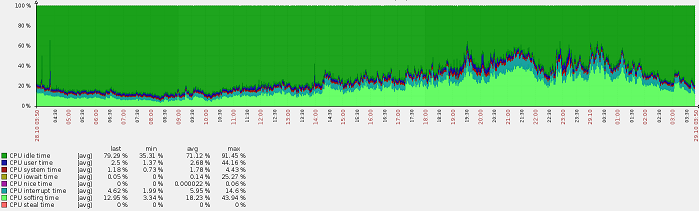

in my case, iowait reduced 10-100 times, instead of 5-7%, it was 0-0.3%.

Optimization result:

Why this article.

First, perhaps someone will draw from it useful solutions. I did not discover America here, although most of the events described here were in the pre-gage era, and the “google recipes” didn’t help much, reached the whole with their head.

Secondly, we constantly have to deal with the fact that instead of optimizing the code, developers are working on building up computing power, and this article can be an example of that, if you wish, you can find errors in your seemingly proven code many times, and cope with them . 90% of the site developers check the work of the site on their machine, put it into production. Then the whole thing slows down under load. Teasing the admin server. By optimizing the server in this case, little can be achieved if the code is not originally written optimally. And bought a new server, or another server and balancer. Then balancer balancer and so on. And the code inside was not optimal, and it remains forever. Of course, I understand that in the current realities, code optimization is more expensive than extensive capacity building,but with the advent of a huge number of “homegrown” IT people, the volumes of the “bad code” will become serious.

From nostalgic: I have an old laptop on the shelf, P3-866 2001gv (Panasonic CF-T1 if someone says something to it), but now it is impossible even to look at sites, although there is no more sense on these sites for 10 years. I love to remember interesting toys on the ZX-Spectrum on the gameplay is not inferior to today's monsters that require 4 cores / 4giga

Many things in the article will seem well-known, if only for the reason that we are talking about the events of almost a decade ago, although at that time they were advanced technologies. For the same reason, some things have lost their relevance at all, but not all, since the server still lives and maintains a grid of 1000 machines.

Network

The network itself has existed since 1997 - this is the date when all the hostels were united into a single network and got access to the Internet. Until 2004, the campus network was built entirely on copper, the links between the hostels were forwarded with the P270 cable (good, the distance between the hostels did not exceed 350m, and the link, using 3c905 cards, went up "on the weave"). Each building had its own server, which had 3 network cards. Two of them "looked" at the neighboring servers, the "lokalka" of the hostel was connected to the third. In total, all six (and so much was a hostel at our university) were closed in a ring, and the routes between them were built using the OSPF protocol, which allowed, if the line was broken, to start traffic bypassing the dropped link. And cliffs happened often: either the thunderstorm would break out and the link would fall down, then the electricians would nakhimyat. Yes, and it was not very convenient to maintain the servers themselves, especially since they were all motley: in different years from 486DX4 (yes, from 8MB of RAM, on the 2.0.36 core and 3com cards she pulled two 100Mbps links, though the download was to the ceiling on bare routing without any ipfwadm) to AMD K6-2 and even P4 2.8Ghz.

The disadvantages of such an organization (besides the very unreliable by today's standards link) are obvious: it is very inconvenient to manage the user base. Addresses are white, their number is limited. The contract was tied to IP for life, that is, for the entire duration of the student’s education. The network is cut into segments, the dimensions of which were originally made taking into account the number of students in each dormitory. But then the students moved from the dorm to the dormitory, then the Faculty of Automation took noticeably more addresses than philologists did - in general, it was horror.

Access restrictions were on a pair of MAC-IP, because at the time of “conceiving” the network, changing the MAC address of a network card without having a programmer at hand (or even a soldering iron) was very problematic, if not impossible. Therefore, it was enough to keep the file / etc / ethers up-to-date and to escape from 99% of lovers of freebies. Only dreamed of managed switches at that time, and certainly it was too expensive to put them as subscriber equipment (as the network developed 100% for the money of the students themselves, and the students, as we know, are not rich people)

Star

In 2004, a good opportunity turned up: one of the city providers offered, in exchange for peering between its network and the campus network, to connect all buildings on optics for free. Well, how to connect - the initiative group of students was directly involved in the installation of optics, and the techies of the provider just missed it. As a result, using this optics, we managed to build not a ring, but a star!

And then the idea was born - to put one good server, with several gigabit network cards, link all the links in one bridge and make one flat network, which would get rid of the headache with the address space cut on the subnet, and also allowed to control access from one places.

Since the PCI bus could not pump such traffic, and the required 6-8 Gigabit ports could not be obtained due to the lack of such a number of PCI slots on the motherboards, it was decided to use 2x Intel Quad Port Server Adapter on the PCI-X 133Mhz bus. These network cards had to take the Supermicro X6DHE-XG2 motherboard due to the presence of as many as three PCI-X 133, well, processors for it, Xeon 3Ghz 2 pcs (these are the ones that can be found on ark.intel.com in the Legacy Xeon section)

And it started: RHELAS 2.1 is installed on the server, the bridge is started up, the network is glued together into one large / 22. And here it turns out that if you restrict access to a couple of hundreds of addresses using rules like:

iptables -A FORWARD -s abcd -j REJECTthen the load on the server bounces to indecent values. The server can not cope?

Optimization 1

Search on the Internet suggests only the project that has appeared - ipset . Yes, it turned out that this is exactly what you need. In addition to the fact that it was possible to get rid of a large number of similar records in iptables, it became possible to bind IP-MAC using macipmap.

One of the peculiarities of the bridge was that the packet passing through the bridge in some cases fell into the FORWARD chain, and in some cases it did not. It turned out that packets that are routed between interfaces fall into FORWARD, and packets that “bridge” (that is, those entering into br0 right there and leaving br0) do not fall.

The solution was to use the mangle table instead of filter.

It also turned out to make the binding of a specific address not only to the MAC, but also to the hostel in which the network user lived. It was done using the iptables physdev module and looked like this:

iptables -t mangle -A PREROUTING -m physdev --physdev-in eth1 -m set --set IPMAC_H1 src -j ACCEPT iptables -t mangle -A PREROUTING -m physdev --physdev-in eth2 -m set --set IPMAC_H2 src -j ACCEPT ... iptables -t mangle -A PREROUTING -i br0 -j DROP Since the optical "star" was built using opto-converters, then each building was "watched" by its own network card. And it was necessary to add only the MAC-IP pairs of the first hostel users to the IPMAC_H1 set, the second hostel set to the IPMAC_H2 set, and so on.

The order of the rules themselves inside iptables tried to make such that the above were those rules describing hostels, where users are more active, which allowed packages to pass chains faster.

')

Optimization 2

As a result, all the intercommunication and external traffic eventually began to pass through the server, an idea emerged if the subscriber is disconnected, or if the IP-MAC pair does not match - to display the user with a certain page with information explaining why network does not work. It seemed that it is not difficult. Instead of DROP packages going to port 80, it was necessary to make a MARK package, and then redirect the marked packages using DNAT to the local web server.

The first problem turned out to be that if you simply redirect the packages to a web server, the web server in 99% of cases responds that the page was not found. Because, if the user went to ark.intel.com/products/27100 , and you wrapped it on your web server, it is unlikely that there will be a products / 27100 page, and you will at best get an error 404. Therefore, it was written simple a demon on C that issued to any request

Location: myserverruLater this crutch was replaced by a more beautiful solution with mod_rewrite.

The second, and most significant, problem was that as soon as the nat module was loaded into the kernel, the load jumped again. Of course, the conntrack table is to blame, and with so many connections and pps, the existing iron did not take out at peak load hours.

The server can not cope?

We start to think. The goal is quite interesting, but does not work on the existing hardware. Using

-t raw -j NOTRACK helped, but not much. The solution was found as follows: NAT packets are not on the central router, but on one of the old machines that still remained and were used for various services such as a p2p server, game server, jabber server, or even just standing idle. In the case of a surge in traffic on this server, in the worst case, the subscriber would not receive a message in the browser window that it is disabled (or that its IP does not match the registered MAC), and this would not affect the work of other network users. And in order to deliver user traffic to this server with NAT, the following command was used:iptables -t mangle -A POSTROUTING -p tcp --dport 80 -j ROUTE --gw abcdwhich simply replaced the gateway address and sent the packet further, bypassing the rest of the chains.

In general, it was very convenient to send “objectionable” packets in this way for processing to a third-party server, not worrying about this package passing other chains of the filter type, but with a change in the architecture of the kernel, this patch from patch-o-matic became unsupported.

Solution: mark the necessary packets with the 0x1 brand, then, using ip rule fw, send the packet to the “other” routing table, where the only route is our NAT server

iptables -t mangle -A PREROUTING -p tcp --dport 80 -j MARK --set-mark 0x1 ip route flush table 100 ip route add via abcd table 100 ip rule add fwmark 0x1 lookup 100 As a result, the “good” traffic was passed, and the “bad” users were shown a page with information about blocking. And also, in the event of an IP-MAC mismatch, the user could enter a username / password to renegotiate his current MAC.

Optimization 3

The action takes place at the time of pomegbyte traffic in the hostel. That is, in a cashless, internet-active and IT-advanced user environment. This means that simple IP-MAC bindings are no longer enough, and cases of Internet traffic theft are becoming ubiquitous.

The only sane cost option is vpn. But, given that by that time the campus network had a free peering with half a dozen city operators, it would not work to drive peering traffic through a vpn server, it simply would not take out. Of course, a widely spread method was possible: to the Internet - via vpn, to peering and LAN - a batch file with routes. But the batch file seemed to me a very ugly decision. The option with RIPv2 was considered, which at that time was “embedded” in most of the operating systems used, but there remained an open question about the authenticity of the announcements. Without additional configuration, anyone could send out routes, and in the then popular WindowXP and its “RIP Listener” there was no configuration at all.

Then an “asymmetric VPN” was “invented”. The client for accessing the Internet establishes the usual vpn-pptp connection to the server with a login / password, while unchecking the checkbox “Use gateway in remote network” in the settings. The client’s end of the tunnel was given the address 192.0.2.2, and all clients are the same, and as will be shown later, it didn’t matter at all.

On the VPN server side, the / etc / ppp / ip-up script has been modified, performed after authentication and interface raising

PATH=/sbin:/usr/sbin:/bin:/usr/bin export PATH LOGDEVICE=$6 REALDEVICE=$1 [ -f /etc/sysconfig/network-scripts/ifcfg-${LOGDEVICE} ] && /etc/sysconfig/network-scripts/ifup-post ifcfg-${LOGDEVICE} [ -x /etc/ppp/ip-up.local ] && /etc/ppp/ip-up.local "$@" PEERIP=`/usr/local/bin/getip.pl $PEERNAME` if [ $LOGDEVICE == $PEERIP ] ; then ip ro del $PEERIP table vpn > /dev/null 2>/dev/null& ip ro add $PEERIP dev $IFNAME table 101 else ifconfig $IFNAME down kill $PPPD_PID fi exit 0 That is, in the PEERIP variable from the database, the script pulled out the IP address that the user should have with PEERNAME (the login under which he connected), and if this address matches the IP from which the connection occurred (LOGDEVICE) to the VPN server, then All traffic to this IP is routed to the IFNAME interface via table 101. Also, in table 101, the default gateway is 127.0.0.1

All routed traffic is wrapped in table 101 by rule

ip ru add iif eth0 lookup 101As a result, we find that the traffic that arrives at the vpn server and the next one is NOT on the vpn server (it will go to the local table, which is the default) goes to table 101. And there it will “spread out” on ppp-tunnels. And if you do not find the right, then just drop it.

An example of what is obtained in the result in the plate 101 (ip r sh ta 101)

[root@vpn ~]# ip route show table 101 abcd dev ppp2 scope link abce dev ppp6 scope link abcf dev ppp1 scope link default via 127.0.0.1 dev lo Now it only remains to turn all traffic from the “Internet” interface to the vpn gateway on the central router, and users will not have it without connecting to the VPN Internet. And the rest of the traffic (peer-to-peer) will run IPoE (that is, in the “normal” way), and will not load the VPN server. When additional peering networks appear, the user will not have to edit any bat files. Again, access to some internal resources, even though IP, even though ports, can be done via VPN, it’s enough to wrap the package on the VPN server.

When using this technique, an attacker of course can send traffic to the Internet by replacing IP-MAC, but cannot get anything back, since the vpn-tunnel is not raised. What almost completely kills the meaning of the substitution - “sitting on the Internet” from someone else's IP is now impossible.

In order for client computers to receive packets through a vpn-tunnel, it was necessary to set the key IPEnableRouter = 1 in the registry in Windows, and rp_filter = 0 in linux. Otherwise, the OS did not accept replies from other interfaces to which the requests were sent.

The cost of the implementation is almost zero, at ~ 700 simultaneous connections to the vpn hawatlo server of the celeron 2Ghz level, since the Internet traffic inside ppp at the time of the megabyte tariffs was not very large. At the same time, the peering traffic ran at speeds up to 6 Gbit / s in total (via Xeon on S604)

Works

It worked all this miracle for about 8 years. In 2006, RHELAS 2.1 was replaced with a freshly hung CentOS 4. The central switches in the buildings were changed to DES-3028, DES-1024 remained subscriber. Access control on the DES-3028 could not be done properly. In order to bind the ip-mac to the port using the ACL, there were not enough 256 entries, because in some hostels there were more than 300 computers. Changing equipment became a problem, since the university “legalized” the network, and now it was necessary to pay for it at the university’s cash desk, when money was not allocated for equipment back, and if it was allocated, it was very sparingly, after a year and through the competition (when people do not buy what is needed, but what is cheaper, or where the rollback is greater).

Server broke

And then the server broke. Rather, the motherboard burned (according to the conclusion from the workshop - the north bridge died). Need to collect something to replace, no money. That is nice to be free. And so that you can insert a PCI-X network card. Fortunately, an acquaintance gave a server decommissioned from a bank, it was just a couple of PCI-X133 slots. But the motherboard is single-processor, and there is not Socket 478 Pentium 4 3Ghz in it

We throw the screws, network cards. We start - it seems to work.

But softirq “eats” 90% in total from two pseudo-cards (there is one core in the processor, and hyper-trading is turned on), ping jumps to 3000, even the console “tupit” to impossibility.

It would seem that here it is, the server is outdated, it's time to rest.

Optimization 4

I arm myself with oprofile, I start “mowing the excess”. In general, oprofile in the process of “communication” with this server was used quite often, and more than once it was rescued. For example, even using ipset I try to use ipmap, and not iphash (if possible), since with oprofile you can see how dramatic the difference in performance is. According to my data, it turned out to be two orders of magnitude, that is, it swam from 200 to 400 times. Also, when calculating traffic at different times, I switched from ipcad-ulog to ipcad-pcap, and then to nflow, focusing on profiling. ipt_NETFLOW no longer used, so entered the century of “unlimited Internet”, and our top-level netflow provider writes for SORM or not - its problems. Actually, using oprofile revealed that ip_conntrack was the main resource eater when turning on nat.

In general, oprofile this time tells me that 60% of the processor cycles are taken by the e1000 kernel module (network card). So what to do with it? Recommended in e1000.txt

options e1000 RxDescriptors=4096,4096,4096,4096,4096,4096,4096,4096,4096,4096 TxDescriptors=4096,4096,4096,4096,4096,4096,4096,4096,4096,4096 InterruptThrottleRate=3000,3000,3000,3000,3000,3000,3000,3000,3000,3000inscribed in 2005.

A quick look at git.kernel.org/cgit/linux/kernel/git/stable/linux-stable.git for any significant changes in the e1000 did not produce results (that is, there are of course some changes, but either fixing errors or placing gaps in code). In any case, the kernel still updated, but it did not give results.

In the kernel,

CONFIG_HZ_100=y is also worth it; with a larger value, the results are even worse.Oprofile also claims that the bridge module occupies a rather large proportion of cycles. And, it would seem, there is nowhere without it, since IP addresses are spread out in disarray across several buildings, and it is no longer possible to split them into segments again (the option to merge everything into one segment without a server is not considered, as control is lost)

I'm thinking of breaking the bridge, and using proxy_arp. Especially since long ago I wanted to do this, after detecting bugs in DES-3028 with flood_fdb. In principle, it is possible to load all addresses in the routing table in the form:

ip route add abc1.d1 dev eth1 src 1.2.3.4 ip route add abc1.d2 dev eth1 src 1.2.3.4 ... ip route add abc2.d1 dev eth2 src 1.2.3.4 ip route add abc2.d2 dev eth2 src 1.2.3.4 ... because it is known which subscriber should be where (stored in the database)

But I also wanted to realize the IP-MAC binding not only to the building, but also to the nodal switch port on the building (I repeat, there are DES-1024 subscribers for subscribers)

And then reach the hands to sort out with dhcp-relay and dhcp-snooping.

On switches included:

enable dhcp_relay config dhcp_relay option_82 state enable config dhcp_relay option_82 check enable config dhcp_relay option_82 policy replace config dhcp_relay option_82 remote_id default config dhcp_relay add ipif System 10.160.8.1 enable address_binding dhcp_snoop enable address_binding trap_log config address_binding ip_mac ports 1-28 mode acl stop_learning_threshold 500 config address_binding ip_mac ports 1-24 state enable strict allow_zeroip enable forward_dhcppkt enable config address_binding dhcp_snoop max_entry ports 1-24 limit no_limit config filter dhcp_server ports 1-24 state enable config filter dhcp_server ports 25-28 state disable config filter dhcp_server trap_log enable config filter dhcp_server illegal_server_log_suppress_duration 1min On the server, I brought interfaces out of the bridge, removed the IP addresses on them (interfaces without IP), turned on arp_proxy

Isc-dhcp setup

log-facility local6; ddns-update-style none; authoritative; use-host-decl-names on; default-lease-time 300; max-lease-time 600; get-lease-hostnames on; option domain-name "myserver.ru"; option ntp-servers myntp.ru; option domain-name-servers mydnsp-ip; local-address 10.160.8.1; include "/etc/dhcp-hosts"; # MAC-IP "host hostname {hardware ethernet AA:BB:CC:55:92:A4; fixed-address wxyz;}" on release { set ClientIP = binary-to-ascii(10, 8, ".", leased-address); log(info, concat("***** release IP " , ClientIP)); execute("/etc/dhcp/dhcp-release", ClientIP); } on expiry { set ClientIP = binary-to-ascii(10, 8, ".", leased-address); log(info, concat("***** expiry IP " , ClientIP)); execute("/etc/dhcp/dhcp-release", ClientIP); } on commit { if exists agent.remote-id { set ClientIP = binary-to-ascii(10, 8, ".", leased-address); set ClientMac = binary-to-ascii(16, 8, ":", substring(hardware, 1, 6)); set ClientPort = binary-to-ascii(10,8,"",suffix(option agent.circuit-id,1)); set ClientSwitch = binary-to-ascii(16,8,":",substring(option agent.remote-id,2,6)); log(info, concat("***** IP: " , ClientIP, " Mac: ", ClientMac, " Port: ",ClientPort, " Switch: ",ClientSwitch)); execute("/etc/dhcp/dhcp-event", ClientIP, ClientMac, ClientPort, ClientSwitch); } } option space microsoft; # - option microsoft.disable-netbios-over-tcpip code 1 = unsigned integer 32; if substring(option vendor-class-identifier, 0, 4) = "MSFT" { vendor-option-space microsoft; } shared-network HOSTEL { subnet 10.160.0.0 netmask 255.255.248.0 { range 10.160.0.1 10.160.0.100; # option routers 10.160.1.1; option microsoft.disable-netbios-over-tcpip 2; } subnet abc0 netmask 255.255.252.0 { option routers abcd; option microsoft.disable-netbios-over-tcpip 2; } subnet 10.160.8.0 netmask 255.255.255.0 { # dhcp-relay } } In the dhcp-event file, agent.circuit-id, agent.remote-id, IP and MAC are checked for validity, and if everything is ok, they will add them to this address through the necessary interface

Primitive example dhcp-event:

#hostel 1 if ($ARGV[3] eq '0:21:91:92:d7:55') { system "/sbin/ip ro add $ARGV[0] dev eth1 src abcd"; } #hostel 2 if ($ARGV[3] eq '0:21:91:92:d4:92') { system "/sbin/ip ro add $ARGV[0] dev eth2 src abcd"; } here, only $ ARGV [3] is checked (that is, agent.remote-id, or the MAC switch, through which the DHCP request is received), but you can also check all other fields by getting their valid values, for example, from the database

As a result, we obtain:

1) a client not requesting an address via DHCP - does not go beyond its unmanaged switch, it is not passed by IP-MAC-PORT-BINDING;

2) a client whose MAC is known (available in the database), but the request does not match the port or the switch — will receive an IP associated with this MAC, but the route to it will not be added, respectively, proxy_arp will “respond” that the address is already taken and the address will be immediately released;

3) a client whose MAC is not known will receive an address from the temporary pool. These addresses are redirected to a page with information, here you can re-register your MAC using a login / password;

4) and finally the client, whose MAC is known, and matched to the switch and the port, will receive its address. Dhcp-snooping will add a dynamic binding to the impb table on the switch, the server will add a route to this address through the necessary interface of the former bridge.

When the lease is terminated or the address is released, the / etc / dhcp / dhcp-release script is called, the contents of which are utterly primitive:

system "/sbin/ip ro del $ARGV[0]"; There is a small flaw in security, specifically in p.2. If you use a non-standard dhcp-client that does not check whether the address issued by the dhcp server is busy, the address will not be released. Of course, the user will not be able to access the external network, beyond the router, since the server will not add a route to this address through the necessary interface, but the switch unlocks this MAC-IP pair on its port.

You can bypass this flaw by using classes in dhcpd.conf, but this significantly complicates the configuration file, and, accordingly, increases the load on our old server. Because for each subscriber you have to create your own class, with a rather complicated condition for hitting it, and then your own pool. Although plans to try in practice, how much it will increase the load.

Thus, it turned out that the compliance of the IP-MAC pair is now "monitored" by DHCP when issuing the address, access from "invalid" MAC-IP is limited by the switch. From the server, it was now possible to remove not only the bridge, but also macipmap, replacing 6 sets (from “Optimization 1”) with one ipmap, which contains all open IP addresses. As well as removing -m physdev.

More server interfaces moved from promisc mode to normal, which also slightly reduced the load.

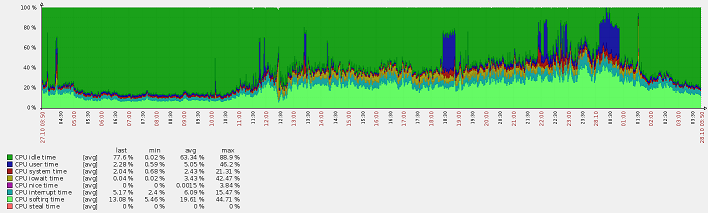

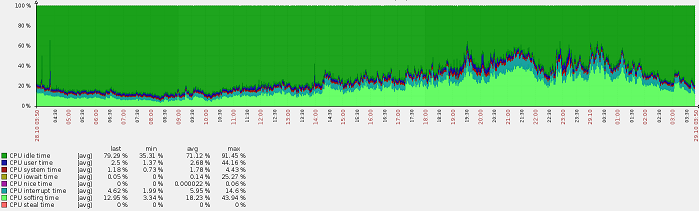

Namely, the whole procedure with disassembling the bridge reduced the total load on the server by almost 2 times ! Softirq is now not 50-100%, but 25-50%. At the same time, access control to the network has only become better.

Optimization 5

After the last optimization, although the load dropped noticeably, a strange thing was noticed: iowait increased. Not that much, from 0-0.3% to 5-7%. This is taking into account the fact that there are practically no disk operations on this server at all - it simply throws sachets.

(blue user time - kernel compilation)

iostat showed that there is a constant load on the disk in 800-820 Blk_wrtn / s

Started the search for processes that could be engaged in writing. Performance

echo 1 > /proc/sys/vm/block_dump gave a strange result: the culprits were

kjournald(483): WRITE block 76480 on md0 md0_raid1(481): WRITE block 154207744 on sdb2 md0_raid1(481): WRITE block 154207744 on sda3 Ext3 is in mode

data=writeback, noatime, and nothing is written to the disk, except perhaps for logs. But what logs were written yesterday - they are written today, their volume has not increased, that is, iowait was also not to increase.I started to scroll through the steps in my head what I was doing and what could have influenced iowait. As a result, I stopped syslog - and iowait plummeted to ~ 0%.

In order for dhcp not to clutter up the messages with its messages, I sent them to the local6 log-facility, and wrote in syslog.conf:

*.info;mail.none;authpriv.none;cron.none;local6.none /var/log/messages local6.info /var/log/dhcpd.log it turned out that when writing through syslog, sync is done for each line. There are quite a lot of requests to the dhcp server, a lot of events are generated that get to the log, and a lot of sync is called.

Fix on

local6.info -/var/log/dhcpd.log in my case, iowait reduced 10-100 times, instead of 5-7%, it was 0-0.3%.

Optimization result:

Why this article.

First, perhaps someone will draw from it useful solutions. I did not discover America here, although most of the events described here were in the pre-gage era, and the “google recipes” didn’t help much, reached the whole with their head.

Secondly, we constantly have to deal with the fact that instead of optimizing the code, developers are working on building up computing power, and this article can be an example of that, if you wish, you can find errors in your seemingly proven code many times, and cope with them . 90% of the site developers check the work of the site on their machine, put it into production. Then the whole thing slows down under load. Teasing the admin server. By optimizing the server in this case, little can be achieved if the code is not originally written optimally. And bought a new server, or another server and balancer. Then balancer balancer and so on. And the code inside was not optimal, and it remains forever. Of course, I understand that in the current realities, code optimization is more expensive than extensive capacity building,but with the advent of a huge number of “homegrown” IT people, the volumes of the “bad code” will become serious.

From nostalgic: I have an old laptop on the shelf, P3-866 2001gv (Panasonic CF-T1 if someone says something to it), but now it is impossible even to look at sites, although there is no more sense on these sites for 10 years. I love to remember interesting toys on the ZX-Spectrum on the gameplay is not inferior to today's monsters that require 4 cores / 4giga

Source: https://habr.com/ru/post/199480/

All Articles