How the "magic" of an error correction code more than 50 years old can speed up flash memory

An error correction code (ECC) is added to the transmitted signal and makes it possible not only to detect transmission errors but also, as the name suggests, to correct them without asking the transmitter to resend the data. This lets data flow at a constant rate, which matters in many cases. For example, when you watch digital television, staring at a frozen picture while the receiver keeps re-requesting data would be very tedious.

In 1948, Claude Shannon published his famous work on information transmission, which, among other things, formulated a theorem on transmitting information over a noisy channel. After its publication, quite a few error correction algorithms were developed that add only a small amount of redundant data. One of the most common families is based on the low-density parity-check code (LDPC code), now widespread thanks to its ease of implementation.


Claude Shannon

LDPC was first presented to the world at MIT by Robert Gray Gallager, an eminent specialist in communication networks. That was in 1960, and LDPC was ahead of its time. The vacuum tube computers common in those days rarely had enough power to work with LDPC efficiently. A computer capable of processing such data in real time occupied almost 200 square meters, which automatically made every LDPC-based algorithm uneconomical. So for almost 40 years simpler codes were used, while LDPC remained more of an elegant theoretical construction.

Robert Gallager

In the mid-1990s, engineers working on algorithms for satellite digital television transmission dusted off LDPC and began to use it, since computers by then had become both more powerful and smaller. By the early 2000s, LDPC was becoming ubiquitous, because it corrects errors very efficiently in high-speed data transmission under heavy interference (for example, strong electromagnetic noise). The spread was also helped by the emergence of specialized systems on chips (SoCs) used in WiFi equipment, hard disks, SCSI controllers, and so on: such SoCs are optimized for their tasks, and LDPC calculations pose no problem for them at all. In 2003, LDPC displaced turbo codes and became part of DVB-S2, the standard for satellite digital television transmission. A similar replacement took place in the DVB-T2 standard for digital terrestrial television.

It is worth noting that very different solutions are built on LDPC; there is no single "correct" reference implementation. LDPC-based solutions are often incompatible with one another, and code developed for, say, satellite television cannot simply be ported to hard drives. Pooling the efforts of engineers from different fields usually brings many advantages, and LDPC "in general" is not a patented technology, yet assorted know-how, proprietary technologies, and corporate interests get in the way. Most often such cooperation is possible only within a single company. One example is LSI's HDD read channel solution, TrueStore®, which the company has offered for the past 3 years. After acquiring SandForce, LSI engineers began working on the SHIELD™ error correction algorithms (based on LDPC) for SSD controllers. No algorithms were ported directly to SSDs, but the knowledge of the engineering team behind the HDD solutions greatly helped in developing the new ones.

At this point most readers will naturally ask: how does one LDPC algorithm differ from another? Most LDPC solutions start out as hard-decision decoders: such a decoder works with a strictly limited set of values (most often 0 and 1) and applies the error correction code at the slightest deviation from the norm. This approach does detect and correct errors in the transmitted data effectively, but when the error level is high, which sometimes happens with SSDs, such algorithms can no longer cope. As you may remember from our previous articles, every flash memory accumulates more errors as it is used. This inevitable process has to be taken into account when developing error correction algorithms for SSDs. So what can be done as the number of errors grows?
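To make the hard-decision idea concrete, here is a minimal sketch of a bit-flipping decoder. It is an illustration only, not the decoder of any real product: the parity-check matrix `H` is a toy 3x3 product code chosen for readability (real LDPC matrices are far larger and sparser), and the function names are hypothetical.

```python
# Toy parity-check matrix (an assumption for illustration): nine bits laid out
# in a 3x3 grid, checks 0-2 cover the rows, checks 3-5 cover the columns.
# Every bit takes part in only two checks.
H = [
    [1, 1, 1, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 1, 1, 1, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 1, 1, 1],
    [1, 0, 0, 1, 0, 0, 1, 0, 0],
    [0, 1, 0, 0, 1, 0, 0, 1, 0],
    [0, 0, 1, 0, 0, 1, 0, 0, 1],
]


def syndrome(H, word):
    """Return one bit per parity check: 0 = satisfied, 1 = violated."""
    return [sum(h * b for h, b in zip(row, word)) % 2 for row in H]


def bit_flip_decode(H, received, max_iters=10):
    """Hard-decision bit flipping: the decoder sees only 0s and 1s.

    On each pass it counts, for every bit, how many violated checks the bit
    takes part in, then flips all bits that share the highest count.
    Returns (word, ok), where ok tells whether all checks are satisfied.
    """
    word = list(received)
    for _ in range(max_iters):
        s = syndrome(H, word)
        if not any(s):
            return word, True
        votes = [
            sum(s[i] for i in range(len(H)) if H[i][j])
            for j in range(len(word))
        ]
        worst = max(votes)
        word = [b ^ 1 if v == worst else b for b, v in zip(word, votes)]
    return word, not any(syndrome(H, word))


codeword = [1, 1, 0, 1, 0, 1, 0, 1, 1]  # satisfies every check in H

one_error = list(codeword)
one_error[4] ^= 1                        # a single flipped bit
fixed, ok = bit_flip_decode(H, one_error)

two_errors = list(codeword)
two_errors[0] ^= 1
two_errors[4] ^= 1                       # two flipped bits
stuck, ok2 = bit_flip_decode(H, two_errors)
```

With one flipped bit the decoder restores the codeword. With the two flipped bits chosen above, this toy decoder keeps flipping the same four suspects back and forth and gives up, which mirrors, on a very small scale, the way hard-decision decoding stops coping once errors pile up.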

This is where soft-decision LDPC comes in, which is essentially "more analog." Such algorithms "look" deeper than hard-decision ones and have a richer set of capabilities. The simplest example is an attempt to read the data again using a different voltage, much as we often ask someone to repeat a phrase louder. To continue the human-conversation metaphor, here is an example of a more complex correction algorithm. Imagine speaking English with someone who has a strong accent. Here the accent acts as the interference. Your interlocutor has said a long phrase that you did not understand. The role of soft-decision LDPC is played by a few short leading questions that let you clarify the meaning of the whole phrase you did not catch at first. Soft-decision solutions often use complex statistical algorithms to rule out false positives. In general, as you have probably gathered, such solutions are noticeably harder to implement, but they often show much better results than hard-decision ones.
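The re-read idea can be sketched in a few lines. The example below is a deliberately simplified stand-in for soft-decision decoding: production decoders typically run belief propagation or min-sum over log-likelihood ratios, whereas this sketch only uses a per-bit confidence to decide which suspect bit to flip. The cell voltages, the reference voltages, the toy matrix `H`, and all function names are assumptions made for illustration.

```python
# Toy 3x3 product code (hypothetical): checks 0-2 cover rows, 3-5 cover columns.
H = [
    [1, 1, 1, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 1, 1, 1, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 1, 1, 1],
    [1, 0, 0, 1, 0, 0, 1, 0, 0],
    [0, 1, 0, 0, 1, 0, 0, 1, 0],
    [0, 0, 1, 0, 0, 1, 0, 0, 1],
]


def syndrome(H, word):
    """Return one bit per parity check: 0 = satisfied, 1 = violated."""
    return [sum(h * b for h, b in zip(row, word)) % 2 for row in H]


def soft_read(voltage, refs=(0.4, 0.5, 0.6)):
    """Read one cell several times with shifted reference voltages.

    Returns (bit, confidence): the majority vote of the reads and how
    strongly they agree (3 = unanimous, 1 = split) for three references.
    """
    ones = sum(voltage > r for r in refs)
    bit = 1 if 2 * ones > len(refs) else 0
    return bit, abs(2 * ones - len(refs))


def soft_flip_decode(H, bits, conf, max_iters=10):
    """Flip one bit per pass: the bit in the most violated checks,
    with ties broken in favour of the least confident read."""
    word = list(bits)
    for _ in range(max_iters):
        s = syndrome(H, word)
        if not any(s):
            return word, True
        votes = [
            sum(s[i] for i in range(len(H)) if H[i][j])
            for j in range(len(word))
        ]
        j = max(range(len(word)), key=lambda k: (votes[k], -conf[k]))
        word[j] ^= 1
    return word, not any(syndrome(H, word))


codeword = [1, 1, 0, 1, 0, 1, 0, 1, 1]
# Made-up cell voltages: healthy cells sit near 0.1 (bit 0) or 0.9 (bit 1);
# cells 0 and 4 have drifted towards the middle and read back wrong.
voltages = [0.45, 0.9, 0.1, 0.9, 0.55, 0.9, 0.1, 0.9, 0.9]

reads = [soft_read(v) for v in voltages]
bits = [b for b, _ in reads]
conf = [c for _, c in reads]
decoded, ok = soft_flip_decode(H, bits, conf)
```

Here the extra reads mark cells 0 and 4 as unreliable, so the decoder knows which of the equally suspicious bits to try first and recovers the codeword despite two errors.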

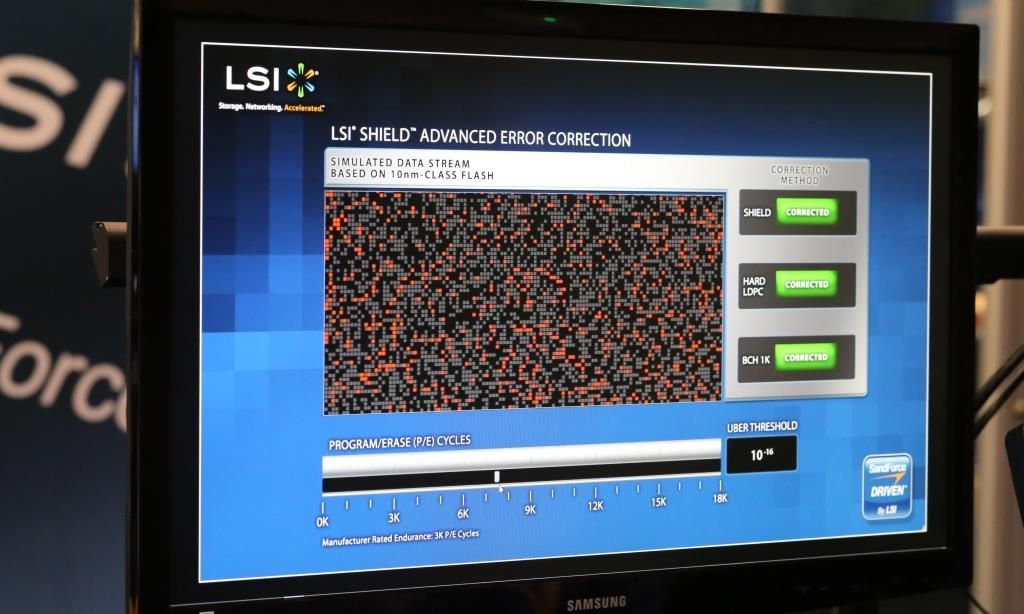

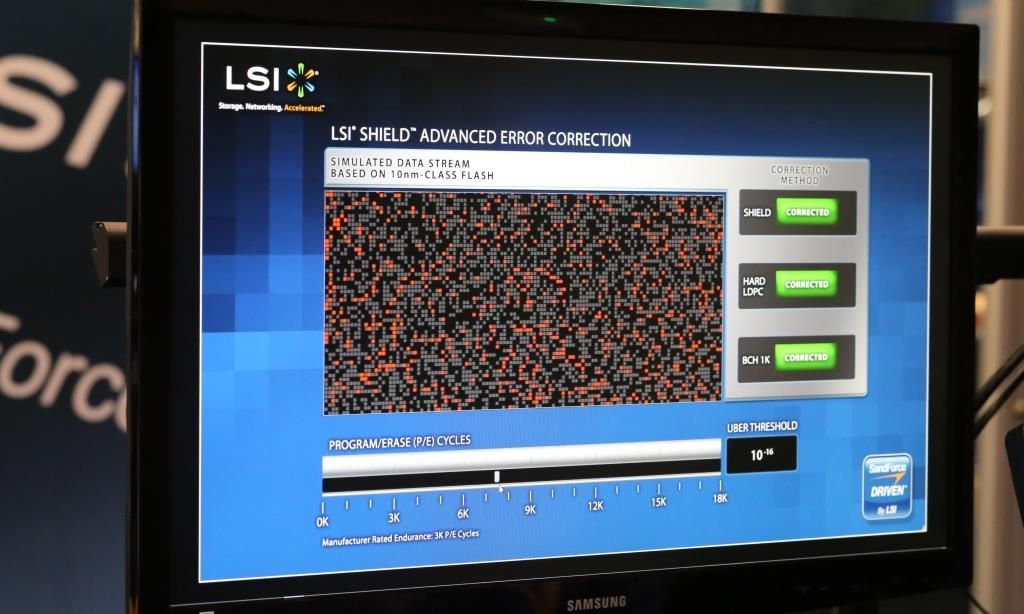

In 2013, at the Flash Memory Summit in Santa Clara, California, LSI presented its SHIELD advanced error correction technology. Combining hard- and soft-decision approaches, DSP SHIELD offers a number of unique optimizations for future flash memory technologies. For example, Adaptive Code Rate technology changes the amount of space allocated to ECC so that it takes up as little as possible at first and grows dynamically as the number of errors inevitably rises, as is characteristic of SSDs.
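The trade-off behind an adaptive code rate can be shown with simple arithmetic. The sketch below is not a description of how SHIELD works internally, which the article does not detail; the error-rate tiers, parity sizes, and function names are invented purely to illustrate the idea of spending more space on ECC as the flash wears.

```python
# Hypothetical tiers: (highest raw bit error rate handled,
#                      parity bytes stored per 1024 bytes of user data).
# All numbers are made up for illustration.
TIERS = [(1e-4, 56), (1e-3, 112), (1e-2, 224)]


def parity_bytes_for(raw_bit_error_rate):
    """Pick the smallest parity size whose tier covers the observed error rate."""
    for limit, parity in TIERS:
        if raw_bit_error_rate <= limit:
            return parity
    raise ValueError("error rate is beyond what this sketch can protect")


def code_rate(data_bytes, parity_bytes):
    """Fraction of stored bytes that carry user data rather than ECC."""
    return data_bytes / (data_bytes + parity_bytes)


# A fresh drive with few errors gets a high code rate (little ECC overhead);
# a worn drive trades some of that space for stronger protection.
for rber in (5e-5, 5e-4, 5e-3):
    parity = parity_bytes_for(rber)
    print(f"error rate {rber:g}: {parity} parity bytes, "
          f"code rate {code_rate(1024, parity):.3f}")
```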

As you can see, different LDPC solutions work very differently and offer different functions and capabilities, which largely determine the quality of the final product.

Source: https://habr.com/ru/post/199360/
