Oriense. Development of devices to help the blind and visually impaired

The project's history goes back to 2006.

That year, an active member of the society of the blind approached a St. Petersburg scientific research institute with a proposal to create a device to help blind and visually impaired people.

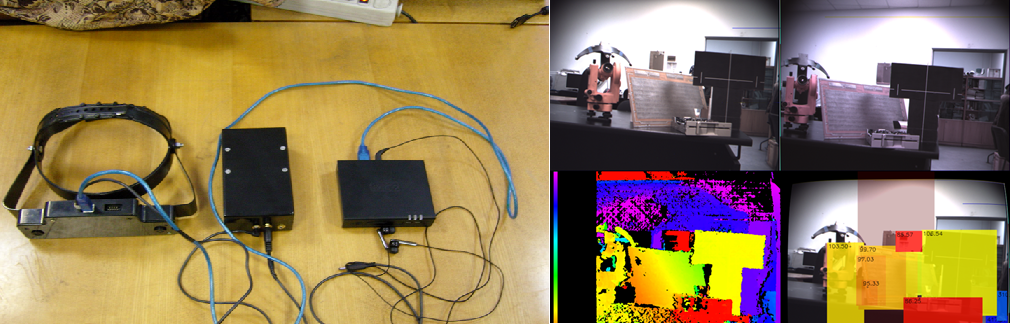

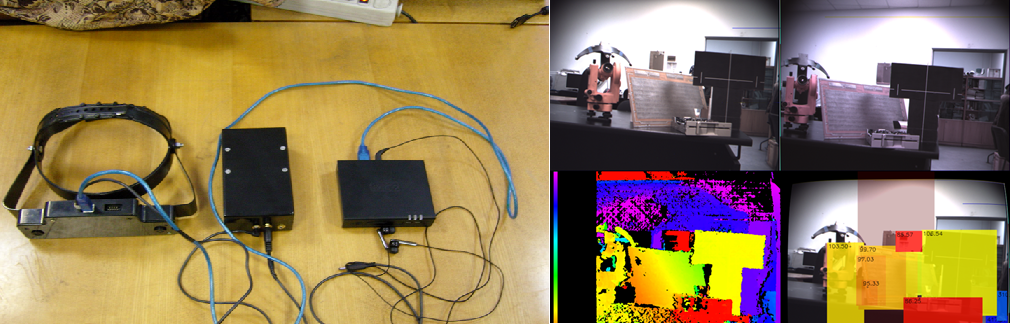

One of the founders of today's Oriense headed a department there at the time. The department worked on robot vision, among other things, and had developed its own stereo camera. It was decided to build the device around that camera: a wearable computer processes data from two cameras and two ultrasonic sonars and delivers prompts to headphones through a speech synthesizer.

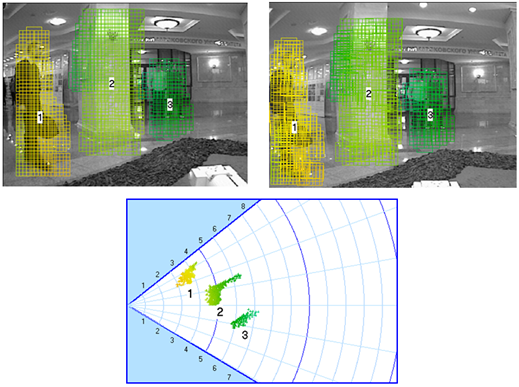

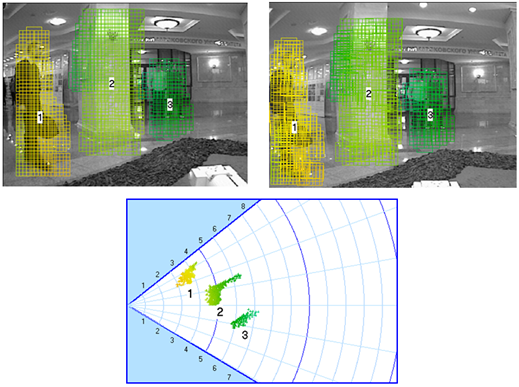

The depth map was supposed to be computed by a separate Altera-based stereo processor, but that never happened: the processor only handled stereo input. The software on the onboard computer built a point cloud, segmented it into objects, classified them in a simple way, assessed the degree of danger and issued warnings.
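The processing chain described above (point cloud, segmentation, simple classification, danger assessment, warnings) can be sketched roughly as follows. This is only an illustration, not the original software: the greedy clustering, the height and distance thresholds, and all names (`Obstacle`, `segment`, `classify`, `danger`, `build_warnings`) are assumptions made for the example.

```python
from dataclasses import dataclass
from math import dist
from typing import List, Tuple

# Hypothetical point format: (x, y, z) in metres, where z is the distance ahead.
Point = Tuple[float, float, float]


@dataclass
class Obstacle:
    points: List[Point]

    @property
    def distance(self) -> float:
        # Distance to the nearest point of the object.
        return min(p[2] for p in self.points)

    @property
    def height(self) -> float:
        ys = [p[1] for p in self.points]
        return max(ys) - min(ys)


def segment(cloud: List[Point], gap: float = 0.3) -> List[Obstacle]:
    """Greedy one-pass clustering: a point joins the first cluster that
    already holds a point within `gap` metres, otherwise it starts a new one.
    (Assumed technique; clusters are never merged afterwards.)"""
    clusters: List[List[Point]] = []
    for p in cloud:
        for c in clusters:
            if any(dist(p, q) <= gap for q in c):
                c.append(p)
                break
        else:
            clusters.append([p])
    return [Obstacle(c) for c in clusters]


def classify(o: Obstacle) -> str:
    # Assumed rule: anything taller than 0.5 m counts as a tall obstacle.
    return "tall obstacle" if o.height > 0.5 else "low obstacle"


def danger(o: Obstacle) -> str:
    # Assumed distance thresholds, in metres.
    if o.distance < 1.0:
        return "high"
    if o.distance < 2.5:
        return "medium"
    return "low"


def build_warnings(cloud: List[Point]) -> List[str]:
    """Return one text warning per object that is not low-danger."""
    result = []
    for o in segment(cloud):
        level = danger(o)
        if level != "low":
            result.append(f"{level}: {classify(o)} at {o.distance:.1f} m")
    return result


cloud = [(0.0, 0.0, 0.8), (0.0, 0.25, 0.8), (0.0, 0.5, 0.8),
         (0.0, 0.75, 0.8), (2.0, 0.0, 4.0)]
print(build_warnings(cloud))
```

In a real device the resulting strings would go to the speech synthesizer rather than to `print`.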

A prototype was built and the project attracted a fair amount of attention, but its performance and functionality were not good enough. Things went no further, and the project stalled without ever reaching users.

In 2010 work resumed, but we made hardly any progress and only slightly modernized the prototype. It finally became clear that bringing such a device to the point of real usefulness would take a lot of time and money. The approach also had to change: like many teams that have tried to build something similar, we had started from the available technologies rather than from the real needs of users. As a result, the project was shelved again, apart from my experiments with image recognition as part of my thesis and postgraduate grants from the government of St. Petersburg.

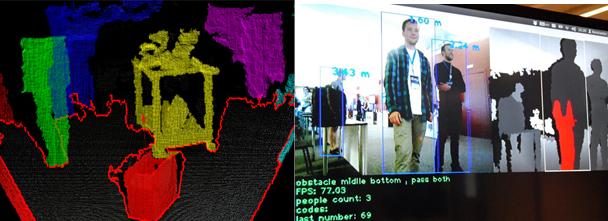

In 2012 came the third attempt, this time as a student and postgraduate project for the Microsoft Imagine Cup. The stereo camera was replaced with a Kinect (taped to a construction helmet), and recognition of objects, text and faces was quickly added to the software.

We won the St. Petersburg stage, and at the all-Russian stage we had to settle for a special prize from Intel. Then came BIT (the "Business of Innovative Technologies" competition): second place and a special prize, a trip to Slush. Next was the SumIT start-up school, where we were noticed by Sergey Fradkov and Mikhail Averbakh, managing partners of the iDealMachine start-up accelerator. We got into the accelerator, received $20,000 in pre-seed funding from the RSV Venture Partners fund and launched Oriense as a startup. That was in November 2012.

iDealMachine set up a proper development process for us. We finally went directly to blind and visually impaired people with questions and defined the problem and the required functionality. The prototype was brought to a state tolerable for demonstrations, and the Kinect was replaced with a chest-mounted PrimeSense Carmine. The device was finally tested by blind people at the center for medical and social rehabilitation of visually impaired persons.

Then we plunged into the usual start-up chores: looking for funding for the next stage, sorting out legal and organizational issues, and presenting a lot (including at DEMO Europe and the "Iron" VC Day Ingria). In June 2013 we received a state grant under the START program of the Assistance Fund, slightly expanded the team, purchased components and finally got down to development in earnest. We are now finishing the first version of the product, for which we have a small pre-order from the "Edukor" educational center. The center already has experience with technology for people with disabilities: a classroom equipped with devices for hands-free computer control.

Oriense-1 is still based on PrimeSense, so it will only work indoors, but at least it lets us try out the local navigation software and the design of the wearable device. An Odroid-U2 serves as the on-board computer, and the enclosure is currently being designed.

In parallel, we are working on a 3D sensor based on a stereo camera. We had planned to use Etron's stereo-vision chip, but negotiations have stalled for now. Using the Hardkernel board with the Exynos 4412 SoC as a base, we are developing our own single-board computer, which will handle all the sensors: stereo, GPS/GLONASS, a 9-axis position sensor and, optionally, a 3G modem. The stereo may be computed on the new 16-core Epiphany processors from Adapteva, which are used in the Parallella board. This would let us build a single-module device with full functionality: local navigation, global navigation, text reading, object recognition and so on.
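For readers unfamiliar with what "computing stereo" ultimately produces, here is a minimal sketch of the standard step that turns a disparity value into a distance for a rectified camera pair, Z = f * B / d. The function name and the focal length and baseline values are assumptions chosen for illustration; they are not the parameters of our camera.

```python
from typing import Optional


def depth_from_disparity(disparity_px: float,
                         focal_px: float,
                         baseline_m: float) -> Optional[float]:
    """Distance in metres to a point seen by a rectified stereo pair.

    disparity_px: horizontal shift of the point between left and right images
    focal_px:     focal length expressed in pixels (assumed value below)
    baseline_m:   distance between the two cameras in metres (assumed value below)
    """
    if disparity_px <= 0:
        # No valid match, or the point is effectively at infinity.
        return None
    return focal_px * baseline_m / disparity_px


# Hypothetical camera: 700 px focal length, 10 cm baseline.
for d in (70.0, 35.0, 7.0, 0.0):
    print(d, depth_from_disparity(d, focal_px=700.0, baseline_m=0.1))
```

The inverse relationship is why the same one-pixel matching error matters far more for distant objects than for near ones.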

We also wanted to release the GPS navigator as a separate device based on the Raspberry Pi and sensor boards; it currently exists as a prototype. The software was to be open, built on OpenStreetMap data and running on Linux, so that it could later be included in the full-featured device. Admittedly, there has been no progress on this software yet.

The most popular GPS navigation tool for blind people was the LoadStone GPS application for Symbian, but with the demise of that platform users are forced to switch to smartphones. There is a version of the OsmAnd Android application for the blind, OsmAnd Access, which was developed in Russia and includes part of the LoadStone functionality. A big drawback of smartphones is the lack of a physical keyboard, so we are now talking with the OsmAnd Access developers about creating a navigator that has a keyboard and is tailored to that application.

On November 1-2 we will be in Moscow at the Open Innovations Forum (thanks to the Russian Startup Rating giving us its highest rating, AAA), and on November 6-7 at the Webit Congress in Istanbul, with our own stand.

We are based in St. Petersburg. Our website is oriense.com and our email is info@oriense.com; we also have a Twitter account, a YouTube channel and the latest version of our presentation.

Thank you for your attention; we will be happy to hear your feedback.


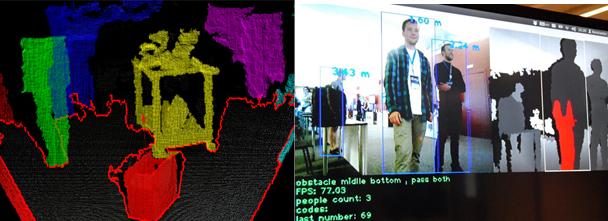


Source: https://habr.com/ru/post/199192/
