Zabbix: Backing up a small database

I will skip the long introduction about why data needs to be backed up; we all know what backups are for. Anyone who actively uses Zabbix has also thought about how to restore the database if it is damaged, has to be moved to a new server, and so on. Replication is clearly the best option for this, but not every organization can afford it. Here I will show how we solved the Zabbix backup problem. If you are interested, read on.

Warning: I do not consider the scheme described below ideal. This article is not meant as a reference implementation; I wrote it to get constructive criticism and useful suggestions for improvement.

When I first needed to back up the Zabbix database, I did not look far and chose a proven tool: mysqldump. It had served well with the databases of other web services such as Redmine, GLPI and Drupal, but for Zabbix it turned out to be completely unsuitable. Backups were made and no errors occurred, but then came the black day when a backup had to be restored. Loading a relatively small database from the dump took about two days. Given that the database was still in its infancy at the time, the downtime would only have grown in the future. That was absolutely unacceptable. That is when my eye fell on Percona XtraBackup.

Percona XtraBackup is an open source tool that makes backup copies of MySQL databases without locking them. It is used by Open Query, mozilla.org and Facebook, which suggests that it is not a raw, amateur product. I should note that in my case XtraBackup could not be run alongside a working Zabbix server because of the poor performance of the disk subsystem: sometimes errors occurred because XtraBackup could not keep up with the data that Zabbix was actively writing. So I decided to stop the Zabbix server for the duration of the backup. Today that takes about 6 minutes a day. All particularly critical triggers are tied to SNMP traps, which will still be processed once zabbix-server is started again, so for me this downtime is quite acceptable. In your case you may not need to stop the Zabbix daemon at all, and there will be no downtime.
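Stopping the server for the backup carries one risk: if the backup step fails, monitoring may stay down. Below is a minimal sketch of one way to guard against that, assuming a SysV-style `service` command. The function name `with_service_stopped` and the `SVC_CMD` variable are hypothetical and are not taken from the script shown later.

```bash
#!/bin/bash
# Hypothetical helper (not part of the original script): stop a service,
# run a command, then start the service again whatever the command returned.
# SVC_CMD is an assumption: the program used to control services.
SVC_CMD=${SVC_CMD:-service}

with_service_stopped () {
    local svc=$1
    shift
    # Refuse to run the command if the service could not be stopped.
    "$SVC_CMD" "$svc" stop || return 1
    "$@"
    local rc=$?
    # Always try to bring the service back, even after a failure.
    "$SVC_CMD" "$svc" start
    return "$rc"
}

# Example (commented out; needs root and a real backup command):
# with_service_stopped zabbix-server innobackupex --no-timestamp /home/zabbix/backups/xtra
```

The helper returns the exit status of the wrapped command, so the caller can still report a failed backup after the service has been restarted.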


Then I thought about what the perfect backup would be for me, and realized that the best backup is one you do not worry about, yet know is being made. I managed to achieve this: I do not worry about backups. I do not log in to the server every morning with my fingers crossed to check the log, the file sizes and the free space on the partition. But I know that backups are being made, because I receive all the necessary information by e-mail. This is done by a fairly simple script, which I want to put up for public review. Perhaps it has problems that I am not aware of?

Our Zabbix is set up to send e-mail notifications, and ssmtp is used for this. There are plenty of instructions for it on the Internet, and it is a separate topic anyway, so I will not dwell on it. Apart from Percona XtraBackup and ssmtp, nothing special is used: tar, gzip, sed and find are in every distribution. For reliability, the backup files are also copied to a remote server over NFS.
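When a message is handed to a sendmail-compatible command with the `-t` option, the recipient is read from the message headers, so the text must begin with `To:`, `From:` and `Subject:` lines followed by an empty line. Here is a minimal sketch of composing such a message. The function name `build_mail` is hypothetical and the addresses are placeholders.

```bash
#!/bin/bash
# Hypothetical helper: print a complete message (headers, blank line, body)
# suitable for piping into a sendmail-compatible command run with -t.
build_mail () {
    local to=$1 from=$2 subject=$3 body_file=$4
    printf 'To: %s\n' "$to"
    printf 'From: %s\n' "$from"
    printf 'Subject: %s\n' "$subject"
    printf '\n'            # empty line separates headers from the body
    cat "$body_file"
}

# Example (commented out; requires a configured mail transport):
# build_mail admin@host.com zabbixsender@host.com "Zabbix backup log" /tmp/mailfile.tmp | mail -t
```

Writing the headers first and the body afterwards avoids editing the file in place, at the cost of one extra function.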

The system on which it is used:

The script itself:

    #!/bin/bash

    DAY=`date +%Y%m%d`
    LOGFILE=/var/log/zabbix_backup.log
    # E-mail address that receives the report
    EMAIL=admin@host.com
    # Unlike the log file, the mail file is overwritten on every run;
    # the log file itself should be rotated with logrotate
    MAILFILE=/tmp/mailfile.tmp
    # Root directory for all backups
    BK_GLOBAL=/home/zabbix/backups
    # Directory for today's backup
    BK_DIR=$BK_GLOBAL/Zabbix_$DAY

    # set_date stores the current timestamp in DT
    set_date () {
        DT=`date "+%y%m%d %H:%M:%S"`
    }

    # Create today's backup directory
    mkdir $BK_DIR
    set_date
    echo -e "$DT Starting ZABBIX backup" > $MAILFILE
    service zabbix-server stop 2>>$MAILFILE

    # innobackupex writes all of its output to stderr, so it is redirected
    # to stdout, saved in full with tee, and only the key lines are passed
    # to the mail file by egrep.
    innobackupex --user=root --password=qwerty --no-timestamp $BK_DIR/xtra 2>&1 | tee /var/log/innobackupex.log | egrep "ERROR|innobackupex: completed OK" >>$MAILFILE
    # tee -a keeps the log of the first pass instead of overwriting it
    innobackupex --apply-log --use-memory=1000M $BK_DIR/xtra 2>&1 | tee -a /var/log/innobackupex.log | egrep "ERROR|innobackupex: completed OK" >>$MAILFILE

    service zabbix-server start 2>>$MAILFILE
    set_date
    echo -e "$DT Database backup finished" >> $MAILFILE

    set_date
    echo -e "$DT Archiving started" >> $MAILFILE
    cd $BK_DIR
    tar -cf $BK_DIR/zabbix_db_$DAY.tar ./xtra 2>>$MAILFILE
    rm -rf $BK_DIR/xtra
    cd /usr/share
    tar -cf $BK_DIR/zabbix_files_$DAY.tar ./zabbix 2>>$MAILFILE
    cd /etc
    tar -cf $BK_DIR/zabbix_etc_$DAY.tar ./zabbix 2>>$MAILFILE
    cd /
    gzip $BK_DIR/zabbix_db_$DAY.tar 2>>$MAILFILE
    gzip $BK_DIR/zabbix_files_$DAY.tar 2>>$MAILFILE
    gzip $BK_DIR/zabbix_etc_$DAY.tar 2>>$MAILFILE

    set_date
    echo -e "$DT Removing temporary files" >> $MAILFILE
    # gzip normally removes the .tar files itself; this is a safety net
    rm -f $BK_DIR/zabbix_db_$DAY.tar
    rm -f $BK_DIR/zabbix_files_$DAY.tar
    rm -f $BK_DIR/zabbix_etc_$DAY.tar

    set_date
    echo -e "$DT Mounting NFS share" >> $MAILFILE
    mount 192.168.1.30:/home/backups /mnt/nfs 2>>$MAILFILE

    set_date
    echo -e "$DT Copying archives to NFS" >> $MAILFILE
    mkdir /mnt/nfs/Zabbix_$DAY
    cp $BK_DIR/zabbix_db_$DAY.tar.gz /mnt/nfs/Zabbix_$DAY 2>>$MAILFILE
    cp $BK_DIR/zabbix_files_$DAY.tar.gz /mnt/nfs/Zabbix_$DAY 2>>$MAILFILE
    cp $BK_DIR/zabbix_etc_$DAY.tar.gz /mnt/nfs/Zabbix_$DAY 2>>$MAILFILE

    set_date
    echo -e "$DT Copying finished" >> $MAILFILE
    echo -e "$DT Removing backups older than 30 days" >> $MAILFILE
    find $BK_GLOBAL/* -type f -ctime +30 -exec rm -rf {} \; 2>>$MAILFILE
    find /mnt/nfs/* -type f -ctime +30 -exec rm -rf {} \; 2>>$MAILFILE
    # Remove the directories left empty by the previous step
    find $BK_GLOBAL/* -type d -name "*" -empty -delete 2>>$MAILFILE
    find /mnt/nfs/* -type d -name "*" -empty -delete 2>>$MAILFILE

    set_date
    echo -e "$DT Cleanup finished" >> $MAILFILE
    echo -e "$DT Unmounting NFS share\n" >> $MAILFILE
    umount /mnt/nfs 2>>$MAILFILE

    # Append the report to the permanent log, then turn it into a message
    cat $MAILFILE >> $LOGFILE
    sed -i -e "1 s/^/Subject: Zabbix backup log $DAY\n\n/;" $MAILFILE 2>>$LOGFILE
    sed -i -e "1 s/^/From: zabbixsender@host.com\n/;" $MAILFILE 2>>$LOGFILE
    sed -i -e "1 s/^/To: $EMAIL\n/;" $MAILFILE 2>>$LOGFILE
    echo -e "\n Contents of $BK_DIR:\n" >> $MAILFILE
    ls -lh $BK_DIR >> $MAILFILE
    echo -e "\n Free disk space:\n" >> $MAILFILE
    df -h >> $MAILFILE
    cat $MAILFILE | mail -t 2>>$LOGFILE

Files from the /etc/zabbix and /usr/share/zabbix directories are copied to preserve the settings and additional scripts used in Zabbix.
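The retention step of the script (delete files older than 30 days, then remove directories left empty) can be tried out safely in a throwaway directory before it is pointed at real backups. This is a minimal sketch, assuming GNU find and GNU touch. The function name `prune_old_backups` is hypothetical, and it uses `-mtime` rather than the script's `-ctime`, because modification time can be backdated for a test while change time cannot.

```bash
#!/bin/bash
# Hypothetical helper: delete files older than N days under a directory,
# then remove directories that were left empty. Uses -mtime instead of the
# script's -ctime so that the behaviour can be tested with backdated files.
prune_old_backups () {
    local root=$1 days=$2
    find "$root" -mindepth 1 -type f -mtime +"$days" -delete
    find "$root" -mindepth 1 -type d -empty -delete
}

# Trial run in a temporary directory (GNU touch is assumed for -d).
demo=$(mktemp -d)
mkdir "$demo/Zabbix_old" "$demo/Zabbix_new"
touch -d "40 days ago" "$demo/Zabbix_old/zabbix_db.tar.gz"
touch "$demo/Zabbix_new/zabbix_db.tar.gz"
prune_old_backups "$demo" 30
ls "$demo"      # only Zabbix_new should remain
rm -rf "$demo"
```

The `-mindepth 1` option keeps the root directory itself from being removed, even if every backup inside it has expired.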

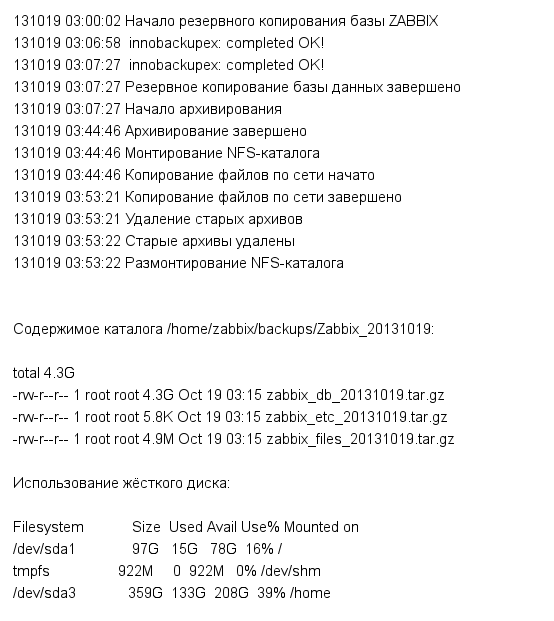

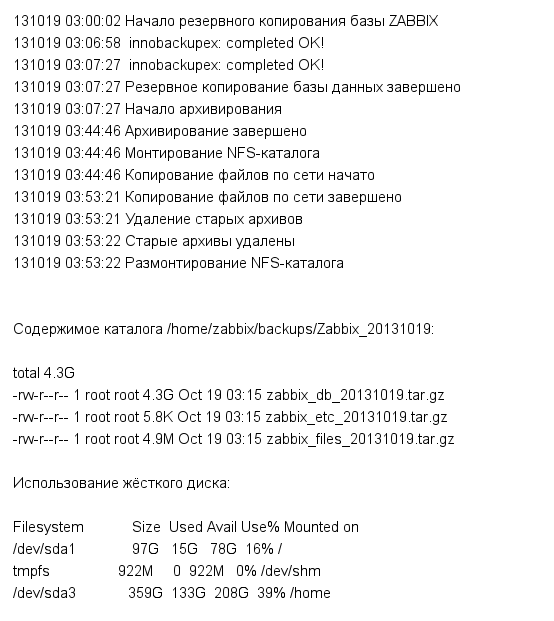

The text of the e-mail sent by the script.

To restore the database from a copy, it is enough to stop mysqld, replace the MySQL data directory with the one extracted from the archive, and start the MySQL daemon again. Work out for yourself how long that would take you, but I think it will be far less than two days.
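The restore steps can be written down as a short plan. The sketch below only prints the commands and does not execute them. It assumes the archive layout produced by the script (a `./xtra` directory inside `zabbix_db_YYYYMMDD.tar.gz`), a data directory of `/var/lib/mysql`, a service named `mysqld`, and files owned by `mysql:mysql`. These are assumptions to check on your own system, and the function name `print_restore_plan` is hypothetical.

```bash
#!/bin/bash
# Hypothetical helper: print (not execute) the restore steps for an archive
# produced by the backup script. The data directory, the service name and
# the mysql:mysql ownership are assumptions; check them on your system.
print_restore_plan () {
    local archive=$1
    local datadir=${2:-/var/lib/mysql}
    local workdir=${datadir}.restore
    cat <<EOF
service zabbix-server stop
service mysqld stop
mkdir $workdir
tar -xzf $archive -C $workdir
mv $datadir ${datadir}.old
mv $workdir/xtra $datadir
rmdir $workdir
chown -R mysql:mysql $datadir
service mysqld start
service zabbix-server start
EOF
}

# YYYYMMDD stands for the date of the backup being restored.
print_restore_plan /home/zabbix/backups/Zabbix_YYYYMMDD/zabbix_db_YYYYMMDD.tar.gz
```

The plan moves the old data directory aside instead of deleting it, so it can be put back if the restored copy does not start.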

I wish everyone working backups and stable operation of all their systems.

Source: https://habr.com/ru/post/198276/
