Large Hadron Collider: LHC circles and data path

The LHC (Large Hadron Collider) is not only a huge scientific experiment but also a very complex computer network.

In this post (and, if it turns out well and people find it interesting, in a series of posts) I will try to explain what happens to the "20 km high stack of CDs" that the collider produces every year. (By the way, the collider is currently shut down, and no new data will arrive for the next year or two.)

What happens to the data?

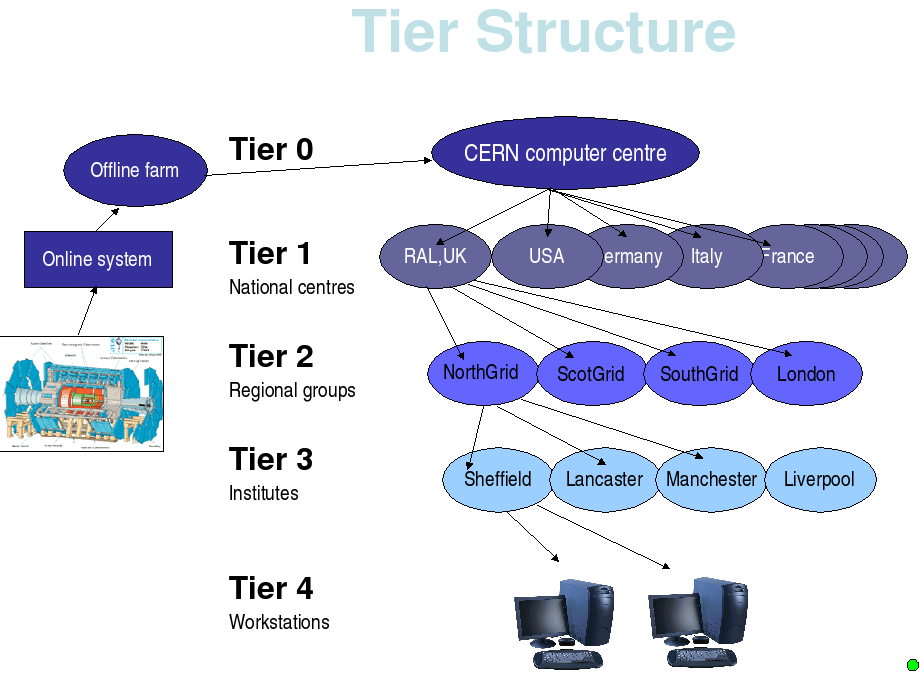

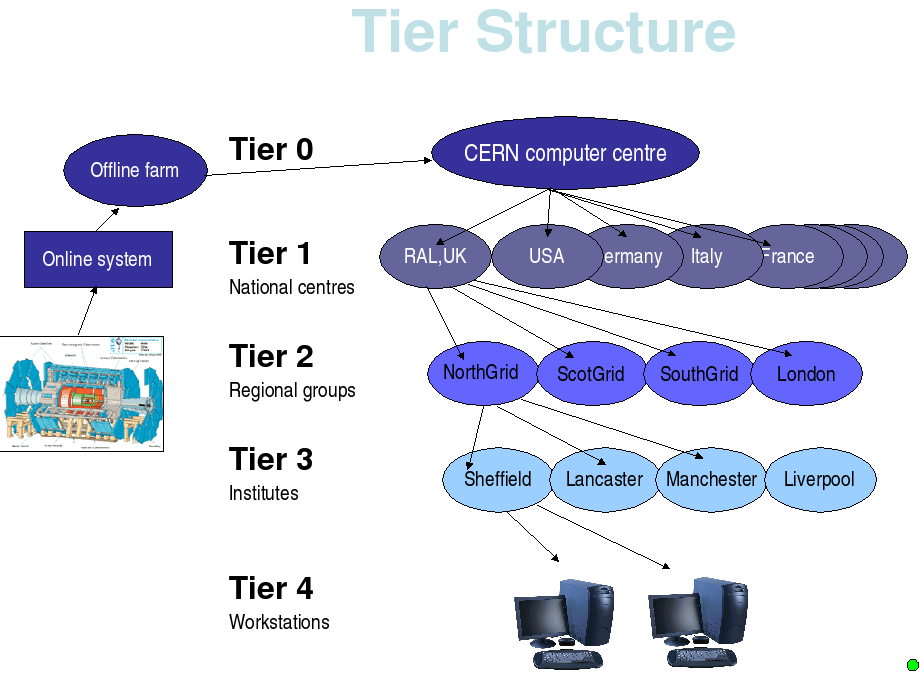

Picture borrowed from the University of Sheffield


The detectors of the four experiments (ATLAS, ALICE, LHCb, CMS) record the passage of elementary particles through them (events). What the detectors record is raw data (RAW data). The data flow is huge and very unevenly distributed over time: about 40 TB of raw data accumulates per day (~15 PB per year), but all of it is produced in just a few hours of running. At the zero level (Tier-0), the only thing that can be done with this stream is to save it for further processing. Once the data are saved, processing begins. The computing power of Tier-0 is not large, "only" ~50,000 cores, which is roughly 10% of the total computing power of the entire grid serving the LHC. At Tier-0 the data are pre-processed: natural noise is removed, and so on (unfortunately, I am not an expert in these matters). As a result, Tier-0 holds a complete copy of all the data ever received at the LHC. Its storage capacity is 83 PB on tape and 33 PB on disk.
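The two headline figures can be cross-checked with a few lines of arithmetic. The sketch below assumes decimal units (1 PB = 1000 TB) and, for the CD comparison, a 700 MB disc that is 1.2 mm thick; neither assumption comes from the post.

```python
# Back-of-envelope check of the raw-data figures quoted in the post.
# Assumptions (not from the post): decimal units, 700 MB CDs 1.2 mm thick.

DAILY_RAW_TB = 40          # raw data recorded per day, from the text
DAYS_PER_YEAR = 365

CD_CAPACITY_MB = 700       # assumed CD-R capacity
CD_THICKNESS_MM = 1.2      # assumed disc thickness


def yearly_volume_pb(daily_tb: float, days: int = DAYS_PER_YEAR) -> float:
    """Yearly volume in petabytes if `daily_tb` terabytes arrive every day."""
    return daily_tb * days / 1000


def cd_stack_height_km(volume_pb: float) -> float:
    """Height in kilometres of a stack of CDs holding `volume_pb` petabytes."""
    volume_mb = volume_pb * 1e9            # 1 PB = 10^9 MB
    discs = volume_mb / CD_CAPACITY_MB     # number of discs needed
    return discs * CD_THICKNESS_MM / 1e6   # 10^6 mm per km


if __name__ == "__main__":
    pb = yearly_volume_pb(DAILY_RAW_TB)
    print(f"{pb:.1f} PB per year")                    # 14.6 PB per year
    print(f"{cd_stack_height_km(pb):.0f} km of CDs")  # 25 km of CDs
```

With these assumptions, 40 TB a day comes to about 14.6 PB a year, and the CD stack works out to roughly 25 km, the same order of magnitude as the "20 km" mentioned at the start.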

These data are then distributed among 11 computing centers around the world, the Tier-1 centers (in Canada, Germany, Spain, France, Italy, the Netherlands, Taiwan, England, the USA (two centers), and a collaboration of northern European countries; a 12th is being set up in Russia). Each Tier-1 is connected to Tier-0 by a high-speed link (usually 2 Gb/s or faster).
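To get a feel for why the Tier-1 links matter, here is a rough transfer-time estimate. Purely for illustration, it assumes that one day's 40 TB is split evenly among the 11 Tier-1 centers, that "2 Gb/s" means 2 gigabits per second, and that the link runs at its full nominal speed with no protocol overhead; real traffic is certainly less tidy.

```python
# Rough transfer-time estimate for a Tier-0 -> Tier-1 link.
# Assumptions (not from the post): an even split between the 11 Tier-1
# centers, decimal units, "Gb/s" read as gigabits, no protocol overhead.

DAILY_RAW_TB = 40
TIER1_CENTERS = 11
LINK_GBIT_S = 2


def transfer_hours(volume_tb: float, link_gbit_s: float) -> float:
    """Hours needed to move `volume_tb` terabytes over a `link_gbit_s` link."""
    volume_gbit = volume_tb * 1000 * 8     # TB -> GB -> Gbit
    return volume_gbit / link_gbit_s / 3600


if __name__ == "__main__":
    share_tb = DAILY_RAW_TB / TIER1_CENTERS
    print(f"{share_tb:.2f} TB per Tier-1 per day")
    print(f"{transfer_hours(share_tb, LINK_GBIT_S):.1f} h at {LINK_GBIT_S} Gb/s")
```

Under those assumptions each center receives about 3.6 TB a day, which a 2 Gb/s link moves in roughly 4 hours. Pushing the whole 40 TB through a single such link would take about 44 hours, longer than the day in which it was recorded.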

Each Tier-1 also stores raw data on tape, and this is where the main data processing begins.

Each experiment uses the computing power of the tiers differently, but the essence is the same: from the detector data and the laws of physics, the trajectories of millions of particles and the pattern of beam collisions are reconstructed. Besides event reconstruction, the centers check how well various mathematical models agree with the experimental results.

Tier-1 centers have relatively large computing and storage capacity. For example, the English Tier-1 has about 10 PB of tape and disk storage and about 14,000 cores.

Not everyone who wants to take part in the experiments can afford this. Accordingly, smaller computing centers, Tier-2 (of which there are about 140) and Tier-3, "feed on" data from the Tier-1 centers.

Tier-2 centers no longer have their own tape storage; they only process data received from the Tier-1 in their region.
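The tier structure described above is essentially a tree: Tier-0 at the root, Tier-1 centers below it, and smaller centers feeding from a Tier-1 in their region. A minimal sketch of that model follows. The class, function, and site names are hypothetical, the rule that data only flow toward higher tier numbers is a simplification of the text, and treating Tier-3 as tape-less is an extrapolation; none of this describes the real grid software.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Site:
    """A computing center in a simplified (hypothetical) model of the tier tree."""
    name: str
    tier: int
    children: list[Site] = field(default_factory=list)

    @property
    def has_tape(self) -> bool:
        # Per the post, Tier-0 and Tier-1 keep raw data on tape and Tier-2
        # does not; this sketch also assumes Tier-3 has no tape.
        return self.tier <= 1

    def add(self, child: Site) -> Site:
        """Attach `child` as a center that receives data from this one."""
        # Simplification: data only flow from a lower tier number to a higher one.
        if child.tier <= self.tier:
            raise ValueError(f"{child.name} cannot receive data from {self.name}")
        self.children.append(child)
        return child


def source_chain(root: Site, target: str) -> list[str] | None:
    """Names of the sites data pass through from `root` down to `target`."""
    if root.name == target:
        return [root.name]
    for child in root.children:
        path = source_chain(child, target)
        if path is not None:
            return [root.name] + path
    return None  # `target` is not in this subtree


def tape_sites(root: Site) -> list[str]:
    """Names of all sites in the tree that keep data on tape (depth-first)."""
    found = [root.name] if root.has_tape else []
    for child in root.children:
        found += tape_sites(child)
    return found


if __name__ == "__main__":
    # Hypothetical site names, for illustration only.
    t0 = Site("T0", 0)
    t1 = t0.add(Site("T1-A", 1))
    t1.add(Site("T2-A", 2))
    print(source_chain(t0, "T2-A"))  # ['T0', 'T1-A', 'T2-A']
    print(tape_sites(t0))            # ['T0', 'T1-A']
```

A tree keeps the "feed from your region's Tier-1" relationship explicit: finding where a center's data come from is just a walk from the root.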

There are 9 Tier-2 centers in Russia. For comparison, all Russian Tier-2 computing facilities together account for a "total" of 4 PB of disk and 7,500 cores, and these resources are extremely unevenly distributed among the centers.
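To put these numbers side by side, the sketch below inverts the statement that Tier-0's ~50,000 cores are about 10% of the grid to estimate the grid total, and then expresses the other core counts from this post as a share of it. The inversion is an assumption made here, so the results are order-of-magnitude only.

```python
from __future__ import annotations

# Core counts quoted in the post; the grid total is derived, not quoted.
TIER0_SHARE = 0.10  # "approximately 10% of the total computing power"

SITES = {
    "Tier-0": 50_000,
    "English Tier-1": 14_000,
    "All Russian Tier-2 centers": 7_500,
}


def grid_total(tier0_cores: int, tier0_share: float) -> float:
    """Total grid cores implied by Tier-0 holding `tier0_share` of them."""
    return tier0_cores / tier0_share


def shares(sites: dict[str, int], total: float) -> dict[str, float]:
    """Percentage of `total` that each entry in `sites` represents."""
    return {name: 100 * cores / total for name, cores in sites.items()}


if __name__ == "__main__":
    total = grid_total(SITES["Tier-0"], TIER0_SHARE)
    print(f"whole grid: ~{total:,.0f} cores")
    for name, pct in shares(SITES, total).items():
        print(f"{name:28s} {pct:5.1f} %")
```

This gives a grid of roughly 500,000 cores, of which the English Tier-1 is about 2.8% and all Russian Tier-2 centers together about 1.5%.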

Source: https://habr.com/ru/post/197496/