Monitoring code coverage during unit testing in Windows Phone

Greetings, Habr readers!

I want to share what I have achieved in controlling code coverage when unit testing Windows Phone applications. Solving this problem meant dealing with some aspects of "correct" application design along the way, so this post can also be read as a small tutorial.

Problem statement

Given:

Development of a small Windows Phone application is starting. The application is typical: it fetches some data from its server and shows it to the user in some form.

Required:

Design the application architecture so that, under continuous integration, as much as possible of the code responsible for application logic is covered by tests, and so that this coverage can be measured.

General approach

As I already wrote, the Windows Phone runtime had no means of measuring code coverage. On the advice of Nagg and halkar, I moved the code containing the application's functionality (its logic) into a Portable Class Library (PCL). This made it possible to run the tests in a standard .NET Framework environment with coverage measurement. In addition, this approach avoids shamanism with the continuous integration system and shortens the test run time.

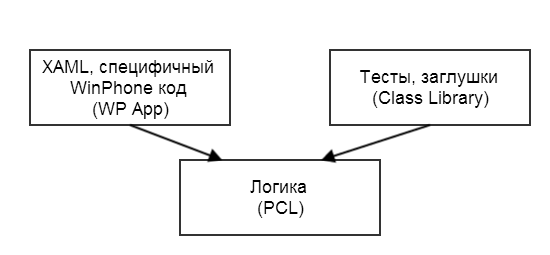

In simplified form, the application consists of three components:

- A PCL that holds all application logic and depends on neither the Windows Phone runtime nor the test environment.

- A Windows Phone application that uses this library and contains the XAML markup and a minimum of code.

- A DLL with tests that also references the PCL and contains the test code itself. I used MSTest as the testing framework, but the choice does not matter.


Application logic does not work in a vacuum: it has to interact with the user, with the web server, and with other things tied directly to Windows Phone. When the tests run, this interaction has to be emulated somehow in the ordinary .NET Framework environment.

As a result, the following was moved out of the PCL:

- work with a web server (sending a request and receiving a response)

- work with files (saving, reading, getting a list)

- localization of string resources

- Internet accessibility check

- something else

Let's look at this in a bit more detail. The generalized scheme of the application is as follows:

1. An HTTP request is sent to the server.

2. The response is received.

3. The response is parsed and processed.

4. The parsing results are displayed on the screen.

The logic to be tested lives only in step 3, so it makes sense to move the code responsible for all the other steps out of the PCL.

For display on Windows Phone, this separation is quite transparent: the ViewModels live in the PCL, and the XAML describes the bindings of control properties to ViewModel properties. In tests we can simply call the relevant ViewModel properties and methods, simulating user actions and checking the reaction to them.
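As a rough illustration of this idea, here is a minimal sketch of a view model that could live in the PCL. The class `LoginViewModel`, its properties, and the `Submit` method are hypothetical names invented for this example and do not come from the application described in the article.

```csharp
using System;
using System.ComponentModel;

// Hypothetical view model living in the PCL; all names are illustrative only.
public sealed class LoginViewModel : INotifyPropertyChanged
{
    private string _userName = "";
    private string _status = "";

    public event PropertyChangedEventHandler PropertyChanged;

    // In XAML a TextBox would be bound to this property.
    public string UserName
    {
        get { return _userName; }
        set { _userName = value ?? ""; Raise("UserName"); }
    }

    // In XAML a TextBlock would be bound to this property.
    public string Status
    {
        get { return _status; }
        private set { _status = value; Raise("Status"); }
    }

    // In the application this is triggered by a button; a test calls it directly.
    public void Submit()
    {
        string name = _userName.Trim();
        Status = name.Length == 0 ? "Name required" : "Hello, " + name;
    }

    private void Raise(string propertyName)
    {
        var handler = PropertyChanged;
        if (handler != null) handler(this, new PropertyChangedEventArgs(propertyName));
    }
}
```

A test would then set `UserName`, call `Submit()`, and compare `Status` with the expected text, without any XAML or phone runtime involved.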

Now consider working with the server. The PCL includes an HTTP client implementation (HttpWebRequest), so it seems logical to write the web server code directly in the PCL. However, we must not forget that our goal is to test the application logic. If the code that sends requests to the web server is hard-wired into the PCL, then testing requires either standing up a separate web server or somehow intercepting the requests to simulate the server.
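One way to avoid both a real web server and request interception is to hide the server behind an interface and substitute a stub in tests. The sketch below assumes a synchronous `Get` method purely for brevity; the article names an `IDataRequest` interface but never shows its members, so the signature, the `StubDataRequest` class, and the `ScoreLoader` logic class are all hypothetical.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical shape of the request abstraction; the real members are not shown in the article.
public interface IDataRequest
{
    // Returns the response body, or throws on a communication failure.
    string Get(string url);
}

// Test stub: serves canned responses and can simulate a broken connection.
public sealed class StubDataRequest : IDataRequest
{
    private readonly Dictionary<string, string> _responses = new Dictionary<string, string>();

    public bool SimulateNetworkFailure { get; set; }

    public void SetResponse(string url, string body)
    {
        _responses[url] = body;
    }

    public string Get(string url)
    {
        if (SimulateNetworkFailure)
            throw new InvalidOperationException("Simulated network failure");
        string body;
        if (!_responses.TryGetValue(url, out body))
            throw new InvalidOperationException("No canned response for " + url);
        return body;
    }
}

// Hypothetical logic class: parses a comma-separated list of integers.
public sealed class ScoreLoader
{
    private readonly IDataRequest _request;

    public ScoreLoader(IDataRequest request)
    {
        _request = request;
    }

    // Returns the parsed scores, or an empty list when the request fails.
    public List<int> Load(string url)
    {
        var result = new List<int>();
        string body;
        try { body = _request.Get(url); }
        catch (InvalidOperationException) { return result; }

        foreach (var part in body.Split(','))
        {
            int value;
            if (int.TryParse(part.Trim(), out value)) result.Add(value); // skip malformed items
        }
        return result;
    }
}
```

With this split, the parsing logic is exercised by feeding the stub a canned body, and the failure path by flipping `SimulateNetworkFailure`.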

Moving on. During processing, data can be cached, that is, saved as files on the device. However, the PCL has no complete file management tools, because different execution environments organize storage through different mechanisms. In ordinary .NET these are “regular” files (for example, File), while on Windows Phone it is IsolatedStorageFile. We must not forget that such storage also has to be imitated in tests.

The decoupling itself was done in a simple way: the PCL declares interfaces for working with web requests, files, localization, and so on, and the Windows Phone application implements them with its own specifics. For testing, these interfaces also have to be implemented so that they imitate the “live” environment more or less faithfully.

Inversion of control

In scientific terms, and with some approximation, this decoupling is called inversion of control. I will not go into the weeds, because I

Let's look at the file management interface as an example:

    using System.Collections.Generic;
    using System.IO;

    public interface IFileStorage
    {
        Stream GetWriteFileStream(string fileName);
        Stream GetReadFileStream(string fileName);
        bool IsFileExists(string fileName);
        List<string> GetAllFileNames(string path);
    }

This interface is enough to work with files without going into the details of how they are stored. The PCL knows about this interface and nothing more. It is implemented in the Windows Phone application, where the implementation uses IsolatedStorageFile.

The interface is also implemented in the test DLL, and quite primitively: all files are kept in memory in a Dictionary<string, MemoryStream>. The program logic is satisfied, believing that it works with real files, and we are satisfied too, because this also solves the problem of test independence: a new test means a new instance of the class and a clean “file system”.

Besides files, we also need to decouple the work with the web, localization, and more. For convenience, all of this is combined into another interface:

    public interface IContainer
    {
        IFileStorage FileStorage { get; }
        IDataRequest DataRequest { get; }
        ILocalizer Localizer { get; }
        IUiExtenter Extender { get; }
        DateTime Now { get; }   // current time, replaceable in tests
    }

I called it a “container”, although in the terminology of the “Inversion of Control” pattern this may not be entirely correct.
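To make the in-memory test implementation of IFileStorage more concrete, here is a minimal sketch of how it might look. Only the interface and the idea of a `Dictionary<string, MemoryStream>` come from the article; the class name `InMemoryFileStorage`, the overwrite-on-write behavior, and the treatment of `path` as a name prefix are assumptions made for this example.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;

// Interface as declared in the article.
public interface IFileStorage
{
    Stream GetWriteFileStream(string fileName);
    Stream GetReadFileStream(string fileName);
    bool IsFileExists(string fileName);
    List<string> GetAllFileNames(string path);
}

// Sketch of a test implementation: every "file" lives in memory.
public sealed class InMemoryFileStorage : IFileStorage
{
    private readonly Dictionary<string, MemoryStream> _files =
        new Dictionary<string, MemoryStream>();

    public Stream GetWriteFileStream(string fileName)
    {
        // Assumption: opening a file for writing replaces its previous content.
        var stream = new MemoryStream();
        _files[fileName] = stream;
        return stream;
    }

    public Stream GetReadFileStream(string fileName)
    {
        MemoryStream stored;
        if (!_files.TryGetValue(fileName, out stored))
            throw new FileNotFoundException("No such file", fileName);
        // ToArray still works after the writer has disposed the stream.
        return new MemoryStream(stored.ToArray(), false);
    }

    public bool IsFileExists(string fileName)
    {
        return _files.ContainsKey(fileName);
    }

    public List<string> GetAllFileNames(string path)
    {
        // Assumption: "path" is treated as a plain name prefix.
        return _files.Keys
            .Where(name => name.StartsWith(path, StringComparison.Ordinal))
            .OrderBy(name => name, StringComparer.Ordinal)
            .ToList();
    }
}
```

Because each test creates its own `InMemoryFileStorage`, nothing written by one test is visible to another.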

The IContainer interface gives the PCL logic the tools for working with files (FileStorage), server requests (DataRequest), localization (Localizer), and other things (Extender), the latter combined into one interface for brevity.

The Now property deserves a separate note. It is needed only for testing. In many places the logic depends on the current time, and that time has to be changed to emulate the test environment correctly. Changing the system time is a bad idea, so this property appeared. The main thing was to remember to use it instead of DateTime.Now.

The container has two implementations: one in the Windows Phone application and one in the test DLL. Each container is handed its own implementations of the interfaces. For IDataRequest, the container in the test DLL uses a stub implementation that lets tests simulate server responses and communication problems.

The object implementing IContainer is created when the application starts. During testing, a new such object is created for each test. Classes that manage application logic need a reference to this object; an easy way to provide it is to require the reference in the constructors of all classes.

Of course, there are a number of ready-made
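As a minimal sketch of the replaceable clock and the constructor-based wiring, consider the code below. For brevity, `IContainer` is trimmed here to the `Now` property alone; the classes `PhoneContainer`, `TestContainer`, and `CachePolicy` are hypothetical names that do not appear in the article.

```csharp
using System;

// Trimmed-down version of the article's IContainer: only Now is shown (an assumption for brevity).
public interface IContainer
{
    DateTime Now { get; }
}

// "Combat" container: reads the real system clock.
public sealed class PhoneContainer : IContainer
{
    public DateTime Now { get { return DateTime.Now; } }
}

// Test container: the test decides what time it is.
public sealed class TestContainer : IContainer
{
    public DateTime Now { get; set; }
}

// Hypothetical logic class that receives the container through its constructor.
public sealed class CachePolicy
{
    private readonly IContainer _container;
    private readonly TimeSpan _maxAge;

    public CachePolicy(IContainer container, TimeSpan maxAge)
    {
        if (container == null) throw new ArgumentNullException("container");
        _container = container;
        _maxAge = maxAge;
    }

    // Uses the container's clock instead of DateTime.Now.
    public bool IsExpired(DateTime savedAt)
    {
        return _container.Now - savedAt > _maxAge;
    }
}
```

A test can then "move time forward" by assigning `TestContainer.Now`, without touching the system clock.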

Problems

In theory it all sounds fine, but in practice I ran into several problems. Strictly speaking, I did not manage to move all the logic into the PCL. Navigation between pages, the code that creates dependency properties, and some other things stayed in the Windows Phone application project and thus ended up not covered by tests. In theory, most of this code could be moved to the PCL, but that would bloat the abstractions unjustifiably (IMHO, of course) and, as a consequence, complicate code maintenance later.

Another problem was binding ViewModel properties to control properties in XAML. Many of the latter have specific types (Brush, Visibility) that are not available in the PCL. The problem is solved elegantly through the value conversion mechanism, but remember that with a large number of controls such conversion can slow the application down.

Something else

There are a couple of things that I think could be implemented more correctly, but I cannot figure out how. I would be grateful to anyone who points me to the right path.

First. According to the system's logic, at a certain point in the algorithm the user must be shown a dialog with a choice, and the algorithm then continues depending on that choice. I could not think of anything better than to put a ShowDialog method in one of the container interfaces and call it directly from the PCL code. The “combat” implementation of the method shows a real dialog and waits for the user's choice, while the test implementation returns a preconfigured value.
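The dialog arrangement can be sketched roughly as follows. The article only says that ShowDialog lives in one of the container interfaces, so the interface name `IDialogs`, the boolean signature, and the `CacheCleaner` logic class are hypothetical choices made for this example.

```csharp
using System;

// Hypothetical interface; the article does not say which interface holds ShowDialog.
public interface IDialogs
{
    // Returns true when the user confirms (the signature is an assumption).
    bool ShowDialog(string question);
}

// Test implementation: answers immediately with a value chosen by the test.
public sealed class PresetDialogs : IDialogs
{
    public bool Answer { get; set; }
    public string LastQuestion { get; private set; }

    public bool ShowDialog(string question)
    {
        LastQuestion = question;
        return Answer;
    }
}

// Hypothetical piece of PCL logic that branches on the user's choice.
public sealed class CacheCleaner
{
    private readonly IDialogs _dialogs;

    public int FilesDeleted { get; private set; }

    public CacheCleaner(IDialogs dialogs)
    {
        _dialogs = dialogs;
    }

    public void Clean(int fileCount)
    {
        // The algorithm continues only if the user agreed.
        if (_dialogs.ShowDialog("Delete " + fileCount + " cached files?"))
            FilesDeleted = fileCount;
    }
}
```

The application-side implementation would show a real dialog behind the same interface; the logic in the PCL stays identical in both cases.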

Second. The logic updates the state of a control on a timer. But the Timer class available in the PCL calls the elapsed handler on a separate thread, and an attempt to update the element's state from there ends badly. My brute-force solution is to declare an interface method that takes a delegate as an argument. In the “combat” implementation this method calls BeginInvoke to synchronize with the main thread, and in the test implementation it simply calls the delegate.

One more thing

Results

As a result, tests covered most of the functionality. The ViewModel test code now resembles a description of real test cases (“click here”, “enter this number there”, “compare the value with the expected one”).

Writing such tests is a good way to motivate testers who aspire to become developers: they enjoy writing code instead of clicking, and the testing gets done :)

When ideas for scenarios and bugs dried up, what remained was the confidence that, with TeamCity showing green, the application could be launched directly to

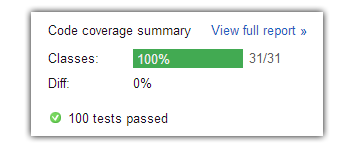

The most important result is that after the tests run, we can see which lines the tests “passed through” and which stayed untouched. TeamCity shows this data both as statistics (on the classes, methods, and lines of code “touched”, at different levels of detail) and as source code with red-green highlighting.

It still was not possible to cover all the code with tests. The code-behind remains uncovered by definition, as do the implementations of the container interfaces. However, what ended up inside the PCL (most of the program code directly responsible for its functionality) is covered and controlled very well.

Since I am a maniac, I set the build to fail when method coverage is below 100% (that is, if the controlled code contains at least one method, lambda expressions included, that the tests never entered). For normal people such control is probably overkill.

In any case, code coverage correlates with functional test coverage, and studying the red lines often suggested which test scenarios still needed to be coded.

The main conclusion: for Windows Phone, you can organize unit testing with code coverage control.

Using coverage metrics formally is, of course, nonsense. However, the same is true of the formal use of any other metric. But if you treat coverage not as an annoying KPI to be inflated but as a tool for improving quality, it can be a really powerful thing.

Source: https://habr.com/ru/post/196992/
