We attach the spatial index to the unsuspecting OpenSource database.

I always liked it when the headline clearly says what will happen next, for example, “Texas Chainsaw Massacre”. Therefore, under the cut, we really will add spatial search to the database, in which it was not originally.

Introductory

Let's try to do without common words about the importance and usefulness of spatial search.

When asked why it was impossible to take an open DBMS with an already built-in spatial search, the answer would be: “if you want to do something well, do it yourself” (C). But seriously, we will try to show that most practical spatial problems can be solved on a completely ordinary desktop without overvoltage and special expenses.

As the experimental DBMS, we will use the open edition of OpenLink Virtuoso . Spatial indexing is available in its paid version, but this is a completely different story. Why this particular DBMS? The author likes it. Fast, easy, powerful. Copied-launched-everything works. We will use only standard means, no C-plugins or special assemblies, only an official assembly and a text editor.

Index type

We will index the points using a pixel-code (block) index.

What is the pixel-code index? This is a compromise version of the sweeping curve, allowing you to work with non-point objects in a 2-dimensional search space. Let's unite in this class all the methods that:

- Somehow divide the search space into blocks

- All blocks are numbered.

- Each indexed object is assigned one or more block numbers in which it is located.

- When searching, the extent is split into blocks, and for each block or continuous chain of blocks from a regular integer index (B-tree), all the objects that belong to them are obtained.

The most well-known methods are:

- Good old Igloo (or other sweeping curve ) of limited accuracy. The space is cut by a grid, the step of which (usually) is the characteristic size of the object being indexed. The cells are numbered X ... xY ... yZ ... z ... i.e. gluing the cell numbers to the normals.

- HEALPix . The name is derived from the Hierarchical Equal Area iso-Latitude Pixelisation. This method is intended for splitting a spherical surface in order to avoid the main Igloo deficiency - a different area under the cells at different latitudes. The sphere is divided into 12 equal-sized sections, each of which is further hierarchically divided into 4 sub-sections. The illustration for this method is in the header of the article.

- Hierarchical Triangular Mesh ( HTM ) .This is a sequential approximation to a sphere using triangles, starting with 8 starting points, each of which is hierarchically divided into 4 sub-triangles

- One of the most well-known block index schemes is GRID. Spatial data are considered as a special case of a multidimensional index. Wherein:

- the space is cut into pieces using the grille

- the resulting cells are numbered in some way

- data trapped in a specific cell is stored together

- attribute values are converted to cell numbers along the axes using a “directory”, it is assumed that it is small and is located in memory

- if the “directory” is large, it is divided into levels a la tree

- when changing, the lattice can adapt, cells or columns split or, conversely, merge

With all the drawbacks, the main one of which, perhaps, is non-adaptability, the need to customize the index for a specific task, block indexes also have advantages: simplicity and omnivorousness. In essence, this is an ordinary tree with an additional semantic load. We somehow convert the geometry into numbers when indexing and in the same way we get the keys to search in the index.

In this paper, we will use the line scan (+1 ystein ) because of the simplicity: the coordinates in the number can be cheaply converted by means of PL / SQL. Using HTM or the Hilbert curve without involving C-plugins would be difficult.

')

Data type

Why point? As already noted, Block indices are just the same as indexing. At the same time, the rasterizer for points is trivial, and for polygons it would have to be written, and this is completely irrelevant for the disclosure of the topic.

Actually the data

Without further ado,

- put the points on the nodes of the lattice 4000x4000 with the beginning in (0,0) and step 1.

- get a square of 16 million points.

- cell block index we take for 10X10,

- thus, we have 400x400 cells each with 100 points.

To generate the data, we will use the following AWK script (let’s be 'data.awk'):

BEGIN { cnt = 1; sz = 4000; # Set the checkpoint interval to 100 hours -- enough to complete most of experiments. print"checkpoint_interval (6000);"; print"SET AUTOCOMMIT MANUAL;"; for (i=0; i < sz; i++) { for (j=0; j < sz; j++) { print "insert into \"xxx\".\"YYY\".\"_POINTS\""; print " (\"oid_\",\"x_\", \"y_\") values ("cnt","i","j");"; cnt++; } print"commit work;" } print"checkpoint;" print"checkpoint_interval (60);"; exit; } Server

- Take the official build from the company website.

The author used the latest version 7.0.0 (x86_64), but it does not matter, you can take any other. - Install, if not, Microsoft Visual C ++ 2010 Redistributable Package (x64)

- Select the directory for the experiments and copy libeay32.dll, ssleay32.dll, isql.exe, virtuoso-t.exe from the archive bin and virtuoso.ini from the archive database

- We start the server using 'start virtuoso-t.exe + foreground', allow the firewall to open ports

- We test server availability with the command 'isql localhost: 1111 dba dba'. After establishing the connection, enter, for example. 'select 1;' and make sure the server is alive.

Data schema

First, create a table of points, nothing more:

create user "YYY"; create table "xxx"."YYY"."_POINTS" ( "oid_" integer, "x_" double precision, "y_" double precision, primary key ("oid_") ); Now the actual index:

create table "xxx"."YYY"."_POINTS_spx" ( "node_" integer, "oid_" integer references "xxx"."YYY"."_POINTS", primary key ("node_", "oid_") ); where node_ is the space cell identifier. Note that, although the index is created as a table, both fields are present in the primary key and, therefore, are packed in a tree.Configure index options:

registry_set ('__spx_startx', '0'); registry_set ('__spx_starty', '0'); registry_set ('__spx_nx', '4000'); registry_set ('__spx_ny', '4000'); registry_set ('__spx_stepx', '10'); registry_set ('__spx_stepy', '10'); The system function registry_set allows you to write string name / value pairs to the system registry — it is much faster than keeping them in auxiliary table and is still covered in transactions.

Write trigger:

create trigger "xxx_YYY__POINTS_insert_trigger" after insert on "xxx"."YYY"."_POINTS" referencing new as n { declare nx, ny integer; nx := atoi (registry_get ('__spx_nx')); declare startx, starty double precision; startx := atof (registry_get ('__spx_startx')); starty := atof (registry_get ('__spx_starty')); declare stepx, stepy double precision; stepx := atof (registry_get ('__spx_stepx')); stepy := atof (registry_get ('__spx_stepy')); declare sx, sy integer; sx := floor ((n.x_ - startx)/stepx); sy := floor ((n.y_ - starty)/stepy); declare ixf integer; ixf := (nx / stepx) * sy + sx; insert into "xxx"."YYY"."_POINTS_spx" ("oid_","node_") values (n.oid_,ixf); }; We put all this in, for example, 'sch.sql' and execute it by

isql.exe localhost:1111 dba dba <sch.sql Data loading

Once the circuit is ready, you can fill in the data.

gawk -f data.awk | isql.exe localhost:1111 dba dba >log 2>errlog It took the author 45 minutes (~ 6000 per second) on the Intel i7-3612QM 2.1GHz with 8Gb of memory.The memory size occupied by the server was about 1 Gb, the size of the database on the disk was 540Mb, i.e. ~ 34 bytes per point and its index.

Now we need to make sure that the result is correct, we enter in isql

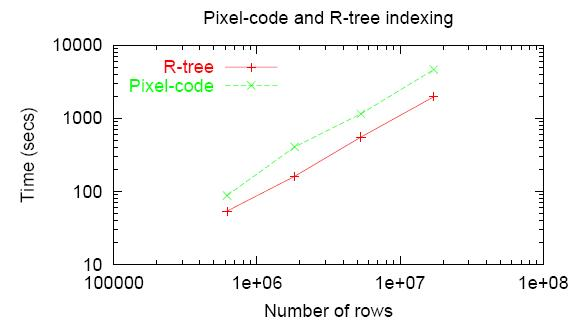

select count(*) from "xxx"."YYY"."_POINTS"; select count(*) from "xxx"."YYY"."_POINTS_spx"; Both requests must give out 16,000,000.As a guideline, you can use the data published here :

.

.They are received on PostgreSQL (Linux), 2.2GHz Xeon.

On the one hand, the data was obtained 9 years ago. On the other hand, in this case disk operations are a bottleneck, and random disk access has not accelerated much over the past period.

And here we can learn that the time of spatial indexing is commensurate with the time of filling the actual data.

It should be noted that filling data with subsequent indexing occurs (for obvious reasons) much faster than indexing on the fly. But we will be more interested in indexing on the fly because for practical purposes, a spatial database is more interesting, rather than a spatial search system.

And yet, it should be noted that when filling in spatial data, the order of their filling is very important.

Both in this paper and in the aforementioned order, the point feeding was perfect (or close to it) in terms of spatial index. If the data is mixed, the performance will fall quite dramatically, regardless of the selected DBMS.

If the data arrives arbitrarily, it can be recommended to accumulate them in a settling tank, as it overflows, sort and fill with portions.

We are developing a search

To do this, we use such a remarkable feature of Virtuoso, as a procedural view.

create procedure "xxx"."YYY"."_POINTS_spx_enum_items_in_box" ( in minx double precision, in miny double precision, in maxx double precision, in maxy double precision) { declare nx, ny integer; nx := atoi (registry_get ('__spx_nx')); ny := atoi (registry_get ('__spx_ny')); declare startx, starty double precision; startx := atof (registry_get ('__spx_startx')); starty := atof (registry_get ('__spx_starty')); declare stepx, stepy double precision; stepx := atof (registry_get ('__spx_stepx')); stepy := atof (registry_get ('__spx_stepy')); declare sx, sy, fx, fy integer; sx := floor ((minx - startx)/stepx); fx := floor (1 + (maxx - startx - 1)/stepx); sy := floor ((miny - starty)/stepy); fy := floor (1 + (maxy - starty - 1)/stepy); declare mulx, muly integer; mulx := nx / stepx; muly := ny / stepy; declare res any; res := dict_new (); declare cnt integer; for (declare iy integer, iy := sy; iy < fy; iy := iy + 1) { declare ixf, ixt integer; ixf := mulx * iy + sx; ixt := mulx * iy + fx; for select "node_", "oid_" from "xxx"."YYY"."_POINTS_spx" where "node_" >= ixf and "node_" < ixt do { dict_put (res, "oid_", 0); cnt := cnt + 1; } } declare arr any; arr := dict_list_keys (res, 1); gvector_digit_sort (arr, 1, 0, 1); result_names(sx); foreach (integer oid in arr) do { result (oid); } }; create procedure view "xxx"."YYY"."v_POINTS_spx_enum_items_in_box" as "xxx"."YYY"."_POINTS_spx_enum_items_in_box" (minx, miny, maxx, maxy) ("oid" integer); In essence, this procedure is very simple, it finds out which cells in the block index the search extent falls into and makes a sub-request for each line of these cells, writes record identifiers to the hash map, eliminating duplicates and giving the resulting set of identifiers as a result.How to use the spatial index? For example:

select count(*), avg(x.x_), avg(x.y_) from "xxx"."YYY"."_POINTS" as x, "xxx"."YYY"."v_POINTS_spx_enum_items_in_box" as a where a.minx = 91. and a.miny = 228. and a.maxx = 139. and a.maxy = 295. and x."oid_" = a.oid and x.x_ >= 91. and x.x_ < 139. and x.y_ >= 228. and x.y_ < 295.; Here is the join between the table of points and the synthetic recordset from the procedural view by identifiers. Here the procedural view plays the role of a coarse filter that works up to the cell. Therefore, in the last line we placed a thin filter.Expected Result:

- Number: (139 - 91) * (295 - 228) = 3216

- Average over X: (139 + 91 - 1) / 2 = 114.5 (-1 since the right border is excluded)

- Y average: (295 + 228 - 1) / 2 = 261 (...)

Benchmark

To prepare the request flow, use the following script on AWK:

BEGIN { srand(); for (i=0; i < 100; i++) { for (j=0; j < 100; j++) { startx = int(rand() * 3900); finx = int(startx + rand() * 100); starty = int(rand() * 3900); finy = int(starty + rand() * 100); print "select count(*), avg(x.x_), avg(x.y_) from"; print "\"xxx\".\"YYY\".\"_POINTS\" as x, " print "\"xxx\".\"YYY\".\"v_POINTS_spx_enum_items_in_box\" as a" print "where a.minx = "startx". and a.miny = "starty"." print " and a.maxx = "finx". and a.maxy = "finy". and" print "x.\"oid_\" = a.oid and x.x_ >= "startx". and x.x_ < "finx"." print " and x.y_ >= "starty". and x.y_ < "finy".;" } } exit; } As you can see, it turns out 10,000 requests in random places of random size (0 ... 100). Let's prepare 4 such sets and we will execute three series of tests - we start one isql with tests, 2 in parallel and 4 in parallel. On the eight-core processor, there is no more parallelizing. Measure performance.- 1 stream: 151 sec = ~ 66 requests per second per stream

- 2 threads: 159 sec = ~ 63 ...

- 4 threads: 182 sec = ~ 55 ...

You can try to compare these times with those obtained by the spinal cord by means of two ordinary indices - x_ & y_. We index the table for these fields and run a similar series of tests with queries like

select count(*), avg(x.x_), avg(x.y_) from "xxx"."YYY"."_POINTS" as x where x.x_ >= 91. and x.x_ < 139. and x.y_ >= 228. and x.y_ < 295.; The result is as follows: a series of 10,000 requests is executed in 417 seconds, we have 24 requests per second.Conclusion

The presented method has a number of drawbacks, the main of which, as already noted, is the need to tune it for a specific task. Its performance is highly dependent on the settings, which can no longer be changed after creating the index. However, this is true for most spatial indices in general.

But even this index written on the knee gives a decent performance. In addition, since we completely control the process, on this basis it is possible to make more complex structures, for example, multi-table indexes, which are difficult to build using standard methods. But more about that next time.

Source: https://habr.com/ru/post/196682/

All Articles