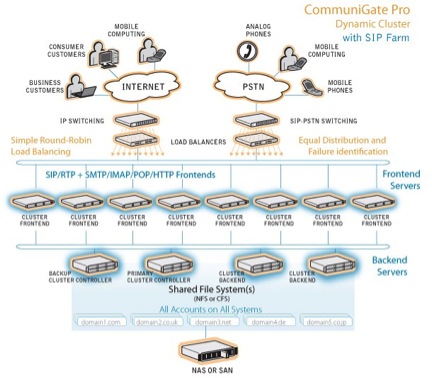

CommuniGate Pro Dynamic Cluster

In this article, we will look at the features and unique advantages of the CommuniGate Pro Dynamic Cluster for SaaS hosting providers.

CommuniGate Pro is a platform for building cloud-based Unified Communications (UC), and the Dynamic Cluster is the most reliable and scalable configuration of this platform.

The CommuniGate Pro architecture is a single core that provides all services, as opposed to the usual approach of integrating several separate services with each other. This significantly reduces transaction costs, as confirmed by more than 15,000 installations worldwide serving more than 150 million end users. Individual CommuniGate Pro installations successfully manage the resources of more than 100,000 domains, each with its own set of users, login page, set of services, and administrators, all within one Dynamic Cluster.


If you are interested in the details of the architecture and configuration of such a cluster, as well as real hardware estimates based on projects implemented on our platform, read on.

Enterprise and small-business solutions are usually not suitable for hosting platforms. Such technologies typically operate within a single company, with a large IT department managing the system. These systems can afford “scheduled outages” for maintenance, which is completely unacceptable for SaaS, which must be operational 100% of the time. In addition, enterprise solutions often lack an API for connecting the component most important to a provider, billing, since users within a single company are usually not charged.

The CommuniGate Pro Dynamic Cluster implements an architecture in which all cluster nodes are “active”. Many other solutions settle for failover or hot-standby schemes, but not the Dynamic Cluster: all its systems work together as a single logical entity, and every node takes its share of the load. At the same time, any node can be removed from the cluster at any moment, and new nodes can be added in any role. As a result, the Dynamic Cluster allows any of its members to be serviced, and capacity to be increased (or decreased), during operation without interrupting service.

The key benefits of the Dynamic Cluster, which we will discuss in more detail below, are:

- Maintenance of nodes without stopping the service

- Single system

- Efficient use of servers

- Predictable, low-overhead scalability

- High availability of services

Maintenance of nodes without stopping the service

Software and hardware updates on nodes can dramatically reduce the availability of a communication system. In the enterprise IT world, such interruptions are often called “scheduled outages”. Unfortunately, they are completely unacceptable for carrier-grade SaaS solutions. Imagine your landline telephone service being switched off for maintenance every Saturday.

To eliminate the need for outages, the Dynamic Cluster implements a rolling update mechanism. It lets the cluster administrator deactivate a node while its open connections are redistributed to other cluster members, until the node is completely offline. The node is then available for maintenance and can later be returned to the cluster.
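The drain-then-update cycle described above can be sketched as a small simulation. This is a hypothetical model written for illustration only: the `Node` class, `drain`, and `rolling_update` are our names, not CommuniGate Pro APIs, and the sketch assumes open connections can simply be reassigned to ready peers.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    """Hypothetical model of a cluster member (not a CommuniGate Pro API)."""
    name: str
    version: str
    ready: bool = True
    connections: List[str] = field(default_factory=list)


def drain(node: Node, cluster: List[Node]) -> None:
    """Mark `node` not ready and hand its open connections to the remaining ready nodes."""
    node.ready = False
    peers = [n for n in cluster if n.ready]
    if not peers:
        raise RuntimeError("no ready peers left to take over connections")
    for i, conn in enumerate(node.connections):
        peers[i % len(peers)].connections.append(conn)  # round-robin hand-off
    node.connections.clear()


def rolling_update(cluster: List[Node], new_version: str) -> int:
    """Update nodes one at a time; return the fewest ready nodes seen during the update."""
    min_ready = len(cluster)
    for node in cluster:
        drain(node, cluster)
        min_ready = min(min_ready, sum(n.ready for n in cluster))
        node.version = new_version  # maintenance happens while the node is offline
        node.ready = True           # the node rejoins the cluster
    return min_ready


cluster = [Node(f"node{i}", "old", connections=[f"conn{i}-{j}" for j in range(3)])
           for i in range(3)]
print(rolling_update(cluster, "new"))  # 2
```

Because only one node is taken out at a time, a cluster of n nodes never drops below n - 1 ready members during the update; `rolling_update` returns that minimum so it can be checked.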

Single system

The CommuniGate Pro Dynamic Cluster allows the operator to treat the entire system as a single entity, even if it consists of more than 40 servers. As a result, managing a large-scale infrastructure is much simpler than with enterprise-grade systems.

SaaS providers serving small businesses and individual entrepreneurs need an easily scalable system. Among our customers using the Dynamic Cluster, clouds serving more than 20,000 small companies (5-30 end users each) are quite common.

By contrast, with IP PBXs and email solutions that were not designed as SaaS platforms, management becomes more complicated as the user base grows, because the number of separate components increases: proxy servers, databases, LDAP servers, media gateways, etc.

The Dynamic Cluster is an elegant solution that grows together with your user base at no extra cost.

Efficiency

The CommuniGate Pro platform uses hardware resources very efficiently. As a result, a provider can achieve a much higher user density per server than with enterprise solutions. User density is critical in data centers, since it significantly reduces administration, power, and cooling costs.

On most 64-bit carrier-class systems (Solaris, Linux, BSD), CommuniGate Pro can handle 90,000 sessions per server. There are also proven production configurations with more than 450,000 end users on a single server.

Predictable Scalability

The Dynamic Cluster scales with minimal overhead. Simple, inexpensive 1U or blade servers are enough to increase system capacity. Unlike architectures that demand a lot of computing power, CommuniGate Pro does not call for very powerful servers (such as 8-socket machines), and they are not recommended. For example, a 4x4 Dynamic Cluster built from 2-processor servers is better than a 2x2 cluster built from 4-processor servers, because in the first case the load on each individual server is much lower.
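One way to see why several smaller servers are preferred over a few large ones is to look at how much extra load each survivor picks up when a node drops out. The arithmetic below is our illustration, not a CommuniGate sizing formula, and it assumes load is spread evenly across the surviving nodes of a tier.

```python
def per_node_share(nodes: int, failed: int = 0) -> float:
    """Fraction of a tier's total load carried by each surviving node,
    assuming the balancer spreads load evenly over the survivors."""
    survivors = nodes - failed
    if survivors <= 0:
        raise ValueError("no surviving nodes")
    return 1.0 / survivors


for n in (2, 4):
    normal = per_node_share(n)
    degraded = per_node_share(n, failed=1)
    print(f"{n} nodes: {normal:.0%} each normally, {degraded:.0%} after one failure "
          f"(x{degraded / normal:.2f})")
# 2 nodes: 50% each normally, 100% after one failure (x2.00)
# 4 nodes: 25% each normally, 33% after one failure (x1.33)
```

With four frontends, losing one raises each survivor's load by about a third; with two, the survivor must absorb double its normal load, so each server needs far more headroom.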

Since the CommuniGate Pro source code is highly parallelized, computing resources and memory are used as efficiently as possible, and the resources needed for a growing user base can be predicted transparently, following a close-to-linear dependence. All nodes of the CommuniGate Pro cluster run the same executable, so there are none of the performance differences between nodes that are typical of heterogeneous architectures.

The clear structure of the Dynamic Cluster lets providers analyze and predict their costs, for both servers and data storage, with high accuracy.

High availability

One of the main goals in designing the Dynamic Cluster is to reduce service downtime to zero. All cluster nodes are active, and when one of them fails, the other cluster members take over its user load.

Dynamic Cluster Architecture

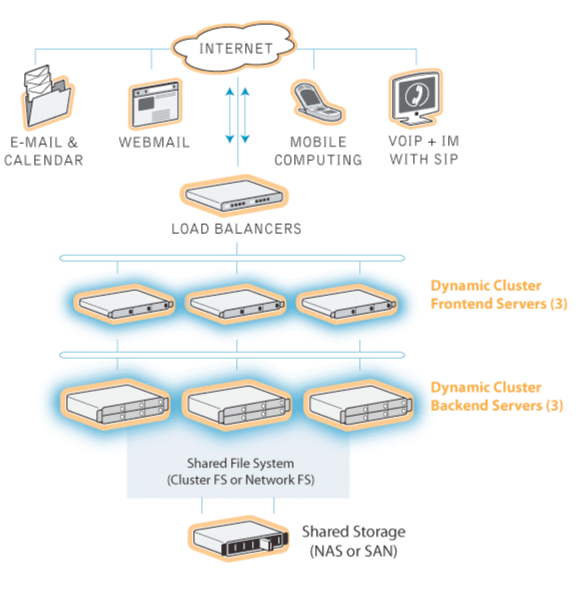

The main elements of the CommuniGate Pro cluster architecture include:

- Load balancer

- Network topology

- Frontend

- Backend

- Shared storage (NFS / CFS)

Load balancer

A cluster consists of several servers, so a load balancer is needed for users to reach it at a single URL or IP address. Many Dynamic Clusters are in production worldwide, and they use a variety of balancers. We recommend only high-quality L4 devices with good throughput from Cisco, F5, or Foundry. Balancer configuration is described in more detail in the manual.

In addition, Linux frontends can themselves act as balancers, using the IPVS technology built into the kernel.
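When frontends balance traffic themselves with IPVS, a scheduler chooses which real server receives each new connection. The sketch below shows a simplified form of weighted least-connections selection, the idea behind IPVS's `wlc` scheduler (the kernel's actual formula also accounts for inactive connections). The `RealServer` class and the connection counts are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RealServer:
    """A server behind the balancer's virtual IP (hypothetical model)."""
    address: str
    weight: int
    active_connections: int = 0


def pick_wlc(servers: List[RealServer]) -> RealServer:
    """Pick the server with the lowest active_connections / weight ratio.

    A weight of 0 takes a server out of rotation for new connections.
    """
    candidates = [s for s in servers if s.weight > 0]
    if not candidates:
        raise RuntimeError("no server can accept new connections")
    return min(candidates, key=lambda s: s.active_connections / s.weight)


frontends = [RealServer("64.10.1.11", weight=1, active_connections=120),
             RealServer("64.10.1.12", weight=2, active_connections=180),
             RealServer("64.10.1.13", weight=1, active_connections=95)]
print(pick_wlc(frontends).address)  # 64.10.1.12 (180 / 2 = 90 is the lowest ratio)
```

Setting a server's weight to 0 is a simple way to stop sending it new connections before maintenance, while existing ones finish.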

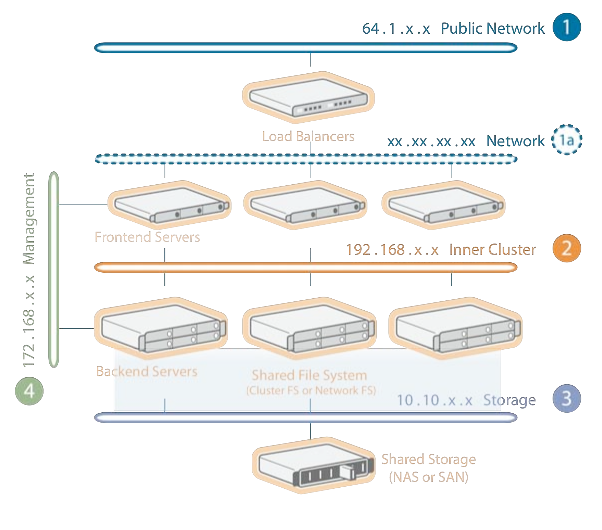

Network topology

A Dynamic Cluster uses at least 4 separate networks and several high-speed switches for optimal performance. We recommend gigabit switches from Cisco, F5, Foundry, HP, or vendors of a similar level. The switches should also be simple and reliable devices; for example, we recommend the Cisco Catalyst 2960 series.

Each server in the cluster must have at least 3 network interfaces. The following networks are required for the Dynamic Cluster to work correctly:

- Public network: an external network with public (routable) IP addresses. Usually each frontend has one such address (with a DNS record), and the balancer(s) have one or more. For example:

uc.domain.com (balancer) -> 64.10.1.1

frontend1.domain.com -> 64.10.1.11

frontend2.domain.com -> 64.10.1.12

frontend3.domain.com -> 64.10.1.13

End users, however, use only uc.domain.com in their client applications, and the balancer distributes requests across the active frontends. DNS records for the individual frontends exist for administrators' convenience.

- Intracluster network: an internal network of private IP addresses used to exchange information between cluster nodes. No other traffic is allowed on it, so frontends use a separate (second) interface for this network.

- Storage network: an internal network connecting the backends to storage. It should be a high-speed network, such as fiber optics or 10 Gigabit Ethernet.

- Control network: a closed network, usually the provider's local network. It is used by administrators and for invoking the billing API, plug-ins, and other cluster management tasks.
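The network requirements above can be turned into a quick pre-installation check of an addressing plan. This is a hypothetical sketch: the plan format, the private addresses, and the role-to-network mapping are our assumptions (only the 64.10.1.x public address comes from the example above). It uses Python's standard `ipaddress` module to verify that only the public network uses routable addresses.

```python
import ipaddress
from typing import Dict, List

# Networks each role is assumed to need (frontends do not attach to the storage network).
REQUIRED = {
    "frontend": {"public", "intracluster", "control"},
    "backend": {"intracluster", "storage", "control"},
}

# Hypothetical addressing plan: node name -> (role, {network: address}).
PLAN = {
    "frontend1": ("frontend", {"public": "64.10.1.11", "intracluster": "10.0.1.11",
                               "control": "192.168.0.11"}),
    "backend1": ("backend", {"intracluster": "10.0.1.21", "storage": "10.0.2.21",
                             "control": "192.168.0.21"}),
}


def check_node(name: str, role: str, interfaces: Dict[str, str]) -> List[str]:
    """Return human-readable problems found in one node's interface plan."""
    problems = []
    missing = REQUIRED[role] - set(interfaces)
    if missing:
        problems.append(f"{name}: missing networks {sorted(missing)}")
    for network, addr in interfaces.items():
        private = ipaddress.ip_address(addr).is_private
        if network == "public" and private:
            problems.append(f"{name}: public network uses private address {addr}")
        elif network != "public" and not private:
            problems.append(f"{name}: {network} network uses public address {addr}")
    return problems


for node, (role, ifaces) in PLAN.items():
    for problem in check_node(node, role, ifaces) or ["ok"]:
        print(node, problem)
```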

Frontends

In a cluster, the frontends perform the following functions:

- Exchange SMTP mail with other servers.

- SIP signaling and execution of PBX applications (this function is synchronized between nodes; such clusters are also called SIP farms)

- Protective functions: RBL checks, counters of errors and of the volume of data received from a single address, black/white lists by IP address and DNS name, and others

- Handling client-server connections (IMAP, POP, HTTP, XIMSS, ...). Once a connection is established, the frontend contacts the backend only for data.

- Handling SSL connections.

Backends

In the Dynamic Cluster, backends are the core of the platform.

They are responsible for:

- Domain Settings

- Account Settings

- Messages and other objects created by users

- Cluster service data

- Cluster management

- Authentication

- Generating HTML pages using WSSP technology

The account-level synchronization technology used by the backends ensures that only one server accesses an account's files at any given time (in 6-second intervals). The CommuniGate Pro Cluster thus eliminates the need for file-system-level locking (with one exception, heartbeat.data, which is locked by the Cluster Controller). Because the cluster does not rely on file-system locking mechanisms, NFS performance increases 5-7 times.
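The idea of coordinating account access within the cluster itself, rather than through file locks on the shared storage, can be illustrated with a lease table. This is an assumption-laden sketch, not the CommuniGate Pro protocol: `AccountLeaseTable` is a hypothetical name, and we read the 6-second figure as a lease length, which the text does not confirm.

```python
import time
from typing import Dict, Optional, Tuple

LEASE_SECONDS = 6.0  # taken from the text; treating it as a lease length is our assumption


class AccountLeaseTable:
    """Hypothetical coordinator that lets one backend at a time open a given account.

    Ownership is tracked here instead of with file-system locks, so the shared
    storage never has to arbitrate concurrent access to account files.
    """

    def __init__(self, lease_seconds: float = LEASE_SECONDS) -> None:
        self.lease_seconds = lease_seconds
        self._owners: Dict[str, Tuple[str, float]] = {}  # account -> (node, expiry)

    def acquire(self, account: str, node: str, now: Optional[float] = None) -> bool:
        """Grant or renew `node`'s lease; refuse while another node holds a live lease."""
        now = time.monotonic() if now is None else now
        holder = self._owners.get(account)
        if holder is not None and holder[0] != node and holder[1] > now:
            return False
        self._owners[account] = (node, now + self.lease_seconds)
        return True

    def release(self, account: str, node: str) -> None:
        """Give up the lease early, but only if `node` is the current holder."""
        holder = self._owners.get(account)
        if holder is not None and holder[0] == node:
            del self._owners[account]


leases = AccountLeaseTable()
print(leases.acquire("aivanov@company.com", "backend1", now=0.0))  # True
print(leases.acquire("aivanov@company.com", "backend2", now=1.0))  # False: backend1 holds it
print(leases.acquire("aivanov@company.com", "backend2", now=7.0))  # True: lease expired at 6.0
```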

The Dynamic Cluster supports rolling updates of nodes and automatic recovery after the failure of any node at any time; the recommended configuration for this is a 3x3 cluster (3 frontends, 3 backends). For a planned shutdown, however, a “soft” shutdown is recommended: use the “Make Not-Ready” setting on the Monitors -> Clusters page of the WebAdmin interface.

Cluster installation

The most critical part of the Dynamic Cluster is the data storage.

It is recommended to use either a NAS or a SAN solution, depending on the operating system and the available features, such as NFS protocol support and cluster file systems (CFS).

The installation process itself consists of the following steps:

- Network configuration

- Setting up the operating system

- Choosing a file system and data storage

- Meeting operating system requirements

- Configuring CommuniGate Pro

Network configuration

The CommuniGate Pro cluster allows some flexibility in network settings. It is possible to:

- Place all cluster subnets behind NAT

- Use direct WAN addresses

- Use a mixed topology, with frontends in a DMZ and backends in a protected network

CommuniGate Pro can manage hundreds of IP addresses and assign them to specific domains that it serves.

Sometimes an administrator can get more performance out of a cluster node simply by tuning the operating system. Typical settings to adjust:

- Limit on the number of open files

- Limit on the number of processes started by one user

- Network Buffers and Caches

- Settings for parts of the OS kernel that manage the network

- File System Cache Settings

Specific settings and values are different in each case.

Always check with the OS vendor for possible side effects of these settings.
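As an example of the first two items, a node's current per-process limits can be read with Python's standard `resource` module (Unix only). The minimum values below are placeholders chosen for illustration, not CommuniGate recommendations; take real values from the CommuniGate Pro documentation and your OS vendor.

```python
import resource

# Placeholder minimums for illustration only; they are not vendor recommendations.
MINIMUMS = {
    "open files (RLIMIT_NOFILE)": (resource.RLIMIT_NOFILE, 65536),
    "processes per user (RLIMIT_NPROC)": (resource.RLIMIT_NPROC, 4096),
}


def check_limits(minimums=MINIMUMS) -> dict:
    """Return {name: (soft_limit, required)} for every limit below its minimum."""
    too_low = {}
    for name, (which, required) in minimums.items():
        soft, _hard = resource.getrlimit(which)
        # RLIM_INFINITY means "unlimited", which always satisfies the minimum.
        if soft != resource.RLIM_INFINITY and soft < required:
            too_low[name] = (soft, required)
    return too_low


if __name__ == "__main__":
    for name, (current, wanted) in check_limits().items():
        print(f"{name}: soft limit {current}, suggested at least {wanted}")
```

The limits reported belong to the process running the script; the server process may receive different limits from its startup scripts, so check them in that context.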

File system selection

The choice of file system depends on the choice of OS and data access system. But in any case, the file system should:

- Support simultaneous operations with files from the same folder, performed by different nodes of the cluster

- Handle millions of small files

- Handle about 10,000 files in one folder

- Support file paths longer than 255 bytes

- Support multibyte characters in file and folder names
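Several of these properties can be checked on a candidate storage mount before installation. The sketch below probes a scratch directory: it reads `PC_NAME_MAX` via `os.pathconf`, creates a folder with a multibyte name, builds a path longer than 255 bytes, and writes many small files to one folder. It cannot test the first requirement (concurrent operations from several cluster nodes), which needs a test run from multiple nodes at once. The function and folder names are hypothetical.

```python
import os
import tempfile


def probe_filesystem(root: str, files_in_folder: int = 10_000) -> dict:
    """Check some of the listed file-system properties inside the scratch directory `root`."""
    results = {}

    # Longest single file name the file system accepts under `root`.
    results["name_max"] = os.pathconf(root, "PC_NAME_MAX")

    # Multibyte (non-ASCII) characters in folder and file names.
    utf8_dir = os.path.join(root, "пример.macnt")
    os.mkdir(utf8_dir)
    with open(os.path.join(utf8_dir, "account.settings"), "w", encoding="utf-8") as f:
        f.write("{}")
    results["multibyte_names"] = os.path.exists(os.path.join(utf8_dir, "account.settings"))

    # A path longer than 255 bytes, built from nested folders with short names.
    deep = root
    while len(os.fsencode(deep)) <= 300:
        deep = os.path.join(deep, "d" * 50)
    os.makedirs(deep)
    results["long_paths"] = os.path.isdir(deep)

    # Many small files in one folder, then a full directory listing.
    many = os.path.join(root, "many")
    os.mkdir(many)
    for i in range(files_in_folder):
        with open(os.path.join(many, f"{i}.msg"), "wb") as f:
            f.write(b"x")
    results["files_listed"] = len(os.listdir(many))
    return results


if __name__ == "__main__":
    # To test real storage, pass a scratch directory created on the storage mount instead.
    with tempfile.TemporaryDirectory() as scratch:
        print(probe_filesystem(scratch, files_in_folder=1000))
```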

OS requirements

- The server name must match the main domain name in the license key (for example, if the license is for *.domain.com, the server can be frontend1.domain.com)

- All IP addresses that will be used are configured before CommuniGate Pro is installed

- No other mail servers are installed in the OS

- Working DNS servers are configured in the OS

- No firewalls are active on the system during installation (they can be added later)

In addition, we strongly recommend thoroughly testing backend performance before installing CommuniGate Pro and mounting the storage.
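The hostname requirement can be checked with a small helper before installation. This reflects our reading of the rule (the host must lie under the license's main domain); the function name is hypothetical and the licensing system's exact matching rules may differ. On the node itself, Python's `socket.getfqdn()` returns the name to compare.

```python
import socket


def hostname_matches_license(hostname: str, license_domain: str) -> bool:
    """True if `hostname` lies under the license's main domain.

    Accepts a wildcard form ("*.domain.com") or a plain domain ("domain.com").
    Comparison is case-insensitive and ignores a trailing dot. Any subdomain
    depth is accepted, which is an assumption about the licensing rules.
    """
    host = hostname.lower().rstrip(".")
    domain = license_domain.lower().rstrip(".")
    if domain.startswith("*."):
        return host.endswith("." + domain[2:])
    return host == domain or host.endswith("." + domain)


if __name__ == "__main__":
    print(hostname_matches_license(socket.getfqdn(), "*.domain.com"))
```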

Practical examples of hardware selection

The choice of hardware naturally depends on many factors. The examples in this section are based on two typical cluster use cases and are intended to give a general idea of which equipment and architecture work well and reliably in practice. The following parameters affect the required hardware capacity and performance:

- Types of services provided (mail, VoIP, synchronization with mobile devices, Pronto! (the Flash client), expected number of audio conferences, amount of data users will store, ...)

- For VoIP, IM, and Presence services, media stream relaying and transcoding consume resources. Ideally, all media streams flow directly from user to user, but networks with complex topologies and mismatched audio codecs often force the server to process the media stream.

- The number of users, broken down by the services they use.

- Load percentage: the ratio of active sessions to the total number of accounts during peak hours, broken down by connection type.

- Usage patterns (users can differ significantly in the number of email clients, mobile devices, and calls per unit of time).

All of these factors must be evaluated to formulate hardware requirements. Based on them and on the practice of deploying multiple Dynamic Clusters, the CommuniGate Systems team can help with the choice of platform and its parameters.

Example 1: Email service with push notifications and instant messages

| User type | Number of users |
|---|---|
| Total | 70,000 |
| POP | 70,000 |
| IMAP / MAPI | 70,000 |
| WebMail & Pronto! (Flash) | 70,000 |
| Synchronization with mobile devices | 5,000 |
| Messages (XMPP / SIP) and statuses (Presence) | 70,000 |

Estimated traffic percentage:

| Protocol | Share of traffic (%) |
|---|---|
| POP | 20 |
| IMAP | 20 |
| MAPI | 5 |
| Webmail | 35 |
| Signaling (XMPP / SIP) | 20 |

Estimated number of simultaneously connected users: 4,000.
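The Example 1 figures can be combined into a rough per-protocol picture. Treating each protocol's share of traffic as its share of concurrent sessions is our simplifying assumption (traffic volume and session count are not the same thing), so the numbers are only indicative. The three-frontend count is the one recommended for this example.

```python
TOTAL_ACCOUNTS = 70_000
CONCURRENT = 4_000
FRONTENDS = 3  # frontends in the cluster recommended for this example
TRAFFIC_SHARE = {"POP": 20, "IMAP": 20, "MAPI": 5, "Webmail": 35, "XMPP/SIP": 20}  # percent

assert sum(TRAFFIC_SHARE.values()) == 100, "shares should add up to 100%"

peak_load = CONCURRENT / TOTAL_ACCOUNTS
sessions = {proto: CONCURRENT * pct // 100 for proto, pct in TRAFFIC_SHARE.items()}

print(f"peak load: {peak_load:.1%} of accounts online")  # 5.7%
for proto, count in sessions.items():
    print(f"{proto:>8}: {count} sessions")  # POP 800, IMAP 800, MAPI 200, Webmail 1400, XMPP/SIP 800
print(f"per frontend: about {CONCURRENT / FRONTENDS:.0f} sessions")  # about 1333
```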

Recommended architecture:

3x3 Dynamic Cluster (3 frontends, 3 backends).

Frontends

HP DL380G6 X5550

CPU: 2 x Intel Xeon Processor X5550 (2.66 GHz, 8MB L3 Cache, 95W, DDR3-1333, HT, Turbo 2/2/3/3)

Memory: 12 GB

Network Controllers: 2 x HP NC382i Dual Port Multifunction Gigabit Server Adapters

Storage controller: HP Smart Array P410i / 512MB, BBWC

Operating system: SuSE or Redhat Linux 64bit

Backends

HP ProLiant DL580G5 X7460 16GB (4P)

CPU: Intel Xeon X7460 (6 core, 2.67 GHz, 16 MB L3, 130W)

Memory: 16 GB

Network Controllers: 1GbE NC373i Multifunction 2 Ports

Storage controller: Smart Array P400i / 512MB, BBWC

Operating system: SuSE or Redhat Linux 64bit

If the user base grows to 100,000, add one frontend (4x3). At 200,000 users, use 6 frontends and 4 backends.

Example 2: VoIP service with Pronto! as a softphone, HD Audio with media streaming

| User type | Number of users |
|---|---|
| Total | 2,000,000 |
| VoIP | 90,000 |

Ratio of traffic types:

| Protocol | Share of traffic (%) |
|---|---|
| RTP | 80 |
| XIMSS (Pronto!: signaling, mail, calendars) | 20 |

Total number of simultaneous calls: 9,000.

The number of simultaneously open user sessions: 90,000.
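Because media passes through the server in this scenario, a back-of-the-envelope bandwidth estimate is useful. The codec, packetization interval, and header sizes are our assumptions, not figures from the example: we take "HD audio" to be a 64 kbit/s wideband codec such as G.722 with 20 ms packets over IPv4, and we assume every call is relayed, so the server receives and sends one stream per call leg.

```python
def rtp_stream_kbps(codec_kbps: float, ptime_ms: float, header_bytes: int = 40) -> float:
    """IP-level bitrate of one RTP stream: payload plus IPv4 (20) + UDP (8) + RTP (12) headers.

    Link-layer (Ethernet) overhead is not included.
    """
    packets_per_second = 1000 / ptime_ms
    payload_bytes = codec_kbps * 1000 / 8 * ptime_ms / 1000
    return (payload_bytes + header_bytes) * 8 * packets_per_second / 1000


CALLS = 9_000        # simultaneous calls, from the example
LEGS_PER_CALL = 2    # assumption: both parties' streams pass through the server
FRONTENDS = 3        # frontends in the recommended 3x3 cluster

per_stream = rtp_stream_kbps(64, 20)                     # 80 kbit/s
inbound_gbps = CALLS * LEGS_PER_CALL * per_stream / 1e6  # the same figure applies outbound
print(f"{per_stream:.0f} kbit/s per stream, {inbound_gbps:.2f} Gbit/s in each direction, "
      f"about {inbound_gbps / FRONTENDS * 1000:.0f} Mbit/s per frontend")
```

Under these assumptions each frontend would relay roughly 480 Mbit/s in each direction, before Ethernet framing overhead; a different codec or packet interval changes the result.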

Recommended architecture:

3x3 Dynamic Cluster (3 frontends, 3 backends).

Frontends

DELL PowerEdge R710

CPU: 2 x Intel Xeon Processor X5550 (2.66 GHz, 8MB L3 Cache, 95W, DDR3-1333, HT, Turbo 2/2/3/3)

Memory: 24 GB

Network Controllers: 2 x HP NC382i Dual Port Multifunction Gigabit Server Adapters

Storage controller: HP Smart Array P410i / 512MB with BBWC

Operating system: SuSE or Redhat Linux 64bit

Backends

DELL PowerEdge R710

CPU: 2 x Intel Xeon Processor X5550 (2.66 GHz, 8MB L3 Cache, 95W, DDR3-1333, HT, Turbo 2/2/3/3)

Memory: 16 GB

Network Controllers: 1GbE NC373i Multifunction 2 Ports

Storage Controller: Smart Array P400i / 512MB BBWC

Operating system: SuSE or Redhat Linux 64bit

Tips for setting up data storage for a cluster

In a shared cluster domain, all user accounts must be accessible to all backends. We recommend NAS with the NFS protocol for storage: since the CommuniGate Pro cluster does not use file-system-level locking, NFS is the most suitable protocol for it.

Multiple access points and multiple NFS servers can be used in the same cluster. Examples of solutions used:

- NetApp FAS2000

- EMC Celerra NS-120

- Sun Storage 7110

File structure in a cluster

Accounts in a shared domain are stored in folders with paths like:

SharedDomains/domainName/accountName.macnt/

For example:

SharedDomains/company.com/aivanov.macnt/

Storing tens of thousands of accounts in one directory is inconvenient (most file systems are slow to open folders containing many objects). For this reason, the “Account-level foldering” mechanism was introduced: accounts are stored in a set of subfolders:

SharedDomains/company.com/a.sub/aivanov.macnt/

or

SharedDomains/company.com/a.sub/i.sub/aivanov.macnt/

or

SharedDomains/company.com/ai.sub/v.sub/aivanov.macnt/

If the cluster hosts many domains (roughly 5,000 or more), it makes sense to use Domain-level foldering:

SharedDomains/c.sub/company.com/aivanov.macnt/

SharedDomains/c.sub/o.sub/company.com/aivanov.macnt/

Or, when there are many large domains, both levels combined:

SharedDomains/c.sub/company.com/a.sub/i.sub/aivanov.macnt/

It is best to choose a foldering method as soon as the number of users and domains is known, but these settings can also be changed while the cluster is in operation.
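The path patterns above can be generated by a small helper in which each level consumes the next characters of the domain or account name. This only reproduces the pattern visible in the examples; the helper, its parameters, and its handling of names shorter than the pattern are hypothetical, and CommuniGate Pro's own foldering settings may follow rules not shown here.

```python
from pathlib import PurePosixPath
from typing import List, Sequence


def sub_dirs(name: str, levels: Sequence[int]) -> List[str]:
    """Turn consecutive chunks of `name` into "<chunk>.sub" folder names.

    (1,) -> ["a.sub"], (1, 1) -> ["a.sub", "i.sub"], (2, 1) -> ["ai.sub", "v.sub"]
    for "aivanov". Stopping early on short names is a guess.
    """
    parts, pos = [], 0
    for n in levels:
        chunk = name[pos:pos + n]
        if not chunk:
            break
        parts.append(f"{chunk}.sub")
        pos += n
    return parts


def foldered_path(domain: str, account: str,
                  account_levels: Sequence[int] = (),
                  domain_levels: Sequence[int] = ()) -> str:
    """Build SharedDomains/[domain subfolders/]domain/[account subfolders/]account.macnt."""
    path = PurePosixPath("SharedDomains")
    path = path.joinpath(*sub_dirs(domain, domain_levels), domain)
    path = path.joinpath(*sub_dirs(account, account_levels), f"{account}.macnt")
    return str(path)


print(foldered_path("company.com", "aivanov", account_levels=(2, 1)))
# SharedDomains/company.com/ai.sub/v.sub/aivanov.macnt
print(foldered_path("company.com", "aivanov", account_levels=(1, 1), domain_levels=(1,)))
# SharedDomains/c.sub/company.com/a.sub/i.sub/aivanov.macnt
```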

Storage Optimization with SSD

CommuniGate Pro has a feature that can be used to optimize storage using an SSD.

Each account folder contains the account.settings and account.info files. These files are read every time a user logs in to the account, and the .info file changes every time the account's metadata changes (for example, when a SIP device registers).

To improve overall storage efficiency, you can store these files separately on a faster (and more expensive) medium such as an SSD. For 1 million accounts, the total size of all such files is 5-20 GB.

For example, suppose that in a standard cluster configuration the files are stored as:

SharedDomains/company.com/a.sub/aivanov.macnt/account.settings

SharedDomains/company.com/a.sub/aivanov.macnt/account.info

If we then enable “Fast Storage Type” (set it to a non-zero value, for example, 1), the paths to the .settings and .info files become:

SharedDomains/company.com/fast/a.sub/aivanov.settings

SharedDomains/company.com/fast/a.sub/aivanov.info

Thus, you can mount fast storage at SharedDomains/company.com/fast/, and all .settings and .info files will be stored on it.
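The mapping from a standard account path to its fast-storage counterpart, as shown in the example, can be written as a path transformation. The function is hypothetical and assumes the layout above (no domain-level foldering, so the domain is the second path component); the loop at the end only turns the quoted 5-20 GB per million accounts into a per-account figure.

```python
from pathlib import PurePosixPath

# Account files moved to fast storage and the suffix each one gets there.
FAST_SUFFIXES = {"account.settings": ".settings", "account.info": ".info"}


def fast_storage_path(standard_path: str) -> str:
    """Map SharedDomains/<domain>/<subfolders>/<name>.macnt/account.<x>
    to SharedDomains/<domain>/fast/<subfolders>/<name>.<x>."""
    parts = PurePosixPath(standard_path).parts
    if len(parts) < 4:
        raise ValueError(f"path too short: {standard_path}")
    root, domain = parts[0], parts[1]
    subfolders = parts[2:-2]
    account_dir, file_name = parts[-2], parts[-1]
    if not account_dir.endswith(".macnt") or file_name not in FAST_SUFFIXES:
        raise ValueError(f"not an account .settings/.info path: {standard_path}")
    name = account_dir[: -len(".macnt")]
    return str(PurePosixPath(root, domain, "fast", *subfolders, name + FAST_SUFFIXES[file_name]))


print(fast_storage_path("SharedDomains/company.com/a.sub/aivanov.macnt/account.settings"))
# SharedDomains/company.com/fast/a.sub/aivanov.settings

# 5-20 GB for 1,000,000 accounts, in decimal units.
for total_gb in (5, 20):
    print(f"{total_gb} GB / 1,000,000 accounts = {total_gb * 1e9 / 1e6 / 1e3:.0f} KB per account")
```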

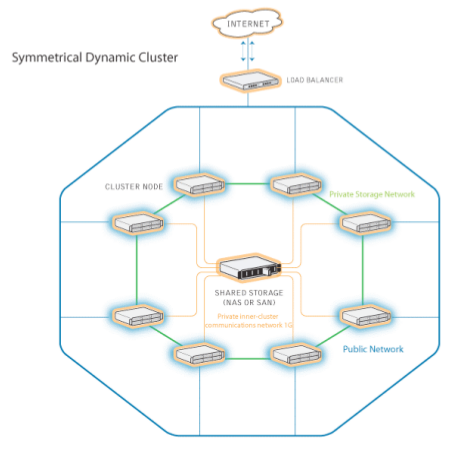

Symmetric Dynamic Cluster

The CommuniGate Pro cluster can be configured so that each node acts as both a frontend and a backend. This is called a symmetric configuration:

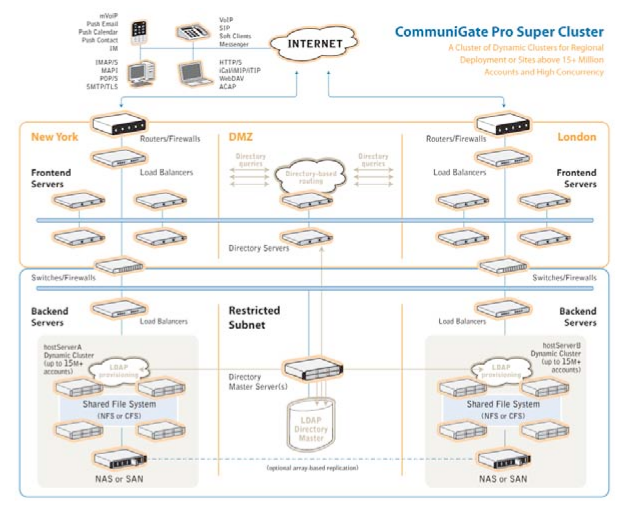

Super Cluster (cluster of clusters)

This configuration is used when you need:

- Regional distribution of capacity, for better connection quality and local access for users

- Service for a very large number of users (more than 15 million accounts), with the load shared among several Dynamic Clusters

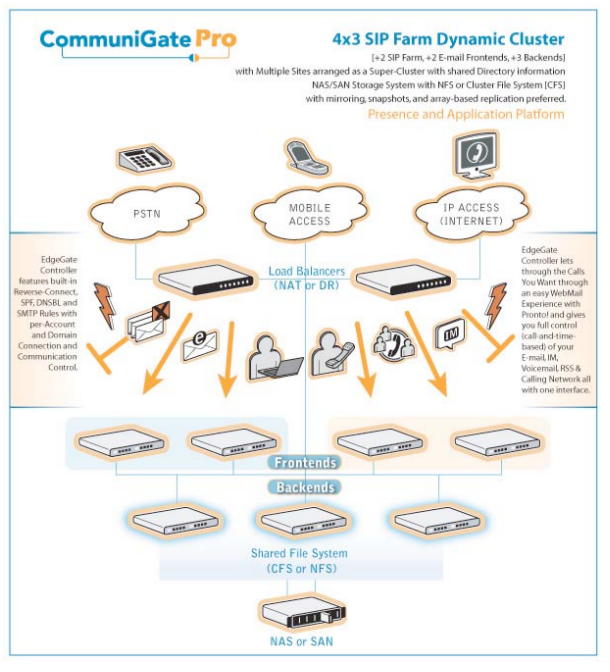

Dynamic Cluster in SIP Farm Configuration

SIP Farm is a CommuniGate Systems technology for clustering VoIP services to achieve 99.999% availability, fault tolerance, and scalability.

Both normal Dynamic Cluster and Super Cluster can be configured as a SIP farm (in this case we say that some of the nodes are allocated to the SIP farm).

Incoming SIP UDP packets and TCP connections are distributed through the load balancers as usual. The server that receives a packet determines whether it should be processed by another SIP farm member (for example, if it is a response or an ACK for an existing request, or a packet for a task created on a specific server) and forwards it if necessary.

Packets that are not bound to a specific node are distributed among farm members by intracluster algorithms, based on the load and availability of the nodes in the SIP farm.
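The routing decision described above can be modeled in a few lines. This is a hypothetical sketch, not CommuniGate Pro code: `FarmMember`, `SipFarm`, the `packet_key` (standing in for whatever identifies a transaction or task), and the least-loaded selection rule are our assumptions, and failover of state owned by a failed member is not modeled.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class FarmMember:
    """A SIP farm node (hypothetical model)."""
    name: str
    available: bool = True
    load: int = 0  # e.g. number of active transactions or tasks


@dataclass
class SipFarm:
    """Hypothetical model of the SIP farm routing decision; not CommuniGate Pro code."""
    members: List[FarmMember]
    owners: Dict[str, str] = field(default_factory=dict)  # transaction/task id -> member name

    def route(self, received_on: str, packet_key: str, is_new_request: bool) -> str:
        """Return the name of the member that should process a packet received on `received_on`."""
        if not is_new_request:
            # Responses, ACKs and task messages go to the member that owns the state;
            # handling unknown state locally is an assumption.
            return self.owners.get(packet_key, received_on)
        candidates = [m for m in self.members if m.available]
        if not candidates:
            raise RuntimeError("no available SIP farm members")
        chosen = min(candidates, key=lambda m: m.load)  # least-loaded available member
        chosen.load += 1
        self.owners[packet_key] = chosen.name
        return chosen.name


farm = SipFarm([FarmMember("sip1", load=5), FarmMember("sip2", load=2),
                FarmMember("sip3", available=False)])
print(farm.route("sip1", "call-1", is_new_request=True))   # sip2 (least loaded)
print(farm.route("sip1", "call-1", is_new_request=False))  # sip2 (ACK forwarded to the owner)
```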

Results

The Dynamic Cluster is considered the flagship among all CommuniGate Pro server configurations. Historically, use cases like those described in this article, high-performance services intended for mass use, have always been the basis of the product's design.

If you have any questions about the product, do not hesitate to contact us at russia@communigate.com. For complex technical issues, contact support@communigate.com.

Download the free version (up to 5 users, no clustering) from our website.

Source: https://habr.com/ru/post/196660/
