Writing a server that does not fall under load

From the translator: This is the fifth article in a series about Node.js from the Mozilla Identity team, which works on the Persona project. All articles in the series:

- "Hunting for memory leaks in Node.js"

- "We load Node to the eyeballs"

- "Store the session on the client to simplify application scaling"

- "Frontend performance. Part 1 - concatenation, compression, caching"

- "We write a server that does not fall under load"

- "Frontend performance. Part 2 - we cache dynamic content using etagify"

- "Taming Web Application Configurations with node-convict"

- "Frontend performance. Part 3 - font optimization"

- "Localization of Node.js Applications. Part 1"

- "Localization of Node.js Applications. Part 2: Toolkit and Process"

- "Localization of Node.js Applications. Part 3: Localization in Action"

- "Awsbox - PaaS infrastructure for deploying Node.js applications in the Amazon cloud"

How do you write a Node.js application that keeps working even under an impossible load? This article presents a technique, and the node-toobusy library that implements it, whose essence is captured most briefly by this code snippet:

```javascript
var toobusy = require('toobusy');

app.use(function(req, res, next) {
  if (toobusy()) res.send(503, "I'm busy right now, sorry.");
  else next();
});
```

What is the problem?

If your application does something important for people, it is worth spending a little time thinking through the most disastrous scenarios. The disaster may be of a good kind: your site lands in the social media spotlight, and instead of ten thousand visitors a day you suddenly get a million. If you prepare in advance, you can build a site that withstands a sudden surge in traffic that exceeds the usual load by orders of magnitude. If you neglect these preparations, the site will go down exactly when you least want it to, when everyone is watching.

The surge in traffic can also be malicious, for example a DoS attack. The first step in fighting such attacks is to write a server that does not crash.

Your server is under load

To show how an ordinary server that is not prepared for a traffic surge behaves, I wrote a demo application that makes five asynchronous calls for each request, spending five milliseconds of CPU time in total.
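The demo application itself is not shown in the article. A workload of that shape could be simulated roughly as follows. This is only a sketch: the names `burnCpu` and `simulateRequest` are hypothetical, and the even split of one millisecond per asynchronous step is an assumption.

```javascript
// Busy-wait for roughly `ms` milliseconds to simulate CPU-bound work.
function burnCpu(ms) {
  const end = process.hrtime.bigint() + BigInt(ms) * 1000000n;
  while (process.hrtime.bigint() < end) {
    // spin until the deadline passes
  }
}

// Simulate one request: `steps` asynchronous hops, each costing
// `msPerStep` milliseconds of CPU time (assumed: 5 steps of 1 ms).
function simulateRequest(done, steps = 5, msPerStep = 1) {
  let remaining = steps;
  function step() {
    burnCpu(msPerStep);
    remaining -= 1;
    if (remaining === 0) return done();
    setImmediate(step); // yield to the event loop between steps
  }
  setImmediate(step);
}
```

Calling `simulateRequest` from an HTTP request handler and sending the response in the `done` callback gives a server whose per-request cost resembles the one described above.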

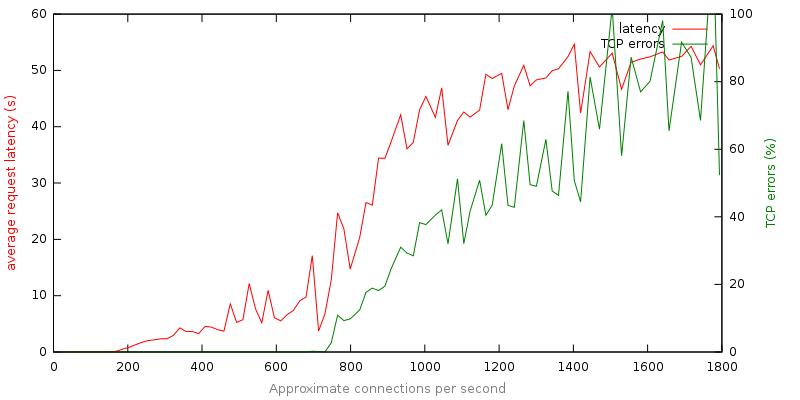

This roughly corresponds to the usual application, which can, with each request, write something to the logs, access the database, render the template and send a response to the client. Below is a graph of the delay and TCP error versus the number of connections.

The conclusions from this data are fairly obvious:

- The server cannot be called responsive. Under a load six times the nominal one (1,200 requests per second), it responds after an average of 40 seconds.

- Failures look terrible. In 80% of cases, the user receives an error message after almost a minute of anxious waiting.

Learning to refuse politely

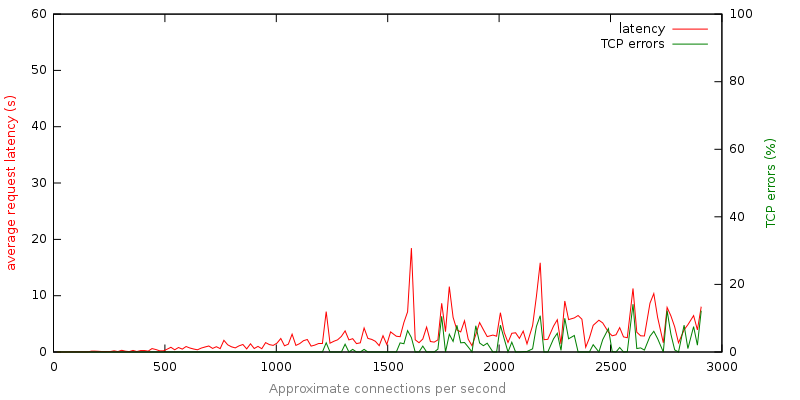

For comparison, I applied the approach described at the beginning of the article to the demo application. It allows you to determine when the load exceeds the allowable and immediately respond with an error message. Here is a schedule similar to the first example for it:

The graph does not show the number of 503 (Service Unavailable) errors; it rises gradually as the load increases. What conclusions can be drawn from this graph?

- Preemptive error responses add reliability. Under a load ten times the nominal one, the application remains quite responsive.

- Both success and failure are fast. The average response time is almost always under 10 seconds.

- Failures are polite. By rejecting requests when the server is overloaded, we replace an awkward timeout with an immediate 503 response.

To make the server report a 503 error gracefully, you only need to create a small template with a message for the user. Examples of such responses can be seen on many popular sites.
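As an illustration of such a template, here is a minimal sketch that uses only Node's built-in `http` module. The helper names `buildBusyResponse` and `createServer`, the page text, and the five-second `Retry-After` value are all assumptions made for the example and are not taken from the article or from node-toobusy.

```javascript
const http = require('http');

// Small static page shown to users while the server is overloaded.
const BUSY_PAGE = [
  '<!DOCTYPE html>',
  '<html><head><meta charset="utf-8"><title>Service unavailable</title></head>',
  '<body><h1>We are a little busy right now</h1>',
  '<p>Please try again in a few seconds.</p></body></html>',
].join('\n');

// Build the status, headers and body of the 503 response (hypothetical helper).
function buildBusyResponse(retryAfterSeconds) {
  return {
    statusCode: 503,
    headers: {
      'Content-Type': 'text/html; charset=utf-8',
      'Content-Length': Buffer.byteLength(BUSY_PAGE),
      'Retry-After': String(retryAfterSeconds), // hint for well-behaved clients
    },
    body: BUSY_PAGE,
  };
}

// `isTooBusy` is any function that returns true under overload,
// for example the toobusy() check from the article.
function createServer(isTooBusy, handler) {
  return http.createServer(function (req, res) {
    if (isTooBusy()) {
      const busy = buildBusyResponse(5);
      res.writeHead(busy.statusCode, busy.headers);
      res.end(busy.body);
      return;
    }
    handler(req, res);
  });
}
```

Because the page is a constant string, refusing a request costs almost nothing, which matters precisely when the process has no time to spare.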

How to use node-toobusy

The node-toobusy module is available as an npm package and on GitHub. After installation (npm install toobusy), it is included in the application in the usual way:

```javascript
var toobusy = require('toobusy');
```

Once loaded, the module begins actively monitoring the process to determine when it is overloaded. You can check its state anywhere in the application, but it is best to do so early in request processing.

```javascript
// Register this middleware first, so that requests are rejected
// before any time is spent on them.
app.use(function(req, res, next) {
  // Check toobusy() and refuse the request if the process is overloaded.
  if (toobusy()) res.send(503, "I'm busy right now, sorry.");
  else next();
});
```

Even in this form, the node-toobusy module significantly increases the application's resilience under load. All that remains is to choose the sensitivity value best suited to your application.

How does it work?

How can you reliably determine that the Node application is too busy?

This is a more interesting question than you might expect, especially since node-toobusy has to work out of the box in any application. Consider some approaches to the problem:

- Tracking CPU usage of the current process. We could use the number shown by the top command: the percentage of time the processor spends on the application. If, say, this figure exceeds 90%, we could conclude that the application is overloaded. But if several processes run on the machine, there is no guarantee that the Node process can get 100% of the processor. In that scenario the application may never reach 90% and still be practically down.

- Tracking total system load. We could measure the total system load and take it into account when detecting overload. We would also have to account for the number of available processor cores, and so on. This approach very quickly becomes too complicated: it requires platform-specific extensions, and process priority has to be taken into account as well!
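To make the first approach concrete, here is a rough sketch of measuring the CPU share of the current process with Node's built-in `process.cpuUsage()`. The helper names `cpuPercent` and `sampleCpu` are hypothetical, and this is not something node-toobusy does; it only illustrates the kind of number a CPU-based check would rely on.

```javascript
// Convert a process.cpuUsage() delta (values in microseconds)
// into a percentage of one CPU core over `elapsedMs` of wall time.
function cpuPercent(usageDelta, elapsedMs) {
  const busyMs = (usageDelta.user + usageDelta.system) / 1000;
  return (busyMs / elapsedMs) * 100;
}

// Sample this process's CPU share over `windowMs`,
// then pass the percentage to `callback`.
function sampleCpu(windowMs, callback) {
  const startUsage = process.cpuUsage();
  const startTime = Date.now();
  setTimeout(function () {
    const delta = process.cpuUsage(startUsage); // usage since startUsage
    callback(cpuPercent(delta, Date.now() - startTime));
  }, windowMs);
}
```

On a machine shared with other busy processes, the value reported here can stay well below a 90% threshold while requests are already piling up, which is exactly the weakness described in the first item.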

We need a solution that just works. All we need is to determine that our application is unable to respond to requests at an acceptable rate. This criterion does not depend on the platform or on other processes in the system.

node-toobusy measures the delay of the main event loop. This loop underlies every Node.js application. All work is placed in a queue, and the main loop executes the tasks from this queue one after another. When the process is overloaded, the queue starts to grow: there is more work than can be done. To find out the degree of overload, it is enough to measure how long a tiny task has to wait in the queue. For this, node-toobusy uses a callback that should be called every 500 milliseconds. Subtracting 500 ms from the actually measured interval gives the time the task spent in the queue, that is, the delay we are looking for.

Thus, to detect process overload, node-toobusy continuously measures the delay of the main event loop. It is a simple and reliable method that works in any environment on any server.
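The measurement described above can be sketched in a few lines of plain JavaScript. This is a simplified illustration, not the library's actual implementation: the names `computeLag` and `createLagMonitor` are hypothetical, and the 70 ms threshold is an assumed value that plays the role of the sensitivity setting.

```javascript
const CHECK_INTERVAL_MS = 500; // interval mentioned in the article
const MAX_LAG_MS = 70;         // assumed threshold, for illustration only

// Lag is how much later than scheduled the timer callback actually ran.
function computeLag(elapsedMs, intervalMs) {
  return Math.max(0, elapsedMs - intervalMs);
}

// `now` is injectable so that the logic can be driven without real timers.
function createLagMonitor(intervalMs = CHECK_INTERVAL_MS,
                          maxLagMs = MAX_LAG_MS,
                          now = Date.now) {
  let last = now();
  let lag = 0;

  function tick() {
    const current = now();
    lag = computeLag(current - last, intervalMs);
    last = current;
  }

  const timer = setInterval(tick, intervalMs);
  timer.unref(); // do not keep the process alive just for monitoring

  return {
    tick: tick, // exposed so a measurement can be triggered manually
    lag: function () { return lag; },
    tooBusy: function () { return lag > maxLagMs; },
    stop: function () { clearInterval(timer); },
  };
}
```

A request handler would call `tooBusy()` before doing any work and answer with a 503 when it returns true. Lowering the threshold makes the server refuse requests earlier; raising it lets more requests through at the cost of longer response times.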


Source: https://habr.com/ru/post/196518/
