Hardware virtualization. Theory, reality and support in processor architectures

In this post I will try to describe the basics and specifics of hardware support for computer virtualization. I will begin by defining the three necessary conditions for virtualization and stating the theoretical grounds for achieving them. Then I will show how the theory is reflected in harsh reality. As an illustration, I will briefly describe how vendors of processors of different architectures implemented virtualization in their products. Finally, I will raise the question of recursive virtualization.

First, a few definitions. They may not be quite typical for articles on this topic, but they are used throughout this note.

- Host: a hardware system running a virtual machine monitor or simulator.

- Guest: a virtual or simulated system running under the control of a monitor or simulator. It is also sometimes called the target system.

I will define the rest of the terminology as it appears in the text.

Introduction

Virtualization attracted interest even before the invention of the microprocessor, in the era dominated by large systems, mainframes, whose resources were very expensive and whose downtime was economically unacceptable. Virtualization made it possible to increase the utilization of such systems while freeing users and application programmers from having to rewrite their software, since from their point of view the virtual machine was identical to the physical one. The pioneer in this area was IBM with its System/360 and System/370 mainframes, created in the 1960s and 1970s.

Classic virtualization criterion

Not surprisingly, the criteria for building an efficient virtual machine monitor were formulated at about the same time, in the classic 1974 paper by Gerald Popek and Robert Goldberg “Formal requirements for virtualizable third generation architectures” [8]. Let us look at its main premises and state its main conclusion.

System model

The paper uses a simplified model of a “standard” computer consisting of one central processor and linear, homogeneous RAM. Peripheral devices and the means of interacting with them are omitted. The processor supports two modes of operation: supervisor mode, used by the operating system, and user mode, in which applications run. Memory supports segmentation, which is used to implement virtual memory.

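Requirements for a virtual machine monitor:

- Isolation: each virtual machine should have access only to the resources assigned to it. It must not be able to affect the operation of either the monitor or other VMs.

- Equivalence: any program executed under the control of the monitor should behave exactly as it would on a real system, except for effects caused by two circumstances: a difference in the amount of available resources (for example, a VM may have less memory) and in the duration of operations (because execution time is shared with other VMs).

- Efficiency: the original paper states this condition as “a statistically dominant subset of the virtual processor's instructions must be executed directly by the host processor, without intervention by the VM monitor”. In other words, a significant share of the instructions must run in direct execution mode. Efficiency is the most ambiguous of the three requirements, and we will return to it. Simulators based on instruction interpretation do not satisfy it, since every guest instruction has to be processed by the simulator.

Instruction classes

The processor state contains at least three registers: M, which determines whether the processor is in supervisor mode s or user mode u; P, the current instruction pointer; and R, which defines the boundaries of the memory segment in use (in the simplest case R specifies a single segment, R = (l, b), where l is the start address of the range and b is its length).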

Memory E consists of a fixed number of cells addressed by their index t, written E[t]. The sizes of the memory and of its cells are irrelevant here.

When executed, each instruction i can in general change both (M, P, R) and the memory E, i.e. it is a transformation $i: (M_1, P_1, R_1, E_1) \to (M_2, P_2, R_2, E_2)$.

An instruction is said to cause a trap under some input conditions if, as a result of its execution, memory does not change except for a single cell E[0], into which the previous processor state $(M_1, P_1, R_1)$ is stored, while the new processor state $(M_2, P_2, R_2)$ is loaded from E[1]. In other words, a trap saves the full state of the program as it was just before its last instruction started and transfers control to a handler. In conventional systems this handler usually runs in supervisor mode and performs additional actions on the system state; it then returns control to the program by restoring the state from E[0].

Traps can be of two kinds:

- Control flow trap: caused by an attempt to change the processor state.

- Memory protection trap: caused by an access to memory outside the range defined in R.

These kinds are not mutually exclusive: a single instruction can cause both a control flow trap and a memory protection trap at once.
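To make the model concrete, here is a minimal Python sketch of the state (M, P, R), the memory E and the trap rule: a trap writes the old state into E[0] and loads the new one from E[1]. The names `CPU`, `trap`, `load` and `set_r` are hypothetical illustrations, not taken from the paper, and addressing assumes the simple relocation-bounds form R = (l, b), where cell t of the segment is E[l + t].

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class CPU:
    M: str      # mode: "s" (supervisor) or "u" (user)
    P: int      # instruction pointer
    R: tuple    # relocation-bounds register (l, b): segment start and length

def trap(cpu, E):
    """Trap as defined in the model: only E[0] changes (it receives the old
    state), and the new processor state is taken from E[1]."""
    E[0] = cpu
    return E[1]

def load(cpu, E, t):
    """Innocuous instruction: read cell t of the current segment R = (l, b).
    Returns (new_state, value); value is None if a memory protection trap occurred."""
    l, b = cpu.R
    if not 0 <= t < b:                      # outside the segment
        return trap(cpu, E), None
    return CPU(cpu.M, cpu.P + 1, cpu.R), E[l + t]

def set_r(cpu, E, new_r):
    """Privileged instruction: change R. In user mode it causes a control flow trap."""
    if cpu.M == "u":
        return trap(cpu, E)
    return CPU(cpu.M, cpu.P + 1, new_r)

# Memory: E[0] is the save slot, E[1] holds the handler's (supervisor) state.
E = [None] * 16
E[1] = CPU("s", 100, (0, 16))
for k in range(2, 16):
    E[k] = k * 10

user = CPU("u", 0, (2, 4))                  # user segment covers cells 2..5
state, value = load(user, E, 1)             # reads E[3]
trapped, missing = load(user, E, 7)         # out of bounds: memory protection trap
```

Note that the handler never sees a partially executed instruction: the saved state in E[0] is exactly the state before the faulting instruction.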

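The machine instructions of the processor under consideration can be classified as follows:

- Privileged. Instructions that always cause a control flow trap when executed with M = u. In other words, such an instruction can be executed only in supervisor mode; otherwise it necessarily causes an exception.

- Service (English: sensitive; I do not know an established Russian term for this concept, and the literature sometimes simply calls them “sensitive” instructions). This class consists of two subclasses. 1. Instructions that complete without a memory protection trap and change M and/or R: they can switch the processor from supervisor to user mode or back, or change the position and size of the available memory segment. 2. Instructions whose behavior, when they do not cause a memory protection trap, depends either on the mode M or on the value of R.

- Harmless (English: innocuous). Non-service instructions: the widest class, which manipulate nothing except the instruction pointer P and the memory E, and whose behavior does not depend on the processor mode or on where in memory they are located.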

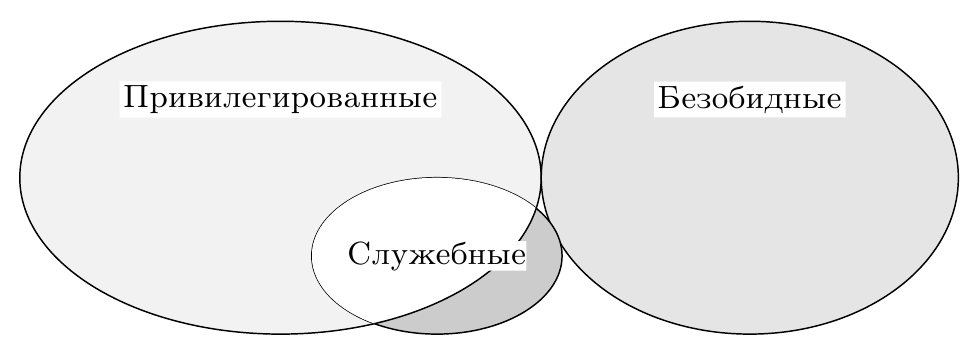

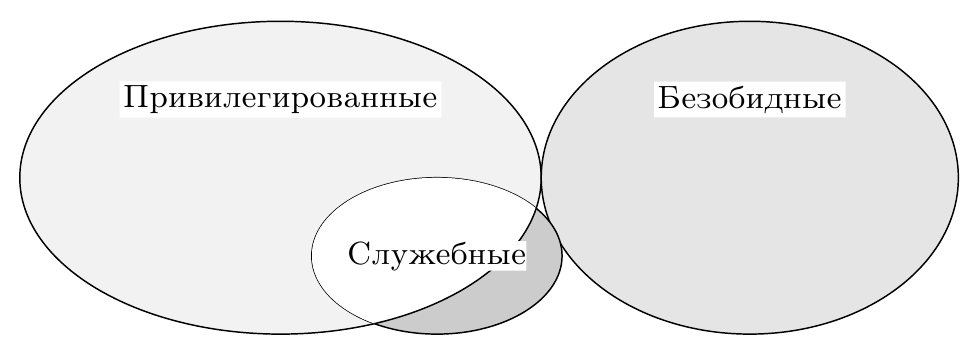

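Sufficient condition for building a VM monitor

A sufficient condition for building a virtual machine monitor that satisfies the three requirements above is given by the following statement: the set of service instructions is a subset of the set of privileged instructions (Fig. 1). Omitting the formal proof of Theorem 1 from the paper, we note the following points.

- Isolation is ensured by running the monitor in supervisor mode and the VM only in user mode. The VM then cannot arbitrarily change system resources: an attempt to execute a service instruction causes a control flow trap and a transfer to the monitor, and memory outside the VM's segment cannot be reached because the configuration of R does not allow it, so the processor raises a memory protection trap.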

Fig. 1: The virtualization condition is satisfied. The set of service instructions is a subset of the privileged ones
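The sufficient condition is a simple set inclusion, so it can be checked mechanically once every instruction is labelled. The sketch below uses a hypothetical toy instruction set; `read_m` stands for an instruction that reveals the processor mode without trapping (similar in spirit to IA-32's SMSW), which is exactly what breaks the condition.

```python
# Hypothetical toy instruction set: for each instruction, whether it is
# privileged (traps when M = "u") and whether it is service (sensitive).
TOY_ISA = {
    "add":    {"privileged": False, "sensitive": False},  # harmless
    "load":   {"privileged": False, "sensitive": False},  # harmless
    "set_r":  {"privileged": True,  "sensitive": True},
    "set_m":  {"privileged": True,  "sensitive": True},
    "read_m": {"privileged": False, "sensitive": True},   # reads M without trapping
}

def classify(isa):
    """Split the instruction set into privileged, service and harmless sets."""
    privileged = {name for name, f in isa.items() if f["privileged"]}
    sensitive = {name for name, f in isa.items() if f["sensitive"]}
    harmless = set(isa) - sensitive
    return privileged, sensitive, harmless

def popek_goldberg_ok(isa):
    """Sufficient condition: every service instruction is privileged.
    Returns (condition_holds, sorted list of offending instructions)."""
    privileged, sensitive, _ = classify(isa)
    offenders = sensitive - privileged
    return not offenders, sorted(offenders)
```

For this toy set the check reports `read_m` as the only offender; making it privileged satisfies the condition.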

Despite the simplicity of the model and of the conclusions drawn from it, the work of Popek and Goldberg is still relevant. Note that violating the conditions it describes does not make creating or using virtual machines on a given architecture fundamentally impossible, and there are practical implementations that confirm this. However, it becomes impossible to keep the optimal balance between the three properties: isolation, equivalence and efficiency. Most often the price is paid in VM speed, because the monitor has to carefully find and programmatically control the execution of service but non-privileged instructions, since the hardware itself does not do this (Fig. 2). Even a single such instruction executed directly by the VM threatens the stable operation of the monitor, so the monitor has to scan the entire stream of guest instructions.

Fig. 2: The virtualizability condition is violated. Service but non-privileged instructions require complex logic in the monitor
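The next sketch, again over a hypothetical toy instruction set, shows why a service but non-privileged instruction is dangerous for a trap-and-emulate monitor. The guest kernel runs deprivileged in real user mode while the monitor keeps its virtual mode; only instructions that trap can be emulated, so a directly executed `read_m` sees the real mode `u` instead of the virtual `s`, and equivalence is lost.

```python
def run_native(program, mode="s"):
    """Reference behavior: the guest kernel runs directly in supervisor mode."""
    trace = []
    for op in program:
        if op == "read_m":
            trace.append(mode)
        elif op == "set_m_user":
            mode = "u"
    return trace

def run_trap_and_emulate(program, privileged):
    """Guest runs in real user mode; the monitor keeps a virtual mode.
    Instructions in `privileged` trap and are emulated on the virtual state;
    all others execute directly and therefore see the real mode."""
    real_mode, virtual_mode = "u", "s"
    trace, traps = [], 0
    for op in program:
        if op in privileged:              # hardware trap, monitor emulates
            traps += 1
            if op == "read_m":
                trace.append(virtual_mode)
            elif op == "set_m_user":
                virtual_mode = "u"
        elif op == "read_m":              # direct execution leaks the real mode
            trace.append(real_mode)
    return trace, traps

program = ["add", "read_m", "set_m_user", "read_m"]
```

Making `read_m` privileged restores the native trace at the cost of one more trap per occurrence, which is the efficiency price described above.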

The paper [8] itself contains both explicit simplifications of the structure of real systems (no peripherals or input/output) and implicit assumptions about the structure of guest programs (consisting almost entirely of harmless instructions) and of host systems (a single processor).

Let us now consider these limitations in more detail and suggest how the criterion can be extended to additional resources that require virtualization, thereby increasing its practical value for architects of new computing systems.

For programs to run efficiently inside a VM, most of their instructions must be harmless. As a rule, this holds for application programs. Operating systems, in contrast, are designed to manage system resources, which implies the use of privileged and service instructions; the monitor has to intercept and emulate them, with a corresponding drop in performance. Therefore, ideally, the instruction set should contain as few privileged instructions as possible, so that traps occur as rarely as possible.

Since peripheral devices are a service resource of the computer, isolation and equivalence require that all attempts to access them be controlled by the VM monitor, in the same way as the kernel of a multitasking operating system controls them. Today devices are most often accessed through memory-mapped I/O, i.e. their registers are mapped into the physical address space of the system. This means that inside a VM, reads and writes to such regions must either cause a memory protection trap or be harmless, i.e. neither cause a trap nor affect the system state in an uncontrolled way.

How intensively applications interact with peripherals varies with their functionality, and this determines how much they slow down under virtualization. In addition, a VM monitor can make the various classes of peripherals present on the host available to several VMs in different ways.

Interrupts are the mechanism for notifying the processor of events in external devices that require the attention of the operating system. When virtual machines are used, the monitor must be able to control interrupt delivery, since some or all interrupts must be handled inside the monitor. For example, the monitor can use timer interrupts to track and limit guest use of processor time and to switch between several simultaneously running VMs. In addition, with several guests it is not known in advance to which of them an interrupt should be delivered, and the monitor has to decide.

The simplest solution that ensures isolation is to route all interrupts to the VM monitor. Equivalence is then ensured by the monitor itself: when needed, an interrupt is delivered into the guest by emulating the corresponding change of the guest's state. The monitor can also create virtual interrupts that are caused only by its own logic rather than by external events. However, this solution is not optimal in terms of efficiency. As a rule, the system must respond to an interrupt within a bounded time; otherwise the response becomes useless for the external device or has disastrous consequences for the system as a whole. A virtualization layer increases the delay between the moment an event occurs and the moment the guest handles it, compared with a system without virtualization. Hardware control over interrupt delivery is more efficient: it allows some interrupts to be harmless to the system state, so that the monitor does not have to intervene every time.
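A minimal sketch of the “route everything to the monitor” policy, using hypothetical names (`InterruptRouter`, vector numbers, VM names). Every physical interrupt first reaches the monitor, which either consumes it (for example, a timer used for scheduling VMs) or queues a virtual interrupt for the owning guest. Pending interrupts, including purely virtual ones generated by the monitor, are delivered only when the guest is next entered, which is where the extra latency comes from.

```python
from collections import deque

class InterruptRouter:
    """Hypothetical monitor-side router: all physical interrupts arrive at the
    monitor, which handles them itself or queues them for a guest."""

    def __init__(self):
        self.owner = {}          # vector -> VM name; None means the monitor owns it
        self.pending = {}        # VM name -> deque of virtual interrupt vectors
        self.host_handled = []   # vectors consumed by the monitor itself

    def assign(self, vector, vm):
        self.owner[vector] = vm
        if vm is not None:
            self.pending.setdefault(vm, deque())

    def on_interrupt(self, vector):
        vm = self.owner.get(vector)            # unassigned vectors stay with the monitor
        if vm is None:
            self.host_handled.append(vector)   # e.g. timer tick used to schedule VMs
        else:
            self.pending[vm].append(vector)    # delivered on the next guest entry

    def inject_virtual(self, vm, vector):
        """Queue an interrupt generated by the monitor's own logic."""
        self.pending.setdefault(vm, deque()).append(vector)

    def enter_guest(self, vm):
        """Return (and clear) the interrupts to deliver before resuming `vm`."""
        queue = self.pending.setdefault(vm, deque())
        delivered = list(queue)
        queue.clear()
        return delivered
```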

Almost all modern computers contain more than one core or processor. In addition, several VMs can be executed within one monitor, each of which can have several virtual processors at its disposal. Consider how these circumstances affect the conditions of virtualization.

Taking several host and guest processors into account leaves the condition of efficient virtualizability in force. However, attention must be paid to the efficiency of multithreaded applications inside the VM. Unlike single-threaded ones, they synchronize program parts running on different virtual processors. For example, all participating threads may wait until each of them reaches a predetermined point of the algorithm, a so-called barrier. In a virtualized system, one or several guest threads may turn out to be inactive because the monitor has preempted them, and the remaining threads will waste time waiting.

An example of such inefficient guest behavior is synchronization using spin locks inside a VM [9]. Spin locks are inefficient and therefore not used on single-processor systems, but with multiple processors they are a lightweight alternative to other, heavier locks used to enter the critical sections of parallel algorithms. They are used mostly inside the operating system rather than in user programs, since only the OS can determine exactly which system resources can be efficiently protected by spin locks. However, in a virtual machine it is not the OS that actually schedules resources but the VM monitor, which is generally unaware of these locks and can preempt the thread that is able to release a resource while another thread keeps spinning on the lock, wasting CPU time. The best solution is to deactivate the blocked thread until the resource it needs is released.
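The remedy mentioned above, not letting a waiting thread spin indefinitely, can be sketched as a spin-then-block lock: spin for a bounded number of attempts, then fall back to a blocking wait so the CPU can go to the thread that holds the lock. This is a hypothetical user-level illustration built on Python's `threading.Lock`, not a description of any particular OS or hypervisor; the `spin_limit` value is arbitrary.

```python
import threading

class SpinThenBlockLock:
    """Hypothetical hybrid lock: spin briefly with non-blocking attempts,
    then stop burning CPU and block until the holder releases the lock."""

    def __init__(self, spin_limit=100):
        self._lock = threading.Lock()
        self.spin_limit = spin_limit
        self.blocked_waits = 0   # times spinning gave up (updated under the lock)

    def acquire(self):
        for _ in range(self.spin_limit):
            if self._lock.acquire(blocking=False):   # cheap attempt, no sleeping
                return
        self._lock.acquire()                         # sleep instead of spinning
        self.blocked_waits += 1                      # safe: we now hold the lock

    def release(self):
        self._lock.release()

    def __enter__(self):
        self.acquire()
        return self

    def __exit__(self, *exc):
        self.release()
```

The design choice is the bound: short critical sections are still entered cheaply by spinning, while a long wait (for example, because the holder's virtual processor was preempted) degrades to blocking instead of wasting a whole time slice.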

The existing solutions for this problem are described below.

Finally, we note that interrupt delivery and handling schemes in multiprocessor systems are also more complex; this has to be taken into account when creating a VM monitor for such systems, and its efficiency may be lower than that of a single-processor equivalent.

The machine instruction model used earlier to state the efficient virtualization criterion relied on a simple linear address translation scheme based on segmentation, popular in the 1970s. It is computationally simple and does not change when a VM monitor is introduced, so the paper did not analyze how the address translation mechanism affects efficiency.

Modern paged virtual memory applies a non-linear translation from the virtual addresses of user applications to the physical addresses used by the hardware. The system resource involved is the register that points to the translation table (in practice, several tables are usually used, forming a hierarchy with a common root). When VMs are running, this pointer must be virtualized, since each guest system has its own register contents as well as its own table location and contents. A software implementation of this mechanism inside the monitor is expensive, so applications that use memory intensively can lose efficiency under virtualization.

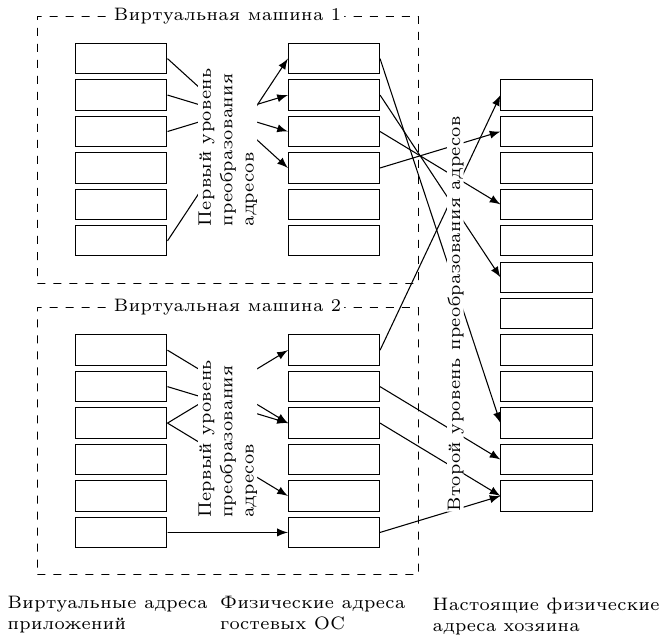

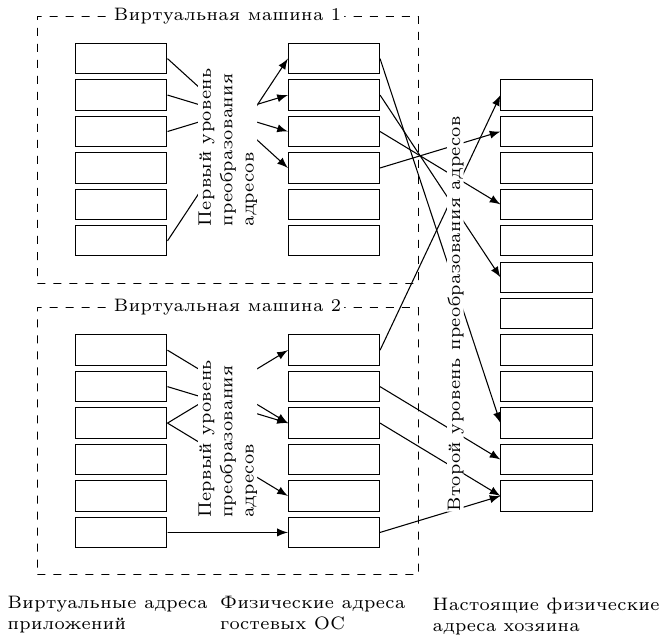

To solve this problem, two-level hardware address translation is used (Fig. 3). Guest OSes see only the first level, and the physical address produced for them is then translated by the second level into the real physical address.

Fig. 3: Two-level address translation. The first level is controlled by the guest OS, the second by the virtual machine monitor
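The composition of the two translation levels can be sketched with single-level page tables represented as dictionaries (page number to frame number). Real hardware walks multi-level radix tables and caches the results; the 4 KiB page size and the function names here are assumptions for illustration.

```python
PAGE = 4096  # assumed page size

def translate(table, addr, level):
    """Translate one address through a single-level page table (page -> frame)."""
    page, offset = divmod(addr, PAGE)
    if page not in table:
        raise LookupError(f"{level} fault at {addr:#x}")
    return table[page] * PAGE + offset

def two_level(guest_pt, nested_pt, gva):
    """First level (guest OS): guest virtual -> guest physical.
    Second level (monitor): guest physical -> host physical."""
    gpa = translate(guest_pt, gva, "guest page")
    return translate(nested_pt, gpa, "nested page")

guest_pt = {0: 5, 1: 7}        # owned and edited by the guest OS
nested_pt = {5: 100, 7: 42}    # owned by the monitor, invisible to the guest
```

A fault at the first level is delivered to the guest OS, while a fault at the second level goes to the monitor, which is what lets the guest manage its own tables without trapping on every change.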

Another computer resource involved in address translation is the translation lookaside buffer (TLB), consisting of several entries. Each guest system has its own TLB contents, so the TLB must be flushed when switching to another VM or to the monitor. This hurts performance, because refilling it takes time, during which the slower path through the address translation tables in memory has to be used.

The solution is to share TLB resources among all systems [10]. Each buffer entry is associated with an identifier, a tag unique to each VM. During a lookup, the hardware considers only entries whose tag matches the current VM.
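A sketch of a tagged TLB: entries are keyed by (VM tag, virtual page), so a VM switch only changes the current tag instead of flushing the buffer. The class name, the capacity and the FIFO eviction policy are hypothetical simplifications.

```python
class TaggedTLB:
    """Hypothetical TLB whose entries carry a VM tag; switching VMs needs no flush."""

    def __init__(self, capacity=64):
        self.capacity = capacity
        self.entries = {}        # (vm_tag, virtual page) -> physical frame
        self.current_vm = None

    def switch_to(self, vm_tag):
        self.current_vm = vm_tag           # other VMs' entries stay cached

    def lookup(self, vpage):
        """Only entries tagged with the current VM can hit; None means a miss."""
        return self.entries.get((self.current_vm, vpage))

    def fill(self, vpage, frame):
        key = (self.current_vm, vpage)
        if key not in self.entries and len(self.entries) >= self.capacity:
            oldest = next(iter(self.entries))  # dicts keep insertion order: FIFO
            del self.entries[oldest]
        self.entries[key] = frame
```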

Besides processors, peripheral devices can also access memory directly using DMA (direct memory access). In classic systems without virtualization, such accesses use physical addresses. Inside a virtual machine these addresses obviously have to be translated, which creates overhead and reduces the efficiency of the monitor.

The solution is to use an IOMMU (Input Output Memory Management Unit) device, which allows you to control how the host devices access physical memory.
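An IOMMU applies the same idea to devices: each device is attached to a protection domain whose table maps device-visible DMA addresses to host physical memory, and unmapped accesses are blocked. The sketch below is a hypothetical model (names, 4 KiB pages, one flat table per domain), not the programming interface of any real IOMMU.

```python
class IOMMU:
    """Hypothetical IOMMU model: per-domain tables map DMA pages to host frames."""
    PAGE = 4096

    def __init__(self):
        self.domain_of = {}    # device id -> domain name (e.g. the owning VM)
        self.tables = {}       # domain name -> {dma page: host frame}

    def attach(self, device, domain):
        self.domain_of[device] = domain
        self.tables.setdefault(domain, {})

    def map(self, domain, dma_page, host_frame):
        self.tables.setdefault(domain, {})[dma_page] = host_frame

    def dma(self, device, dma_addr):
        """Translate a DMA address issued by a device; block unmapped accesses."""
        domain = self.domain_of.get(device)
        if domain is None:
            raise PermissionError(f"device {device} has no IOMMU domain")
        page, offset = divmod(dma_addr, self.PAGE)
        frame = self.tables[domain].get(page)
        if frame is None:
            raise PermissionError(f"DMA fault: {device} -> {dma_addr:#x}")
        return frame * self.PAGE + offset
```

With such a table, a guest can program a device it owns with its own guest-physical addresses, and the device still cannot reach memory belonging to the monitor or to other VMs.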

Let us extend the virtualization condition by replacing the word “instruction” with “operation”: the set of service operations is a subset of the privileged ones. Here an operation means any architecturally defined activity that reads or changes the state of the system, including instructions, interrupts, device accesses, address translation, etc.

The condition for increasing virtualization efficiency then reads as follows: the system architecture must have as few service operations as possible. This can be achieved in two ways: by turning service instructions into harmless ones, or by reducing the number of privileged ones. To this end, most architectures added a new monitor mode r (root mode) to the state register M. It relates to mode s as s relates to u; in other words, the instructions of the updated privileged class now cause a control flow trap that switches the processor from s to r.

Let us consider the main modern architectures used in servers, workstations and embedded systems from the point of view of how they implement the theoretical principles described above. See also the series of articles [5,6,7].

IBM was one of the first to bring an architecture with hardware virtualization support to the server microprocessor market, with the POWER4 series in 2001. It was intended for creating isolated logical partitions (LPARs), each associated with one or more processors and I/O resources. For this, a new hypervisor mode was added to the supervisor and user modes already present in the processor. To protect memory, each LPAR running with address translation disabled has access only to a small private memory region; to use the rest of memory, the guest OS must enable translation, which is controlled by the VM monitor.

In 2004 the next generation of this architecture, POWER5, brought major improvements to the virtualization mechanisms. A new timer device available only to the VM monitor was added, which allowed it to control guest systems more precisely and to allocate processor resources to them with a granularity of one hundredth of a processor. The VM monitor also became able to control where interrupts are delivered: to an LPAR or to the hypervisor. The most important innovation was that the hypervisor became mandatory: it loaded and managed system resources even when the system had a single LPAR. The supported operating systems (AIX, Linux, IBM i) were modified accordingly to work in a kind of paravirtualization scheme. To manage I/O devices, one LPAR (or two, for load balancing) runs a special operating system, the Virtual I/O Server (VIOS), which provides these resources to the remaining partitions.

Sun, the developer of UltraSPARC and Solaris, had offered OS-level virtualization (so-called containers, or zones) since 2004. In 2005, hardware virtualization appeared in the multithreaded Niagara 1 processors. The virtualization granularity was one hardware thread (the chip had eight cores with four threads each).

For interaction between the OS and the hypervisor, a public and stable interface for privileged software was introduced [3]; it hides most of the architectural registers from the OS.

For address translation, the previously described two-level scheme with virtual, real and physical addresses is used. In this case, the TLB does not store the intermediate translation address.

Unlike POWER and SPARC, the IA-32 architecture (and its AMD64 extension) has never been controlled by a single company that could add a virtualization layer between the hardware and the OS at the cost of breaking backward compatibility with existing operating systems. In addition, it clearly violates the condition for efficient virtualization: about 17 service instructions are not privileged, which ruled out VM monitors relying on hardware support alone. Until 2006, VM monitors for IA-32 were therefore purely software ones; in that year Intel introduced VT-x technology, and AMD introduced the similar but incompatible AMD-V.

New processor modes, VMX root and VMX non-root, were introduced, and the existing privilege rings 0-3 can be used in both of them. VMX operation is switched on and off with the new vmxon and vmxoff instructions; entry into a guest is performed with vmlaunch or vmresume, and control returns to the monitor through a VM exit.

To store the state of the guest systems and the monitor, a new VMCS structure (the virtual machine control structure) is used, copies of which are located in physical memory and are available for the VM monitor.

An interesting design decision is that it is configurable which guest events cause a trap and a transition to the hypervisor and which are left to the guest OS. For example, for each guest one can choose whether external interrupts are handled by the guest or by the monitor, writes to which bits of the control registers CR0 and CR4 are intercepted, which exceptions are handled by the guest and which by the monitor, and so on. This allows a trade-off between the degree of control over each VM and the efficiency of virtualization: for trusted guests, monitor control can be relaxed, while third-party operating systems running alongside them remain under strict supervision. To optimize TLB operation, the entry-tagging technique described above is used (AMD calls the tag an ASID, address space identifier; Intel calls it a VPID, virtual processor identifier). To speed up address translation, a two-level translation scheme is used, called EPT (Extended Page Tables) by Intel and NPT (Nested Page Tables) by AMD.
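As an example of such per-bit configurability, VT-x lets the monitor decide who owns each CR0 bit through a CR0 guest/host mask and a CR0 read shadow. The sketch below models, as I understand the rules in the Intel SDM, only the MOV to CR0 case: the guest reads host-owned bits from the shadow, and a write causes a VM exit only if it tries to give a host-owned bit a value different from the shadow. The function names are hypothetical; other instructions that touch CR0 (CLTS, LMSW) have their own rules that are not modelled here.

```python
CR0_PE = 1 << 0    # protection enable
CR0_TS = 1 << 3    # task switched
CR0_PG = 1 << 31   # paging

def mov_to_cr0_exits(guest_host_mask, read_shadow, new_value):
    """VM exit if any host-owned bit (mask bit = 1) would differ from the shadow."""
    return ((new_value ^ read_shadow) & guest_host_mask) != 0

def guest_reads_cr0(real_cr0, guest_host_mask, read_shadow):
    """Host-owned bits come from the read shadow, guest-owned bits from real CR0."""
    return (real_cr0 & ~guest_host_mask) | (read_shadow & guest_host_mask)

# Hypothetical configuration: the monitor owns only PG; the guest believes paging is on.
mask = CR0_PG
shadow = CR0_PE | CR0_PG
```

With this configuration the guest can freely toggle TS without any exit, while an attempt to turn paging off is intercepted by the monitor.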

Intel added hardware virtualization to Itanium (VT-i technology [4]) at the same time as to IA-32, in 2006. The special mode is enabled by a new bit in the processor status register, PSR.vm. When this bit is set, service but non-privileged instructions start causing a trap and an exit to the monitor. The vmsw instruction is used to return to guest OS mode. When virtualization mode is on, some of the service instructions raise a new kind of synchronous exception, which has its own dedicated handler.

Since the operating system accesses the hardware through a special interface, PAL (Processor Abstraction Layer), PAL was extended to support operations such as creating and destroying environments for guest systems, saving and loading their state, configuring virtual resources, etc. It can be noted that adding hardware virtualization to IA-64 required less effort than adding it to IA-32.

The ARM architecture was originally designed for embedded and mobile systems, the effective virtualization of which, compared to server systems, has not been a key factor in commercial and technological success for a long time. However, in recent years there has been a tendency to use VMs on mobile devices to ensure the protection of critical parts of the system code, for example, cryptographic keys used in the processing of commercial transactions. In addition, ARM processors began to move into the server systems market, and this required expanding the architecture and adding features such as support for addressing large amounts of memory and virtualization.

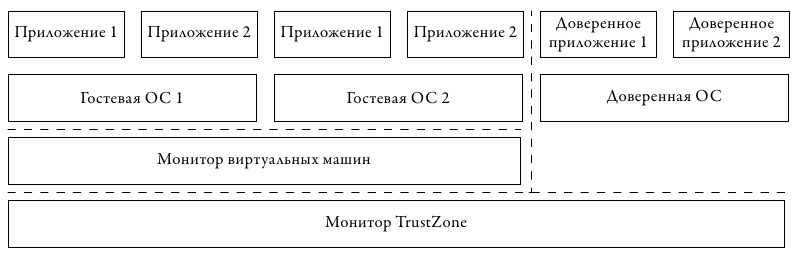

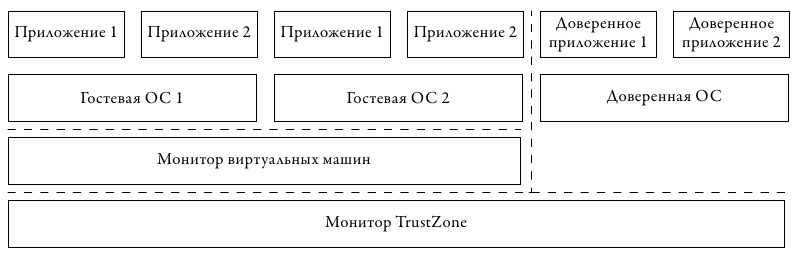

Both trends were reflected in the approach ARM chose for developing its architecture. Fig. 4 shows a scheme with two nested levels of virtualization, introduced in 2010 in the architecture update implemented in the Cortex-A15 [1].

Fig. 4: Virtualization in ARM. The TrustZone monitor provides isolation and cryptographic authentication of the trusted "world". The normal "world" uses its own VM monitor

To isolate critical components, the first layer of virtualization, called TrustZone, is used. With it, all running software is divided into two "worlds": trusted and normal. The trusted world runs the parts of the system whose operation must not be subject to external influence from ordinary code. The normal world runs user applications and the operating system, which could in theory be compromised. The normal world has no access to the trusted one, whereas the TrustZone monitor provides access in the opposite direction, allowing trusted code to monitor the state of the hardware.

The second layer of virtualization runs under the control of an untrusted monitor and makes it possible to multiplex several user OSes. It adds the new HVC and ERET instructions for entering and leaving hypervisor mode. For trap events, the previously reserved exception vector at offset 0x14 is used, and new registers were added, among them a saved program status register (SPSR) for Hyp mode, the Hyp Configuration Register (HCR), which controls the virtualization of resources, and the Hyp Syndrome Register (HSR), which stores the reason for the exit from the guest to the monitor. The syndrome lets the monitor quickly analyze the situation and emulate the necessary functionality without excessive reading of the guest state.
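The value of a syndrome register is that the monitor can dispatch on a few bit fields instead of reading and decoding the guest's instruction. The sketch assumes the ARMv7 HSR layout: exception class EC in bits [31:26], instruction length IL in bit 25 and the instruction-specific syndrome ISS in bits [24:0]. The EC table is deliberately partial, and its values should be checked against the ARM Architecture Reference Manual before relying on them.

```python
# Partial, illustrative table of ARMv7 HSR exception classes.
EXCEPTION_CLASSES = {
    0x01: "WFI/WFE trapped",
    0x12: "HVC executed",
    0x24: "data abort from a guest (non-Hyp) mode",
}

def decode_hsr(hsr):
    """Split the syndrome into EC [31:26], IL [25] and ISS [24:0]."""
    ec = (hsr >> 26) & 0x3F
    il = (hsr >> 25) & 0x1
    iss = hsr & 0x1FFFFFF
    return ec, il, iss, EXCEPTION_CLASSES.get(ec, "other")
```

A monitor would typically use the EC value to select a handler and leave the interpretation of ISS to that handler.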

Just as in the architectures discussed earlier, a two-level scheme is used to speed up address translation, in which the physical addresses of the guest OS are intermediate.

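MIPS. Hardware virtualization support appeared in MIPS in 2012, when the MIPS R5 revision of the architecture introduced the MIPS VZ module [2], defined for both the 32-bit and 64-bit variants of the architecture.

In MIPS, the privileged state of the processor is held in the registers of system coprocessor 0 (COP0), and MIPS VZ gives the guest its own COP0 context, separate from the one used by the monitor.

The resulting arrangement of modes is described as an "onion" model: the guest context, with its own kernel and user modes, is nested inside the root context used by the monitor.

The TLB and MMU were extended to support two-level address translation, and a new hypercall instruction allows the guest to call the monitor explicitly.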

Another practical issue is that privileged instructions in guest code tend to occur in clusters (Table 1).

Table 1. Intel IA-32 (according to [11])

To avoid repeating the scenario "exit from the VM to the monitor, emulation of the instruction, re-entry into the VM, only to exit to the monitor again on the next instruction", a look-ahead over the instruction stream is used [11]. After handling a trap and before returning control to the VM, the monitor scans a few instructions ahead, looking for privileged instructions. If any are found, emulation switches to binary translation mode for a while. This avoids the negative impact of the clustering of privileged instructions.
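The look-ahead decision itself is simple. The sketch below assumes a decoded instruction stream represented as a list of mnemonics, a hypothetical window of 8 instructions, and example privileged IA-32 instructions (`mov_cr3`, `wrmsr`); how the monitor then performs binary translation is outside its scope.

```python
def choose_mode(stream, pc, privileged, window=8):
    """After handling a trap at `pc`, look ahead `window` instructions.
    If another privileged instruction is coming, switch to binary translation
    for a while instead of returning to direct execution and trapping again."""
    upcoming = stream[pc + 1 : pc + 1 + window]
    if any(op in privileged for op in upcoming):
        return "binary_translation"
    return "direct_execution"

stream = ["mov_cr3", "add", "wrmsr", "add", "add"]
privileged = {"mov_cr3", "wrmsr"}
```

The window size is the tuning knob: a larger window catches more of a cluster but makes each scan more expensive and switches to translation more often.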

A situation in which a virtual machine monitor runs under the control of another monitor that executes directly on the hardware is called recursive virtualization. In theory it is not limited to two levels: each VM monitor can run the next one, forming a hierarchy of hypervisors.

The ability to run a hypervisor under the control of a VM monitor (or, equivalently for this purpose, a simulator) has practical value. Any VM monitor is a rather complex program, to which the usual debugging methods for applications and even for an OS do not apply, since it starts very early in the system's operation, when it is hard to attach a debugger. Running it under a simulator makes it possible to inspect and monitor its work from the very first instruction.

Popek and Goldberg, in the work mentioned above, also addressed efficient support for recursive virtualization. Unfortunately, their conclusions do not take into account many of the features of modern systems discussed above.

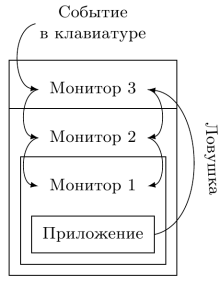

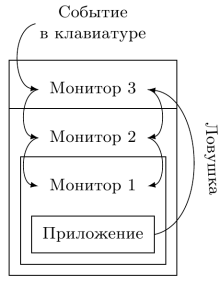

Consider one of the difficulties specific to running VM monitors nested: handling traps and interrupts. In the simplest case, the outermost monitor is always responsible for handling all kinds of exceptional events; its task is either to handle the event itself, thereby “hiding” it from the other levels, or to pass it to the next level.

For both interrupts and traps this is often suboptimal: an event has to pass through several levels of the hierarchy, each of which delays its processing. Fig. 5 shows the handling of two kinds of events: interrupts originating in external hardware and control flow traps originating inside the application.

Fig. 5: Recursive virtualization. All events must be processed by the outermost monitor, which passes them down the hierarchy, introducing a delay.

For optimal handling, a level of the VM monitor hierarchy should be chosen for each type of trap and interrupt, and when an event occurs, control should be transferred directly to that level, bypassing the levels above it and avoiding the associated overhead.
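The difference between the two delivery schemes can be sketched as the path an event takes through the hierarchy. The assignment of events to handling levels below is hypothetical. Without direct delivery, the event enters at the outermost monitor and is passed down level by level; with direct delivery, it reaches the chosen level in one step.

```python
# Hypothetical assignment of event types to the level that should handle them.
# Level 0 is the outermost monitor running directly on the hardware.
HANDLER_LEVEL = {
    "timer_interrupt": 0,    # used by the outermost monitor for scheduling
    "nic_interrupt": 1,      # device assigned to the nested monitor's guests
    "app_control_trap": 2,   # trap raised by an application, for its guest OS
}

def route(event, levels, direct):
    """Return the levels that touch the event before it is handled.
    Without direct delivery, every event enters at level 0 and is passed down."""
    target = HANDLER_LEVEL[event]
    if direct:
        return [levels[target]]
    return levels[: target + 1]

levels = ["L0 monitor", "L1 monitor", "L2 guest OS"]
```

If each level adds a roughly fixed handling cost, the delay without direct delivery grows with the depth of the handling level, which is the effect shown in Fig. 5.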

Processor vendors pay much less attention to hardware support for the second and deeper levels of nested virtualization than to the first level. Nevertheless, such work exists. In the 1980s, IBM/370 systems [13] gained the ability to run copies of system software inside an operating system already running on the hardware; the SIE (start interpreted execution) instruction [14] was introduced for this purpose. There are also proposals for an interface between nested levels of virtualization [12] that would efficiently support nesting of multiple VM monitors, and an implementation of recursive virtualization for IA-32 [15]. However, modern processor architectures still provide hardware support for at most one level of virtualization.

First - a few definitions, maybe not quite typical for articles on this topic, but used in this note.

- Host ( eng. Host) - a hardware system running a virtual machine monitor or simulator.

- Guest ( eng. Guest) - a virtual or simulated system running under the control of a monitor or simulator. Also sometimes referred to as a target system ( English target system).

I will try to define the rest of the terminology as it appears in the text.

Introduction

Virtualization was of interest even before the invention of the microprocessor, in times of predominance of large systems — meenframes, whose resources were very expensive, and their downtime was economically unacceptable. Virtualization allowed to increase the degree of utilization of such systems, while freeing users and application programmers from the need to rewrite their software, since from their point of view the virtual machine was identical to the physical one. The pioneer in this area was IBM with System / 360, System / 370 mainframes, created in the 1960-1970s.

Classic virtualization criterion

Not surprisingly, the criteria for creating an effective virtual machine monitor were obtained at about the same time. They are formulated in the classic work of 1974 by Gerald Popek and Robert Goldberg “Formal requirements for virtualizable third generation architectures” [8]. Consider its main prerequisites and formulate its main conclusion.

')

System model

In the following, a simplified representation of a “standard” computer is used from an article consisting of one central processor and linear homogeneous RAM. Peripheral devices, as well as means of interaction with them, are omitted. The processor supports two modes of operation: the supervisor mode used by the operating system and the user mode in which applications are executed. Memory supports the segmentation mode used for organizing virtual memory.

Requirements for a virtual machine monitor (VM):

- Isolation - each virtual machine should have access only to the resources that were assigned to it. It should not be able to affect the operation of both the monitor and other VMs.

- Equivalence - any program executed under the control of a VM should demonstrate behavior that is completely identical to its execution on a real system, with the exception of effects caused by two circumstances: the difference in the amount of available resources (for example, a VM may have a smaller memory size) and operation durations (from - for the possibility of sharing execution time with other VMs).

- Efficiency - in the original work, the condition is formulated as follows: “a statistically prevailing subset of virtual processor instructions must be executed directly by the host processor, without the intervention of a VM monitor”. In other words, a significant part of the instructions must be simulated in direct execution mode. The requirement of efficiency is the most ambiguous of the three listed requirements, and we will return to it. In the case of simulators based on the interpretation of instructions, the efficiency condition is not met, since Each guest instruction requires processing by the simulator.

Instruction classes

The processor state contains at least three registers: M, which determines whether it is in supervisor mode s or user u, P is the current instruction pointer, and R is the state that defines the boundaries of the memory segment used (in the simplest case, R specifies a segment, that is, R = (l, b), where l is the address of the beginning of the range, b is its length).

Memory E consists of a fixed number of cells that can be accessed by their number t, for example, E [t]. The size of the memory and cells for this consideration is irrelevant.

When executed, each instruction i in the general case can change both (M, P, R) and memory E, i.e. it is a conversion function: (M 1 , P 1 , R 1 , E 1 ) -> (M 2 , P 2 , R 2 , E 2 ).

It is considered that for some input conditions the instruction causes a trap exception ( eng. Trap) if, as a result of its execution, the contents of the memory do not change, except for a single cell E [0], into which the previous state of the processor is placed (M 1 , P 1 , R 1 ). The new state of the processor (M 2 , P 2 , R 2 ) is copied from E [1]. In other words, the trap allows you to save the full state of the program at the time before the execution of its last instruction begins and transfer control to the processor, in the case of conventional systems, usually working in supervisor mode and designed to provide additional actions on the system state, and then return control to the program by restoring the state E [0].

Traps can additionally have two attributes.

- Caused by an attempt to change the state of the processor (control flow trap).

- Caused by an access to memory outside the range defined by R (memory protection trap).

These attributes are not mutually exclusive: a single trap can be both a control flow trap and a memory protection trap.
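The state model and the trap semantics can be illustrated with a minimal Python sketch. It is a toy under our own assumptions: `State` and `Machine` are hypothetical names, memory is a plain list, and cells 0 and 1 hold whole state tuples rather than numbers.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class State:
    """Processor state (M, P, R) of the simplified model."""
    M: str    # mode: 's' (supervisor) or 'u' (user)
    P: int    # instruction pointer
    R: tuple  # memory segment (l, b): start address and length


@dataclass
class Machine:
    state: State
    # Memory cells E[0..15]; E[0] and E[1] are reserved for the trap mechanism.
    E: list = field(default_factory=lambda: [None] * 16)

    def trap(self) -> None:
        """Save the current state in E[0] and load the handler state from E[1].

        No other memory cell changes, as the definition of a trap requires.
        """
        self.E[0] = self.state
        self.state = self.E[1]

    def resume(self) -> None:
        """Return control to the interrupted program by restoring E[0]."""
        self.state = self.E[0]


# Usage: a user-mode program traps into a supervisor-mode handler and resumes.
m = Machine(State("u", 5, (8, 4)))
m.E[1] = State("s", 0, (0, 16))
m.trap()
assert m.state.M == "s" and m.E[0] == State("u", 5, (8, 4))
m.resume()
assert m.state == State("u", 5, (8, 4))
```

Reserving E[0] and E[1] for the mechanism is also why, in this model, a guest always sees at least two fewer memory cells than the host.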

The machine instructions of the processor in question can be classified as follows:

- Privileged. Instructions that always cause a control flow trap when executed with M = u. In other words, such an instruction can be executed only in supervisor mode; in user mode it always causes an exception.

- Service (in English, sensitive; the author knows of no established Russian term for this concept, and the literature sometimes uses a literal translation of "sensitive"). The class consists of two subclasses: (1) instructions that complete without a memory protection trap and change M and/or R, i.e., switch the processor from supervisor to user mode or back, or change the position and size of the available memory segment (control-sensitive instructions in Popek and Goldberg's terms); (2) instructions whose behavior, when they do not cause a memory protection trap, depends on the mode M or on the value of R (behavior-sensitive instructions).

- Harmless (in English, innocuous). All non-service instructions. This is the widest class: these instructions modify nothing except the instruction pointer P and the memory E, and their behavior does not depend on the processor mode or on their location in memory.
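As a minimal sketch, the classification can be written as predicates over an instruction descriptor. `InstrInfo` and the toy instructions `add`, `set_r` and `get_mode` are hypothetical. Note that "privileged" and "service" are independent properties, so an instruction can be both, while "harmless" is simply the complement of "service".

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstrInfo:
    """Properties of one instruction, as the classification needs them."""
    name: str
    traps_in_user_mode: bool  # always a control flow trap when M = u
    changes_M_or_R: bool      # subclass 1: can change the mode or the segment
    depends_on_M_or_R: bool   # subclass 2: result depends on M or R


def is_privileged(i: InstrInfo) -> bool:
    return i.traps_in_user_mode


def is_service(i: InstrInfo) -> bool:
    return i.changes_M_or_R or i.depends_on_M_or_R


def is_harmless(i: InstrInfo) -> bool:
    # Harmless instructions are exactly the non-service ones.
    return not is_service(i)


# Hypothetical toy instruction set.
add = InstrInfo("add", False, False, False)           # touches only P and E
set_r = InstrInfo("set_r", True, True, False)         # loads R, traps in user mode
get_mode = InstrInfo("get_mode", False, False, True)  # reads M without trapping
```

`get_mode` is the problematic case: it is a service instruction but not a privileged one.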

Sufficient condition for building a VM monitor

A sufficient condition for building a VM monitor that satisfies all three requirements stated above is the following: the set of service instructions is a subset of the set of privileged instructions (Fig. 1). Omitting the formal proof of this statement (Theorem 1 in the article), we note the following.

- Isolation is ensured by running the monitor in supervisor mode and the VM only in user mode. The VM therefore cannot change system resources arbitrarily. Any attempt to do so through a service instruction causes a control flow trap and a transfer to the monitor. The VM also cannot access memory outside its segment, because the configuration of R does not allow this and the processor raises a memory protection trap.

- Equivalence follows from the fact that harmless instructions execute identically whether or not a monitor is present, while service instructions always cause an exception and are interpreted by the monitor. Note that even this simple scheme already requires relaxing the condition: even ignoring the memory needed for the hypervisor's code and data, the VM has at least two fewer memory cells than the host, since E[0] and E[1] are reserved for the trap mechanism.

- Efficiency is guaranteed by the fact that all harmless instructions inside the VM are executed directly, without slowdown. This assumes that harmless instructions make up the “statistically prevailing subset of virtual processor instructions”.

Fig. 1: The virtualizability condition is satisfied: the set of service instructions is a subset of the privileged ones.
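Theorem 1 reduces to a set inclusion, which a few lines of Python can check. The toy instruction set below is hypothetical. The IA-32 subset lists only three real instructions: LGDT is privileged, while POPF and SGDT are service instructions that run in user mode without trapping.

```python
def service_subset_of_privileged(service: set, privileged: set) -> bool:
    """Sufficient condition (Theorem 1): every service instruction is privileged."""
    return service <= privileged


def violations(service: set, privileged: set) -> set:
    """Service instructions that would execute unnoticed in user mode."""
    return service - privileged


# A toy instruction set that satisfies the condition.
toy_service = {"set_m", "set_r"}
toy_privileged = {"set_m", "set_r", "halt"}

# A small IA-32 subset that violates it.
x86_service = {"LGDT", "POPF", "SGDT"}
x86_privileged = {"LGDT"}
```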

Limitations of the applicability of the virtualization criterion

Despite the simplicity of the model and of the conclusions drawn from it, the work of Popek and Goldberg remains relevant. Violating its conditions does not make it fundamentally impossible to create or use virtual machines on a given architecture, and practical implementations confirm this. It does, however, make it impossible to keep the optimal balance between the three properties: isolation, equivalence and efficiency. Most often the price is paid in VM speed. The monitor must carefully search for service but unprivileged instructions and control their execution in software, because the hardware does not intercept them (Fig. 2). Even a single such instruction executed directly by the VM threatens the stability of the monitor, so the monitor has to scan the entire stream of guest instructions.
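The scanning described above can be sketched as follows. This assumes an already decoded list of mnemonics. Real monitors also have to cope with variable-length encodings, indirect jumps and self-modifying code, which is what makes the task expensive. The `TRAP` placeholder is a hypothetical name for whatever instruction the monitor uses to force an exit.

```python
def find_unsafe(stream: list, unsafe: set) -> list:
    """Indices of instructions that must not be executed directly by the VM."""
    return [pc for pc, op in enumerate(stream) if op in unsafe]


def patch(stream: list, unsafe: set, trap_op: str = "TRAP"):
    """Replace every unsafe instruction with an explicit trap.

    Returns the patched stream and a map pc -> original instruction, which the
    monitor emulates when the corresponding trap fires.
    """
    patched = list(stream)
    originals = {}
    for pc in find_unsafe(stream, unsafe):
        originals[pc] = patched[pc]
        patched[pc] = trap_op
    return patched, originals
```

For example, `patch(["mov", "popf", "add", "sgdt"], {"popf", "sgdt"})` yields the patched stream `["mov", "TRAP", "add", "TRAP"]` together with `{1: "popf", 3: "sgdt"}`.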

Fig. 2: The virtualizability condition is violated. Service but unprivileged instructions require complex logic in the monitor.

The work [8] contains both explicitly stated simplifications of real systems (no peripherals or input/output) and implicit assumptions about the structure of guest programs (consisting almost entirely of harmless instructions) and of host systems (a single processor).

We now consider these limitations in more detail, and also suggest how the criterion can be extended to additional resources that require virtualization, and thus increase its practical value for architects of new computing systems.

Guest Program Structure

For programs to run efficiently inside a VM, most of their instructions must be harmless. As a rule, this holds for application programs. Operating systems, in turn, are designed to manage system resources, which implies the use of privileged and service instructions, and the monitor has to intercept and interpret them, with a corresponding drop in performance. Ideally, therefore, the instruction set should contain as few privileged instructions as possible, so that traps occur as rarely as possible.

Peripheral devices

Since peripheral devices are a service resource of the computer, isolation and equivalence require that all attempts to access them be controlled by the VM monitor, just as the kernel of a multitasking operating system controls them. Today devices are most often accessed by mapping them into the system's physical address space (memory-mapped I/O). Inside a VM, reads and writes to these regions must therefore either cause a memory protection trap or be harmless, i.e., not cause a trap and not change the system state in an uncontrolled way.
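Trap-and-emulate handling of memory-mapped I/O can be sketched as follows. Ordinary RAM accesses complete without a trap. Accesses to a device region raise a memory protection trap, and the monitor forwards it to a software device model. All class names, the address layout and `LogDevice` are hypothetical.

```python
class MMIOTrap(Exception):
    """Memory protection trap raised on an access to a device region."""

    def __init__(self, addr, value=None):
        super().__init__(hex(addr))
        self.addr = addr
        self.value = value


class GuestMemory:
    def __init__(self, size, mmio_ranges):
        self.ram = bytearray(size)
        self.mmio_ranges = mmio_ranges  # list of (start, end), end exclusive

    def _is_mmio(self, addr):
        return any(lo <= addr < hi for lo, hi in self.mmio_ranges)

    def read(self, addr):
        if self._is_mmio(addr):
            raise MMIOTrap(addr)
        return self.ram[addr]

    def write(self, addr, value):
        if self._is_mmio(addr):
            raise MMIOTrap(addr, value)
        self.ram[addr] = value


class LogDevice:
    """Hypothetical emulated device that records the bytes written to it."""

    def __init__(self):
        self.written = []

    def read(self, offset):
        return 0

    def write(self, offset, value):
        self.written.append((offset, value))


class Monitor:
    def __init__(self, memory, devices):
        self.memory = memory
        self.devices = devices  # {(start, end): device model}

    def _find(self, addr):
        for (lo, hi), dev in self.devices.items():
            if lo <= addr < hi:
                return dev, addr - lo
        raise LookupError(f"no device at {addr:#x}")

    def guest_read(self, addr):
        try:
            return self.memory.read(addr)  # ordinary RAM: completes without a trap
        except MMIOTrap as trap:
            dev, offset = self._find(trap.addr)
            return dev.read(offset)        # emulate the device register

    def guest_write(self, addr, value):
        try:
            self.memory.write(addr, value)
        except MMIOTrap as trap:
            dev, offset = self._find(trap.addr)
            dev.write(offset, trap.value)
```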

How intensively applications interact with peripherals depends on what they do, and this determines how much they slow down under virtualization. In addition, a VM monitor can expose the different classes of host peripherals to several VMs in different ways.

- Dedicated - a device available exclusively to a single guest system. Examples: keyboard, monitor.

- Shared - common to several guests. Such a device either has several parts, each allocated to one of the guests (partitioned mode), for example a hard disk with several partitions, or is connected to each guest in turn (shared mode), for example a network card.

- Fully virtual - a device that is absent from the real system (or present in limited quantity) and is emulated in software inside the monitor. Example: interrupt timers. Each guest has its own timer, even though the host has only one, which the monitor uses for its own needs.

Interrupts

Interrupts are the mechanism by which external devices notify the processor of events that require the operating system's attention. With virtual machines, the monitor must be able to control interrupt delivery, since some or all interrupts have to be handled inside the monitor. For example, the monitor can use timer interrupts to track and limit the processor time consumed by guests and to switch between several concurrently running VMs. In addition, with several guests it is not known in advance which of them an interrupt should be delivered to, so the monitor has to decide.

The simplest solution that ensures isolation is to route all interrupts to the VM monitor. The monitor then ensures equivalence: when needed, it delivers an interrupt to a guest by simulating the corresponding change of the guest's state. The monitor can also generate virtual interrupts driven only by its own logic rather than by external events. The efficiency of this solution, however, is not optimal. As a rule, the system must respond to an interrupt within a bounded time; otherwise the response becomes useless for the external device or has disastrous consequences for the system as a whole. A virtualization layer increases the delay between the moment an event occurs and the moment the guest handles it, compared with a system without virtualization. Hardware control over interrupt delivery is more efficient: it allows some interrupts to be delivered harmlessly, without the monitor having to intervene every time.
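The "route everything to the monitor" scheme can be sketched as a small router. Interrupts the monitor keeps for itself (such as its own timer) are handled in place. Device interrupts are queued for injection into the guest that owns the device. The monitor can also queue purely virtual interrupts. Vector numbers and guest names are hypothetical.

```python
from collections import defaultdict


class InterruptRouter:
    """All physical interrupts are routed to the monitor first."""

    def __init__(self, owner_of, monitor_vectors):
        self.owner_of = owner_of                   # vector -> guest that owns the device
        self.monitor_vectors = set(monitor_vectors)
        self.pending = defaultdict(list)           # guest -> interrupts to inject
        self.handled_by_monitor = []

    def on_interrupt(self, vector):
        if vector in self.monitor_vectors:
            # E.g. the host timer, used to account guest CPU time and switch VMs.
            self.handled_by_monitor.append(vector)
        elif vector in self.owner_of:
            # Delivered on the guest's next entry by simulating a change of its state.
            self.pending[self.owner_of[vector]].append(vector)
        # Vectors that nobody owns are ignored in this sketch.

    def inject_virtual(self, guest, vector):
        """A virtual interrupt produced by the monitor's own logic."""
        self.pending[guest].append(vector)
```

Every device interrupt here takes a detour through `on_interrupt` before the guest sees it. That detour is the extra latency that hardware-controlled delivery is meant to remove.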

Multiprocessor systems

Almost all modern computers contain more than one core or processor. In addition, several VMs can be executed within one monitor, each of which can have several virtual processors at its disposal. Consider how these circumstances affect the conditions of virtualization.

Synchronization and virtualization

Taking several host and guest processors into account leaves the condition of efficient virtualizability in force. However, attention must be paid to the efficiency of multithreaded applications inside the VM. Unlike single-threaded programs, they synchronize parts of the program running on different virtual processors. At a so-called barrier, for example, all participating threads wait until every one of them has reached a predetermined point of the algorithm. In a virtualized system, one or more guest threads may be inactive because the monitor has preempted them, and the remaining threads will waste time waiting.

An example of such inefficient guest behavior is synchronization with spin locks inside a VM [9]. Spin locks are inefficient, and therefore unused, on single-processor systems. On multiprocessors, however, they are a lightweight alternative to heavier locks used to enter the critical sections of parallel algorithms. They are used mostly inside the operating system rather than in user programs, because only the OS can determine which system resources can be protected effectively with spin locks. In a virtual machine, however, resources are actually scheduled not by the guest OS but by the VM monitor, which generally knows nothing about these locks. The monitor may preempt the thread that could release a resource while another thread spins on the lock, wasting CPU time. The best solution is to deschedule the blocked thread until the resource it needs is released.

The existing solutions for this problem are described below.

- The VM monitor can try to detect the guest OS's use of spin locks. This requires analyzing the code before execution and setting breakpoints at the lock addresses. The method is neither universal nor reliable.

- The guest system can use special instructions to signal the monitor that it is about to spin on a lock. This method is more reliable but requires modifying the guest OS code.
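The second approach can be sketched with Python threads. The lock spins for a bounded number of attempts and then calls `yield_hint`. This hypothetical callback stands in for the special instruction or hypercall that tells the monitor this virtual CPU is only waiting and another one should run. The spin limit is an arbitrary assumption.

```python
import threading
import time


class HintingSpinLock:
    """Spin lock that falls back to a 'deschedule me' hint after spinning."""

    def __init__(self, yield_hint=lambda: time.sleep(0), spin_limit=1000):
        self._lock = threading.Lock()
        self._yield_hint = yield_hint
        self._spin_limit = spin_limit

    def acquire(self):
        while True:
            for _ in range(self._spin_limit):
                # Non-blocking attempt: this is the "spinning" part.
                if self._lock.acquire(blocking=False):
                    return
            # Spun too long: the holder may have been preempted by the monitor,
            # so ask to be descheduled instead of burning more CPU time.
            self._yield_hint()

    def release(self):
        self._lock.release()
```

The bounded spin keeps the fast path cheap when the holder is running. The hint covers the case that only the monitor can detect, when the holder is not running at all.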

Interrupts in multiprocessor systems

Finally, interrupt delivery and handling schemes are also more complex in multiprocessor systems. This has to be taken into account when building a VM monitor for such systems, and its efficiency may be lower than that of a single-processor counterpart.

Address Translation

The machine model used earlier to state the criterion of efficient virtualization relied on a simple linear address translation scheme based on segmentation, popular in the 1970s. This scheme is computationally simple and does not change when a VM monitor is introduced, so the influence of address translation on efficiency was not analyzed.

Modern paged virtual memory uses a non-linear mapping of the virtual addresses of user applications to the physical addresses used by the hardware. The system resource involved is a register that points to the translation table (in practice, usually several tables forming a hierarchy with a common root). Under virtualization this pointer must itself be virtualized, since each guest system has its own register contents and its own tables. Implementing this in software inside the monitor is expensive, so memory-intensive applications can lose much of their efficiency under virtualization.

To solve this problem, two-level hardware address translation is used (Fig. 3). Guest OSs see only the first level. The guest physical address produced by the first level is then translated by the second level into the real (host physical) address.

Fig. 3: Two-level translation of addresses. The first level is controlled by the guest OS, the second - by the virtual machine monitor
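A minimal sketch of two-level translation, assuming 4 KiB pages and a single flat table per level (Python dicts from page number to frame number) instead of real multi-level tables. In real hardware the guest's own tables also reside in guest physical memory, so each guest table access also passes through the second level. That is what makes a nested walk more expensive than a native one.

```python
PAGE_SIZE = 4096


def translate(table: dict, addr: int) -> int:
    """One translation level: page number -> frame number, offset unchanged."""
    page, offset = divmod(addr, PAGE_SIZE)
    if page not in table:
        raise LookupError(f"page fault at {addr:#x}")
    return table[page] * PAGE_SIZE + offset


def two_level_translate(guest_table: dict, host_table: dict, gva: int) -> int:
    # First level, controlled by the guest OS: guest virtual -> guest physical.
    gpa = translate(guest_table, gva)
    # Second level, controlled by the monitor: guest physical -> host physical.
    return translate(host_table, gpa)
```

With `guest_table = {0: 5}` and `host_table = {5: 100}`, guest virtual address `0x123` maps to guest physical `5 * 4096 + 0x123` and then to host physical `100 * 4096 + 0x123`.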

TLB

Another resource involved in address translation is the translation lookaside buffer (TLB), an associative buffer consisting of a number of entries. Each guest system has its own TLB contents, so the TLB must be flushed whenever the active VM changes or control passes to the monitor. This hurts performance, because refilling the buffer takes time. Until it is refilled, translations have to be obtained through slower accesses to the translation tables in memory.

The solution is to share the TLB among all systems [10]. Each buffer entry is associated with an identifier, a tag unique to each VM. During a lookup the hardware considers only the entries whose tag matches the current VM.
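A tagged TLB can be modeled as a small associative map keyed by (VM tag, virtual page). This is a toy model under our own assumptions: fully associative, with FIFO eviction by first insertion, and `TaggedTLB` is a hypothetical name. Switching VMs then requires no flush, because entries of other VMs simply never match.

```python
class TaggedTLB:
    """Toy fully associative TLB whose entries carry a VM tag."""

    def __init__(self, capacity=64):
        self.capacity = capacity
        # (tag, virtual page) -> physical frame; dict order = insertion order.
        self.entries = {}

    def lookup(self, tag, vpage):
        """Return the frame, or None on a miss (the tables must then be walked)."""
        return self.entries.get((tag, vpage))

    def insert(self, tag, vpage, frame):
        key = (tag, vpage)
        if key not in self.entries and len(self.entries) >= self.capacity:
            # Evict the entry that was inserted first.
            self.entries.pop(next(iter(self.entries)))
        self.entries[key] = frame

    def flush_tag(self, tag):
        """Drop only one VM's entries, e.g. when that VM is destroyed."""
        for key in [k for k in self.entries if k[0] == tag]:
            del self.entries[key]
```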

Address translation for peripheral devices

Besides processors, peripheral devices can also access memory directly using DMA (direct memory access). In classical systems without virtualization, such accesses use physical addresses. Inside a virtual machine these addresses must be translated as well, which adds overhead and reduces the monitor's efficiency.

The solution is to use an IOMMU (input/output memory management unit), which translates the addresses used by host devices and controls how they access physical memory.
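The idea behind an IOMMU can be sketched as a per-device translation table. The monitor sets up the mapping once. After that, a guest can program its assigned device with guest physical addresses, and every DMA access is translated, or blocked, without the monitor intervening. Real IOMMUs use multi-level page tables and per-device contexts. The dict here and the device ids such as `"nic0"` are simplifying assumptions.

```python
class IOMMU:
    """Toy per-device DMA remapping: each device has its own translation table."""

    PAGE_SIZE = 4096

    def __init__(self):
        self.tables = {}  # device id -> {device-visible page: host frame}

    def map(self, device, io_page, host_frame):
        """Called by the monitor when it assigns guest memory to a device."""
        self.tables.setdefault(device, {})[io_page] = host_frame

    def dma_translate(self, device, io_addr):
        """Translate a DMA address issued by `device`, or block the access."""
        page, offset = divmod(io_addr, self.PAGE_SIZE)
        table = self.tables.get(device, {})
        if page not in table:
            raise PermissionError(f"DMA fault: {device} at {io_addr:#x}")
        return table[page] * self.PAGE_SIZE + offset
```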

Extension of the principle

Let us extend the virtualization condition by replacing the word “instruction” with “operation”: the set of service operations must be a subset of the privileged ones. Here an operation means any architecturally defined action that reads or changes the state of the system, including instructions, interrupts, device accesses, address translation and so on.

The condition for more efficient virtualization then reads: the system architecture should have as few service operations as possible. This can be achieved in two ways: by turning service instructions into harmless ones or by reducing the number of privileged ones. To this end, most architectures added a new monitor mode r (root mode) to the mode register M. It relates to mode s as s relates to u; in other words, the updated class of privileged instructions now causes a control flow trap that switches the processor from s to r.

Support status in modern architectures

Let us now consider the main modern architectures used in servers, workstations and embedded systems from the point of view of how they put the theoretical principles described above into practice. See also the series of articles [5,6,7].

IBM POWER

IBM was one of the first to bring hardware virtualization support to the server microprocessor market, with the POWER4 series in 2001. It was designed for creating isolated logical partitions (LPARs), each associated with one or more processors and I/O resources. For this purpose, a new hypervisor mode was added to the processor alongside the existing supervisor and user modes. To protect memory, an LPAR running with address translation disabled has access only to a small private memory region. To use the rest of the memory, the guest OS must enable address translation, which is controlled by the VM monitor.

In 2004 the next generation of the architecture, POWER5, brought significant improvements to the virtualization mechanisms. A new timer available only to the VM monitor was added. It allowed the monitor to control guest systems more precisely and to allocate processor resources to them in units of one hundredth of a processor. The VM monitor also gained control over where interrupts are delivered: to an LPAR or to the hypervisor. The most important change was that the hypervisor became mandatory: it boots the system and manages its resources even when there is only a single LPAR. The supported operating systems (AIX, Linux, IBM i) were modified accordingly, implementing a form of paravirtualization. To manage I/O devices, one LPAR (or two, for load balancing) runs a special operating system, the Virtual I/O Server (VIOS), which provides these resources to the remaining partitions.

SPARC

Sun, the developer of UltraSPARC and Solaris, had offered OS-level virtualization (so-called containers, or zones) since 2004. In 2005 hardware virtualization appeared in the multithreaded Niagara 1 processors, with a granularity of one hardware thread (the chip had eight cores with four threads each).

For interaction between the OS and the hypervisor, a public and stable interface for privileged software was published [3]; it hides most of the architectural registers from the OS.

Address translation uses the two-level scheme described earlier, with virtual, real and physical addresses. The TLB does not store the intermediate (real) address.

Intel IA-32 and AMD AMD64

Unlike POWER and SPARC, the IA-32 architecture (and its AMD64 extension) was never controlled by a single company that could have inserted a (para)virtualization layer between the hardware and the OS at the cost of backward compatibility with existing operating systems. Moreover, IA-32 clearly violates the conditions for efficient virtualization: about 17 service instructions are not privileged, which ruled out VM monitors that rely on hardware traps alone. Nevertheless, software monitors existed even before 2006, when Intel introduced the VT-x technology and AMD introduced the similar but incompatible AMD-V.

New processor modes were introduced, VMX root and VMX non-root, and the existing privilege levels 0 to 3 can be used in both of them. The new VMXON and VMXOFF instructions turn VMX operation on and off. The monitor enters a guest (VM entry) with VMLAUNCH or VMRESUME, and control returns to the monitor on a VM exit.

The state of guest systems and of the monitor is stored in a new structure, the VMCS (virtual machine control structure). Its instances reside in physical memory and are accessible to the VM monitor.

A notable feature is that it is configurable which guest events cause a trap and a transition to the hypervisor and which are left to the guest OS. For example, for each guest one can choose whether external interrupts are handled by the guest or by the monitor, which bits of the control registers CR0 and CR4 are intercepted on writes, which exceptions are handled by the guest and which by the monitor, and so on. This allows a trade-off between the degree of control over each VM and the efficiency of virtualization. For trusted guests the monitor's control can be relaxed, while third-party operating systems running alongside them remain under strict supervision. TLB operation is optimized with the entry-tagging technique described above, using address space identifiers (ASID in AMD terminology; Intel's equivalent is called VPID). Address translation is accelerated by a two-level translation scheme, called EPT (Extended Page Tables) by Intel and NPT (Nested Page Tables) by AMD.
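The per-guest configurability can be sketched as a small policy object. The fields mirror real VMCS controls (external-interrupt exiting, the exception bitmap, the CR0 guest/host mask and CR0 read shadow). The class itself is a simplified model under our own naming, not an API. The CR0 rule follows our reading of the Intel SDM: a MOV to CR0 exits if any bit set in the mask would differ from the read shadow.

```python
from dataclasses import dataclass


@dataclass
class ExitControls:
    """Simplified model of a few per-guest VT-x exit controls."""
    external_interrupt_exiting: bool = True
    exception_bitmap: int = 0     # bit n set -> exception vector n causes a VM exit
    cr0_guest_host_mask: int = 0  # CR0 bits owned by the monitor
    cr0_read_shadow: int = 0      # values of those bits as the guest believes them

    def exits_on_external_interrupt(self) -> bool:
        return self.external_interrupt_exiting

    def exits_on_exception(self, vector: int) -> bool:
        return bool((self.exception_bitmap >> vector) & 1)

    def exits_on_cr0_write(self, new_value: int) -> bool:
        # Exit if the guest tries to change any monitor-owned bit.
        return bool((new_value ^ self.cr0_read_shadow) & self.cr0_guest_host_mask)


# A trusted guest handles its own interrupts and exceptions.
trusted = ExitControls(external_interrupt_exiting=False)
# A strictly supervised guest: page faults (vector 14) and changes to
# CR0.PG (bit 31) or CR0.PE (bit 0) go to the monitor.
strict = ExitControls(exception_bitmap=1 << 14,
                      cr0_guest_host_mask=(1 << 31) | 1,
                      cr0_read_shadow=(1 << 31) | 1)
```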

Intel IA-64 (Itanium)

Intel added hardware virtualization to Itanium (VT-i technology [4]) in 2006, at the same time as to IA-32. The special mode is enabled by a new bit in the processor status register, PSR.vm. When this bit is set, service but unprivileged instructions cause a trap and an exit to the monitor. The vmsw instruction is used to return to guest OS mode. When virtualization mode is on, some of the service instructions raise a new kind of synchronous exception, which has its own handler.

Since the operating system accesses the hardware through a special interface, PAL (Processor Abstraction Layer), this interface was extended to support operations such as creating and destroying environments for guest systems, saving and loading their state, configuring virtual resources and so on. Adding hardware virtualization to IA-64 required less effort than adding it to IA-32.

ARM

The ARM architecture was originally designed for embedded and mobile systems, for which, unlike servers, efficient virtualization was for a long time not a key factor of commercial and technological success. In recent years, however, VMs have increasingly been used on mobile devices to protect critical parts of the system code, for example cryptographic keys used to process commercial transactions. In addition, ARM processors began to enter the server market, which required extending the architecture with features such as addressing large amounts of memory and virtualization.

Both trends are reflected in the approach ARM chose for the evolution of its architecture. Fig. 4 shows the scheme with two nested levels of virtualization that was introduced in 2010 in the architecture update implemented in the Cortex-A15 [1].

Fig. 4: ARM virtualization. The TrustZone monitor provides isolation and cryptographic authentication of the trusted "world". The normal "world" uses its own VM monitor.

The first layer of virtualization, called TrustZone, is used to isolate critical components. It divides all running software into two "worlds", trusted and normal. The trusted world runs the parts of the system whose operation must not be influenced by ordinary code. The normal world runs user applications and the operating system, which could in principle be compromised. The normal world has no access to the trusted one. The TrustZone monitor provides access in the opposite direction, which allows trusted code to monitor the state of the hardware.

The second layer of virtualization runs under the control of an untrusted monitor and makes it possible to multiplex several user operating systems. It adds new instructions, HVC and ERET, for entering and leaving hypervisor mode. Traps into the hypervisor use the previously reserved exception vector at offset 0x14. New registers were added as well. Among them are the hypervisor's own SPSR (saved program status register), HCR (the hypervisor configuration register, which controls the virtualization of resources) and HSR, the "syndrome" register. HSR records why the guest exited to the monitor, which lets the monitor analyze the situation quickly and emulate the required functionality without excessive reading of guest state.
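To show how the syndrome register saves work, here is a sketch of decoding it. In the ARMv7 virtualization extensions, HSR holds an exception class (EC) in bits [31:26], an instruction-length bit (IL) in bit 25 and a class-specific syndrome (ISS) in bits [24:0]. Only three EC values are named below (0x01 WFI/WFE, 0x12 HVC, 0x24 data abort from a lower mode). The table is intentionally partial, and the encodings should be checked against the ARM Architecture Reference Manual before relying on them.

```python
# Partial map of HSR exception classes (see the caveat above).
EC_NAMES = {
    0x01: "WFI/WFE",
    0x12: "HVC",
    0x24: "data abort from guest",
}


def decode_hsr(hsr: int):
    """Split an HSR value into (exception class name, IL bit, ISS)."""
    ec = (hsr >> 26) & 0x3F
    il = (hsr >> 25) & 0x1
    iss = hsr & 0x1FFFFFF
    return EC_NAMES.get(ec, f"EC {ec:#x}"), il, iss
```

A monitor can dispatch on the first field directly instead of fetching and decoding the guest instruction that caused the exit.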

As in the architectures discussed earlier, address translation is accelerated by a two-level scheme in which the guest OS's physical addresses are intermediate.

MIPS

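Hardware virtualization support came to MIPS later than to the architectures discussed above. It was added in 2012, in the MIPS R5 revision of the architecture, in the form of the MIPS VZ module [2], for both the 32-bit and the 64-bit variants of the architecture.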

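In MIPS, system resources are managed through the registers of coprocessor 0 (COP0). The VZ module adds a separate, partial copy of the COP0 registers for the guest, so that the guest OS can work with most of its privileged state without the monitor's intervention.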

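The resulting privilege structure is described as an "onion" model: the guest context, with its own kernel and user modes, is nested inside the root context, which retains full control over the machine.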

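The TLB and the MMU are extended to support two-level translation of guest addresses, and a new hypercall instruction lets the guest call the monitor explicitly.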

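Hardware support does not eliminate the overhead of virtualization. Its sources include the following.

- The latency of transitions between the guest and the monitor. It has decreased with new processor generations but remains considerable (see Table 1).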

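| Microarchitecture | Release | VM exit + VM entry latency, cycles |
|---|---|---|
| Prescott | Q3 2005 | 3963 |
| Merom | Q2 2006 | 1579 |
| Penryn | Q1 2008 | 1266 |
| Nehalem | Q3 2009 | 1009 |
| Westmere | Q1 2010 | 761 |
| Sandy Bridge | Q1 2011 | 784 |

Table 1. Latency of a guest-to-monitor-to-guest transition in Intel IA-32 processors (according to [11])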
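The latencies in Table 1 can be turned into a rough overhead estimate: exits per second times cycles per exit, divided by the clock rate. The 3 GHz clock and the rate of 100,000 exits per second below are hypothetical. The estimate also counts only the hardware transition itself, not the work the monitor does while handling each exit.

```python
LATENCY_CYCLES = {"Prescott": 3963, "Merom": 1579, "Penryn": 1266,
                  "Nehalem": 1009, "Westmere": 761, "Sandy Bridge": 784}


def exit_overhead(exits_per_second: float, cycles_per_exit: int,
                  clock_hz: float) -> float:
    """Fraction of CPU time spent only on exit/entry transitions."""
    return exits_per_second * cycles_per_exit / clock_hz


sandy = exit_overhead(100_000, LATENCY_CYCLES["Sandy Bridge"], 3e9)
prescott = exit_overhead(100_000, LATENCY_CYCLES["Prescott"], 3e9)
```

Under these assumptions `sandy` is about 0.026 (2.6% of CPU time) and `prescott` about 0.13. The roughly fivefold improvement tracks the ratio of the two latencies.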

(See also the series of articles on the Intel Developer Zone (IDZ): part 1, part 2, part 3.)

Another source of overhead is that exits tend to come in clusters: an instruction that causes an exit is often followed closely by others. In IA-32 code this happens, for example, when legacy devices are programmed through I/O ports. In the fragment below, instructions that cause an exit are marked with an asterisk.

```
* in   %al,%dx
* out  $0x80,%al
  mov  %al,%cl
  mov  %dl,$0xc0
* out  %al,%dx
* out  $0x80,%al
* out  %al,%dx
* out  $0x80,%al
```

Handling such a cluster naively repeats the same cycle: the VM exits to the monitor, the monitor emulates the instruction and re-enters the VM, and the very next instruction exits again. To avoid this, the monitor can look ahead in the instruction stream [11]. After handling a trap and before returning control to the VM, it examines the next few instructions for privileged ones. If it finds any, it temporarily switches to binary translation. This avoids the penalty caused by the clustering of privileged instructions.
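The look-ahead decision can be sketched as a simple window scan over decoded mnemonics. The window size and the threshold are our assumptions, not values taken from [11], and the returned labels are hypothetical names for the two execution modes.

```python
def choose_mode(stream: list, pc: int, exits: set,
                window: int = 8, threshold: int = 2) -> str:
    """Decide how to continue after emulating the instruction at `pc`.

    stream: decoded mnemonics; exits: mnemonics that would cause a VM exit.
    Returns "translate" if the next `window` instructions contain at least
    `threshold` exiting ones, otherwise "direct" (re-enter the VM).
    """
    ahead = stream[pc + 1 : pc + 1 + window]
    n_exits = sum(op in exits for op in ahead)
    return "translate" if n_exits >= threshold else "direct"
```

For the port I/O fragment above, `choose_mode(["in", "out", "mov", "mov", "out", "out", "out", "out"], 0, {"in", "out"})` finds five exiting instructions in the window and chooses binary translation.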

Recursive virtualization

Running a virtual machine monitor under the control of another monitor that executes directly on the hardware is called recursive virtualization. In theory it need not be limited to two levels: each VM monitor can run the next one, forming a hierarchy of hypervisors.

The ability to run a hypervisor under the control of a VM monitor (or, equivalently for this purpose, a simulator) has practical value. A VM monitor is a rather complex program to which the usual debugging methods for applications, and even for operating systems, do not apply: it starts very early during system boot, when attaching a debugger is difficult. Running it under a simulator makes it possible to inspect and monitor its work from the very first instruction.

In the work mentioned earlier, Popek and Goldberg also addressed efficient support for recursive virtualization. Unfortunately, their conclusions do not take into account many of the features of modern systems discussed above.

Consider one of the difficulties specific to nested VM monitors: the handling of traps and interrupts. In the simplest case, the outermost monitor is always responsible for handling all kinds of exceptional events. It either handles an event itself, thereby "hiding" it from the other levels, or passes it on to the next level.

For both interrupts and traps this is often suboptimal: an event must pass through several levels of the hierarchy, each of which delays its handling. Fig. 5 shows the handling of two kinds of events: interrupts originating in external hardware and control flow traps originating inside an application.

Fig. 5: Recursive virtualization. All events are first handled by the outermost monitor, which passes them down the hierarchy, and this introduces delay.

For optimal handling, each type of trap and interrupt should be assigned a level in the hierarchy of VM monitors. When the event occurs, control should be transferred directly to that level, bypassing the levels above it and avoiding their overhead.
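The difference between the two delivery schemes can be expressed as a toy cost model. Level 0 is the outermost monitor, and every level that touches an event adds the same fixed cost. The per-level cost and the event-to-level assignment in `ROUTE` are hypothetical.

```python
def deliver_via_chain(target_level: int, cost_per_level: int):
    """Event enters at level 0 and is forwarded one level at a time."""
    path = list(range(target_level + 1))
    return path, cost_per_level * len(path)


def deliver_direct(target_level: int, cost_per_level: int):
    """Event is delivered straight to the level chosen for it."""
    return [target_level], cost_per_level


# Hypothetical assignment of event types to hierarchy levels.
ROUTE = {"timer_interrupt": 0, "nested_guest_trap": 2}
```

In this model the chained scheme's cost grows linearly with the nesting depth of the target level, while direct delivery costs the same at any depth.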

Support for recursive virtualization in existing solutions

Processor manufacturers pay much less attention to hardware support for the second and deeper levels of nested virtualization than to the first level. Nevertheless, such work exists. In the 1980s, IBM System/370 systems [13] gained the ability to run copies of system software inside an operating system already running on the hardware, and the SIE (Start Interpretive Execution) instruction [14] was introduced for this purpose. There are also proposals for an interface between nested virtualization levels [12] that would support nesting of multiple VM monitors efficiently, as well as an implementation of recursive virtualization for IA-32 [15]. However, modern processor architectures are still limited to hardware support for at most one level of virtualization.

Literature

- Goodacre John. Hardware accelerated Virtualization in the ARM Cortex Processors. 2011. xen.org/files/xensummit_oul11/nov2/2_XSAsia11_JGoodacre_HW_accelerated_virtualization_in_the_ARM_Cortex_processors.pdf

- Hardware-assisted Virtualization with the MIPS Virtualization Module. 2012. www.mips.com/application/login/login.dot?product_name=/auth/MD00994-2B-VZMIPS-WHT-01.00.pdf

- Hypervisor / Sun4v Reference Materials. 2012. kenai.com/projects/hypervisor/pages/ReferenceMaterials

- Intel Virtualization Technology / F. Leung, G. Neiger, D. Rodgers et al. // Intel Technology Journal. 2006. Vol. 10. www.intel.com/technology/itj/2006/v10i3

- McGhan Harlan. The gHost in the Machine: Part 1 // Microprocessor Report. 2007. mpronline.com

- McGhan Harlan. The gHost in the Machine: Part 2 // Microprocessor Report. 2007. mpronline.com

- McGhan Harlan. The gHost in the Machine: Part 3 // Microprocessor Report. 2007. mpronline.com

- Popek Gerald J., Goldberg Robert P. Formal requirements for virtualizable third generation architectures // Communications of the ACM. Vol. 17. 1974.

- Southern Gabriel. Analysis of SMP VM CPU Scheduling. 2008. cs.gmu.edu/~hfoxwell/cs671projects/southern_v12n.pdf

- Yang Rongzhen. Virtual Translation Lookaside Buffer. 2008. www.patentlens.net/patentlens/patent/US_2008_0282055_A1/en .

- Agesen Ole, Mattson Jim, Rugina Radu, Sheldon Jeffrey. Software techniques for avoiding hardware virtualization exits // Proceedings of the 2012 USENIX Annual Technical Conference (USENIX ATC'12). Berkeley, CA, USA: USENIX Association, 2012. P. 35. www.usenix.org/system/files/conference/atc12/atc12-final158.pdf

- Poon Wing-Chi, Mok AK: Improving the Latency of VM Exit Forwarding in Recursive Virtualization for the x86 Architecture // System Science (HICSS), 2012 45th Hawaii International Conference on. 2012. p. 5604-5612.

- Osisek D. L., Jackson K. M., Gum P. H. ESA/390 interpretive-execution architecture, foundation for VM/ESA // IBM Systems Journal. 1991. Vol. 30, No. 1. P. 34–51. ISSN: 0018-8670. DOI: 10.1147/sj.301.0034.

- Glew Andy. SIE. semipublic.comp-arch.net/wiki/SIE

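- Ben-Yehuda Muli [et al.]. The Turtles Project: Design and Implementation of Nested Virtualization // Proceedings of the 9th USENIX Symposium on Operating Systems Design and Implementation (OSDI'10). 2010. P. 423–436. www.usenix.org/event/osdi10/tech/full_papers/Ben-Yehuda.pdf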

Source: https://habr.com/ru/post/196444/
