Hostess of the present: Intel integrated graphics (GPU) 2013, or "The mielophone is with me!"

In February, while many were still wondering whether it was time to throw out the New Year tree, I decided it was time to show Habr readers what awaited them in the summer, and wrote a "guest from the future" post about the integrated graphics (GPU) of the then-upcoming Intel processors codenamed Haswell.

Now that Intel's integrated graphics is no longer a guest from the future but a hostess of the present, and one of these GPUs sits in the ultrabook on which I am writing these lines, I can, no longer bound by secrecy, tell you more about Intel's new graphics.

The Intel graphics processor is integrated into all mobile, desktop and embedded models, as well as half of the server models, of the 4th generation Intel Core CPUs (known before release as "Haswell"). For those interested, there is a complete list of CPU models with all their features.

This is the seventh generation of Intel graphics solutions, counting from the iconic Intel740 (hence the family's unofficial name, Gen7), and the third version of the Intel HD Graphics microarchitecture. It is the same microarchitecture used in the previous CPU family, Ivy Bridge, but with some additions and improvements, so in fact these GPUs are Gen7.5.


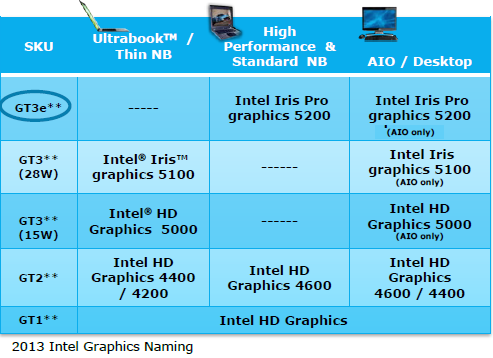

Starting an introduction with a name is unoriginal, but very practical. The table below, taken from IDF 2013 materials, maps the pre-release designations of the 2013 Intel GPU variants to their official names and shows where each one is used.

The entry-level version, GT1 with 10 execution units (EU), is simply called Intel HD Graphics.

GT2 with 20 EU is, depending on the frequency, Intel HD Graphics 4200/4400/4600.

Haswell GT3 with 40 EU, capable of running at a maximum frequency of 1.1GHz, is called Intel HD Graphics 5000. By the way, this is the model used in the MacBook Air.

And finally, the two top versions with 40 EU and a frequency of 1.3GHz received a proper name, Iris, which can be read either as simply a "beautiful female name" or as the iris of the eye: Intel Iris graphics 5100 and Intel Iris Pro graphics 5200 (essentially an Intel Iris graphics 5100 with embedded DRAM).
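For reference, the naming scheme can be written down as a small lookup table. This is only a sketch of the mapping as listed in this post: `GpuVariant`, `VARIANTS` and `official_names` are names I made up for illustration, and the frequency is filled in only where the post actually gives one.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class GpuVariant:
    code_name: str                 # pre-release designation
    execution_units: int           # number of EUs
    max_freq_ghz: Optional[float]  # None where the post gives no figure
    edram_mb: int                  # embedded DRAM in MB, 0 if absent
    names: Tuple[str, ...]         # official names


# Values come from the text of this post, not from a datasheet.
VARIANTS = (
    GpuVariant("GT1", 10, None, 0, ("Intel HD Graphics",)),
    GpuVariant("GT2", 20, None, 0, ("Intel HD Graphics 4200",
                                    "Intel HD Graphics 4400",
                                    "Intel HD Graphics 4600")),
    GpuVariant("GT3", 40, 1.1, 0, ("Intel HD Graphics 5000",)),
    GpuVariant("GT3", 40, 1.3, 0, ("Intel Iris graphics 5100",)),
    # 128 MB of eDRAM, per the GT3e description in this post.
    GpuVariant("GT3e", 40, 1.3, 128, ("Intel Iris Pro graphics 5200",)),
)


def official_names(code_name: str) -> List[str]:
    """Return every official name listed for a pre-release designation."""
    return [n for v in VARIANTS if v.code_name == code_name for n in v.names]


if __name__ == "__main__":
    for v in VARIANTS:
        print(v.code_name, v.execution_units, "EU:", ", ".join(v.names))
```

Calling `official_names("GT3")` returns both the HD Graphics 5000 and the Iris graphics 5100, which makes the point that one pre-release designation can hide more than one product name.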

The APIs supported by the new generation of Intel HD Graphics, as well as the internal design of the GT1-GT3 variants, are described in the post mentioned above, so I will not repeat them and will turn straight to the GT3e, Intel Iris Pro graphics 5200. This model differs from the others by having 128MB of embedded memory, eDRAM. What is it for?

Although Intel's CPU and GPU sit on the same chip, all data exchange between them goes through shared system memory, which is of course a bottleneck and a source of performance problems. The solution is to add "fast memory" to the system, fully shared between the CPU cores and the GPU's graphics and media subsystems. And not just memory, but cache memory, which becomes a fourth-level cache (L4) in this system. It is not hard to guess how positively this cache affects graphics operations that reuse the same data repeatedly, such as texturing and anti-aliasing.
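To get a feel for why data reuse matters so much here, below is a toy model: an LRU cache fed with a workload that samples the same blocks over and over. It says nothing about how the real eDRAM is managed; the `hit_rate` function, the block counts and the cache capacities are all assumptions chosen purely for illustration.

```python
from collections import OrderedDict


def hit_rate(accesses, capacity):
    """Fraction of accesses served by an LRU cache holding `capacity` blocks."""
    cache = OrderedDict()
    hits = 0
    for block in accesses:
        if block in cache:
            hits += 1
            cache.move_to_end(block)       # mark as most recently used
        else:
            cache[block] = True
            if len(cache) > capacity:
                cache.popitem(last=False)  # evict the least recently used block
    return hits / len(accesses)


# Hypothetical workload: the same 64 texture blocks sampled 10 times in a row.
texture_reads = list(range(64)) * 10

print(hit_rate(texture_reads, capacity=16))   # working set does not fit
print(hit_rate(texture_reads, capacity=128))  # working set fits
```

With the small cache every access misses, because each block is evicted before it comes around again. Once the whole working set fits, only the first pass misses and the remaining nine passes are served from the cache.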

I also want to draw your attention to the thermal design power (TDP) values for GT3 shown in the table above: 15W or 28W, depending on the frequency. That is surprisingly low in itself, but note that these figures cover not just the GPU but the CPU and GPU together, which makes them even more impressive. By the way, the TDP of Iris Pro graphics 5200 (again together with the CPU) is 47W.

Note also that Iris graphics, and indeed the entire top GT3 series, is used exclusively in laptops and all-in-one computers (All in One, AIO), while desktop CPUs of every level get only the more modest Intel HD Graphics.

From hardware to software. The Intel integrated graphics drivers have changed significantly: they have slimmed down a great deal. Below is an explanatory before-and-after picture (the new architecture is on the right, on a white background; the old one is on the left, on gray):

As you can see, the Hardware Abstraction Layer has disappeared from the driver entirely, and its functions have been partially taken over by user-mode drivers, which are now created and optimized specifically for each platform. Overall, though, the drivers now do less optimization work, which means that performance depends much more on the applications themselves!

There is now no shortage of performance tests of Intel's 2013 graphics. The most complete collection of game and benchmark results comparing Intel graphics with cards from other manufacturers is on notebookcheck.net, for both Iris Pro and Iris. The general conclusion is this: on systems with Iris Pro graphics 5200, many modern (2013) games run fine at medium or high quality settings at a resolution of 1366x768. For older or less graphics-intensive games, such as Diablo III or FIFA 13, even higher resolutions or quality settings are possible. On systems with Intel Iris graphics 5100, modern games run smoothly at low or medium quality settings at resolutions of 1024x768 or 1366x768.

A comparison of Intel HD Graphics models with one another (Intel HD Graphics 3000/4000/4400/5000) can be found in an AnandTech article.

Here I suggest taking a close look at the beautiful and useful Unigine Heaven 4.0 benchmark. It not only loads the GPU fully with a realistic game workload (Heaven is built on the real Unigine game engine), but also lets you choose between three APIs: OpenGL, DirectX 9 and DirectX 11.

The tests were run on a system with an Intel Core i7-4850HQ (Intel Iris Pro graphics 5200) under Windows 8.0, with the latest driver version at the time of writing, 9.18.10.3257 (15.31.17.64.3257).

Heaven 4.0 was run in full-screen mode at a resolution of 1920x1200 with medium quality, leaving all other settings at their defaults.

The results are summarized in the table below:

| API | Score | FPS | FPS min | FPS max |
|---|---|---|---|---|
| DirectX 11 | 435 | 17.3 | 6.3 | 31.8 |
| DirectX 9 | 445 | 17.6 | 6.4 | 38.4 |
| OpenGL | 415 | 16.5 | 6.1 | 30.0 |

In other words, DirectX 9 earns the highest score: it is ahead of DirectX 11 by 2% and of OpenGL by 7%. The gap seems insignificant, but comparing the maximum frame rate reached in the test makes the difference impressive: DX9 is ahead of DX11 by more than 20%, and of OpenGL by 28%!

Why does this happen? Different drivers (as mentioned above) mean different overhead. What to do is obvious: for maximum performance, other things being equal, choose DX9.

Now let's see what exactly caused such a sharp drop in performance in some scenes, down to just six-odd frames per second! With the maximum frame rate, everything is clear by eye: it is reached, for example, in dark scenes or close-ups, where many of the details simply are not drawn. But why, among normal daytime scenes with shadows, highlights and anti-aliasing that run at around 25 FPS, like the one in the screenshot below, does a fourfold slowdown suddenly occur?
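The percentages here express DirectX 9's lead relative to the slower API. A quick check against the numbers in the table, where `lead_percent` is simply a helper name of my own:

```python
# Results from the table above: (score, average FPS, min FPS, max FPS).
RESULTS = {
    "DirectX 11": (435, 17.3, 6.3, 31.8),
    "DirectX 9": (445, 17.6, 6.4, 38.4),
    "OpenGL": (415, 16.5, 6.1, 30.0),
}


def lead_percent(best, other):
    """How far `best` is ahead of `other`, as a percentage of `other`."""
    return (best / other - 1.0) * 100.0


base_score, _, _, base_fps_max = RESULTS["DirectX 9"]
for api in ("DirectX 11", "OpenGL"):
    score, _, _, fps_max = RESULTS[api]
    print(f"DirectX 9 vs {api}: "
          f"score +{lead_percent(base_score, score):.1f}%, "
          f"max FPS +{lead_percent(base_fps_max, fps_max):.1f}%")
```

This gives a lead of about 2.3% and 7.2% on score, and about 20.8% and 28.0% on maximum FPS, which matches the rounded figures in the text.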

(Normal frame, FPS ~25)

(Very slow frame, FPS <7)

Could the grass in the foreground be to blame for everything? A comprehensive answer to this question comes from the excellent free tool Intel Graphics Performance Analyzers (GPA).

After downloading and installing the Windows version (there is also an Android version, by the way), launch GPA Monitor and configure a trigger so that when Heaven's frame rate drops below 7 FPS, the corresponding frame is captured automatically:

Then we launch the benchmark from GPA Monitor and go do something else, for example read Habrahabr.

Five minutes later (or five hours, depending on the person) we come back and open another GPA component, Frame Analyzer, where we pick the desired frame from the list of captured frames and load it. Besides the frame we need, a number of frames get captured while the benchmark is loading, at the menu stage, when FPS is low simply by Heaven's design. The result looks something like this:
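The trigger boils down to a simple rule: capture a frame whenever its instantaneous frame rate falls below the threshold. The sketch below is not GPA's API, just the same logic applied to a list of frame times; `frames_to_capture` and the sample data are hypothetical.

```python
def frames_to_capture(frame_times_ms, fps_threshold=7.0):
    """Return indices of frames whose instantaneous FPS is below the threshold."""
    captured = []
    for index, frame_time_ms in enumerate(frame_times_ms):
        fps = 1000.0 / frame_time_ms  # one frame per `frame_time_ms` milliseconds
        if fps < fps_threshold:
            captured.append(index)
    return captured


# Hypothetical frame times: 40 ms is 25 FPS, 160 ms is 6.25 FPS.
sample = [40.0, 41.5, 160.0, 39.0, 150.0]
print(frames_to_capture(sample))  # [2, 4]
```

A rule like this also fires on any slow frame that has nothing to do with the scene itself, so expect some unwanted captures alongside the interesting ones.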

The upper part of the window's work area shows all the operations needed to draw this frame as ergs, conditional "units of GPU work", which can be draw calls for various objects, buffer clears and so on. The height of each bar indicates the time spent on that erg.

The column on the left shows the render targets (RT) used to build this frame and, of course, their contents. As many as 37 RTs are involved in this frame! But they are used competently from a performance standpoint, that is, sequentially, one after another. This is evident from the information in parentheses under each RT: the numbers of the ergs that use it.

Now let's select the tallest bar, standing alone on the right in an otherwise fairly flat erg profile, with a duration exceeding 10 thousand microseconds, and see what exactly it corresponds to.

Once this erg is selected, GPA automatically highlights the corresponding RT, shows what exactly is happening (in this case, erg #1708 draws 506 indexed primitives) and, most importantly, lets you see which pixels it produces. Here they are, in the screenshot below:

As you can see, this is not grass at all but smoke (clouds?) in the distance. Moreover, a significant part of it is not even visible in the frame, since it is hidden by scene objects. So the haze can either be dropped altogether or greatly simplified.

GPA lets you run an experiment: "disable" the selected ergs and see how the frame looks without them. In this case the frame was so similar to the original that I will not show the picture. But the time spent on the frame under study dropped by 15%, which in our case raised FPS by almost a whole frame. So if you want to play a game whose settings let you control particle effects (which is exactly what this smoke is), you know what to do. It is also fairly clear what game developers should do to get maximum performance on Intel GPUs. But only roughly. If you need more specific and detailed advice, you can find it (so far only in English) in the updated Intel GPU Developer Guide for 4th Generation Intel® Core™ Processor Graphics.
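As a footnote to the 15% figure: a cut in frame time translates into frame rate through a reciprocal, so the gain depends on where you start. Here is a small sketch, assuming a starting point of 6.3 FPS (the DirectX 11 minimum from the table, used here only as an assumption, since the exact FPS of the captured frame is not given); `fps_after_time_cut` is a hypothetical helper.

```python
def fps_after_time_cut(fps_before, time_saved_fraction):
    """New frame rate once the frame time shrinks by the given fraction."""
    frame_time_before = 1.0 / fps_before
    frame_time_after = frame_time_before * (1.0 - time_saved_fraction)
    return 1.0 / frame_time_after


# Assumed starting point, in line with the "FPS < 7" capture trigger.
before = 6.3
after = fps_after_time_cut(before, 0.15)
print(f"{before:.2f} FPS -> {after:.2f} FPS (+{after - before:.2f})")
```

At this starting point the gain comes out at roughly one frame per second. The same 15% saving on a 25 FPS scene would be worth more than four frames per second.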

Source: https://habr.com/ru/post/196394/
