Go: multithreading

I got interested in a post about multithreading in Go: habrahabr.ru/post/195574.

I carefully re-read the author's post and the community comments and decided that the topic had not been fully covered.

To avoid misunderstanding: throughout this post I am talking about threads of execution, never about data streams. Thank you.

Like the author of the first post, I am very fond of Go and use it at every opportunity.

I am also impressed by its style of multithreading, and I parallelize whenever I get the chance.

For example, working with a database from multiple threads has so far let me significantly increase access speed and reach acceptable figures even with a single process.

But the example with channels made me think.

On my machine the difference was not 10-20 times, but it was there. And it is very significant: with 2 threads, the task ran approximately 2 times slower.

I rewrote the program into a more convenient form and added load loops and a couple of command-line flags. Here is what came of it.



Text of the program

```go
/* channel_test01.go
 * Tests how goroutines interact with channels
 * Call: channel_test01 --help
 * Please do not use the name "channel_test", because that name is always used by go-pkg-system
 */
package main

import (
	"flag"
	"fmt"
	"os"
	"runtime"
	"time"
)

// flag support for program
var MAXPROCS int
var LOAD_CYCLES int // internal burden cycle

var Usage = func() {
	fmt.Fprintf(os.Stderr, "Usage: %s [-maxprocs=NN] [-cycles=NN]\n", os.Args[0])
	flag.PrintDefaults()
}

func init() {
	flag.Usage = Usage // without this the custom usage text is never printed
	flag.IntVar(&MAXPROCS, "maxprocs", 1, "maxprocs for testing. From 1 to 256")
	flag.IntVar(&LOAD_CYCLES, "cycles", 1000, "burden internal cycle for testing. From 1 to 1000000 and more")
}

func main() {
	flag.Parse() // get MAXPROCS and LOAD_CYCLES from flags

	// runtime.GOMAXPROCS(n) returns the previous setting,
	// so a second call with 0 reads the value that is now in effect
	runtime.GOMAXPROCS(MAXPROCS)
	max_procs := runtime.GOMAXPROCS(0)
	fmt.Println("MaxProcs = ", max_procs)

	ch1 := make(chan int)
	ch2 := make(chan float64)

	go chan_filler(ch1, ch2)
	go chan_extractor(ch1, ch2)

	fmt.Println("Total:", <-ch2, <-ch2)
}

func chan_filler(ch1 chan int, ch2 chan float64) {
	const CHANNEL_SIZE = 1000000

	for i := 0; i < CHANNEL_SIZE; i++ {
		// burden cycle
		for j := 0; j < LOAD_CYCLES; j++ {
			i++
		}
		// thus we avoid optimizer influence
		i = i - LOAD_CYCLES

		ch1 <- i
	}

	ch1 <- -1 // end-of-data marker for the extractor
	ch2 <- 0.0
}

func chan_extractor(ch1 chan int, ch2 chan float64) {
	const PORTION_SIZE = 100000

	total := 0.0

	for {
		t1 := time.Now().UnixNano()

		for i := 0; i < PORTION_SIZE; i++ {
			// burden cycle
			for j := 0; j < LOAD_CYCLES; j++ {
				i++
			}
			i = i - LOAD_CYCLES

			m := <-ch1
			if m == -1 {
				ch2 <- total
				return // nothing more will arrive, so stop instead of blocking on ch1
			}
		}

		t2 := time.Now().UnixNano()
		dt := float64(t2-t1) / 1e9 // nanoseconds ==> seconds
		total += dt
		fmt.Println(dt)
	}
}
```

Results

Machine: Intel, 4 cores at 3 GHz, 4 GB RAM, linux-x86-64, kernel-3.11, golang-1.1.2


The maximum value that runtime.GOMAXPROCS accepts on my machine is 256. You can request more, but it will still be set to 256. The default value is 1.
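A minimal sketch of how the effective limit can be checked on your own machine. The helper name `setProcs` is hypothetical, not part of the original program. It relies only on the documented behaviour that `runtime.GOMAXPROCS(n)` returns the previous setting and that a value below 1 just queries the current one. The numbers above were measured on golang-1.1.2; on Go 1.5 and later the default is the number of logical CPUs, so expect different output on a current toolchain.

```go
package main

import (
	"fmt"
	"runtime"
)

// setProcs (hypothetical helper) applies the requested GOMAXPROCS value
// and returns the value that is actually in effect afterwards.
func setProcs(n int) int {
	runtime.GOMAXPROCS(n)        // returns the previous setting, ignored here
	return runtime.GOMAXPROCS(0) // n < 1 only queries, it changes nothing
}

func main() {
	fmt.Println("logical CPUs:", runtime.NumCPU())
	fmt.Println("initial GOMAXPROCS:", runtime.GOMAXPROCS(0))

	// Ask for a few values, including one above the 256 limit reported above.
	for _, n := range []int{1, 2, 256, 1000} {
		fmt.Printf("requested %d, in effect %d\n", n, setProcs(n))
	}
}
```

If the value in effect is lower than the value requested, the runtime has capped it.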

The number of threads created by the program (ps -C channel_test01 -L):

With -maxprocs=1: 3 threads.

With -maxprocs=2 or more: always 4 threads.

Sometimes, during long runs, 2 more threads appeared and stayed until the end of the process. This looks like garbage collection.

(I also tried it on one of my virtual servers, which reports 24 cores in /proc/cpuinfo. The number of threads there was also 4. But a fifth thread often appeared: it looks very much as if the greedy VDS was in no hurry to allocate memory, and the garbage collector came out to have a look around.)
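The thread counts above were taken from outside the process with ps. As a rough cross-check from inside the program, the runtime's built-in "threadcreate" profile can be queried. This is only a sketch, and `osThreads` is a hypothetical helper. The profile counts threads the runtime has created over the life of the process, so the figure is not guaranteed to match what ps shows at a given moment.

```go
package main

import (
	"fmt"
	"runtime"
	"runtime/pprof"
)

// osThreads (hypothetical helper) reports how many OS threads the Go
// runtime has recorded in its built-in "threadcreate" profile.
func osThreads() int {
	return pprof.Lookup("threadcreate").Count()
}

func main() {
	fmt.Println("GOMAXPROCS:", runtime.GOMAXPROCS(0))
	fmt.Println("OS threads recorded by the runtime:", osThreads())
}
```

Printing this value at the start and at the end of a long run is one way to see whether extra threads appeared along the way.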

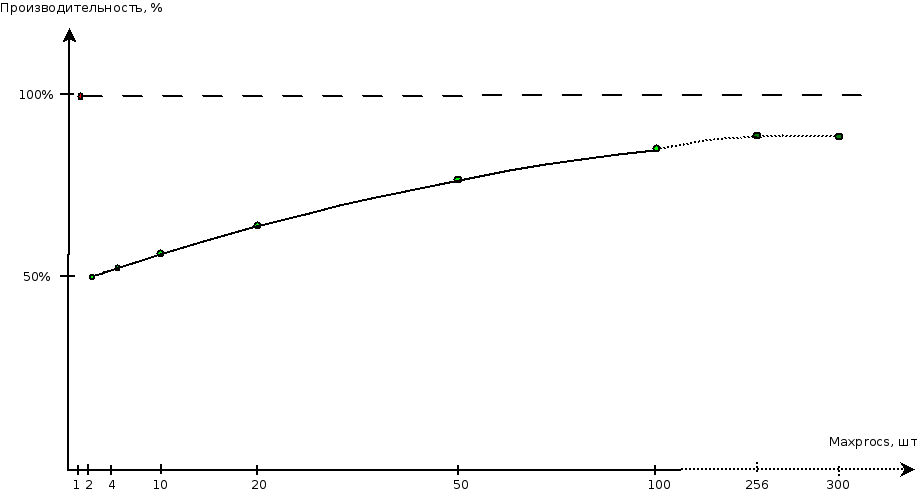

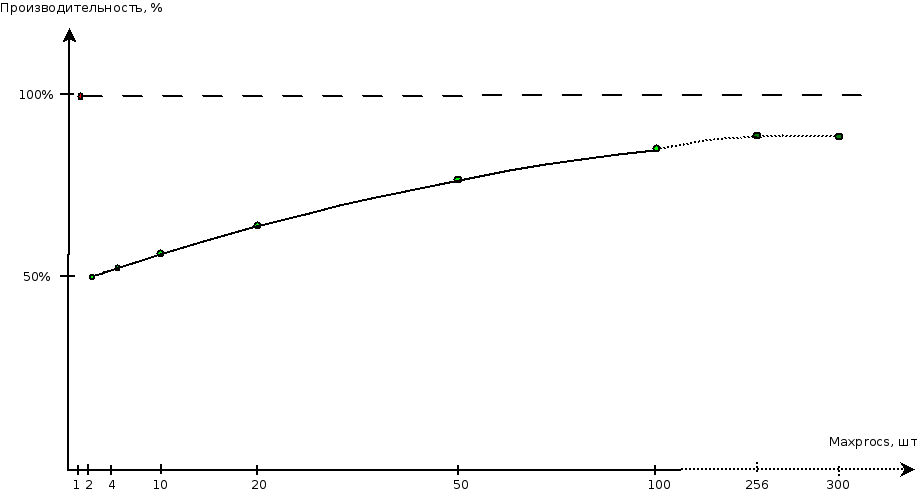

Channel fill rate versus the number of threads, with cycles = 1000

Maximum performance is reached with maxprocs = 1

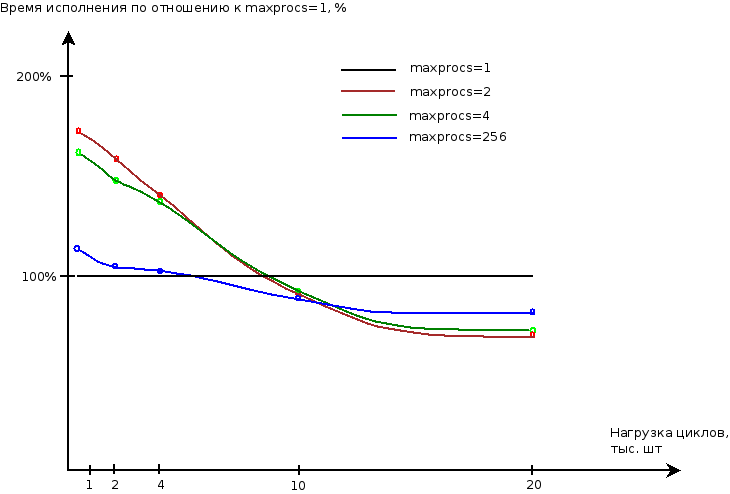

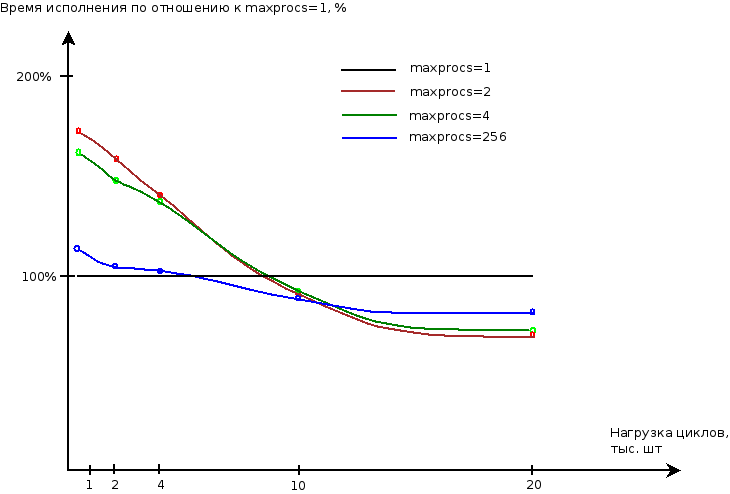

Channel fill rate versus increasing ballast load

Under load, multithreading still comes into its own.

Conclusions from the graphs

With maxprocs = 1, everything runs in 1 thread; pseudo-multithreading is provided by Go's internal scheduler, which is very efficient.

With maxprocs = 2 or more, additional threads appear and the inter-thread communication mechanism kicks in. Locks come into play and everything gets slower: about 2 times slower on sleeping threads.

With maxprocs = 3..256, the actual number of threads in the system does not grow, but some of the threads become "pseudo-threads" whose interaction falls under the control of Go's internal scheduler. This gives a small speed-up with each increase in the number of "pseudo-threads".

With a further increase in maxprocs (up to its maximum value of 256), more and more threads become pseudo-threads and fall under the control of the internal scheduler. But some of the threads still remain independent system threads. On sleeping threads and fast calculations, this costs us about 10% of the performance.
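The comparison behind these conclusions can be reproduced in miniature. The sketch below is not the author's benchmark: `transfer` is a hypothetical helper that pushes a fixed number of integers through an unbuffered channel under a given GOMAXPROCS setting and times the receiving side, with no ballast load. Absolute numbers and even the ranking depend on the Go version and the hardware, so no expected output is given.

```go
package main

import (
	"fmt"
	"runtime"
	"time"
)

// transfer (hypothetical helper) sends n integers through an unbuffered
// channel from one goroutine to another with GOMAXPROCS set to procs.
// It returns how many values were received and how long that took.
func transfer(n, procs int) (int, time.Duration) {
	prev := runtime.GOMAXPROCS(procs)
	defer runtime.GOMAXPROCS(prev) // restore the previous setting on return

	ch := make(chan int)
	go func() {
		for i := 0; i < n; i++ {
			ch <- i
		}
		close(ch) // lets the receiving loop below terminate
	}()

	start := time.Now()
	received := 0
	for range ch {
		received++
	}
	return received, time.Since(start)
}

func main() {
	const n = 1000000 // same message count as CHANNEL_SIZE in the program above

	for _, procs := range []int{1, 2, 4} {
		got, dt := transfer(n, procs)
		fmt.Printf("maxprocs=%d: %d values in %v\n", procs, got, dt)
	}
}
```

Closing the channel replaces the -1 marker used in the original program; it is the more common way in Go to signal the end of data.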

Conclusion.

Changing the runtime.GOMAXPROCS parameter can bring performance gains as well as losses. This once again confirms the general principle that the effect of parallelization depends on the specific task and the specific hardware.

Source: https://habr.com/ru/post/195818/
