Intel Perceptual Computing Challenge: An Inside View

On September 23 at 23:59 GMT, submissions for the Intel Perceptual Computing Challenge closed. Now we, the participants (including several Habr users, ithabr for example), are waiting for the results. They will probably not come soon: mid-October at best. To keep from losing my mind while waiting, I decided to write this post about how the competition went and how development looked from the point of view of our entry. After all, as the saying goes, if you don't know what to do, keep your hands busy.

I hope the other participants will add to my story with their own impressions in the comments, or even, who knows, in posts of their own.


I will try to refrain from advertising our project where possible, but since I took part in developing my own project and not anyone else's, its evolution is what I can describe best. I hope someone finds it interesting, and maybe even useful.

Just in case, let me clarify: this is not a success story. No one knows (I think not even the judges yet) what place our project will take, or whether it will place at all. It is quite possible that our application simply won't start on a judge's machine because of some small thing we failed to foresee, and the judge will just move on to the next entry. In my opinion, that is exactly why it is better to write the article now than to write yet another proud success story later, or to silently not write a story of failure.

Start

After the hackathon, we knew there would be a big Intel contest on the same PerC SDK, and we already knew we would take part. Finally, on May 6, the start of the competition was announced. Applications describing the idea had to be submitted by June 26. From all the ideas, the organizers were to select the 750 best, and only those would be allowed to continue in the competition.

The idea itself was, by and large, ready. It had been formulated at the hackathon: our project Virtualens (a virtual lens). The idea was to solve one simple problem that arises during video calls (in Skype, for example): sometimes there is something (or someone) in the background that we would rather not show the other person. Socks scattered on the sofa, say, or a mountain of dirty dishes, or just someone from the household: a girlfriend who does not want to be seen uncombed in full detail in the background, or, if you are a girl, a guy in boxer shorts whom your mother had better not see in fine detail during the call.

Since the camera that works with the PerC SDK (the Creative Senz3D, with other models promised) provides not only a picture but also depth information (a depth map), the solution was obvious: emulate the effect of a shallow depth of field. Only what lies within a given distance from the camera stays sharp, and everything farther away is blurred. (In a real lens, what is closer is blurred too; we even implemented that, but then dropped it because it only inconvenienced the user.)
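The idea can be illustrated with a few lines of Python. This is only a minimal sketch under stated assumptions, not the Virtualens implementation: the image is a grayscale list of lists, the depth map is assumed to be already aligned with it and free of holes, and the names `box_blur_pixel`, `blur_background`, and `focus_distance` are invented for the example. A plain box blur stands in for whatever filter the real project uses.

```python
def box_blur_pixel(image, x, y, radius):
    """Average the pixels in a (2*radius+1)^2 window, clipped at the borders."""
    height, width = len(image), len(image[0])
    total, count = 0, 0
    for yy in range(max(0, y - radius), min(height, y + radius + 1)):
        for xx in range(max(0, x - radius), min(width, x + radius + 1)):
            total += image[yy][xx]
            count += 1
    return total / count


def blur_background(image, depth, focus_distance, radius=1):
    """Blur pixels whose depth exceeds focus_distance; keep the rest sharp.

    Assumes image and depth have the same dimensions (hypothetical setup).
    """
    out = [row[:] for row in image]  # copy so the input stays untouched
    for y, depth_row in enumerate(depth):
        for x, d in enumerate(depth_row):
            if d > focus_distance:
                out[y][x] = box_blur_pixel(image, x, y, radius)
    return out
```

Note that this naive version lets sharp foreground pixels bleed into the blurred background near object edges; a real-time implementation would need to handle that, and would run on the GPU or in optimized native code rather than in nested Python loops.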

Algorithms

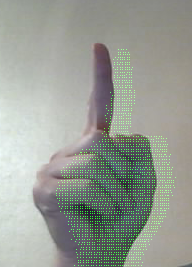

We even had a prototype implementation of sorts, put together at the hackathon. And we understood there was still a lot of work ahead. So I took a vacation for the whole of June and spent all of it on algorithms. There was plenty to do. For example, the holes in the depth map after mapping it onto the RGB picture (see the opening picture of the post: the green is there for a reason, it marks all the points for which depth information is missing).

Points with unknown depth appear for several reasons:

- Some points are so far away that the camera's "IR flashlight" simply does not reach them.

- Some points, on the contrary, are too close, or the material there is shiny, or the sun is shining from that side, so the sensor is overexposed and again we have no information about the point.

- In the picture you can see the shadow of the hand on the blinds. It arises because the "IR flashlight" and the camera lens are not at the same point. Exactly the same effect can be seen when shooting with a mobile phone's built-in flash.

- And finally, the most important and most vicious: artifacts from stretching the depth map onto RGB. The RGB camera and the depth camera have different angles of view. So when we try to align the pictures, we get roughly what happens when you try to wrap a ball in a flat sheet of paper: it does not work, you have to cut the sheet into pieces and glue them together afterwards. And so the picture "cracked." (It can of course be done more carefully, with interpolation and so on, but all of this has to be done very quickly. If the PerC SDK replaced the current algorithm with some wonderful one that does it perfectly but loads the processor and gives 10 fps, nobody would need it.)

I read all sorts of research on the subject and looked at the classic inpainting algorithms. Telea's algorithm, for example, was too slow for this task. In the end we managed to write a heuristic algorithm that suits our task well (but is not a solution for the general case).
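The post does not describe the heuristic itself, so the following is not that algorithm. It is a deliberately simple illustration of what a fast, task-specific hole filler can look like: each run of unknown pixels in a row is filled with the farther of its two valid neighbours, on the assumption that holes such as the IR shadow tend to belong to the background. The `UNKNOWN` marker and the function names are hypothetical.

```python
UNKNOWN = 0  # assumed marker for "no depth data" in this sketch


def fill_row(row):
    """Fill each run of UNKNOWN values with the farther valid neighbour."""
    out = list(row)
    n = len(out)
    i = 0
    while i < n:
        if out[i] != UNKNOWN:
            i += 1
            continue
        start = i
        while i < n and out[i] == UNKNOWN:
            i += 1
        # Neighbours just outside the run; UNKNOWN if the run touches an edge.
        left = out[start - 1] if start > 0 else UNKNOWN
        right = out[i] if i < n else UNKNOWN
        fill = max(left, right)  # prefer the larger (farther) depth
        for j in range(start, i):
            out[j] = fill
    return out


def fill_holes(depth):
    """Apply the row-wise fill to every row of a depth map."""
    return [fill_row(row) for row in depth]
```

A single pass over each row costs time linear in the number of pixels, which is the kind of budget a per-frame filter has; the price is that the result is only plausible, not correct, in the general case.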

Filing an application

At the last moment the organizers sprang a surprise. A pleasant one. The application deadline was pushed back by a week (to July 1). So not only did I manage to polish the algorithms, we also managed to bring it all up to the state of a tech demo, and even to shoot and edit a demonstration video, albeit without sound. Here is what came out:

Here we still have the implementation that also blurs whatever is too close to the camera.

Video applications from other participants began to appear on YouTube too. Here, for example, is what I think is a cool idea and demo:

Finalists

The results of the selection became known only on July 12, that is, 12 days after submission. Cameras were to be sent to the finalists. We already had one camera (from the hackathon), but we needed another: there were two of us and only one camera. A second unit also mattered because we needed to assess how much the calibration varied from camera to camera. The camera we had showed a problem: after mapping depth onto RGB, the result was shifted (see the picture: the green dots are the depth data corresponding to the hand). And it was unclear whether all cameras were like this or whether each was off in its own way. For our task this was critical, though for many others it was not.

While waiting for the camera, we solved two more tasks.

First, it was not enough to blur the image where needed and leave it sharp elsewhere; we also had to deliver this video stream to the application (Skype, say). That is, we had to pretend to be a camera. After some experiments we settled on writing a DirectShow source filter. Alas, Microsoft cut off access to DirectShow in Metro applications; there it is Media Foundation now, and in Media Foundation you cannot pretend to be a camera for someone else's application. Supporting Metro requires writing a full-fledged driver, which is harder. So we decided to drop Metro support in the competition version: a better chance of getting the application done by the deadline.

Second, we were looking for someone who was not a programmer but quite the opposite: a designer, a creative person, someone who understands humans rather than code. We needed icons, we needed a minimal but neat interface, we had to shoot a video, and so on. Oh, and this person also had to speak English to voice that video. After ten days of searching, we found one!

Thus our team grew to three people:

- Nadia: GUI programmer, C# expert, and generally smart and beautiful

- Lyokha (Alexey): our friend and creative mastermind; he helped with the UI design, drew the pictures and icons, shot and edited the video, and his is the voice you hear in all of our English-language videos

- Yours truly, valexey: algorithms, all the systems-level work, and the project's ideologist

Around the same time, the long-awaited camera arrived.

Development

Overjoyed at finally having a person who knows which end of Photoshop to hold, we started coming up with icon options for our application (the most important thing in a program, sure, especially with a month left until the deadline and nothing ready yet; though it is only now clear to me that nothing was ready, back then it seemed almost everything was!). We went through perhaps 10 variants and spent about a week on it. Then we put the icon into the system tray (where it belongs: while our virtual camera is in use, an application lives in the tray through which you can quickly adjust things if you do not want to use gestures) and realized it did not look good there at all. So we had to redo it again.

Then we started designing the program's interface. Clearly, the user will mostly not see the interface: they work in Skype, or Google Hangouts, say, and adjust Virtualens mainly with gestures. But sometimes, for example the first time, they will open the settings dialog, where gestures can be turned on and off and where the distance beyond which to blur and the blur strength can be adjusted (although these two parameters are more convenient to set with gestures). Besides settings, this dialog was also meant to teach: to show exactly which gestures work and how to perform them. The gestures are simple, but people are not used to them yet, and at least the first time it helps to see someone do them correctly.

There was also an idea for showing what blur strength is and how it differs from distance. A GUI component for this was designed and even implemented. It showed both how the size of the convolution kernel (the blur strength) depends on the distance from the camera and an example of how the picture would be blurred with that combination. But after several people completely misinterpreted what the component showed, we decided to abandon it. It had turned out too... hmm... mathematical.
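To make the two parameters concrete: "distance" decides where blurring starts, and "strength" decides how fast the kernel grows beyond that point. The actual curve used in Virtualens is not given in the post, so the linear ramp below, along with the names `kernel_radius`, `strength`, and `max_radius`, is purely an assumption for illustration.

```python
def kernel_radius(depth, focus_distance, strength, max_radius=8):
    """Map a pixel's depth to a blur kernel radius (hypothetical linear ramp).

    Pixels at or closer than focus_distance stay sharp (radius 0). Beyond it
    the radius grows in proportion to strength and is capped at max_radius.
    """
    if depth <= focus_distance:
        return 0
    return min(max_radius, int(strength * (depth - focus_distance)))
```

With `focus_distance=1.5` and `strength=4.0`, a point at depth 2.0 gets a radius of 2, while raising the strength to 8.0 doubles that without moving the point where blurring begins.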

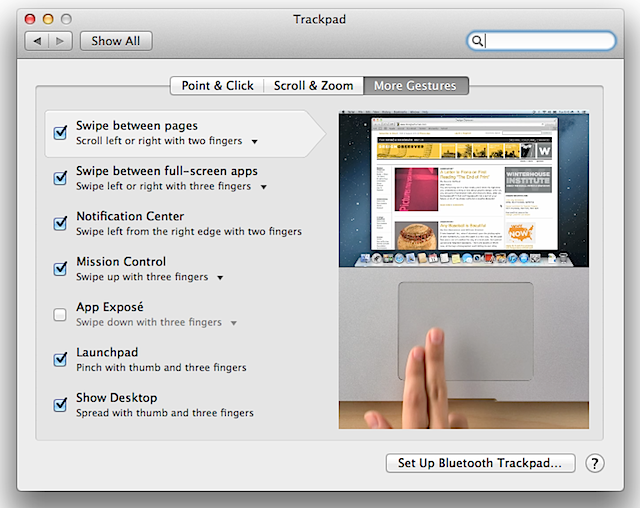

In the end we decided to simplify the GUI as much as possible and to make it as native-looking (WinForms was used for the GUI; by default it does not look native, so some tinkering was needed) and lightweight as possible. For teaching the user, we borrowed an idea from Apple's Trackpad settings dialog: a video demonstration of the gesture plays when you hover over the corresponding item:

And here is what we ended up with:

The "play" buttons are there for a reason: they are for touchscreen users, who will not be moving a mouse around and most likely won't guess that they can tap there to play the video. By default, this video area shows the camera preview (already blurred as and where it should be).

Camera calibration

The camera that arrived made us sad. It had no (or almost no) shift. This meant either that this unit was just lucky or that everything was fine in the newer cameras, but either way we did not know what the users (the judges) would have: what kind of camera and what depth shift relative to RGB. We had to think about calibration, and apparently one that was as simple and user-friendly as possible. This was hard. Very hard, and it threatened to bury the whole project. The difficulty was not in doing the calibration as such, but in making it so that anyone, however far from technology, could perform it.

Fortunately, on August 9 a Perceptual Computing Challenge question-and-answer session was held. Yes, in Russian, with Russian developers from Intel (before that there had been an English-language webinar, which seemed rather useless to me). At this session I asked about calibration and the problems with it. I was told that yes, the problem is known: in old cameras the poorly fixed sensor can shift during transportation, and a calibration tool would be released soon. In other words, they were taking this problem on themselves. Phew! We exhaled. Thanks, Intel!

Video shooting

The deadline was approaching, and we had to shoot a video about the project and about ourselves. For this we armed ourselves with a Man with a Camera and went to a studio that, quite inexpensively, agreed to put up with us for an evening.

I never thought that talking to a camera was so hard. Yes, I have given interviews (including on camera), but this is not the same. Not at all. To record a video with a coherent story, you need a lot of takes. Being filmed together is very hard: while one speaks, the other is bound to be looking in the wrong direction.

But we shot it. And then threw it away and reshot it at another time, in another place, in a different format, and entirely on our own. That was in September.

Postponement

On August 10, 16 days before the Final Great Deadline, the organizers announced that everything was postponed again, to September 23. In effect they gave us another month, but I would not say that month did us much good. We relaxed a bit. Rested a little. Then it got colder and we got a little sick. I, for example, tried unsuccessfully to split myself between my main job and the project, and did not have enough strength for either. Dropping one or the other entirely was not an option either. As a result, progress on both fronts was more than modest. Alas.

Still, something did happen in the second half of August and early September. It was less about programming accomplishments and more about looking around at what similar things exist in the world. And there was something to see. For example, Google Hangouts already has built-in background blur or replacement. There is a catch, though: when you leave the frame, your transparent outline remains, and through it everything going on in the room is visible.

An even scarier competitor turned up: Personify for Skype, which removes the background (rather than blurring it) and ships bundled with the camera. And Intel is friends with them. They are already where we only dream of being! It is built as a Skype plug-in. (This explains why people at Intel kept assuming in conversations that our project was a Skype plug-in too! I kept being surprised, because building this as a plug-in is rather strange. Then again, the fine points of how Skype plug-ins work are presumably outside the competence of the Intel employees involved in the PerC SDK.) However, installing the application revealed something odd: it was not very well made. Moreover, it had a bug (how they managed it I do not understand): while their plug-in was running (and it started right after Skype launched, grabbed the camera, and loaded my laptop's processor to 70%, and that is without a call), no other PerC SDK application that uses depth and RGB at the same time could be used.

All in all, we were not upset but rather the opposite: we got a charge of optimism. We can do better!

But the main work, of course, as always, fell on the last week (who would have doubted it!).

The last week

Suddenly there was a week left before the deadline. And we still had to reshoot the video, sort out the interaction of components running in different address spaces, make a quick-settings dialog, build the installer, and deal with a thousand more vital details. The main functionality worked, but without the small things none of it would fly! And not all of them were small, either: for example, a far from small memory leak was found in the GUI.

We worked 20 hours a day that week.

We worked and reworked the gestures. Initially the user was to enter the camera settings mode by clapping their hands in front of the camera. That turned out to be very unpleasant for the person on the other end. So we switched to a combination of gestures: the user first makes a V gesture (it is, for example, in the picture at the beginning of the post), the Virtualens icon appears on the preview to confirm that the gesture was recognized, and then you just wave at it. It turned out very intuitive: first we address Virtualens by showing the first letter of its name with our fingers, then we wave to it. Exiting the settings is the same V, only without the wave.
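The gesture sequence described here is a small state machine, which can be sketched as follows. The state and gesture names are invented for the example, and details the post does not mention (timeouts, what happens on an unrelated gesture) are simply assumed: unrecognized input leaves the state unchanged.

```python
# (current state, recognized gesture) -> next state
TRANSITIONS = {
    ("idle", "v"): "armed",         # V recognized: show the Virtualens icon
    ("armed", "wave"): "settings",  # wave at the icon: enter settings mode
    ("settings", "v"): "idle",      # V again: leave settings mode
}


def step(state, gesture):
    """Return the next state; any other gesture keeps the current state."""
    return TRANSITIONS.get((state, gesture), state)


def run(gestures, state="idle"):
    """Feed a sequence of recognized gestures through the state machine."""
    for gesture in gestures:
        state = step(state, gesture)
    return state
```

Requiring two distinct gestures in a row is what makes accidental activation unlikely: a stray wave in the `idle` state does nothing, and a stray V only shows the icon.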

We also made the camera image switch off when the user covers the lens with a hand: instead of hunting for the Skype button that disables video, you simply cover the lens with your hand and go attend to your business.
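The post does not say how the covered lens is detected. One plausible approach, shown here only as an assumption, is to treat a frame as covered when almost all of its pixels are very dark. The function name and both thresholds are hypothetical and would need tuning on real frames.

```python
def is_lens_covered(gray_frame, dark_level=20, min_fraction=0.95):
    """Guess whether the lens is covered from one grayscale frame (0-255).

    Returns True when at least min_fraction of the pixels are at or below
    dark_level. Both thresholds are illustrative assumptions.
    """
    pixels = [p for row in gray_frame for p in row]
    dark = sum(1 for p in pixels if p <= dark_level)
    return dark / len(pixels) >= min_fraction
```

In practice one would probably also require the condition to hold for several consecutive frames, so that a hand passing in front of the camera does not cut the video.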

We shot the video ourselves, this time outdoors, and edited it. We caught and squashed a terrible swarm of bugs (how many of them did we add, coding at such a pace?!). We shot a video demonstrating the gestures. Nadia fell ill after the outdoor shoot: she caught a cold. The night before the deadline she said: "I won't fall asleep from this cough until morning anyway, so I'll finish the project!", and she did finish it, solving two very nasty problems that night that had suddenly surfaced. The next day she was hospitalized.

My sister helped me a lot with the installer: she did the main work, and all I had to do was fix a few details.

Two hours before the deadline, everything on my side seemed ready (in fact, it later turned out that not everything was). We were only waiting for the final video to be edited with the English voice-over and rendered. I wait. An hour remains before the deadline. I wait. With 30 minutes left, I start frantically adding English subtitles to the video myself. Fifteen minutes before the deadline I call Alexey to ask what is going on. It turns out he started the render, which takes about 20 minutes... and passed out on the spot. He fell asleep! People have a breaking point. But we made it. Four minutes before the deadline, everything was sent.

We made it.

Results

In the end, I forgot to write in the project description that Skype needs to be restarted after installation. I added it to the video description on YouTube, but there is no guarantee anyone will notice. Now a nightmare torments me: a judge runs the installer, the Virtualens item does not appear among the cameras in Skype, the judge uninstalls it and moves on to the next project.

I do not know, we do not know, what the results of the competition will be. We cannot even guess. But we know that it has already paid off: it paid off in experience. I did not know I could work so productively (even if only for a few days). This competition served as a benchmark of our abilities. Our project, however, will not end with the competition. We have now given our cameras to several people so they can test our program, and Personify for Skype as well. We need to understand what is right, what is wrong, and what needs fixing.

Project videos

Our main video:

I think a video about how to install something and then restart Skype is of no interest to anyone here. But seeing the settings dialog live should be interesting, I think:

And here is how to configure Virtualens if your client has no webcam options button:

Other projects: in general, you can go here and watch all the videos no older than a month; those will be the final entries for the competition.

Also, one participant has already gone through YouTube and collected all the projects they could find (about 110 of them): software.intel.com/en-us/forums/topic/474069

And here is what I particularly liked:

I liked ithabr's project, but he asked me not to share the link to his video. So I won't.

This one is also from Russia, from Samara to be exact:

And this one is simply magic. Literally: spells in the game are cast through the PerC SDK. You can feel like a real mage, leveling up not abstract numbers but your own dexterity and smoothness of hand:

Thanks to everyone who read this wall of text to the end.

Source: https://habr.com/ru/post/195474/
