Performance comparison of Xamarin (monodroid) and Java (DalvikVM) on Android devices

Good day. Many people are interested in how Xamarin performance compares with native code on Android and iOS. I'll leave the iOS question open for now, but I'd like to settle all questions about monodroid performance once and for all.

These questions often stem from a misunderstanding of how monodroid works. For example, I have been asked things like "Does Xamarin get recompiled for the JVM?" It certainly does not. It is important to understand that Xamarin (the Mono runtime) runs at the same level of the Android stack as the Dalvik virtual machine. Therefore, when comparing performance, we are really comparing two virtual machines: the Mono VM and the Dalvik VM.

Test method

To measure performance, we need a generally accepted benchmark that already exists in Java and can be ported to C#. I chose the well-known LINPACK benchmark, first because its source code is open, so a C# port is easy to write, and second because a ready-made Java version for Android, LINPACK for Android, already exists.


The LINPACK benchmark evaluates a computer system's performance by measuring the speed of its floating-point operations. The benchmark was created by Jack Dongarra and measures how fast a computer solves a dense system of linear equations $Ax = b$ of size $N \times N$ using LU decomposition. The solution is obtained by Gaussian elimination with partial pivoting, which performs $\tfrac{2}{3}N^3 + 2N^2$ floating-point operations. The result is expressed in millions of floating-point operations per second (MFLOP/s, usually written simply as MFLOPS). The benchmark was first described in the documentation of LINPACK, a Fortran linear-algebra library, and its variants have been used ever since, for example, to compile the TOP500 list of supercomputers.
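To make the operation count and the MFLOPS figure concrete, here is a simplified C# sketch of the measured task: a dense solve by Gaussian elimination with partial pivoting, timed with `Stopwatch` and converted to MFLOPS using the $\tfrac{2}{3}N^3 + 2N^2$ count. It is not the original LINPACK `dgefa`/`dgesl` code, and the names `LinpackSketch`, `FlopCount`, `Mflops`, `Solve` and `Run` are hypothetical. The random, diagonally dominant test matrix in `Run` is also an assumption made for this sketch.

```csharp
using System;
using System.Diagnostics;

// Simplified LINPACK-style measurement (hypothetical names; not the original
// LINPACK dgefa/dgesl routines).
public static class LinpackSketch
{
    // Operation count LINPACK uses for an N x N solve: 2/3*N^3 + 2*N^2.
    public static double FlopCount(int n)
    {
        double nd = n;
        return 2.0 / 3.0 * nd * nd * nd + 2.0 * nd * nd;
    }

    // MFLOP/s = operations / (seconds * 10^6).
    public static double Mflops(int n, double seconds) => FlopCount(n) / (seconds * 1.0e6);

    // Solves a*x = b by Gaussian elimination with partial pivoting.
    // a is overwritten with the eliminated matrix; b is overwritten with x.
    public static void Solve(double[,] a, double[] b)
    {
        int n = b.Length;
        for (int k = 0; k < n; k++)
        {
            // Partial pivoting: pick the row (at or below k) with the largest |a[i,k]|.
            int p = k;
            for (int i = k + 1; i < n; i++)
                if (Math.Abs(a[i, k]) > Math.Abs(a[p, k])) p = i;
            if (a[p, k] == 0.0) throw new InvalidOperationException("Matrix is singular");
            if (p != k)
            {
                for (int j = 0; j < n; j++) (a[k, j], a[p, j]) = (a[p, j], a[k, j]);
                (b[k], b[p]) = (b[p], b[k]);
            }
            // Eliminate column k from every row below the pivot row.
            for (int i = k + 1; i < n; i++)
            {
                double m = a[i, k] / a[k, k];
                for (int j = k; j < n; j++) a[i, j] -= m * a[k, j];
                b[i] -= m * b[k];
            }
        }
        // Back substitution on the resulting upper-triangular system.
        for (int i = n - 1; i >= 0; i--)
        {
            double s = b[i];
            for (int j = i + 1; j < n; j++) s -= a[i, j] * b[j];
            b[i] = s / a[i, i];
        }
    }

    // Builds a random system, times the solve and returns MFLOP/s.
    public static double Run(int n, int seed = 1)
    {
        var rnd = new Random(seed);
        var a = new double[n, n];
        var b = new double[n];
        for (int i = 0; i < n; i++)
        {
            for (int j = 0; j < n; j++) a[i, j] = rnd.NextDouble() - 0.5;
            a[i, i] += n; // diagonal dominance keeps the system well conditioned
            b[i] = rnd.NextDouble();
        }
        var sw = Stopwatch.StartNew();
        Solve(a, b);
        sw.Stop();
        return Mflops(n, sw.Elapsed.TotalSeconds);
    }
}
```

For example, `FlopCount(100)` is about 6.87 × 10^5 operations, so a hypothetical solve of N = 100 finishing in 5 ms would correspond to roughly 137 MFLOPS.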

Implementation

Although the task is rather simple, I decided to implement it following the guidelines I developed for cross-platform development.

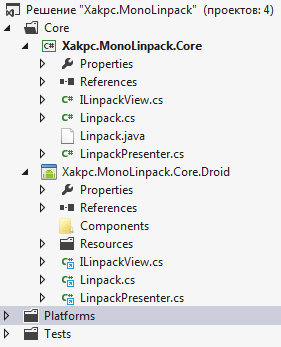

First, we create a cross-platform solution:

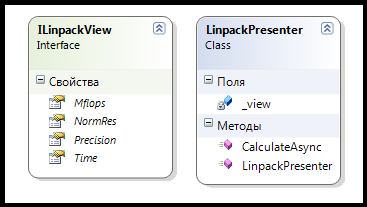

Then, following the MVP (Model-View-Presenter) pattern, we create the Presenter and the View.

We write tests and implement the Presenter test-first. For this, I use NUnit and NSubstitute. There is little point in describing NUnit; in my opinion, there is simply no more convenient testing framework. NSubstitute is an extremely convenient framework for creating interface-based stubs. Both frameworks are installed via NuGet.
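Because the Presenter talks to the View only through an interface, a test can supply any object that implements it. Without a mocking framework, that object has to be written by hand; the sketch below shows such a stub. The `ILinpackView` member names are taken from the presenter code in this article, but their types (`double` and `string`) are assumptions, and `RecordingLinpackView` is a hypothetical name. NSubstitute's `Substitute.For<ILinpackView>()` produces an equivalent object at runtime: read/write properties keep the values assigned to them, and calls are recorded for `Received()` checks.

```csharp
// Assumed View contract: member names follow the article's presenter code,
// the types are guesses.
public interface ILinpackView
{
    double Mflops { get; set; }
    double NormRes { get; set; }
    double Precision { get; set; }
    string Time { get; set; }
}

// Hand-written test double (hypothetical): stores values like an ordinary object
// and counts how many times Mflops was assigned, which is the kind of
// bookkeeping NSubstitute does for us automatically.
public class RecordingLinpackView : ILinpackView
{
    private double _mflops;

    public int MflopsSetCount { get; private set; }

    public double Mflops
    {
        get => _mflops;
        set { _mflops = value; MflopsSetCount++; }
    }

    public double NormRes { get; set; }
    public double Precision { get; set; }
    public string Time { get; set; } = string.Empty;
}
```

Every new View member means another hand-written property in such a stub, which is exactly the boilerplate NSubstitute removes.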

A short example of NSubstitute usage from its documentation:

```csharp
// Let's say we have a basic calculator interface:
public interface ICalculator
{
    int Add(int a, int b);
    string Mode { get; set; }
    event Action PoweringUp;
}

// We can ask NSubstitute to create a substitute instance for this type.
// We could ask for a stub, mock, fake, spy, test double etc., but why bother
// when we just want to substitute an instance we have some control over?
_calculator = Substitute.For<ICalculator>();

// Now we can tell our substitute to return a value for a call:
_calculator.Add(1, 2).Returns(3);
Assert.That(_calculator.Add(1, 2), Is.EqualTo(3));

// We can check that our substitute received a call, and did not receive others:
_calculator.Add(1, 2);
_calculator.Received().Add(1, 2);
_calculator.DidNotReceive().Add(5, 7);

// If our Received() assertion fails, NSubstitute tries to give us some help
// as to what the problem might be:
//   NSubstitute.Exceptions.ReceivedCallsException : Expected to receive a call matching:
//       Add(1, 2)
//   Actually received no matching calls.
//   Received 2 non-matching calls (non-matching arguments indicated with '*' characters):
//       Add(1, *5*)
//       Add(*4*, *7*)

// We can also work with properties using the Returns syntax we use for methods,
// or just stick with plain old property setters (for read/write properties):
_calculator.Mode.Returns("DEC");
Assert.That(_calculator.Mode, Is.EqualTo("DEC"));

_calculator.Mode = "HEX";
Assert.That(_calculator.Mode, Is.EqualTo("HEX"));
```

In our case, we start with the Setup method:

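```csharp
private ILinpackView view;
private LinpackPresenter presenter;

// Runs once before all tests in the fixture (NUnit 2.x; NUnit 3 uses [OneTimeSetUp]).
[TestFixtureSetUp]
public void Setup()
{
    view = Substitute.For<ILinpackView>();
    presenter = new LinpackPresenter(view);
}
```

Then we write a simple test of the asynchronous calculation: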

```csharp
[Test]
public void CalculateAsyncTest()
{
    // Block until the benchmark and the View update have finished.
    presenter.CalculateAsync().Wait();
    Assert.That(view.Mflops, Is.EqualTo(130.0).Within(5).Percent);
}
```

Using third-party software, I found that my PC performs at about 130 MFLOPS, so I use that as the expected value, with a 5% tolerance.
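The tolerance in this assertion is relative to the expected value. Written out by hand, the check amounts roughly to the following; this is a sketch of the arithmetic, not NUnit's actual implementation, and `WithinPercent` is a hypothetical helper.

```csharp
using System;

public static class ToleranceSketch
{
    // True when actual lies within `percent` percent of expected:
    // |actual - expected| <= |expected| * percent / 100.
    public static bool WithinPercent(double actual, double expected, double percent) =>
        Math.Abs(actual - expected) <= Math.Abs(expected) * percent / 100.0;
}
```

With an expected value of 130 and a 5% tolerance, the allowed deviation is 130 × 0.05 = 6.5, so the test passes for any result from 123.5 to 136.5 MFLOPS.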

Inside the method, I start an asynchronous Task that runs the benchmark and then populates the View. Everything is simple and clear:

```csharp
public Task CalculateAsync()
{
    Linpack l = new Linpack();
    // Run the benchmark on a background task, then copy the results into the View.
    return Task.Factory.StartNew(() => l.RunBenchmark())
        .ContinueWith(t =>
        {
            _view.Mflops = l.MFlops;
            _view.NormRes = l.ResIDN;
            _view.Precision = l.Eps;
            _view.Time = l.Time.ToString();
        });
}
```

At this point the program is essentially complete, and not a single line of platform-specific code has been written yet. Now we create an Android project:

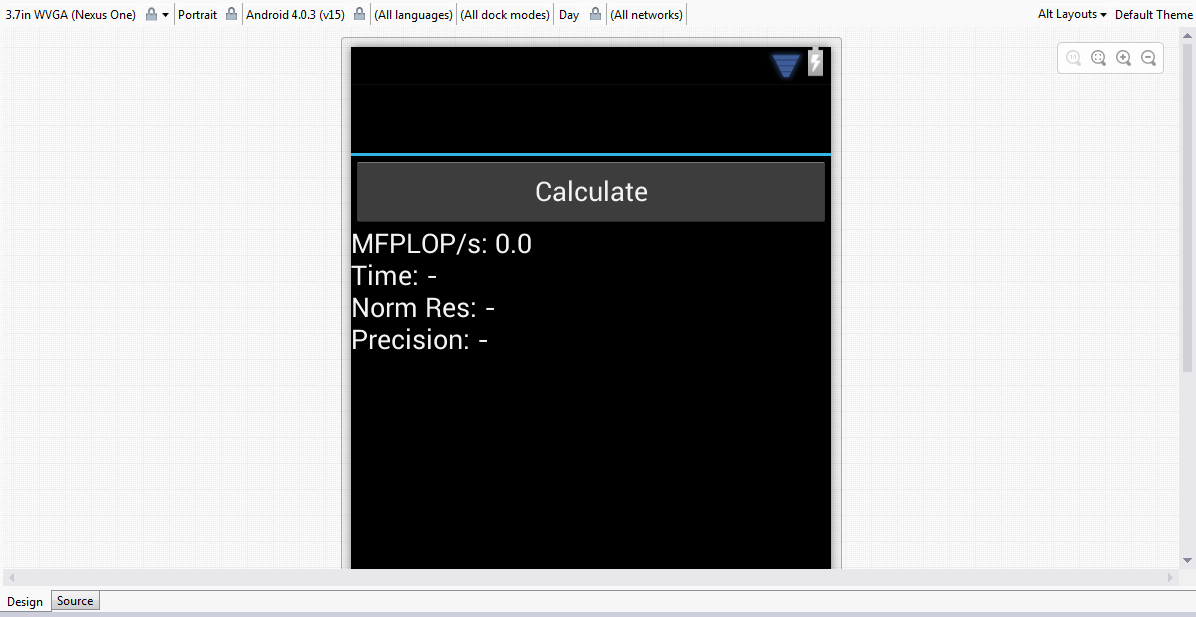
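A side note on the `CalculateAsync` method shown above: with a compiler that supports `async`/`await`, it could also be written as in the following sketch. `IBenchmark`, `AsyncLinpackPresenter`, `FixedBenchmark` and `StubView` are hypothetical stand-ins for the article's `Linpack` class and view; only the member names come from the original code, and their types are assumptions.

```csharp
using System;
using System.Threading.Tasks;

// Hypothetical abstraction over the article's Linpack class (member names
// match the original code; the types are assumptions).
public interface IBenchmark
{
    void RunBenchmark();
    double MFlops { get; }
    double ResIDN { get; }
    double Eps { get; }
    double Time { get; }
}

public interface ILinpackView
{
    double Mflops { get; set; }
    double NormRes { get; set; }
    double Precision { get; set; }
    string Time { get; set; }
}

public class AsyncLinpackPresenter
{
    private readonly ILinpackView _view;
    private readonly Func<IBenchmark> _createBenchmark;

    public AsyncLinpackPresenter(ILinpackView view, Func<IBenchmark> createBenchmark)
    {
        _view = view;
        _createBenchmark = createBenchmark;
    }

    public async Task CalculateAsync()
    {
        IBenchmark l = _createBenchmark();
        // Run the CPU-bound benchmark on a thread-pool thread.
        await Task.Run(() => l.RunBenchmark());
        // Execution resumes on the captured SynchronizationContext, if there is one.
        _view.Mflops = l.MFlops;
        _view.NormRes = l.ResIDN;
        _view.Precision = l.Eps;
        _view.Time = l.Time.ToString();
    }
}

// Minimal fakes with dummy values, for trying the presenter outside Android.
public class FixedBenchmark : IBenchmark
{
    public bool Ran { get; private set; }
    public void RunBenchmark() => Ran = true;
    public double MFlops => Ran ? 100.0 : 0.0;
    public double ResIDN => 1.0;
    public double Eps => 1e-16;
    public double Time => 0.25;
}

public class StubView : ILinpackView
{
    public double Mflops { get; set; }
    public double NormRes { get; set; }
    public double Precision { get; set; }
    public string Time { get; set; } = string.Empty;
}
```

Two behavioral differences are worth knowing. First, code after `await` resumes on the captured `SynchronizationContext` if there is one (for example, the UI thread when the method is called from an Activity), whereas `ContinueWith` without an explicit scheduler runs on `TaskScheduler.Current`, normally the thread pool. Second, if `RunBenchmark` throws, `await` rethrows the exception to the caller, while the original continuation runs regardless and copies whatever values the benchmark object holds.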

The visual designer inside Visual Studio lets us quickly and easily lay out the necessary controls and preview how the application looks with different themes. However, since no theme has been applied to the Activity, on the device we will get the default look:

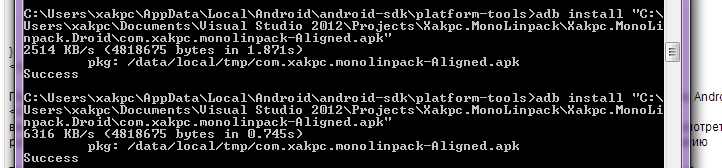

Now all that remains is to build the Release configuration, sign it with the wizard built into Visual Studio, and install it on the devices using the adb.exe console utility.

Recently I noticed that execution speed depends not only on the Debug/Release configuration but also on whether the device is connected via USB. When the phone is connected in Android developer mode, it sends large amounts of debugging information to the computer, which may (or may not) affect performance, especially if the code contains logging calls such as Trace. Therefore, disconnect the device before running the tests.

Done!
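One way to keep logging out of the measured code in a Release build is the `[Conditional]` attribute: when the named symbol is not defined, the compiler removes calls to such a method together with the evaluation of their arguments. Note that `System.Diagnostics.Trace` methods are conditional on `TRACE`, which Visual Studio project templates typically define in Release as well as Debug, whereas `System.Diagnostics.Debug` methods are conditional on `DEBUG`. `BenchLog` below is a hypothetical helper, not part of any library.

```csharp
using System;
using System.Diagnostics;

public static class BenchLog
{
    // Calls are compiled away (including argument evaluation) unless DEBUG is
    // defined, so a Release build of the benchmark pays nothing for them.
    [Conditional("DEBUG")]
    public static void Log(string message) => Console.WriteLine(message);
}
```

A call such as `BenchLog.Log("row " + i + " done")` inside a hot loop therefore disappears entirely from a Release build, string concatenation included.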

Result

I have two test devices: an HTC Desire with Android 2.3 (Qualcomm QSD8250, 1000 MHz, 512 MB RAM) and a Fly IQ443 with Android 4.0.4 (MediaTek MT6577, 1000 MHz, 512 MB RAM).

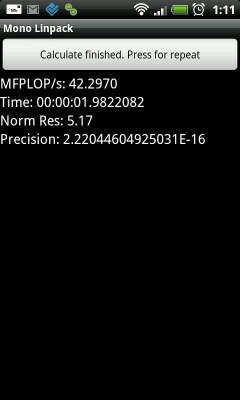

Here are their results:

HTC Desire:


Fly IQ443:


And some nice charts.

Conclusion

The test results show that Mono's performance on Android is comparable to Dalvik's and sometimes exceeds it; on the whole, the two are roughly equal. The second column is not directly comparable: Mono spread the test across both cores without any action on my part (I suppose somewhere at the Task level), whereas LINPACK for Android requires explicitly selecting the multi-threaded test.

Incidentally, this project also shows the difference in release package size. The Java version of the test is only 280 KB, while the monodroid version is almost 2.3 MB per processor architecture (armeabi and armeabi-v7a), i.e. 4.6 MB in total, which, given modern networks, does not seem particularly critical to me. If this APK size is unacceptable to you, you can build and distribute separate packages for armeabi and armeabi-v7a; fortunately, Google Play allows uploading different APKs for different platforms.

The source code of the application is here.

PS I would like to collect statistics across devices. If you have an Android device and 10 minutes of free time, I would be very grateful if you measured its performance with LINPACK for Android and the newly created MonoLINPACK and posted the results here (if your device has a multi-core processor, select Run Multiple Thread right away in LINPACK for Android).

PPS A similar test for iOS will

UPD1. The tests so far make it clear that Mono and Task do not use all cores on 4-core processors by default. As a result, LINPACK for Android and MonoLINPACK produce very different results on such devices. MonoLINPACK will soon be modified to use the TPL.
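One possible way to use the TPL here, sketched under the assumption that the elimination is organized as a straightforward Gaussian-elimination loop (this is not necessarily how MonoLINPACK was actually modified), is to hand the independent row updates of each elimination step to `Parallel.For`. `ParallelElimination.Solve` is a hypothetical name.

```csharp
using System;
using System.Threading.Tasks;

public static class ParallelElimination
{
    // Gaussian elimination with partial pivoting; the row updates of each
    // elimination step are distributed across cores with Parallel.For.
    // a is overwritten with the eliminated matrix; b is overwritten with x.
    public static void Solve(double[,] a, double[] b)
    {
        int n = b.Length;
        for (int k = 0; k < n; k++)
        {
            // Pivot search and row swap stay sequential.
            int p = k;
            for (int i = k + 1; i < n; i++)
                if (Math.Abs(a[i, k]) > Math.Abs(a[p, k])) p = i;
            if (a[p, k] == 0.0) throw new InvalidOperationException("Matrix is singular");
            if (p != k)
            {
                for (int j = 0; j < n; j++) (a[k, j], a[p, j]) = (a[p, j], a[k, j]);
                (b[k], b[p]) = (b[p], b[k]);
            }
            // Each row i > k reads only pivot row k and writes only row i, so the
            // iterations are independent. Parallel.For returns only after all of
            // them finish, so capturing k in the lambda is safe.
            Parallel.For(k + 1, n, i =>
            {
                double m = a[i, k] / a[k, k];
                for (int j = k; j < n; j++) a[i, j] -= m * a[k, j];
                b[i] -= m * b[k];
            });
        }
        // Back substitution stays sequential (only O(N^2) work).
        for (int i = n - 1; i >= 0; i--)
        {
            double s = b[i];
            for (int j = i + 1; j < n; j++) s -= a[i, j] * b[j];
            b[i] = s / a[i, i];
        }
    }
}
```

Starting a `Parallel.For` for every column has a scheduling cost, so this split only pays off for reasonably large N; the thread pool, not this code, decides how many cores actually take part.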

Source: https://habr.com/ru/post/194866/
