And how many drones give milk? Reverse engineering techniques in sound design

This article is an attempt to find out whether the experience of reverse engineering in sound design can be conveyed with the expressive means of the Russian language.

Once, while reading music forums, I came across a thread discussing the sound design of the film Oblivion. People wanted to know how the drone sounds had been made. A few weeks later the thread still contained no substantive answers, and the official video offered little more than filler, so I decided to look for the answer myself using reverse engineering methods.

As a reference I chose the first scene featuring a drone (in the 12th minute of the film), which can be found on YouTube. After several hours of work, I got the following result:


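Preparation

The whole development cycle can be divided into the following stages:

- Searching for any additional information on the topic
  - videos from the studios that worked on the film's sound
  - interviews with the creators
  - discussions on specialized forums (what if someone has already figured everything out and we are reinventing the wheel)
  - technical articles on related topics
- Analysis of the original
  - visual analysis of the waveform, spectrogram, etc.
  - making a list of all sounds used in the scene
  - description of each sound in technical terms (timbre, spectrum, type of synthesis, layers, articulation)
  - compiling a list of associations for each sound (objects, emotions)
  - grouping of related sounds (to avoid repeated work)
- Selection of suitable tools
  - spectrum analyzer
  - audio editor
  - synthesizer
- Synthesis
  - the synthesis itself
  - additional sound design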

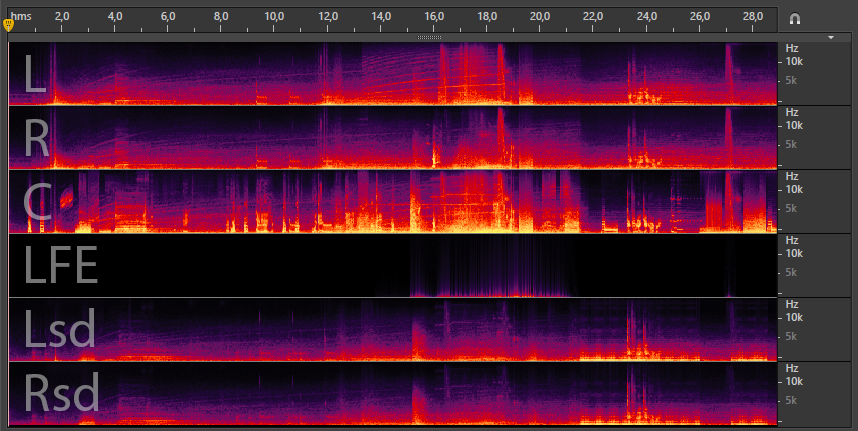

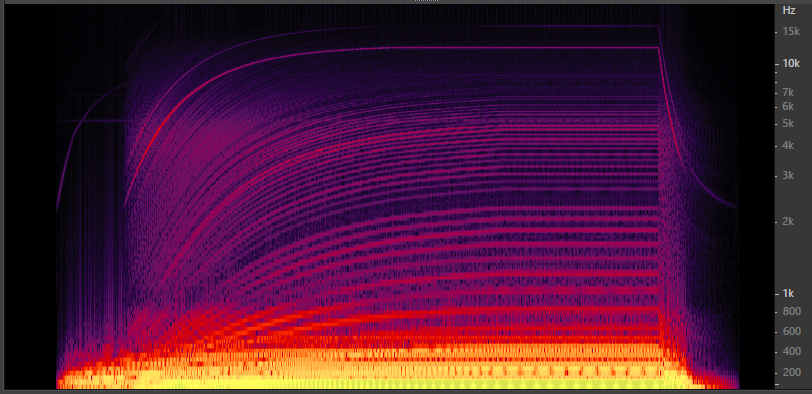

Analysis of the original

First, I used ffmpeg to cut the 30-second reference scene out of the film and saved it as an audio file, which I imported into my main host for convenient A/B comparison during the work. Then, using SoX, I generated large-format (2000x2000 pixels) spectrograms of each audio channel. Although I do most of my spectral work in Adobe Audition, which has a spectral editor, SoX spectrograms let you quickly get an overall picture of the sound and of the content of each of the 6 channels of the 5.1 track.

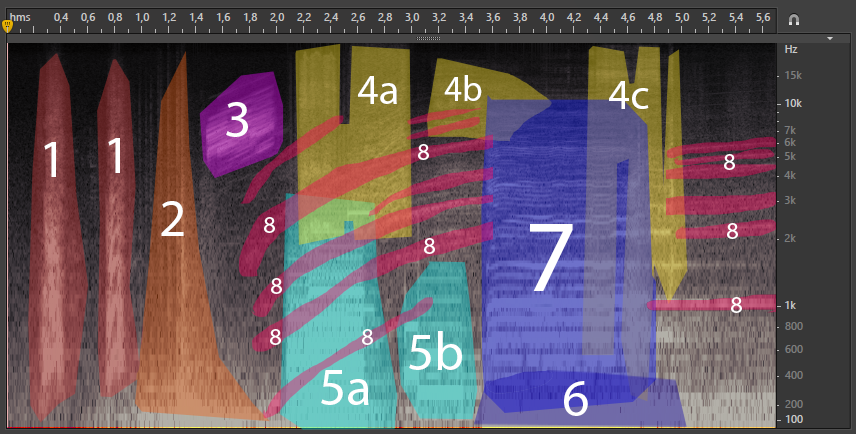

Spectrogram of the original 5.1 audio of the scene in Adobe Audition

Since the scene I chose is rather static, the main sounds sit in the center channel, which the spectrogram confirms. This greatly simplifies further work. Using ffmpeg, I exported the center channel and opened it in the audio editor.
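The export itself was done with ffmpeg. Purely to illustrate what "taking one channel" means, here is a minimal Python sketch that pulls the center channel out of interleaved 5.1 samples. It assumes the usual WAV channel order (front left, front right, center, LFE, two surrounds); the function name and the toy data are hypothetical.

```python
# Assumed channel order of an interleaved 5.1 stream (the usual WAV layout).
CHANNELS_5_1 = ["FL", "FR", "FC", "LFE", "BL", "BR"]

def extract_channel(interleaved, name, layout=CHANNELS_5_1):
    """Return the samples of one channel from an interleaved sample list."""
    index = layout.index(name)
    # Take every len(layout)-th sample, starting at the channel's position.
    return interleaved[index::len(layout)]

# Toy data: three frames; only the center channel carries a signal.
frames = [
    0, 0, 10, 0, 0, 0,
    0, 0, 20, 0, 0, 0,
    0, 0, 30, 0, 0, 0,
]
center = extract_channel(frames, "FC")
print(center)  # [10, 20, 30]
```

If a file uses a different channel order, only the layout list needs to change.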

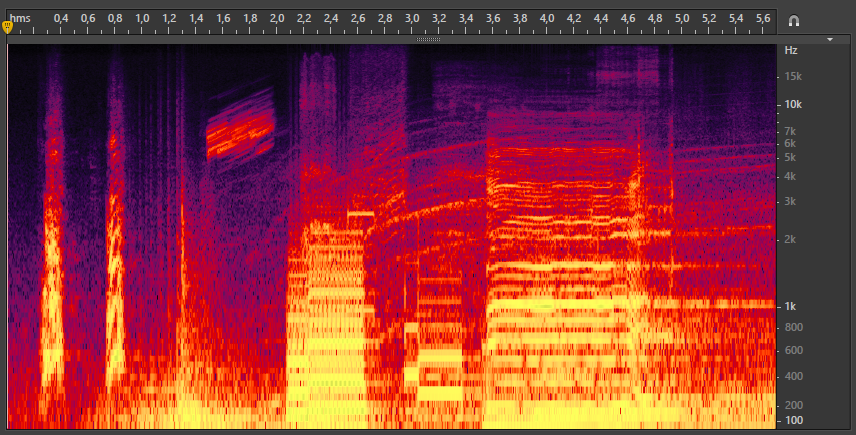

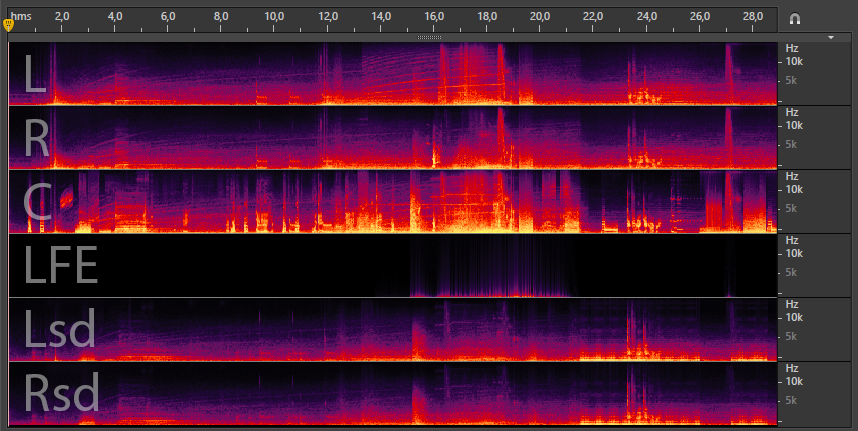

Waveform and spectral views of the center channel

As a rule, the waveform view helps in analyzing simple sounds; the main information it gives is when sounds begin, how loud they are and how long they last. For complex scenes with background noise and layered elements, it is better to switch straight to the spectral view.

Briefly, the difference between the two views: the waveform view represents sound in two dimensions, where X is time and Y is the amplitude of the waveform in dB. The spectral view adds a third dimension: X is time, Y is frequency in Hz, and Z is the intensity (loudness) of the signal, shown as color: the louder the sound, the brighter the color.

Let's take apart the first 6 seconds of the scene. This is what their spectrum looks like:
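To make the three axes concrete, here is a minimal sketch of how a spectrogram can be computed: the signal is cut into short frames (X), each frame is turned into frequency bins (Y), and the magnitude of each bin (Z) is what an editor would draw as color. It uses a slow, naive DFT from the standard library only; the frame size, hop and test tone are assumptions chosen for the example, not settings used in the article.

```python
import cmath
import math

SAMPLE_RATE = 8000
FRAME_SIZE = 64

def spectrogram(samples, frame_size=FRAME_SIZE, hop=32):
    """Return a list of frames; each frame is a list of bin magnitudes."""
    frames = []
    for start in range(0, len(samples) - frame_size + 1, hop):
        frame = samples[start:start + frame_size]
        # A Hann window reduces leakage between neighbouring bins.
        windowed = [
            s * (0.5 - 0.5 * math.cos(2 * math.pi * n / (frame_size - 1)))
            for n, s in enumerate(frame)
        ]
        bins = []
        for k in range(frame_size // 2 + 1):
            acc = sum(
                w * cmath.exp(-2j * math.pi * k * n / frame_size)
                for n, w in enumerate(windowed)
            )
            bins.append(abs(acc))
        frames.append(bins)
    return frames

# A 1000 Hz test tone: its energy should land in a single bright row.
tone = [math.sin(2 * math.pi * 1000 * n / SAMPLE_RATE) for n in range(256)]
spec = spectrogram(tone)
loudest_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
loudest_hz = loudest_bin * SAMPLE_RATE / FRAME_SIZE
print(loudest_hz)  # 1000.0
```

Real tools use an FFT and map magnitudes to dB before coloring, but the layout of the data is the same.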

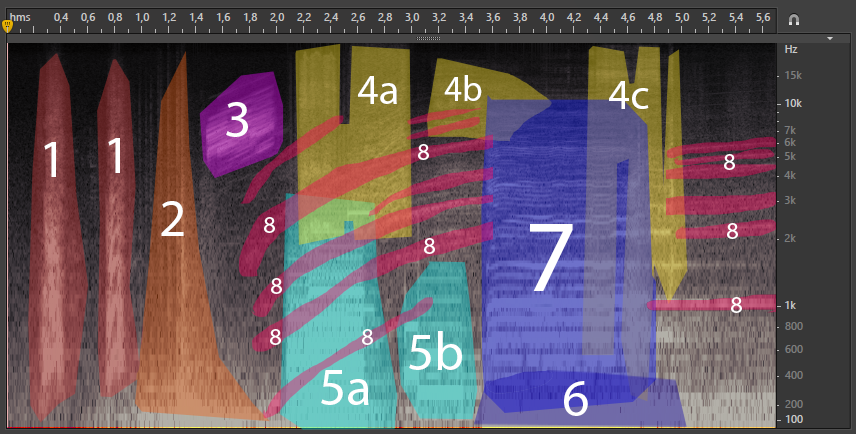

After carefully listening to the scene and studying the spectrogram, the following sound elements can be distinguished:

We break them into logical groups:

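We get the following list:

1. Dog's bark
2. Drumroll
3. High-frequency drone activation sound
4. Servo sound
   a. High-frequency noise (addition to 5a)
   b. High-frequency noise (addition to 5b)
   c. Sound of the servo mechanism locking (addition to 7)
5. Signal
   a. "Question"
   b. "Answer"
6. Low-frequency signal (addition to 7)
7. Siren
8. Engine sound
9. Background broadband noise (environmental sounds, wind, sand, etc.)

This is our sound map. Remember that the map != the territory. In this case it is my subjective view of the scene's sound content; another person may end up with a different map and different groups. There is nothing wrong with that. What matters is that our further actions and the final result depend on how plausibly and in how much detail the map is drawn.

So, the map. The dog's bark and the drumroll have nothing to do with the drone, so we move straight to item 3.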

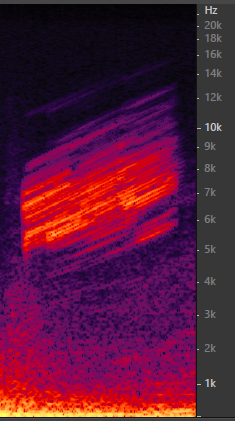

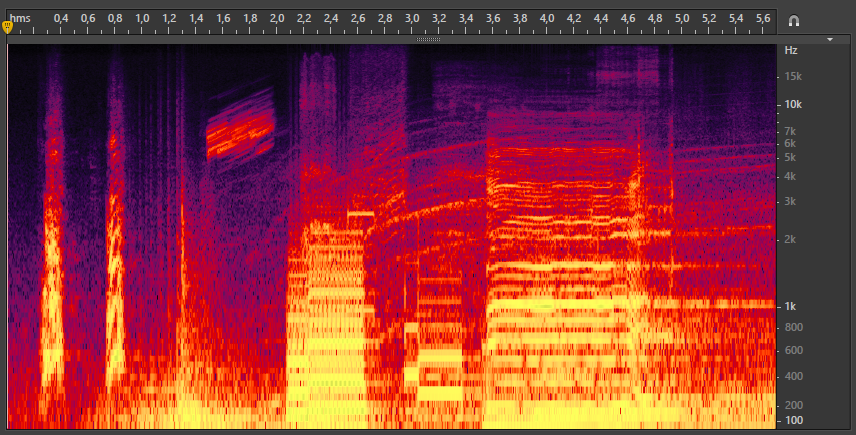

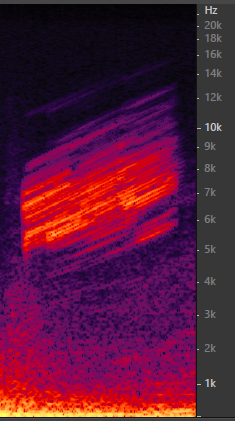

Spectrum of the drone activation sound

The spectrogram shows that this sound starts in the 5,000–10,000 Hz range and then shifts linearly to the 6,000–12,000 Hz range. This means we can synthesize a static sound with the same spectrum as the start of the activation sound and then use automation to smoothly raise the pitch to its state at the end of the sound. The sound itself is tonal: among the noise, the spectrum shows distinct bands of harmonics. We can assume that it started out as a harmonically rich signal (for example, a sawtooth wave) that was processed with a band-pass filter (with a passband of 5,000–10,000 Hz). Let's try to reproduce this process.
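The idea can be sketched in a few lines of Python before touching a synthesizer. This is only an illustration under stated assumptions: the 250 Hz fundamental is hypothetical, and instead of a real band-pass filter the sketch simply sums the sawtooth partials (amplitude 1/k) that fall inside 5,000–10,000 Hz. Raising the pitch by a ratio of 1.2 then moves the whole band to 6,000–12,000 Hz, as on the spectrogram.

```python
import math

SAMPLE_RATE = 48000
BASE_HZ = 250.0            # hypothetical fundamental, not measured from the film
BAND = (5000.0, 10000.0)   # passband at the start of the sound (from the text)
GLIDE = 1.2                # 5-10 kHz -> 6-12 kHz is a pitch ratio of 1.2

def activation_sound(duration):
    """Band-limited sawtooth whose pitch rises linearly by the GLIDE ratio."""
    harmonics = [k for k in range(1, 200) if BAND[0] <= k * BASE_HZ <= BAND[1]]
    total = round(duration * SAMPLE_RATE)
    phase = 0.0
    out = []
    for n in range(total):
        ratio = 1.0 + (GLIDE - 1.0) * n / (total - 1)  # linear pitch automation
        phase += 2 * math.pi * BASE_HZ * ratio / SAMPLE_RATE
        # Keep only the sawtooth partials that lie inside the band.
        out.append(sum(math.sin(k * phase) / k for k in harmonics))
    return harmonics, out

harmonics, samples = activation_sound(0.05)
start_band = (harmonics[0] * BASE_HZ, harmonics[-1] * BASE_HZ)
end_band = (start_band[0] * GLIDE, start_band[1] * GLIDE)
```

Here `start_band` covers 5,000–10,000 Hz and `end_band` covers roughly 6,000–12,000 Hz; the noise component visible in the original spectrum is not modeled.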

Synthesis

The U-HE Zebra synthesizer is known among musicians and sound designers not only for its gloomy look but also for its very flexible modular architecture and a large number of unique effects, which make it possible to create sounds of almost any complexity. The well-known sound designer Howard Scarr used Zebra to create sounds for "Inception", "The Dark Knight", "The Dark Knight Rises" and many other films.

U-HE Zebra synthesizer. Preset for the drone activation sound

The preset logic in the screenshot above is simple: the OSC1 oscillator, which generates a sawtooth wave, is processed with the Wrap effect (to enrich the spectrum with additional harmonics and noise) and then with Bandworks (a band-pass filter that removes everything from the spectrum except the 5,000–10,000 Hz range). The pitch of OSC1 (Tune) is changed over time by the MSEG1 envelope. At the end of the chain, a low-pass filter (VCF1) cuts off the frequencies above 10,000 Hz that Bandworks could not handle and also slightly thickens the sound with resonance (Res) and saturation (Drive). The whole process of shaping the sound can be represented as a chain of modules:

OSC1 -> Wrap -> Bandworks >>> MSEG1 >>> VCF1 -> Res -> Drive >>> Envelope 1

The last module in the chain is the so-called ADSR envelope, which in our case controls how the overall volume changes over time.
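For readers who have not met the term, an ADSR envelope is just a gain curve with four stages: attack, decay, sustain and release. Below is a minimal piecewise-linear sketch; the parameter values are arbitrary examples, not the settings of the preset, and real synthesizers usually offer curved segments as well.

```python
def adsr(t, attack, decay, sustain, release, note_length):
    """Gain (0..1) at time t for a note held for note_length seconds.

    Assumes note_length >= attack + decay, so the sustain stage is reached.
    """
    if t < 0:
        return 0.0
    if t < attack:                      # attack: rise from 0 to 1
        return t / attack
    if t < attack + decay:              # decay: fall from 1 to the sustain level
        return 1.0 - (1.0 - sustain) * (t - attack) / decay
    if t < note_length:                 # sustain: hold while the note is held
        return sustain
    if t < note_length + release:       # release: fade from sustain to 0
        return sustain * (1.0 - (t - note_length) / release)
    return 0.0

# Example: 0.1 s attack, 0.2 s decay, sustain at 50%, 0.3 s release, 1 s note.
gains = [adsr(t / 10, 0.1, 0.2, 0.5, 0.3, 1.0) for t in range(15)]
```

Multiplying each output sample by the gain at its time position applies the envelope to the sound.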

As a result of this operation, we obtain:

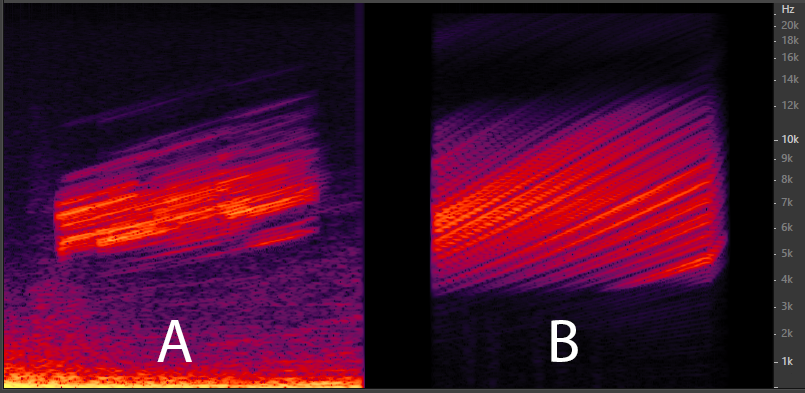

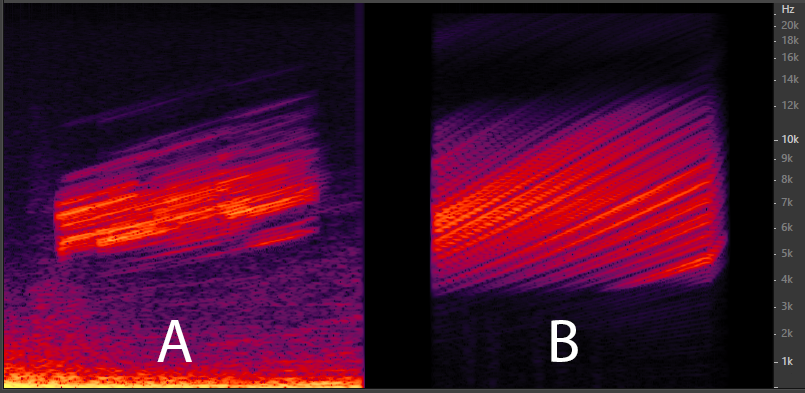

Comparison of the spectrum of the original (A) and synthesized (B) activation sounds

Download MP3 sample from Google Drive


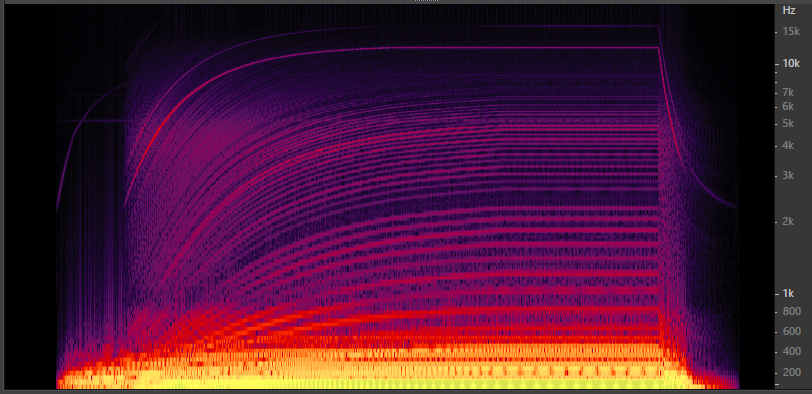

Mechanism synthesis

The synthesis of servomechanisms is a separate topic, and I will not cover it in detail in this article, since recorded samples were most likely used for these elements in the original scene. I will only say that the sound of any mechanism consists of three phases: switching on, running and switching off. The running sound is a short looped fragment that repeats until the switch-off phase begins. When the looped fragment repeats more than 20 times per second, the repetition (carrier) frequency moves into the range audible to humans. What we hear in this situation is called a drone. Drones include, for example, the sounds of running fans, car and machine engines, drills, electric razors and the buzzing of insects. Some drones are atonal (their pitch is difficult or impossible to determine).

In the case of the flying drone in our scene, we are dealing with a running engine at the moment of acceleration, that is, an atonal drone whose carrier frequency gradually rises. In the screenshot with the groups, this sound is marked with the number 8, and it is synthesized by the same principle as the previous element. In the spectrogram, we pick a spot where all the harmonics are clearly visible, note their frequencies at that moment and recreate them with one or more synthesizer oscillators. Then we automate the pitch change to simulate acceleration. Since the engine sound does not play a significant role in our scene, I did not try to reproduce it in every detail and quickly threw together a Zebra preset to demonstrate the idea itself:

Engine sound preset

Spectrogram of the synthesized engine sound

Download MP3 sample from Google Drive
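The 20-repetitions-per-second threshold from the paragraph above is easy to check with numbers. The following sketch loops a short fragment, computes its repetition (carrier) rate and lists the rates of a linearly accelerating engine. The fragment, its length and the frequency range are hypothetical example values.

```python
AUDIBLE_HZ = 20.0  # threshold mentioned in the text

def repetition_rate(loop_length_samples, sample_rate):
    """How many times per second a looped fragment repeats."""
    return sample_rate / loop_length_samples

def render_loop(fragment, repeats):
    """Repeat a short fragment back to back: the 'running' phase of a mechanism."""
    return fragment * repeats

def accelerating_rates(start_hz, end_hz, steps):
    """Carrier frequencies of an engine that speeds up linearly."""
    return [start_hz + (end_hz - start_hz) * i / (steps - 1) for i in range(steps)]

SAMPLE_RATE = 48000
click = [1.0] + [0.0] * 479           # hypothetical 480-sample fragment
drone = render_loop(click, 100)       # one second of audio
rate = repetition_rate(len(click), SAMPLE_RATE)
is_pitched = rate > AUDIBLE_HZ
print(rate, is_pitched)               # 100.0 True
print(accelerating_rates(50, 150, 5)) # [50.0, 75.0, 100.0, 125.0, 150.0]
```

A 480-sample loop at 48 kHz repeats 100 times per second, so it is heard as a 100 Hz drone rather than as separate clicks.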


Siren synthesis

Moving on. The siren stands out from the rest of the drone's sound palette above all because it has character. It is nothing like the other cold electronic chirps and hoots. This is a sound that clearly promises imminent trouble to whoever hears it; it hints that something seems to have gone wrong (and that it will end in disaster).

Spectrogram of the siren from the film

This is a harmonically rich sound whose harmonics show a slight vibrato of varying rate, which is typical of natural sounds. The siren resembles the cry of a person or an animal, and its timbre falls somewhere between the vowel sounds A and U, which supports the idea that it is a live sound. At first, I thought that the sound engineers who worked on the film had probably read Philip K. Dick and perhaps decided to use a bleating sheep as the source of this sound, as a kind of Easter egg. But after searching for sheep on freesound.org, I concluded that their voices are too high and that I needed a bigger animal with similar vocal characteristics. The very first sample of a mooing cow turned out to be exactly what I was looking for.

Applying time stretching and distortion to this moo and slightly correcting the pitch, we get the following:

Download MP3 sample from Google Drive
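The processing above was done with ready-made effects, and their exact settings are not given. As a rough stand-in, the sketch below shows two much cruder operations: a tape-style speed change (which lowers pitch and lengthens the sound at the same time, unlike a true time stretch) and tanh soft clipping as a simple distortion. Function names and values are hypothetical.

```python
import math

def change_speed(samples, factor):
    """Resample by linear interpolation; factor < 1 slows down and lowers the pitch."""
    out_len = int((len(samples) - 1) / factor) + 1
    out = []
    for i in range(out_len):
        pos = i * factor
        left = int(pos)
        right = min(left + 1, len(samples) - 1)
        frac = pos - left
        out.append(samples[left] * (1 - frac) + samples[right] * frac)
    return out

def distort(samples, drive):
    """Soft clipping: tanh squashes the peaks and adds harmonics."""
    return [math.tanh(drive * s) for s in samples]

ramp = [0, 1, 2, 3, 4]
slowed = change_speed(ramp, 0.5)   # twice as long, one octave lower
print(slowed)  # [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0]
clipped = distort([-2.0, 0.0, 2.0], drive=3.0)
```

A proper time stretch changes duration without changing pitch, which is why the article lists stretching and pitch correction as separate steps.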


Add a low frequency signal (6) and reverb:

Download MP3 sample from Google Drive


Let's compare the siren spectrum from the film and the final version of our cow:

Signal synthesis

All three elements 5a, 5b and 6 are intervals played by one or three similar instruments whose timbre shows signs characteristic of FM synthesis. The sound also resembles DTMF tones. All of this can be determined by ear, without analyzing spectrograms, as can the intervals themselves: for 5a it is a tritone up, for 5b a fifth down, and for 6 a fourth up. After that, a little experimenting with the FM oscillator in Zebra quickly produces a similar sound.

Download MP3 sample from Google Drive
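The intervals named above translate directly into frequency ratios. Assuming equal temperament, a tritone is 6 semitones, a fifth is 7 and a fourth is 5, and each semitone multiplies the frequency by the twelfth root of two. The 440 Hz starting pitch below is a hypothetical example; the actual pitches in the scene are not stated in the article.

```python
def transpose(freq_hz, semitones):
    """Shift a frequency by a number of equal-tempered semitones."""
    return freq_hz * 2 ** (semitones / 12)

# Intervals identified by ear in the text, in semitones.
INTERVALS = {
    "5a": 6,    # tritone up
    "5b": -7,   # fifth down
    "6": 5,     # fourth up
}

ROOT_HZ = 440.0  # hypothetical starting pitch
targets = {name: transpose(ROOT_HZ, steps) for name, steps in INTERVALS.items()}
for name, hz in targets.items():
    print(name, round(hz, 1))
```

From 440 Hz this gives roughly 622.3 Hz for the tritone up, 293.7 Hz for the fifth down and 587.3 Hz for the fourth up.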


The OSC1 oscillator generates a sine wave that sets the pitch. The OSC2 and FMO1 oscillators are dissonant with each other and with OSC1 (that is, their frequencies are not multiples of the OSC1 frequency), which produces this tense sound, something like a siren or a police squawk.
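Why non-multiple frequencies sound tense can be shown with a generic two-operator FM sketch. This is textbook FM, not a model of Zebra's FMO module: a sine carrier is phase-modulated by a second sine, and the resulting sidebands sit at the carrier frequency plus or minus whole multiples of the modulator frequency. The carrier pitch, the ratio 1.41 and the modulation index are assumptions chosen for the example.

```python
import math

def fm_tone(carrier_hz, ratio, index, duration, sample_rate=48000):
    """Two-operator FM: a sine carrier whose phase is modulated by a second sine."""
    mod_hz = carrier_hz * ratio
    total = round(duration * sample_rate)
    return [
        math.sin(2 * math.pi * carrier_hz * n / sample_rate
                 + index * math.sin(2 * math.pi * mod_hz * n / sample_rate))
        for n in range(total)
    ]

def sidebands(carrier_hz, ratio, orders=3):
    """Frequencies carrier + k * modulator for k = -orders..orders, folded to positive."""
    return [abs(carrier_hz + k * carrier_hz * ratio) for k in range(-orders, orders + 1)]

CARRIER_HZ = 440.0                         # hypothetical pitch
harmonic = sidebands(CARRIER_HZ, 2.0)      # every sideband is a multiple of 440 Hz
inharmonic = sidebands(CARRIER_HZ, 1.41)   # sidebands fall between the harmonics
tone = fm_tone(CARRIER_HZ, 1.41, index=3.0, duration=0.01)
```

With an integer ratio the spectrum stays harmonic and the tone sounds clean; with a ratio such as 1.41 the partials no longer line up with the harmonic series, which gives the rough, alarm-like quality.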

Background

Background noise is probably the component of the sound picture that most people underrate the most. The same, however, is true of the background in every other area of life. In its day, the emergence of landscape as a genre was a revolution in painting: the average viewer's mental template broke at the sight of paintings with no main character. Today everyone knows what the Mona Lisa looks like, but not everyone can recall what is depicted behind her, whether she is sitting at an open window or perhaps standing in an open field. And yet, if you remove the background completely, it is immediately noticeable. The same goes for the sound background. Without it, the scene loses realism, atmosphere and meaning; sound events happen in... "nowhere". So, to bring the sound of our scene to life, I picked a background on freesound.org that suited it emotionally.

The final version with all the whistles and beeps:

Download MP3 sample from Google Drive


Finally

When I conceived this article, I planned to describe how every sound in the final version was made. But judging by the amount of filler I have poured in here, only a few readers have probably made it even to this paragraph. Hello! Thank you for reading to the end.

Links

FM synthesis (Wikipedia)

DTMF (Wikipedia)

ADSR envelope (Wikipedia)

Cow sample

Environmental sample

Samples of servomechanisms from the final version [1] , [2]

Howard Scarr

U-HE Zebra








Source: https://habr.com/ru/post/194670/
