Centrifuge is gaining momentum

Hello!

A couple of months ago I published an article on Habr describing the open-source project Centrifuge. As a reminder, it is a server for sending messages to connected clients (mainly web browsers) in real time. It is written in Python.

Since then I have continued to work on the project in my free time, and I am now ready to share the thoughts and changes that have accumulated.


Initially, Centrifuge was a rather idiosyncratic project. I was not much concerned with reproducing the functionality of existing analogs and wrote the code the way that seemed right to me. As a result, messages were delivered to clients and everything worked, but was it all convenient to use? No!

At the end of June I came across a great article by Serge Koval, Python and real-time Web. Surprisingly, at that time I did not know that Faye existed. The article introduced me to this wonderful project and also made me realize that Centrifuge, in its state at the time, did not greatly simplify life when developing real-time web applications.

Since then I have reworked Centrifuge with an eye to usability and with an eye on pusher.com, pubnub.com and Faye.

The question of why I needed to write code from scratch when more mature and advanced analogs already exist is inevitable. Here are some reasons:

- It is interesting. The project currently uses Tornado, ZeroMQ, Redis, SockJS and Bootstrap 3. These are excellent tools, and working with them is pure joy.

- Pusher.com and Pubnub.com are cloud services, and it is not always possible, sensible or desirable to rely on a third party. You also cannot make changes to the server side.

- I still have not found any Python analogs (maybe you know of one?). The Faye backend is Ruby or NodeJS, and to implement authorization of channel subscriptions you need to write extensions in these languages. I wanted to create a solution that is more independent of the web application's backend language and provides the necessary functionality out of the box.

- Some features are absent from the analogs: namespaces, which define the behavior of the channels that belong to them, and a web interface for managing projects and their settings and for monitoring messages in channels in real time.

Now I’ll tell you about the changes that have occurred since the previous Centrifuge article was written.

The structure (projects, namespaces and their settings) is now stored by default in SQLite, support for which ships with the Python standard library (the sqlite3 module). Therefore, when running Centrifuge processes on one machine, it is no longer necessary to install PostgreSQL or MongoDB, as it was before. Since Centrifuge is designed for small and medium-sized projects, I think this is an important and necessary change: a single machine should be more than enough for them.

You can go a little further and run Centrifuge with the structure described in the configuration file. In that case you lose the ability to change and save the structure dynamically from the web interface, but there are no dependencies on external storage at all. This option also helps tremendously during development.

Presence and history are now supported: you can find out who is currently connected to a channel and get the latest messages sent to it. Redis is used to store this data. If Redis is not configured, the data simply will not be available to clients; nothing will break.

A question arises here. Centrifuge currently uses ZeroMQ PUB/SUB sockets for communication between its processes. Now that Redis has entered the game as storage for information about connected clients and message history, perhaps its PUB/SUB capabilities should be used for communication between Centrifuge processes instead of ZeroMQ? In the only comparative benchmark I have seen, ZeroMQ outperforms Redis.

Therefore, for the moment I have left everything as it is. However, this is a debatable and important point.

You can also now receive messages when a client joins or leaves a channel. A pleasant little thing.

Finally, perhaps the most important thing: a javascript client has appeared, a wrapper over the Centrifuge protocol. It is based on EventEmitter, written by Oliver Caldwell. Now it is very easy to interact with Centrifuge from the browser. Like this:

    var centrifuge = new Centrifuge({
        // authentication settings
    });

    centrifuge.on('connect', function() {
        // the connection to Centrifuge is established

        var subscription = centrifuge.subscribe('python:django', function(message) {
            // called when a new message is received from the channel
        });

        subscription.on('ready', function() {
            subscription.presence(function(message) {
                // information about clients connected to the channel
            });

            subscription.history(function(message) {
                // history of the latest channel messages
            });

            subscription.on('join', function(message) {
                // called when a new client connects to the channel
            });

            subscription.on('leave', function(message) {
                // called when a client disconnects from the channel
            });
        });
    });

    centrifuge.on('disconnect', function() {
        // the connection to Centrifuge is lost
    });

    centrifuge.connect();

The authentication settings are left out of this example (you can read about them in the documentation). Also note the channel name: it starts with the name of a namespace, which must be created in the administrative interface before connecting, in this case python. The name of the channel itself follows, in this case django. The namespace defines the settings of all channels belonging to it. In the project settings you can choose a default namespace; the namespace name can then be omitted in the javascript code. That is, if the python namespace is the default for the project, you can write:

    centrifuge.on('connect', function() {
        var subscription = centrifuge.subscribe('django', function(message) {
            console.log(message);
        });
    });
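The channel naming rule described here can be illustrated with a small Python sketch (Python being the language Centrifuge is written in). It is only an illustration under assumptions: the function name split_channel and its default_namespace parameter are hypothetical, and the article does not show how the server actually parses channel names.

```python
def split_channel(full_name, default_namespace=None):
    """Split a channel name of the form 'namespace:channel'.

    Hypothetical helper: falls back to the project's default namespace
    when the name contains no explicit namespace part.
    """
    if ":" in full_name:
        # Split only on the first colon so the channel part stays intact.
        namespace, channel = full_name.split(":", 1)
        return namespace, channel
    if default_namespace is None:
        raise ValueError("no namespace given and no default namespace set")
    return default_namespace, full_name
```

With python as the default namespace, both 'python:django' and plain 'django' resolve to the same namespace and channel pair.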

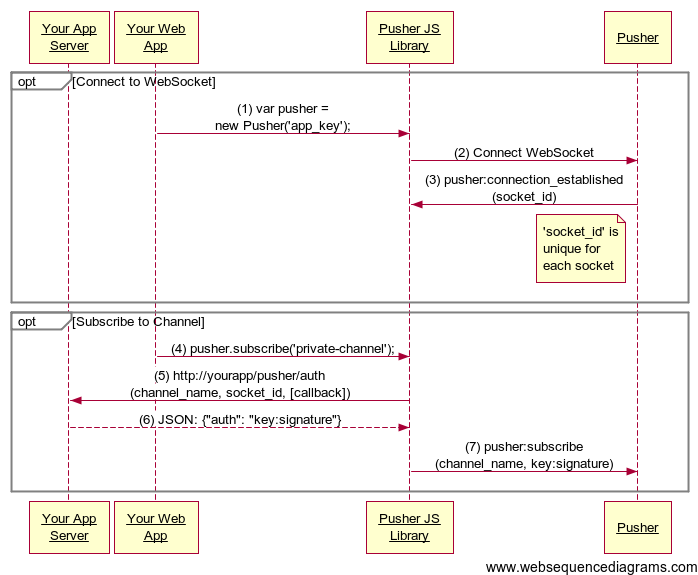

Authorization in applications of this kind is perhaps the most difficult part. As I already mentioned, in Faye you need to write extensions in NodeJS or Ruby to protect access to certain channels. For private channels, Pusher.com offers the following scheme:

When you try to subscribe to a private channel, an AJAX request with the channel name is sent to your application's backend. If access is allowed, you must return a signed response, which is then sent along with the channel name directly to Pusher. The advantage is that when the AJAX request arrives, your application in most cases already has the current user object (for example, request.user in Django).

Centrifuge takes a slightly different approach. The current user ID is sent once, at connection time: you specify it when you configure the javascript client, along with the project ID and a token. The token is an HMAC generated from the project's secret key (which only your application’s backend should know), the project ID and the user ID. It is needed to verify that the transmitted project ID and user ID are correct. Later, when a client subscribes to a private channel, Centrifuge sends a POST request to your application with the user ID as a string, the namespace name and the channel name. Therefore, the first thing your authorization handler will need to do is fetch the user object by ID.
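As a rough illustration of the token idea, here is a minimal Python sketch of how an application backend might generate and check such an HMAC. The function names, the SHA-256 digest and the order in which the IDs are fed into the HMAC are assumptions made for the example; the exact scheme Centrifuge expects is described in its documentation, not here.

```python
import hashlib
import hmac


def generate_token(secret_key, project_id, user_id):
    """Build a hex HMAC over the project ID and user ID.

    Assumption: SHA-256 and this update order are illustrative only.
    """
    mac = hmac.new(secret_key.encode("utf-8"), digestmod=hashlib.sha256)
    mac.update(project_id.encode("utf-8"))
    mac.update(user_id.encode("utf-8"))
    return mac.hexdigest()


def check_token(secret_key, project_id, user_id, token):
    """Recompute the token and compare it in constant time."""
    expected = generate_token(secret_key, project_id, user_id)
    return hmac.compare_digest(expected, token)
```

Because only the backend knows the secret key, a client cannot forge a valid token for a different user ID or project ID.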

Another important point regarding authorization: at present, to subscribe to several channels you have to call the subscribe function several times on the client side. If the channels are private, each such subscription results in a POST request to your application. This is unoptimized behavior that I would like to improve. But even pusher.com, while acknowledging that such cases, although rare, are among its clients' needs, has not yet fully solved this problem. I am still looking for the right solution here.

I would also like to mention another way to protect private data, one that remains perfectly valid. For example, to give each user of the application a separate private channel, you can generate hard-to-guess channel names based on some secret key and the user ID. In this case it is quite possible to do without additional authorization, at least as long as your clients have no incentive to share the names of their private channels :)
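One possible way to derive such hard-to-guess channel names is sketched below in Python. The helper name private_channel_name, the "user" prefix and the choice of HMAC-SHA256 are all assumptions for illustration; any keyed hash the backend can recompute would serve the same purpose.

```python
import hashlib
import hmac


def private_channel_name(secret_key, user_id, prefix="user"):
    """Derive a per-user channel name that is hard to guess without the key.

    Hypothetical helper: the name is stable for a given user, so the
    backend can recompute it whenever it needs to publish to that user.
    """
    digest = hmac.new(
        secret_key.encode("utf-8"),
        str(user_id).encode("utf-8"),
        hashlib.sha256,
    ).hexdigest()
    return "{0}_{1}".format(prefix, digest)
```

The backend passes the resulting name to the page of the corresponding user only, and the javascript client subscribes to it like to any other channel.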

It is possible to add a custom asynchronous function (wrapped in Tornado's @coroutine decorator) that is called before a message is published to a channel. Inside this function you can do anything with the message, including returning None and thereby cancelling publication. There is a similar option to add a handler called after publication. Perhaps these are of little use to most people: this is fairly low-level intervention and requires knowledge of Python and Tornado.

Installing Centrifuge in the simplest case comes down to a single pip install centrifuge command inside a virtualenv. However, ZeroMQ (libzmq3) and the dev package for PostgreSQL must be installed on the machine (the PostgreSQL server itself is optional). The problems I have found that may arise when installing from PyPI, and how to solve them, are described in the documentation. A single process is started with the centrifuge command. However, a configuration file is required for running in production, as it contains important security settings. You also cannot do without additional command-line options if you want to run multiple processes.

In this section of the documentation I tried to explain in as much detail as possible how Centrifuge works, what startup options there are, what address to specify when connecting from a browser, and much more. In English, admittedly.

Load testing has not been conducted yet. I hope to do some benchmarks in the near future. It would be interesting to compare with Faye and to run on PyPy. And, of course, it is necessary to keep working on resilience to all sorts of errors, improve the Python code and the javascript client, and so on. Join in!

Thanks for your attention!

Source: https://habr.com/ru/post/194640/
