Ten sensors and one grandmother in the service of progress

Good afternoon, Habr!

Today I will tell you about the ICDAR and CBDAR conferences held in late August in Washington, D.C. More precisely, not about the conferences as a whole: the science there is quite specialized, and it is no accident that, apart from ABBYY staff, you could count the Russian-speaking participants on the fingers of one hand. Here we will talk about the work of a team led by an enthusiastic scientist named Kai Kunze.

Kai's area of interest does not quite match the topics of the conferences, but it is closely intertwined with them. Kai described his work in considerable detail in his plenary keynote talk at CBDAR. In short, Kai is teaching computers to understand what the user is doing. Since most of the conference participants (me included) know little about hardware, this side of the topic was not really covered in the talks, so you will not find hardware stories under the cut.

Below I will present this scientist's vision (as far as I managed to understand it) without repeating too often that the judgments here are Kai Kunze's, not mine.


At the current stage of technology development, the user's attention is the bottleneck. Devices that do not demand special attention are more convenient to use. Thus, computers must become proactive. What should this look like? Let's work through a small example.

Suppose you are repairing a device so complex that you often have to consult the manual. How convenient it would be if someone helpfully opened the right page of the manual for you, depending on what you are doing right now. This is easy if that someone understands what you are doing and what difficulties you are facing. To achieve such understanding, an activity recognition system is being built.

The system receives input from various sensors worn on a person: gyroscopes, microphones, compasses, ultrasonic and inertial sensors, etc. The authors do not disclose how the system is trained, but I don't think there is anything groundbreaking here: most likely it uses hidden Markov models, which have become the de facto standard for recognizing continuous processes. The system is already well trained and recognizes different types of activity (during the talk, Kai honestly admitted that the system is currently tuned to a specific user, since the signals from different people doing the same work vary greatly). Each kind of activity needs its own set of sensors. But there are a number of problems.
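Since the HMM part is only my guess, the following is not Kai's system. It is a minimal sketch of Viterbi decoding for an HMM, assuming a toy model whose hidden states are three made-up activities and whose observations are coarse, already-discretized sensor readings; every state name and probability here is hypothetical.

```python
import math

# Hypothetical toy model: hidden states are activities, observations are
# coarse motion classes from a wearable inertial sensor. All numbers are invented.
STATES = ["idle", "screwing", "reading_manual"]
START = {"idle": 0.6, "screwing": 0.2, "reading_manual": 0.2}
TRANS = {
    "idle": {"idle": 0.7, "screwing": 0.2, "reading_manual": 0.1},
    "screwing": {"idle": 0.1, "screwing": 0.8, "reading_manual": 0.1},
    "reading_manual": {"idle": 0.2, "screwing": 0.1, "reading_manual": 0.7},
}
EMIT = {
    "idle": {"still": 0.7, "wrist_rotation": 0.1, "head_tilt": 0.2},
    "screwing": {"still": 0.1, "wrist_rotation": 0.8, "head_tilt": 0.1},
    "reading_manual": {"still": 0.3, "wrist_rotation": 0.1, "head_tilt": 0.6},
}


def viterbi(observations):
    """Return the most likely activity sequence for a list of observations."""
    # best[s] = (log-probability of the best path ending in state s, that path)
    first = observations[0]
    best = {s: (math.log(START[s]) + math.log(EMIT[s][first]), [s]) for s in STATES}
    for obs in observations[1:]:
        new_best = {}
        for s in STATES:
            # pick the predecessor state that maximizes the score of entering s
            score, path = max(
                (best[p][0] + math.log(TRANS[p][s]), best[p][1]) for p in STATES
            )
            new_best[s] = (score + math.log(EMIT[s][obs]), path + [s])
        best = new_best
    # the highest-scoring final state carries the decoded path
    return max(best.values())[1]


print(viterbi(["still", "wrist_rotation", "wrist_rotation", "head_tilt", "head_tilt"]))
```

Working in log-probabilities avoids numeric underflow on long sensor streams. A real system would learn the transition and emission tables from training data, which is consistent with Kai's remark that the model is currently tuned to one particular user.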

Can you imagine your grandmother strapping about a dozen sensors onto herself every morning and swapping them whenever she changes activity? Unless, of course, you are Kai Kunze, whose grandmother actually helped with the research. Therefore, for real-world use, the sensor data must be calibrated automatically: the system has to determine dynamically on which part of the body a sensor is located, and only then interpret the data it receives. In fact, people already carry sensors today: the same smartphone has both a gyroscope and a microphone, and according to Kai they are already sensitive enough for his purposes. With the spread of Google Glass, the task will become quite realistic.

The idea is to build up datasets from the sensors people already carry. Kai predicts that recognition accuracy will exceed 95% sometime between 2015 and 2020, and that is for ordinary people with smartphones, not for students hung with sensors.

Now back to the conference topic. Kai and his colleagues presented two developments. The first is an attempt to use an electroencephalogram (EEG) to determine what a person is reading: a scientific article, the news, or manga (Kai works at Osaka University). Moreover, in this experiment the system was trained on one subject and tested on another. So far the result is negative: it is not possible to tell exactly what a person is reading, and the system's answers are essentially random. But it turned out to distinguish reading from watching videos and looking at pictures very well, although only a few experiments were run (three per task), so it is too early to speak seriously of a positive result.

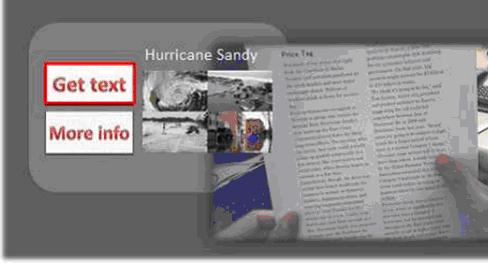

The second development the group presented at the conference is an eye-tracking system that analyzes what the user is reading in a document. Two talks were devoted to this system. The idea is simple: a small camera records the direction of gaze while the user studies a document, whether on paper or on a computer screen. The glasses for this look like this:

Before each experiment, the system is calibrated by asking the user to look in turn at the center of the document and at its four corners. To cope with perspective distortion, for now the document is retrieved from a database where it is stored in undistorted form.
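The talks did not spell out how the five calibration points are used. One plausible approach, sketched here purely as an assumption, is to fit an affine map from raw gaze coordinates to document coordinates by least squares. The function names are hypothetical, and a real tracker may well use a homography or another model instead.

```python
def _det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def _solve3(a, rhs):
    """Solve the 3x3 linear system a @ x = rhs with Cramer's rule."""
    d = _det3(a)
    if abs(d) < 1e-12:
        raise ValueError("calibration points are degenerate (e.g. collinear)")
    result = []
    for col in range(3):
        m = [row[:] for row in a]      # copy, so `a` is not modified
        for r in range(3):
            m[r][col] = rhs[r]         # replace one column with the right-hand side
        result.append(_det3(m) / d)
    return result


def fit_affine(gaze_points, doc_points):
    """Hypothetical calibration: least-squares affine map gaze -> document.

    Each calibration point gives a row [gx, gy, 1]; the normal equations
    (A^T A) p = A^T b are solved separately for document x and document y.
    """
    rows = [(gx, gy, 1.0) for gx, gy in gaze_points]
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    coeffs = []
    for k in range(2):  # k = 0: document x, k = 1: document y
        atb = [sum(r[i] * p[k] for r, p in zip(rows, doc_points)) for i in range(3)]
        coeffs.append(_solve3(ata, atb))
    return coeffs


def apply_affine(coeffs, gx, gy):
    """Map one raw gaze sample into document coordinates."""
    (a, b, c), (d, e, f) = coeffs
    return a * gx + b * gy + c, d * gx + e * gy + f


# Calibration targets: the four corners and the center of a 200 x 300 page.
doc = [(0, 0), (200, 0), (0, 300), (200, 300), (100, 150)]
gaze = [(10, 20), (110, 20), (10, 170), (110, 170), (60, 95)]  # made-up readings
coeffs = fit_affine(gaze, doc)
print(apply_affine(coeffs, 35, 57.5))
```

Five points for three unknowns per coordinate make each fit overdetermined, so a slightly noisy fixation gets averaged out instead of being copied exactly into the map.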

The database was, frankly, not very large, but documents were identified against it quite reliably. The system made many queries (each took about 40 milliseconds) and almost always correctly recognized which document the user was reading.

What could this be used for? Many different things. For example, you can collect statistics along the lines of “only 10% of users read this far.” How to do this was covered in the talks. Let's just say that the idea of checking whether users actually read the user agreement was not voiced :) The speaker showed a log of his own reading, in which you could see him glance at the next page after running into an unfamiliar abbreviation. As an extension of this system, in addition to the gaze-tracking camera, the subject wore a special see-through head-mounted display (HMD) that could be controlled by gaze (a button counted as pressed if the user looked at it for more than two seconds). From the user's side, it looked like this:
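The gaze-controlled buttons on the HMD rely on a dwell threshold: a button counts as pressed once the gaze has stayed on it for more than two seconds. Here is a minimal sketch of that rule, assuming timestamped gaze samples already mapped to display coordinates. `Button`, `DwellClicker` and the rectangular layout are hypothetical; only the two-second threshold comes from the talk.

```python
from dataclasses import dataclass

DWELL_SECONDS = 2.0  # from the talk: "more than two seconds"


@dataclass
class Button:
    """Hypothetical rectangular button in display coordinates."""
    name: str
    x0: float
    y0: float
    x1: float
    y1: float

    def contains(self, x, y):
        return self.x0 <= x <= self.x1 and self.y0 <= y <= self.y1


class DwellClicker:
    """Fires a click when the gaze stays inside one button for > dwell seconds."""

    def __init__(self, buttons, dwell=DWELL_SECONDS):
        self.buttons = buttons
        self.dwell = dwell
        self.current = None     # button the gaze is currently on (or None)
        self.enter_time = None  # time the gaze entered that button
        self.fired = False      # True once this dwell has already produced a click

    def update(self, t, x, y):
        """Feed one gaze sample (t in seconds); return the clicked button's name or None."""
        hit = next((b for b in self.buttons if b.contains(x, y)), None)
        if hit is not self.current:
            # gaze moved to another button or off all buttons: restart the timer
            self.current, self.enter_time, self.fired = hit, t, False
            return None
        if hit is not None and not self.fired and t - self.enter_time > self.dwell:
            self.fired = True
            return hit.name
        return None


clicker = DwellClicker([Button("next_page", 0, 0, 10, 10)])
for i in range(7):  # samples every 0.5 s at the same point inside the button
    t = i * 0.5
    clicked = clicker.update(t, 5, 5)
    if clicked:
        print(f"{clicked} pressed at t={t}")
```

Resetting the timer whenever the gaze leaves the button, and firing only once per dwell, keeps a long stare from producing a stream of repeated clicks.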

The talk described how this device was tested. The experiment went like this: the user was given a text to read (from the database) that contained the line “now look at the monitor”. The paper reported about 100% recall (whenever the user looked at the screen, the system noticed it) and 44% precision (in more than half of the cases when the system decided that the user was looking at the screen, it was wrong).
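To make the two figures concrete: recall is the share of real glances at the screen that the system caught, and precision is the share of the system's detections that were real glances. The counts below are hypothetical, chosen only so that the result matches the reported 100% and 44%.

```python
def precision_recall(tp, fp, fn):
    """Precision = TP / (TP + FP); recall = TP / (TP + FN)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical counts: all 11 real glances were caught (no misses),
# but the system also raised 14 false alarms.
p, r = precision_recall(tp=11, fp=14, fn=0)
print(f"precision={p:.2f} recall={r:.2f}")  # precision=0.44 recall=1.00
```

With these counts, 14 of 25 detections (56%) are false alarms, which is the “more than half” of mistaken detections mentioned above.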

Clearly, for now this is only a research project, and it is hard to say what will “grow” out of it. But Kai Kunze is exactly the person who could move the mouse pointer with his gaze and click the plus sign to rate this review.

The slides of the plenary keynote can be viewed here.

Dmitry Deryagin

Technology Development Department

Source: https://habr.com/ru/post/193412/
