Artificial Intelligence and Why My Computer Doesn't Understand Me

Hector Levesque claims his computer is dumb. And yours is too. Siri and Google voice search can understand canned sentences such as “What films are showing nearby at 7 o'clock?” But what about the question “Can an alligator run the 100-metre hurdles?” Nobody has asked such a question before, yet any adult can work out the answer (No. Alligators cannot hurdle). If you type this question into Google, though, you get piles of information about the Florida Gators track and field team. Other search engines, such as Wolfram Alpha, cannot answer the question either. Watson, the computer system that won the quiz show “Jeopardy!”, is unlikely to do any better.

In a striking paper recently presented at the International Joint Conference on Artificial Intelligence, Levesque, a University of Toronto scientist who studies these questions, took just about everyone involved in AI development to task. He claimed that his colleagues had forgotten about the word “intelligence” in the term “artificial intelligence”.

Levesque began by criticizing the famous "Turing Test", in which a person, through a series of questions and answers, tries to distinguish a machine from a human. It is commonly held that if a computer can pass the Turing test, we can confidently conclude that the machine is intelligent. But Levesque argues that the Turing test is practically useless, because it is too easy to game. Every year several bots take this test for real in the Loebner Prize competition. But the winners cannot be called truly intelligent; they resort to all sorts of tricks and essentially engage in deception. If a person asks the bot “What is your height?”, the system has to make something up in order to pass the Turing test. In practice, the winning bots rely on bluster and misdirection far more than on anything resembling intelligence. One program pretended to be paranoid; others did well by fending off the interlocutor with one-liners. It is telling that programs resort to all sorts of tricks and scams in an attempt to pass the Turing test. The true purpose of AI is to build intelligence, not to create a program honed to pass a single test.

Trying to steer the field in a better direction, Levesque offers AI researchers another, much harder test, based on work he did with Leora Morgenstern and Ernest Davis. Together they developed a set of questions called Winograd Schemas, named after Terry Winograd, a pioneer of artificial intelligence at Stanford University. In the early seventies, Winograd asked what it would take to build a machine that could answer the following question:


The town councillors refused to give the angry demonstrators a permit because they feared violence. Who feared violence?

a) The town councillors

b) The angry demonstrators

Levesque, Davis and Morgenstern have developed a set of similar problems that seem simple to any reasonable person but are very hard for machines that can only google. Some of them are hard to solve with Google because the question involves made-up people who, by definition, have few search-engine hits:

Joanna made sure to thank Suzanne for all the help she had provided. Who had provided the help?

a) Joanna

b) Suzanne

(To make the task harder, you can replace "provided" with "received".)

You cannot simply count the web pages on which someone named Joanna or Suzanne helps someone else. Answering this question instead requires a fairly deep understanding of the subtleties of human language and the nature of social interaction.

Other questions are hard to google for the same reason the alligator question is: alligators are real, but the specific fact the question asks about is rarely discussed by people. For example:

The large ball crashed right through the table because it was made of Styrofoam. What was made of Styrofoam? (In the alternative formulation, Styrofoam is replaced with steel.)

a) The large ball

b) The table

Sam tried to paint a picture of shepherds with sheep, but they ended up looking more like golfers. What looked like golfers?

a) The shepherds

b) The sheep

These examples are closely related to the linguistic phenomenon called anaphora, in which an expression refers to the same entity as another expression that appeared earlier in the text. They are hard because they require common sense, which is still beyond the reach of machines, and because they involve things that people rarely mention on web pages, so these facts never make it into the giant databases.
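The structure of such a schema can be sketched in a few lines of Python. This is only an illustration and not code from Levesque, Davis and Morgenstern: the names `WinogradSchema`, `first_mention_baseline` and `accuracy` are hypothetical, and the "resolver" is a deliberately naive stand-in for a system without common sense. It shows why the paired formulation matters: any strategy that ignores the swapped word gets exactly one of the two variants right.

```python
from dataclasses import dataclass


@dataclass
class WinogradSchema:
    """One schema: a sentence with a {word} slot and two candidate referents.

    `answers` maps each word that can fill the slot to the correct referent.
    All names here are hypothetical and chosen for illustration only.
    """
    template: str
    question: str
    candidates: tuple
    answers: dict

    def variants(self):
        """Yield (sentence, question, correct_answer) for each slot word."""
        for word, answer in self.answers.items():
            yield (self.template.format(word=word),
                   self.question.format(word=word),
                   answer)


def first_mention_baseline(sentence, candidates):
    """Naive resolver: pick whichever candidate appears first in the sentence."""
    return min(candidates, key=sentence.index)


def accuracy(schema, resolver):
    """Fraction of the schema's variants that the resolver answers correctly."""
    results = [resolver(sentence, schema.candidates) == answer
               for sentence, _question, answer in schema.variants()]
    return sum(results) / len(results)


# The thank-you example from the text, with its harder "received" variant.
HELP_SCHEMA = WinogradSchema(
    template="Joanna made sure to thank Suzanne for all the help she had {word}.",
    question="Who had {word} the help?",
    candidates=("Joanna", "Suzanne"),
    answers={"provided": "Suzanne", "received": "Joanna"},
)

if __name__ == "__main__":
    for sentence, question, answer in HELP_SCHEMA.variants():
        guess = first_mention_baseline(sentence, HELP_SCHEMA.candidates)
        print(sentence, question, "guess:", guess, "correct:", answer)
    print("baseline accuracy:", accuracy(HELP_SCHEMA, first_mention_baseline))
```

Because the two variants differ by a single word but have opposite answers, the first-mention baseline scores exactly 0.5 here, which is no better than chance.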

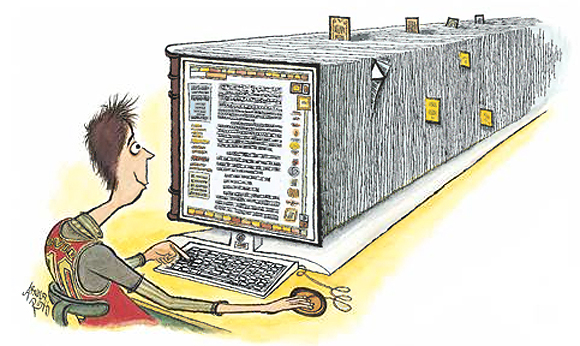

In other words, these are examples of what I like to call the Long-Tail Problem: common questions can often be answered by the Web, but rare questions stump the entire Web and all its Big Data systems. Most AI programs run into trouble if what they are looking for cannot be found as an exact quotation on a web page. This is part of the reason for Watson's most famous blunder: mistaking Toronto for a city in the United States.

A similar problem arises in image search, for two reasons: there are many rare pictures, and there are many rare captions. There are millions of pictures captioned "cat", but a Google search shows almost nothing relevant for the query “scuba diver with a chocolate cigarette” (just piles of pictures of cigars, pretty girls, beaches and chocolate cakes). Yet anyone can easily imagine the diver in question. Or take the query "right-handed man". The Web has plenty of images of right-handed men doing something unmistakably right-handed (for example, throwing a baseball). Any person could quickly pull such images out of a photo archive. But very few of these pictures are captioned "right-handed man". A search for “right-handed man” instead returns a heap of pictures of sports stars, bells, golf clubs, key chains and coffee mugs. Some match the query, but most do not.

Levesque saved his most critical remarks for the end of the paper. His concern is not that modern artificial intelligence cannot solve this kind of problem, but that it has forgotten about such problems entirely. In Levesque's view, AI development has fallen into the trap of "serial silver bulletism": it is constantly looking for the next big breakthrough, be it expert systems or Big Data, while the subtle and deep knowledge that every ordinary person has is never painstakingly analyzed. That is a colossal task, “more like scaling a mountain than shoveling a driveway,” Levesque writes. But this is exactly what researchers need to do.

In the end, Levesque called on his colleagues to stop bluffing. He says: “There is much to be gained by recognizing what exactly falls outside the scope of our research, and by admitting that other approaches may be needed.” In other words, trying to rival human intelligence without taking into account all the intricacies of the human mind is like asking an alligator to run the 100-metre hurdles.

From the translator

I was intrigued by Levesque's critical view of today's Siri and Google. Intelligent search engines have learned to imitate understanding of users' questions very well. But they are still infinitely far from the kind of AI that films and books are made about.

Source: https://habr.com/ru/post/193000/
